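Explore promptable concept segmentation, how it differs from traditional methods, and how related models such as YOLOE-26 enable open-vocabulary capabilities.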

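Vision AI is advancing rapidly and is widely used to analyze images and video in real-world environments. Applications ranging from traffic management systems to retail analytics are being integrated with computer vision models.

In many of these applications, vision models such as object detection models are trained to recognize a predefined set of objects, including vehicles, people, and equipment. During training, these models see many labeled examples, so they learn what each object looks like and how to tell it apart from other objects in a scene.

In segmentation tasks, models go a step further and produce precise pixel-level outlines of these objects. This lets a system understand exactly where each object is located in an image.

This approach works well as long as the system only needs to recognize what was in its training data. In real-world environments, however, that is rarely the case.

Visual scenes are usually dynamic. New objects and visual concepts keep appearing, environmental conditions keep changing, and users often need to segment things that were not part of the original training setup.

These limitations are especially apparent in segmentation. As vision AI continues to evolve, there is a growing need for more flexible segmentation models that can adapt to new concepts without repeated retraining. This is why promptable concept segmentation (PCS) is attracting more and more attention.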

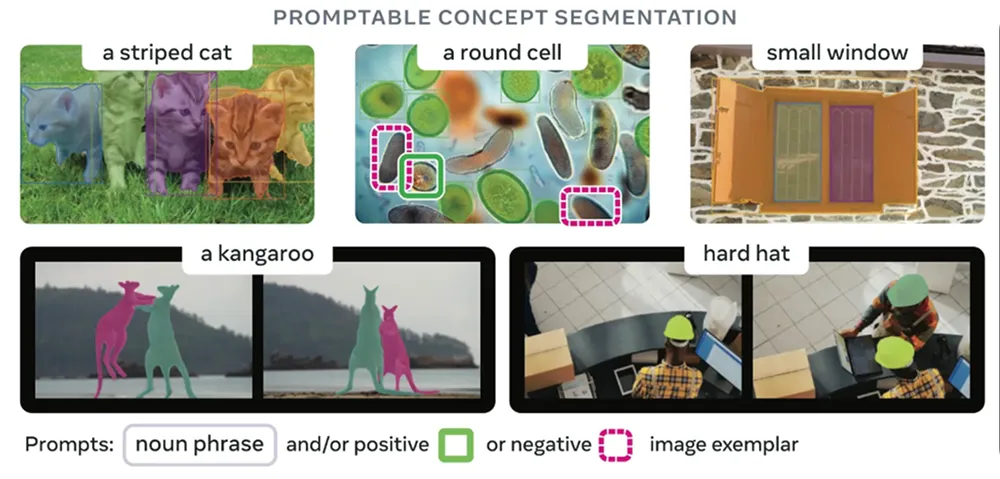

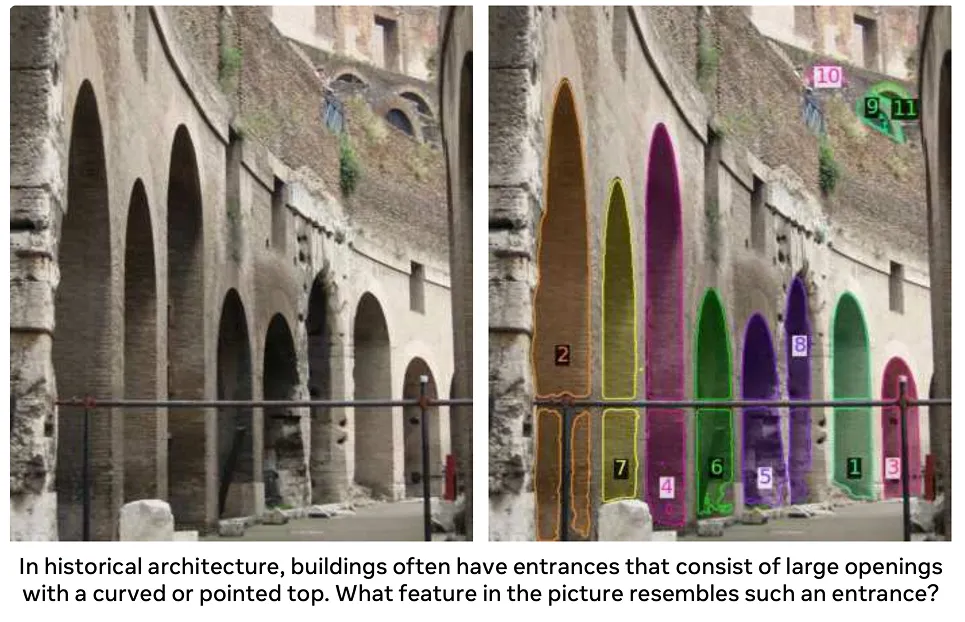

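Instead of relying on a fixed list of object categories, users can describe what they want to segment using text, visual prompts, or example images. These models can then identify and segment the regions that match the described concept, even if that concept was not explicitly included during training.

In this article, we'll explore how promptable concept segmentation works, how it differs from traditional approaches, and where it is being used today.

In most cases, segmentation models are trained to recognize a limited set of object types. This works well when a vision AI system only needs to detect and segment a specific set of objects.

In practice, however, visual scenes are dynamic. New objects appear, task requirements change, and users often need to segment objects that the original label set does not cover. Supporting these cases typically means collecting new high-quality data and annotations and retraining the model, which increases cost and slows deployment.

Promptable concept segmentation addresses this by letting users tell the model what to look for rather than choosing from a fixed list of labels. Once the user describes the object or concept they need, the model highlights all matching regions in the image. This makes it easier to connect user intent to the actual pixels in an image.

Models that support promptable concept segmentation are flexible because they can handle different types of input. In other words, there is more than one way to tell the model what to look for, such as text descriptions, visual prompts, or example images.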

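Here's a closer look at each approach:

- Text prompts: a short phrase that describes the object or concept to segment.
- Visual prompts: points or boxes marked on the image to indicate the target.
- Example images: a reference image showing the kind of object the model should find.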

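Before diving into how promptable concept segmentation works, let's compare it with traditional object segmentation methods.

PCS enables open-vocabulary, prompt-driven models. It can handle new concepts described through prompts, which traditional segmentation techniques cannot. There are several types of traditional segmentation, each with its own assumptions and limitations.

Here's a brief overview of a few key types of traditional segmentation:

All of these approaches rely on a predefined list of object categories. They perform well within that scope but handle concepts outside the list poorly. When a new, specific object needs to be segmented, additional training data and model fine-tuning are usually required.

PCS aims to change this. Rather than restricting users to predefined categories, it lets them freely describe, at inference time, the objects in an image that need to be segmented.

Next, let's walk through how segmentation models evolved into promptable concept segmentation.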

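A popular foundation model that marked a shift in segmentation is SAM, the Segment Anything Model. Introduced in 2023, SAM broke new ground by moving away from a preset object taxonomy and instead guiding segmentation with simple visual prompts such as points or bounding boxes.

With SAM, users no longer need to pick labels manually. They simply indicate where the target object is, and the model generates the corresponding mask. This made segmentation more flexible, but users still had to direct the model to the target region.

SAM 2, released in 2024, built on this foundation to handle more complex scenes and extended promptable segmentation to video. It is more robust across lighting conditions, object shapes, and motion, while still relying mainly on visual prompts to guide segmentation.

SAM 3 is the latest step in this evolution. Released last year, it is a unified model that combines visual understanding with language guidance and behaves consistently across image and video segmentation tasks.

With SAM 3, users are no longer limited to pointing or drawing prompts. Instead, they can describe what they want to segment, and the model automatically searches the image or video frames for regions that match the description.

Segmentation is guided by concepts rather than fixed object categories, which supports open-vocabulary use across different scenes and over time. In practice, SAM 3 operates over a large learned concept space, built on an ontology derived from sources such as Wikidata and expanded through large-scale training data.

Compared with earlier versions that relied mainly on geometric prompts, SAM 3 marks a move toward more flexible, concept-driven segmentation. This makes it better suited to real-world applications, where target objects or concepts may change and cannot always be defined in advance.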

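So how does promptable concept segmentation work? It builds on large pretrained vision and vision-language models trained on massive image datasets, often with paired text. This training lets them learn general visual patterns and semantic meaning.

Most PCS models use a transformer architecture, which processes the whole image at once to understand how different regions relate to each other. A vision transformer extracts visual features from the image, while a text encoder converts words into numerical representations the model can work with.

During training, these models can learn from different kinds of supervision, including pixel-level masks that define exact object boundaries, bounding boxes that roughly localize objects, and image-level labels that describe what is in the image. Training on different types of annotated data helps the model capture both fine detail and broader visual concepts.

At inference time, when the model is actually used to make predictions, PCS follows a prompt-driven process. The user provides guidance through a text description, visual prompts such as points or boxes, or an example image. The model encodes the prompt and the image into a shared internal representation (embeddings) and identifies the regions that match the described concept.

A mask decoder then converts this shared representation into precise pixel-level segmentation masks. Because the model links visual features to semantic meaning, it can segment new concepts even if they were not explicitly included during training.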
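To make the idea of matching a prompt against image features in a shared embedding space more concrete, here is a deliberately tiny sketch in plain Python. It is not how any real PCS model is implemented: the per-pixel feature vectors, the prompt vectors, the `segment_by_prompt` helper, and the 0.8 threshold are all made-up assumptions standing in for the outputs of real image and text encoders and a learned mask decoder.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def segment_by_prompt(feature_map, prompt_embedding, threshold=0.8):
    """Return a binary mask: 1 where a pixel's feature matches the prompt.

    A real mask decoder is a learned network; thresholding similarity is
    only a stand-in used for illustration.
    """
    return [
        [1 if cosine(pixel, prompt_embedding) >= threshold else 0 for pixel in row]
        for row in feature_map
    ]


# Toy 2x3 "image": each pixel carries a 2-D feature from a pretend image encoder.
features = [
    [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0]],
    [[0.1, 0.9], [1.0, 0.1], [0.0, 1.0]],
]

# Pretend text-encoder outputs for two prompts (hypothetical values).
prompt_vectors = {"cat": [1.0, 0.0], "grass": [0.0, 1.0]}

cat_mask = segment_by_prompt(features, prompt_vectors["cat"])
grass_mask = segment_by_prompt(features, prompt_vectors["grass"])

print(cat_mask)    # [[1, 1, 0], [0, 1, 0]]
print(grass_mask)  # [[0, 0, 1], [1, 0, 1]]
```

The same feature map yields a different mask for each prompt, which is the core of the prompt-driven behavior: the image is encoded once, and the prompt decides which regions are selected.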

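The output can often be refined by adjusting the prompt or adding extra guidance, which helps the model handle complex or ambiguous scenes. This iterative process supports practical tuning during deployment.

Promptable concept segmentation models are typically evaluated on how well they segment new concepts and how robust they are across different scenes. Benchmarks often focus on mask quality, generalization, and computational efficiency, reflecting real deployment needs.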
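Mask quality is commonly quantified with overlap measures such as intersection over union (IoU) and the Dice coefficient. The article does not say which metrics a given benchmark uses, so the sketch below is only an illustration of these two standard measures on small binary masks; the helper names and the convention of returning 1.0 for two empty masks are assumptions.

```python
def mask_iou(pred, target):
    """Intersection over union of two binary masks given as nested lists."""
    inter = union = 0
    for p_row, t_row in zip(pred, target):
        for p, t in zip(p_row, t_row):
            inter += 1 if (p and t) else 0
            union += 1 if (p or t) else 0
    # Assumption: two empty masks count as a perfect match.
    return inter / union if union else 1.0


def mask_dice(pred, target):
    """Dice coefficient: 2 * |A and B| / (|A| + |B|)."""
    inter = pred_area = target_area = 0
    for p_row, t_row in zip(pred, target):
        for p, t in zip(p_row, t_row):
            inter += 1 if (p and t) else 0
            pred_area += 1 if p else 0
            target_area += 1 if t else 0
    total = pred_area + target_area
    return 2 * inter / total if total else 1.0


predicted = [[1, 1, 0], [0, 1, 0]]
ground_truth = [[1, 1, 0], [0, 0, 0]]

iou = mask_iou(predicted, ground_truth)    # 2 shared pixels / 3 in the union
dice = mask_dice(predicted, ground_truth)  # 2 * 2 / (3 + 2)
print(round(iou, 3), round(dice, 3))       # 0.667 0.8
```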

Next, let's look at where promptable concept segmentation is already being applied and starting to have a real impact.

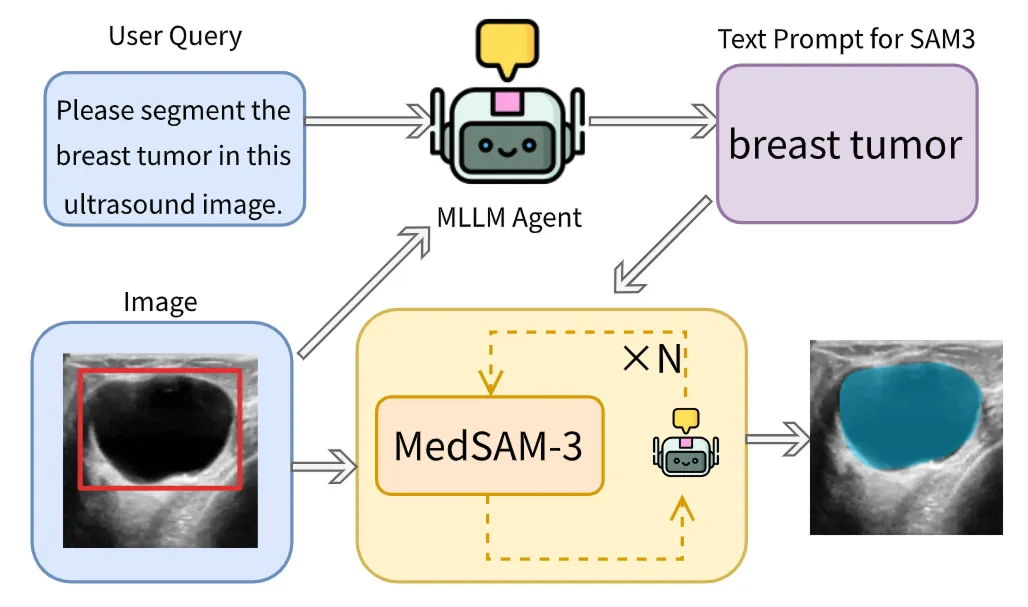

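Medical imaging involves many biological structures, disease types, and scanning modalities, and new cases appear every day. Traditional segmentation models struggle to keep up with this diversity.

PCS fits naturally into this field because it lets clinicians describe the region they want to locate rather than choose from a short, rigid list of options. With text phrases or visual prompts, PCS can directly segment anatomical structures or regions of interest without retraining a model for each new task. This makes it easier to meet diverse clinical needs, reduces the need to draw masks by hand, and works across many imaging types.

A good example is MedSAM-3, which applies SAM 3 to text-promptable PCS in medical imaging. The model can be prompted with explicit anatomical and pathological terms, such as organ names (for example, liver or kidney) and lesion-related concepts (for example, tumor or lesion). Given a prompt, it directly segments the corresponding region in the medical image.

MedSAM-3 also integrates multimodal large language models (MLLMs, or multimodal LLMs), which can reason over both text and images. These models operate in an agent-in-the-loop setup, iteratively refining results to improve accuracy in complex cases.

MedSAM-3 performs well across X-ray, MRI, CT, ultrasound, and video data, showing how PCS can enable more flexible and efficient medical imaging workflows in real clinical settings.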

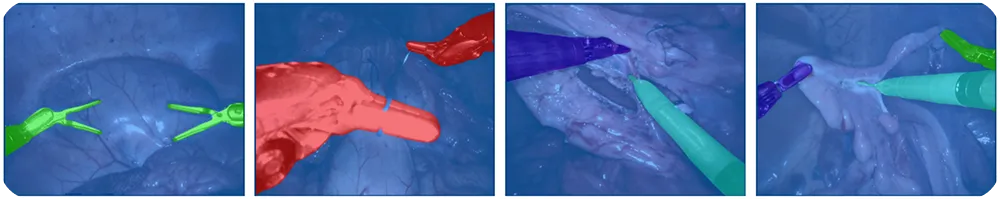

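Robotic surgery relies on vision systems to track instruments and understand fast-changing surgical scenes. Instruments move quickly, lighting varies, and new instruments can appear at any time, which makes a predefined labeling system hard to maintain.

With PCS, robots can track instruments in real time, guide cameras, and follow surgical steps. This reduces the need for manual labeling and makes systems easier to adapt to different procedures. Surgeons or automated systems can use text prompts such as "forceps", "scalpel", or "camera tool" to indicate the objects to segment in the image.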

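Another state-of-the-art model related to promptable concept segmentation is Ultralytics YOLOE-26. It brings open-vocabulary, prompt-driven segmentation to the Ultralytics YOLO model family.

YOLOE-26 is built on the Ultralytics YOLO26 architecture and supports open-vocabulary instance segmentation. It lets users guide segmentation in several ways.

The model supports text prompts, where a short, visually grounded phrase specifies the target object, and visual prompts, which provide additional guidance based on image cues. YOLOE-26 also includes a prompt-free mode for zero-shot inference, in which the model detects and segments objects from a built-in vocabulary without any user-supplied prompt.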
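As a rough illustration of the text-prompt workflow, the sketch below uses the `YOLOE` class from the `ultralytics` Python package with its `set_classes`, `get_text_pe`, and `predict` methods. Treat the details as assumptions rather than a reference: the weights filename `yoloe-26s-seg.pt` and the image path are placeholders, `clean_prompts` and `segment_with_text_prompts` are hypothetical helpers written for this example, and the exact API may differ between package versions.

```python
def clean_prompts(prompts):
    """Strip whitespace, lowercase, drop empties, and de-duplicate (order kept)."""
    seen, cleaned = set(), []
    for prompt in prompts:
        name = prompt.strip().lower()
        if name and name not in seen:
            seen.add(name)
            cleaned.append(name)
    return cleaned


def segment_with_text_prompts(image_path, prompts, weights="yoloe-26s-seg.pt"):
    """Run open-vocabulary segmentation for the given text prompts.

    Assumptions: the `ultralytics` package is installed and the weights
    name above is available; both are placeholders for this sketch.
    """
    # Imported here so the helper above can be used without the package.
    from ultralytics import YOLOE

    names = clean_prompts(prompts)
    model = YOLOE(weights)
    # Turn the text prompts into embeddings and set them as the target classes.
    model.set_classes(names, model.get_text_pe(names))
    return model.predict(image_path)


print(clean_prompts([" Forklift ", "pallet", "forklift", ""]))  # ['forklift', 'pallet']
```

Calling `segment_with_text_prompts("path/to/image.jpg", ["forklift", "pallet"])` would return a list of result objects; in current versions of the package, `results[0].show()` displays the predicted masks. Changing the prompt list changes what gets segmented without any retraining.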

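YOLOE-26 is well suited to video analytics, robot perception, and edge systems, where object categories may change but low latency and reliable throughput remain essential. It is also useful for data labeling and dataset curation, where it streamlines workflows by automating parts of the annotation process.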
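One way such automated labeling might feed a dataset is by writing predicted outlines as segmentation label lines. The sketch below assumes the YOLO segmentation label format (a class index followed by polygon coordinates normalized to the image size) and assumes the polygon is already available as pixel coordinates; the helper name and the example values are hypothetical.

```python
def polygon_to_yolo_seg_line(class_id, polygon, image_width, image_height):
    """Format one polygon as '<class> x1 y1 x2 y2 ...' with normalized coordinates.

    `polygon` is a list of (x, y) pixel coordinates; at least three points
    are needed to describe a region.
    """
    if len(polygon) < 3:
        raise ValueError("a polygon needs at least three points")
    coords = []
    for x, y in polygon:
        coords.append(f"{x / image_width:.6f}")
        coords.append(f"{y / image_height:.6f}")
    return " ".join([str(class_id)] + coords)


# Hypothetical triangle predicted for class 0 in a 640x480 image.
line = polygon_to_yolo_seg_line(0, [(64, 48), (320, 48), (320, 240)], 640, 480)
print(line)  # 0 0.100000 0.100000 0.500000 0.100000 0.500000 0.500000
```

Labels generated this way are best treated as drafts: a human reviewer would typically correct them before they are used for training.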

Here are the main advantages of using promptable concept segmentation:

Despite these clear advantages, PCS has some limitations to consider:

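As you explore promptable segmentation, you may wonder which applications it suits best, and when a traditional computer vision model like YOLO26 is a better fit for your problem. Promptable segmentation works well for general objects but is less suitable for applications that demand very high precision and consistent results.

Defect detection is a good example. In manufacturing, defects are often small and subtle, such as tiny scratches, dents, misalignments, or surface irregularities. They can also look very different depending on materials, lighting, and production environments.

These issues are hard to describe with a simple prompt and even harder for a general-purpose model to detect. Overall, prompt-based models tend to miss defects or produce inconsistent results, while models trained specifically on defect data are more reliable in real inspection systems.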

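Promptable concept segmentation makes vision systems easier to adapt to the real world, where new objects and concepts appear all the time. Instead of being restricted to fixed labels, users simply describe what they want to segment and leave the rest to the model, which saves time and reduces manual effort. Although limitations remain, PCS is already changing how segmentation is used in practice and is likely to become a core part of future vision systems.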

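Visit our GitHub repository and join our community to explore AI further. Browse our solutions pages to learn about AI in robotics and computer vision in manufacturing. Explore our licensing options and get started with vision AI today!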