Revisit key moments from YOLO Vision 2025 Shenzhen, where Ultralytics brought together innovators, partners, and the AI community for a day of inspiration.

On October 26, YOLO Vision 2025 (YV25) made its China debut at Building B10 in the OCT Creative Culture Park in Shenzhen. Ultralytics’ hybrid Vision AI event brought together more than 200 attendees in person, with many more joining online via YouTube and Bilibili.

The YV25 Shenzhen livestream has already passed 3,500 views on YouTube and continues to gain attention as event highlights are shared across the community. It was a day filled with ideas, conversation, and hands-on exploration of where Vision AI is heading next.

The day started with a warm welcome from our host, Huang Xueying, who invited everyone to connect, learn, and take part in the discussions throughout the event. She explained that this was the second YOLO Vision of the year, following the London edition in September, and shared how exciting it was to bring the Vision AI community together again here in Shenzhen.

In this article, we’ll revisit the highlights from the day, including the model updates, the speaker sessions, live demos, and the community moments that brought everyone together. Let's get started!

The first keynote of the day was led by Ultralytics Founder & CEO Glenn Jocher, who shared how Ultralytics YOLO models have grown from a research breakthrough into some of the most widely used Vision AI models in the world. Glenn explained that his early work focused on making YOLO easier to use.

He ported the models to PyTorch, improved documentation, and shared everything openly so developers everywhere could build on top of it. As he recalled, “I jumped in head first in 2018. I decided this is where my future was.” What began as a personal effort quickly became a global open-source movement.

Today, Ultralytics YOLO models power billions of inferences every day, and Glenn emphasized that this scale was only possible because of the people who helped build it. Researchers, engineers, students, hobbyists, and open-source contributors from around the world have shaped YOLO into what it is today.

As Glenn put it, “There’s almost a thousand of them [contributors] out there and we’re super grateful for that. We wouldn’t be here where we are today without these people.”

The first look at Ultralytics YOLO26 was shared earlier this year at the YOLO Vision 2025 London event, where it was introduced as the next major step forward in the Ultralytics YOLO model family. At YV25 Shenzhen, Glenn provided an update on the progress since that announcement and gave the AI community a closer look at how the model has been evolving.

YOLO26 is designed to be smaller, faster, and more accurate, while staying practical for real-world use. Glenn explained that the team has spent the past year refining the architecture, benchmarking performance across devices, and incorporating insights from research and community feedback. The goal is to deliver state-of-the-art performance without making models harder to deploy.

One of the core updates Glenn highlighted is that YOLO26 is paired with a dedicated hyperparameter tuning campaign, shifting from training entirely from scratch to fine-tuning on larger datasets. He elaborated that this approach is much more aligned with actual real-world use cases.

Here are some of the other key improvements shared at the event:

Together, these updates result in models that are up to 43% faster on CPU while also being more accurate than Ultralytics YOLO11, making YOLO26 especially impactful for embedded devices, robotics, and edge systems.

YOLO26 will support all the same tasks and model sizes currently available in YOLO11, resulting in 25 model variants across the family. This includes models for detection, segmentation, pose estimation, oriented bounding boxes, and classification, ranging from nano up to extra large.
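To make the count concrete, here is a minimal sketch of how five tasks and five sizes multiply out to 25 variants. It uses the weight-file naming that YOLO11 already ships with (for example `yolo11n-seg.pt`). Whether YOLO26 will follow the same naming scheme is an assumption, and the `list_variants` helper is purely illustrative, not part of any Ultralytics package.

```python
from itertools import product

# Task suffixes follow the published YOLO11 weight names (e.g. "yolo11n-seg.pt").
# Assumption: YOLO26 may or may not keep this naming scheme.
TASK_SUFFIXES = {
    "detect": "",
    "segment": "-seg",
    "pose": "-pose",
    "obb": "-obb",
    "classify": "-cls",
}
SIZES = ["n", "s", "m", "l", "x"]  # nano, small, medium, large, extra large


def list_variants(family: str = "yolo11") -> list[str]:
    """Return one weight-file name per (task, size) combination (hypothetical helper)."""
    return [
        f"{family}{size}{suffix}.pt"
        for suffix, size in product(TASK_SUFFIXES.values(), SIZES)
    ]


variants = list_variants()
print(len(variants))  # 5 tasks x 5 sizes
print(variants[:5])   # the five detection models, nano through extra large
```

The same grid is why a single size letter and task suffix are enough to pick a model for a given accuracy and latency budget.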

The team is also working on five promptable variants. These are models that can take a text prompt and return bounding boxes directly, without needing training.

It is an early step toward more flexible, instruction-based vision workflows that are easier to adapt to different use cases. The YOLO26 models are still under active development, but the early performance results are strong, and the team is working toward releasing them soon.

After the YOLO26 update, Glenn welcomed Prateek Bhatnagar, our Head of Product Engineering, to give a live demo of the Ultralytics Platform. This platform is being built to bring key parts of the computer vision workflow together, including exploring datasets, annotating images, training models, and comparing results.

Prateek pointed out that the platform stays true to Ultralytics’ open-source roots, introducing two community spaces, a dataset community and a projects community, where developers can contribute, reuse, and improve each other’s work. During the demo, he showcased AI-assisted annotation, easy cloud training, and the ability to fine-tune models directly from the community, without needing local GPU resources.

The platform is currently in development. Prateek encouraged the audience to watch for announcements and noted that the team is growing in China to support the launch.

With the momentum building, the event shifted into a panel discussion featuring several of the researchers behind different YOLO models. The panel included Glenn Jocher, along with Jing Qiu, our Senior Machine Learning Engineer; Chen Hui, a Machine Learning Engineer at Meta and one of the authors of YOLOv10; and Bo Zhang, an Algorithm Strategist at Meituan and one of the authors of YOLOv6.

The discussion focused on how YOLO continues to evolve through real-world use. The speakers touched on how progress is often driven by practical deployment challenges, such as running efficiently on edge devices, improving small object detection, and simplifying model export.

Rather than chasing accuracy alone, the panel stressed the importance of balancing speed, usability, and reliability in production environments. Another shared takeaway was the value of iteration and community feedback.

Here are some other interesting insights from the conversation:

Next, let’s take a closer look at some of the keynote talks at YV25 Shenzhen, where leaders across the AI community shared how vision AI is evolving, from digital humans and robotics to multimodal reasoning and efficient edge deployment.

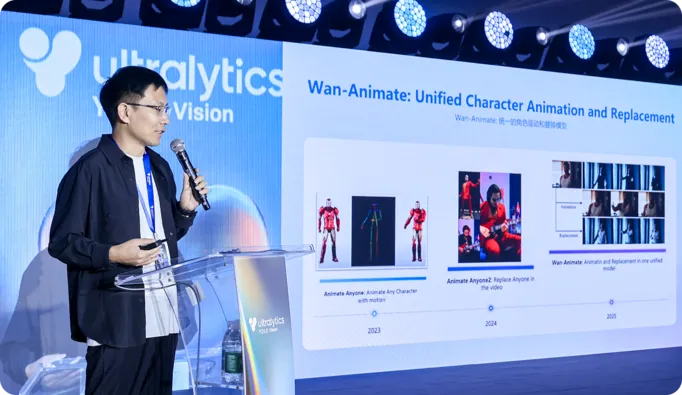

In an insightful session, Dr. Peng Zhang from Alibaba Qwen Lab shared how his team is developing large video models that can generate expressive digital humans with more natural movement and control. He walked through Wan S2V and Wan Animate, which use audio or motion references to produce realistic speech, gesture, and animation, addressing the limitations of purely text-driven generation.

Dr. Zhang also talked about progress being made toward real-time interactive avatars, including zero-shot cloning of appearance and motion and lightweight models that can animate a face directly from a live camera feed, bringing lifelike digital humans closer to running smoothly on everyday devices.

One of the key themes at YV25 Shenzhen was the shift from vision models that simply see the world to systems that can act within it. In other words, perception is no longer the end of the pipeline; it is becoming the start of action.

For instance, in his keynote, Hu Chunxu from D-Robotics described how their development kits and SoC (system on a chip) solutions integrate sensing, real-time motion control, and decision-making on a unified hardware and software stack. By treating perception and action as a continuous feedback loop, rather than separate stages, their approach supports robots that can move, adapt, and interact more reliably in real environments.

Alex Zhang from Baidu Paddle echoed this idea in his talk, explaining how YOLO and PaddleOCR work together to detect objects and then interpret the text and structure around them. This enables systems to convert images and documents into usable, structured information for tasks such as logistics, inspections, and automated processing.

Another interesting topic at YV25 Shenzhen was how Vision AI is becoming more efficient and capable on edge devices.

Paul Jung from DEEPX spoke about deploying YOLO models directly on embedded hardware, reducing reliance on the cloud. By focusing on low power consumption, optimized inference, and hardware-aware model tuning, DEEPX enables real-time perception for drones, mobile robots, and industrial systems operating in dynamic environments.

Similarly, Liu Lingfei from Moore Threads shared how the Moore Threads E300 platform integrates central processing unit (CPU), graphics processing unit (GPU), and neural processing unit (NPU) computing to deliver high-speed vision inference on compact devices.

The platform can run multiple YOLO streams at high frame rates, and its toolchain simplifies steps like quantization, static compilation, and performance tuning. Moore Threads has also open-sourced a wide set of computer vision models and deployment examples to lower the barrier for developers.

Until recently, building a single model that can both understand images and interpret language required large transformer architectures that were expensive to run. At YV25 Shenzhen, Yue Ziyin from Yuanshi Intelligence gave an overview of RWKV, an architecture that blends the long-context reasoning abilities of transformers with the efficiency of recurrent models.

He explained how Vision-RWKV applies this design to computer vision by processing images in a way that scales linearly with resolution. This makes it suitable for high-resolution inputs and for edge devices where computation is limited.
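To see why linear scaling matters at high resolution, here is a rough back-of-the-envelope sketch. It assumes the image is split into 16x16 patches (a common choice, not something stated in the talk) and compares a cost that grows linearly with the number of patches against the quadratic cost of full self-attention. The numbers are relative units for illustration, not measured Vision-RWKV benchmarks.

```python
def num_patches(height: int, width: int, patch: int = 16) -> int:
    """Number of non-overlapping patches (tokens) for an image. Patch size is an assumption."""
    return (height // patch) * (width // patch)


def relative_cost(height: int, width: int, patch: int = 16) -> dict[str, int]:
    """Token-mixing cost in arbitrary units: linear in tokens vs. quadratic in tokens."""
    n = num_patches(height, width, patch)
    return {"tokens": n, "linear": n, "quadratic": n * n}


base = relative_cost(224, 224)
for side in (224, 448, 896):
    cost = relative_cost(side, side)
    print(
        f"{side}x{side}: {cost['tokens']} tokens, "
        f"linear x{cost['linear'] // base['linear']}, "
        f"quadratic x{cost['quadratic'] // base['quadratic']}"
    )
```

Doubling the side length quadruples the number of patches, so the linear cost grows 4x while the quadratic cost grows 16x. That gap is what makes a linear design attractive for high-resolution inputs on constrained hardware.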

Yue also showed how RWKV is being used in vision-language systems, where image features are paired with text understanding to move beyond object detection into interpreting scenes, documents, and real-world context.

While the talks on stage looked ahead to where vision AI is going, the booths on the floor showed how it is already being used today. Attendees got to see models running live, compare hardware options, and talk directly with the teams building these systems.

Here’s a glimpse of the tech that was being displayed:

In addition to all the exciting tech, one of the best parts of YV25 Shenzhen was bringing the computer vision community and Ultralytics team together in person again. Throughout the day, people gathered around demos, shared ideas during coffee breaks, and continued conversations long after the talks ended.

Researchers, engineers, students, and builders compared notes, asked questions, and exchanged real-world experiences from deployment to model training. And thanks to Cinco Jotas from Grupo Osborne, we even brought a touch of Spanish culture to the event with freshly carved jamón, creating a warm moment of connection. A beautiful venue, an enthusiastic crowd, and a shared sense of momentum made the day truly special.

From inspiring keynotes to hands-on demos, YOLO Vision 2025 Shenzhen captured the spirit of innovation that defines the Ultralytics community. Throughout the day, speakers and attendees exchanged ideas, explored new technologies, and connected over a shared vision for the future of AI. Together, they left energized and ready for what’s next with Ultralytics YOLO.

Reimagine what’s possible with AI and computer vision. Join our community and GitHub repository to discover more. Learn more about applications like computer vision in agriculture and AI in retail. Explore our licensing options and get started with computer vision today!