Ultralytics redefines state-of-the-art vision AI with YOLO26

Jan 14, 2026

See how Ultralytics YOLO26 sets a new standard for vision AI across speed, simplicity, and real-world deployability from edge devices to large-scale servers.

Today, we’re officially launching Ultralytics YOLO26, a model that establishes a new baseline for state-of-the-art performance. First introduced by our Founder and CEO, Glenn Jocher, at YOLO Vision 2025 (YV25) in London, it is our most advanced and deployable model to date.

Designed to be lightweight, compact, and fast, YOLO26 is engineered for the environments where real-time vision AI applications actually run. With native end-to-end inference built directly into the model, YOLO26 simplifies deployment, reduces system complexity, and delivers reliable performance across edge devices and large-scale production environments.

In fact, the smallest version of YOLO26, the nano model, runs up to 43% faster on standard CPUs, enabling efficient real-time vision AI solutions on mobile applications, smart cameras, and other edge devices. Built on Ultralytics’ vision to make impactful vision AI capabilities accessible to everyone, YOLO26 combines state-of-the-art performance with simplicity, making it easy to use and deploy.

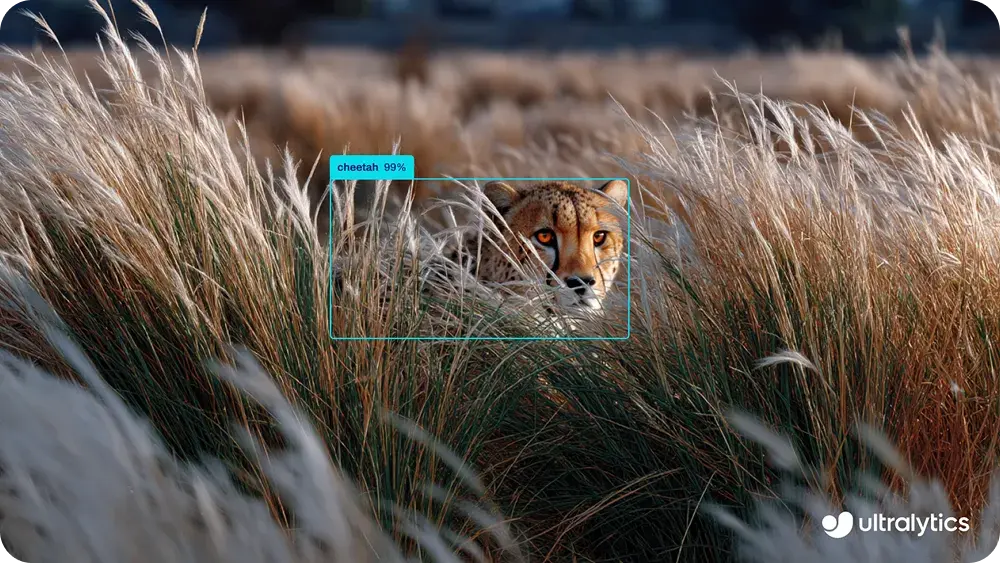

Computer vision is rapidly moving beyond the cloud. Real-world applications increasingly demand real-time inference, low latency, hardware flexibility, and predictable performance on devices such as drones, cameras, mobile systems, and embedded platforms.

YOLO26 was built explicitly for this shift. By rethinking the object detection pipeline from the ground up, Ultralytics has created a model architecture that removes unnecessary complexity while delivering state-of-the-art accuracy and speed.

For example, earlier Ultralytics object detection models rely on an additional post-processing step called Non-Maximum Suppression (NMS) to filter overlapping predictions after inference. YOLO26 eliminates this extra step by enabling native end-to-end inference, allowing the model to produce final detections directly. This unlocks faster, more predictable, and more reliable real-world deployment.
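To make the removed step concrete, here is a minimal sketch of classic greedy NMS in plain Python. It illustrates the general technique only and is not the Ultralytics implementation; the names `iou` and `nms`, the `(x1, y1, x2, y2)` box layout, and the 0.5 threshold are assumptions chosen for the example.

```python
from typing import List, Tuple

# Assumed box layout for this sketch: (x1, y1, x2, y2) corner coordinates.
Box = Tuple[float, float, float, float]


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def nms(boxes: List[Box], scores: List[float], iou_threshold: float = 0.5) -> List[int]:
    """Greedy NMS: return indices of the boxes to keep, highest score first."""
    # Visit candidates from most to least confident.
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep: List[int] = []
    for i in order:
        # Keep a box only if it does not overlap too much with any kept box.
        if all(iou(boxes[i], boxes[j]) <= iou_threshold for j in keep):
            keep.append(i)
    return keep


# Two heavily overlapping boxes and one separate box.
boxes = [(0, 0, 10, 10), (1, 1, 11, 11), (20, 20, 30, 30)]
scores = [0.9, 0.8, 0.7]
kept = nms(boxes, scores)
```

Because the loop compares each candidate against every box already kept, the cost of this step grows with the number of raw predictions, and the threshold has to be tuned per application. An end-to-end model that emits final detections directly avoids both concerns.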

YOLO26 isn’t an incremental update. It represents a structural leap forward in how production-grade vision AI is trained, deployed, and scaled.

One of the key aspects of YOLO26 is how it builds on the strengths of earlier models like Ultralytics YOLO11 while expanding what’s possible with computer vision. Out of the box, YOLO26 supports the same core computer vision tasks as YOLO11, including object detection, instance segmentation, and image classification.

It also continues to support pose estimation, oriented bounding box object detection for aerial and satellite imagery, and object tracking across video streams. Like YOLO11, YOLO26 is available in five model variants, Nano (n), Small (s), Medium (m), Large (l), and Extra large (x), giving users options that balance speed, size, and accuracy.

Ultralytics YOLO26 includes a range of advancements designed to enhance performance, reliability, and real-world usability.

The development of YOLO26 was a collective effort shaped by our team’s research and the feedback we received from the community, our partners, and clients. We set out to simplify the architecture, improve efficiency, and make the model more adaptable for real-world use.

Reflecting on that journey, Glenn Jocher explained, “One of the biggest challenges was making sure users can get the most out of YOLO26 while still delivering top performance.” His perspective highlights a core design principle of YOLO26: keeping vision AI easy to use.

Expanding on this idea, Jing Qiu, our Senior Machine Learning Engineer, added, “Building the new Ultralytics YOLO model was about staying steady, no rush. I kept refining, focused only on that speed-accuracy balance. When it came together, it was a quiet satisfaction - proof that sticking with details works.”

Ultralytics YOLO26 is publicly available starting today through the Ultralytics Platform, with full support across training, inference, and export workflows. Organizations deploying YOLO26 in commercial or closed environments can access enterprise licensing options, which include support for production deployment, long-term maintenance, and scalable edge rollouts.

Like our previous models, it is also fully supported through the Ultralytics Python package, making it possible for users to get started right away. Users can train, validate, and deploy YOLO26 with the same streamlined workflow they already know, while taking advantage of a range of export options such as ONNX, TensorRT, CoreML, TFLite, OpenVINO, and more.
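As a rough illustration of that workflow, the sketch below trains, validates, and exports a model with the Ultralytics Python package. It rests on a few assumptions: the weights filename pattern `yolo26n.pt` is inferred from the naming of earlier releases, `weights_for` and `run_workflow` are hypothetical helpers written for this example, and `coco8.yaml` is used only as a small demo dataset. Check the official documentation for the exact model names before relying on it.

```python
# Model size suffixes named in the article: Nano, Small, Medium, Large, Extra large.
VARIANTS = ("n", "s", "m", "l", "x")

# Export targets named in the article, mapped to the `format` strings
# accepted by the Ultralytics `export()` method.
EXPORT_FORMATS = {
    "ONNX": "onnx",
    "TensorRT": "engine",
    "CoreML": "coreml",
    "TFLite": "tflite",
    "OpenVINO": "openvino",
}


def weights_for(variant: str) -> str:
    """Hypothetical helper: build a weights filename such as 'yolo26n.pt'.

    ASSUMPTION: the filename follows the pattern of earlier releases.
    """
    if variant not in VARIANTS:
        raise ValueError(f"unknown variant {variant!r}, expected one of {VARIANTS}")
    return f"yolo26{variant}.pt"


def run_workflow(variant: str = "n", data: str = "coco8.yaml", export_to: str = "ONNX"):
    """Train briefly, validate, and export. Requires `pip install ultralytics`."""
    # Imported lazily so the helpers above work without the package installed.
    from ultralytics import YOLO

    model = YOLO(weights_for(variant))           # load pretrained weights
    model.train(data=data, epochs=3, imgsz=640)  # short demo training run
    metrics = model.val()                        # evaluate on the validation split
    exported_path = model.export(format=EXPORT_FORMATS[export_to])
    return metrics, exported_path
```

Calling `run_workflow("n")` would download the weights and dataset on first use, so it is best tried on a machine with network access. Swapping `export_to` for another key in `EXPORT_FORMATS` targets a different runtime without changing the rest of the workflow.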

Ultralytics YOLO26 represents our next step in making vision AI faster, lighter, and easier to use. But this is only the beginning.

The real impact comes from what the vision AI community creates with it. We look forward to seeing your innovations and continuing to shape the future of computer vision together.

Connect with our community and explore our GitHub repository to dive deeper into AI. Discover industry solutions like AI in robotics and computer vision in logistics, check out our licensing options, and begin building with computer vision today.