From fitness apps to patient monitoring, discover how computer vision addresses the question: can AI detect human actions in real-world settings?


Daily life is full of small movements we rarely stop to think about. Walking across a room, sitting at a desk, or waving to a friend may feel effortless to us, yet recognizing these actions is surprisingly difficult for AI. What comes naturally to humans becomes a complex problem when a machine has to interpret it from images or sensor data.

This ability is known as human activity recognition (HAR), and it enables computers to detect and interpret patterns in human behavior. A fitness app is a great example of HAR in action. By tracking steps and workout routines, it shows how AI can monitor daily activities.

Seeing HAR’s potential, many industries have started to adopt this technology. In fact, the human action recognition market is expected to reach over $12.56 billion by 2033.

A significant part of this progress is driven by computer vision, a branch of AI that enables machines to analyze visual data, such as images and videos. With computer vision and image recognition, HAR has evolved from a research concept into a practical and exciting part of cutting-edge AI applications.

In this article, we’ll explore what HAR is, the different methods used to recognize human actions, and how computer vision helps answer the question: Can AI detect human actions in real-world applications? Let’s get started!

Human action recognition makes it possible for computer systems to understand human activities or actions by analyzing body movements. Unlike simply detecting a person in an image, HAR aims to identify what that person is doing: for instance, distinguishing between walking and running, recognizing a wave of the hand, or noticing when someone falls down.

The foundation of HAR lies in patterns of movement and posture. A slight change in how a person’s arms or legs are positioned can signal a different action. By capturing and interpreting these subtle details, HAR systems can extract meaningful insights from body movements.

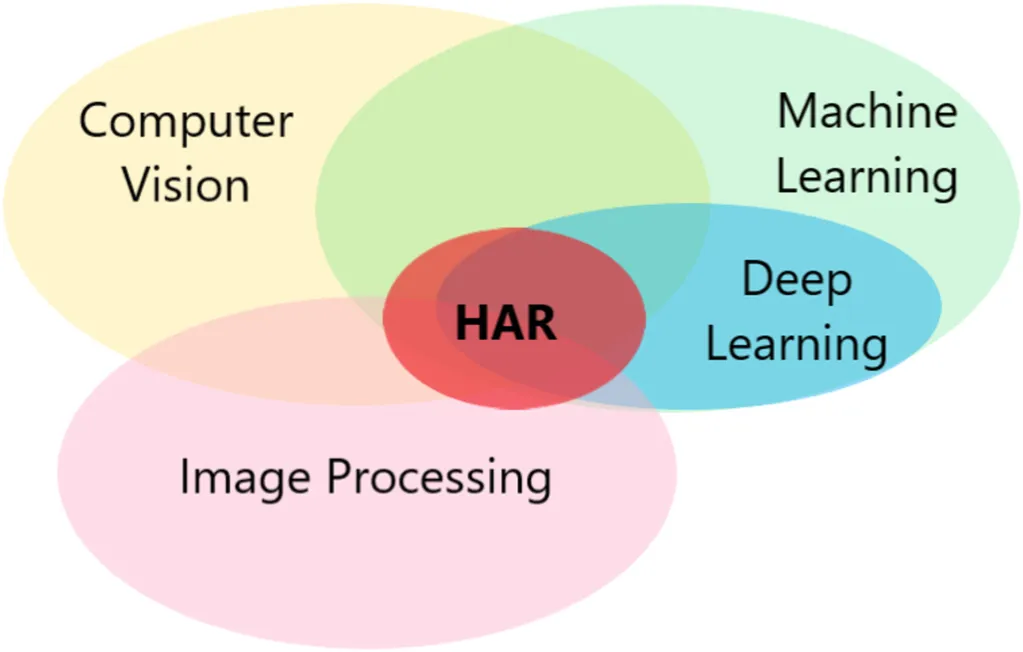

To achieve this, human action recognition combines several technologies, such as machine learning, deep learning, computer vision, and image processing, which work together to analyze body movements and interpret human actions accurately.

Earlier HAR systems were much more limited. They could handle only a few simple, repetitive actions in controlled environments and often struggled in real-world situations.

Today, thanks to advances in AI and the availability of large amounts of video data, HAR has become far more accurate and robust. Modern systems can recognize a wide range of activities, making the technology practical for areas like healthcare, security, and interactive devices.

Now that we have a better understanding of what human action recognition is, let’s take a look at the different ways machines can detect human actions.

Most HAR systems rely on sensor data, such as motion signals from smartphones and wearables, on visual data from cameras, or on both.

For any HAR model or system, datasets are the starting point. A HAR dataset is a collection of examples, such as video clips, images, or sensor data, that capture actions like walking, sitting, or waving. These examples are used to train AI models to recognize patterns in human motion, which can then be applied in real-life applications.
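Sensor-based HAR datasets are often built by slicing a continuous recording into fixed-length windows, each labeled with the action performed during it. The snippet below is a minimal sketch of that idea, assuming a stream of (x, y, z) accelerometer readings with one action label per reading. The `make_windows` helper, the window and step sizes, and the majority-vote labeling are illustrative choices, not the format of any particular dataset.

```python
from collections import Counter
from typing import List, Sequence, Tuple

Reading = Tuple[float, float, float]  # one (x, y, z) accelerometer sample


def make_windows(
    readings: Sequence[Reading],
    labels: Sequence[str],
    window_size: int,
    step: int,
) -> List[Tuple[List[Reading], str]]:
    """Split a continuous recording into overlapping, labeled windows.

    Trailing samples that do not fill a complete window are dropped.
    """
    if len(readings) != len(labels):
        raise ValueError("readings and labels must have the same length")
    if window_size <= 0 or step <= 0:
        raise ValueError("window_size and step must be positive")

    windows = []
    for start in range(0, len(readings) - window_size + 1, step):
        end = start + window_size
        # Majority vote decides the window's label; ties go to the label seen first.
        label = Counter(labels[start:end]).most_common(1)[0][0]
        windows.append((list(readings[start:end]), label))
    return windows


# Hypothetical recording: 4 samples of "sitting" followed by 4 of "walking".
readings = [(0.0, 0.0, 9.8)] * 4 + [(1.2, 0.3, 9.1)] * 4
labels = ["sitting"] * 4 + ["walking"] * 4
for window, label in make_windows(readings, labels, window_size=4, step=2):
    print(len(window), label)
```

Overlapping windows (a step smaller than the window size) produce more training examples from the same recording, at the cost of neighboring examples being highly correlated.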

The quality of the training data directly affects how well a model performs. Clean, consistent data makes it easier for the system to recognize actions accurately.

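That’s why datasets are often preprocessed before training. One common step is normalization, which rescales values to a consistent range. This keeps features with large values from dominating, makes training more stable, and, as part of careful data preparation, can help models avoid overfitting (when a model performs well on training data but struggles with new data).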
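As a minimal sketch of normalization, the functions below apply z-score standardization (subtract the mean, divide by the standard deviation) to a single feature. Z-score is one common choice; min-max scaling is another. The function names and sample values are hypothetical. The statistics are computed on training data only and then reused for new data, so the model never sees information from the test set during preprocessing.

```python
import statistics
from typing import List, Sequence, Tuple


def fit_zscore(values: Sequence[float]) -> Tuple[float, float]:
    """Compute the mean and population standard deviation of the training values."""
    return statistics.fmean(values), statistics.pstdev(values)


def apply_zscore(values: Sequence[float], mean: float, std: float) -> List[float]:
    """Rescale values to zero mean and unit variance using precomputed statistics."""
    if std == 0:
        # A constant feature carries no information; map it to zeros.
        return [0.0 for _ in values]
    return [(v - mean) / std for v in values]


train = [2.0, 4.0, 4.0, 4.0, 5.0, 5.0, 7.0, 9.0]
mean, std = fit_zscore(train)  # mean 5.0, std 2.0
print(apply_zscore(train, mean, std))
print(apply_zscore([5.0, 11.0], mean, std))  # new data reuses the training statistics
```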

To measure how models perform beyond training, researchers rely on evaluation metrics and benchmark datasets that allow fair testing and comparison. Popular collections like UCF101, HMDB51, and Kinetics include thousands of labeled video clips for human action recognition. On the sensor side, datasets gathered from smartphones and wearables provide motion signals that help make recognition models more robust across different environments.
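Results on video benchmarks such as Kinetics are commonly reported as top-1 and top-5 accuracy: a clip counts as correctly classified if its true label is among the model’s k highest-scoring classes. Here is a minimal sketch of that metric. The `top_k_accuracy` function, class names, and scores are made up for illustration and are not taken from any benchmark.

```python
from typing import Dict, Sequence


def top_k_accuracy(
    scores: Sequence[Dict[str, float]],
    true_labels: Sequence[str],
    k: int = 1,
) -> float:
    """Fraction of clips whose true label is among the k highest-scoring classes."""
    if len(scores) != len(true_labels):
        raise ValueError("scores and true_labels must have the same length")
    if not scores:
        raise ValueError("need at least one clip")
    hits = 0
    for clip_scores, truth in zip(scores, true_labels):
        # Sort class names by score, highest first, and keep the top k.
        top_k = sorted(clip_scores, key=lambda c: clip_scores[c], reverse=True)[:k]
        if truth in top_k:
            hits += 1
    return hits / len(true_labels)


# Hypothetical model outputs for three video clips.
scores = [
    {"walking": 0.7, "running": 0.2, "waving": 0.1},
    {"walking": 0.5, "running": 0.4, "waving": 0.1},
    {"walking": 0.1, "running": 0.3, "waving": 0.6},
]
truth = ["walking", "running", "running"]
print(top_k_accuracy(scores, truth, k=1))  # only the first clip is right at k=1
print(top_k_accuracy(scores, truth, k=2))  # all three are right at k=2
```

Top-k accuracy with k greater than 1 is more forgiving when several classes look alike, such as walking and running, which is why benchmarks often report both numbers.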

Of the different ways to detect human actions, computer vision has quickly become one of the most popular and widely researched. Its key advantage is that it can pull rich details straight from images and video. By looking at pixels frame by frame and analyzing motion patterns, it can recognize activities in real time without the need for people to wear extra devices.
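A classic, very simple way to find motion in video is frame differencing: compare each pixel with the same pixel in the previous frame and flag large changes. Modern HAR systems learn much richer features than this, so treat the sketch below only as an illustration of frame-by-frame analysis. It assumes small grayscale frames stored as nested lists; the helper names and the threshold of 25 are hypothetical.

```python
from typing import List

Frame = List[List[int]]  # grayscale image, pixel values 0-255


def motion_mask(prev: Frame, curr: Frame, threshold: int = 25) -> List[List[bool]]:
    """Mark pixels whose brightness changed by more than `threshold` between frames."""
    if len(prev) != len(curr) or any(len(a) != len(b) for a, b in zip(prev, curr)):
        raise ValueError("frames must have the same shape")
    return [
        [abs(c - p) > threshold for p, c in zip(prev_row, curr_row)]
        for prev_row, curr_row in zip(prev, curr)
    ]


def motion_fraction(mask: List[List[bool]]) -> float:
    """Share of pixels flagged as moving, a crude per-frame motion score."""
    total = sum(len(row) for row in mask)
    moving = sum(sum(row) for row in mask)
    return moving / total if total else 0.0


# Two tiny 3x4 frames: a bright "object" moves one pixel to the right.
frame_a = [
    [10, 200, 10, 10],
    [10, 200, 10, 10],
    [10, 10, 10, 10],
]
frame_b = [
    [10, 10, 200, 10],
    [10, 10, 200, 10],
    [10, 10, 10, 10],
]
mask = motion_mask(frame_a, frame_b)
print(motion_fraction(mask))  # 4 of 12 pixels changed
```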

Recent progress in deep learning, especially convolutional neural networks (CNNs), which are designed to analyze images, has made computer vision faster, more accurate, and more reliable.
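At the core of a CNN is the convolution: a small grid of weights (a kernel) slides over the image, and each output value is a weighted sum of the pixels beneath it. In a trained CNN the kernel weights are learned from data; the hand-picked edge-detecting kernel below is only for illustration. Like the convolution layers in common deep learning frameworks, this sketch computes cross-correlation (the kernel is not flipped), here with no padding and a stride of 1.

```python
from typing import List

Matrix = List[List[float]]


def conv2d(image: Matrix, kernel: Matrix) -> Matrix:
    """Valid (no padding, stride 1) 2D cross-correlation, as computed by CNN layers."""
    kh, kw = len(kernel), len(kernel[0])
    ih, iw = len(image), len(image[0])
    if kh > ih or kw > iw:
        raise ValueError("kernel must not be larger than the image")
    out = []
    for i in range(ih - kh + 1):
        row = []
        for j in range(iw - kw + 1):
            # Weighted sum of the image patch currently under the kernel.
            total = sum(
                kernel[a][b] * image[i + a][j + b]
                for a in range(kh)
                for b in range(kw)
            )
            row.append(total)
        out.append(row)
    return out


# A dark-to-bright vertical edge, and a kernel that responds to such edges.
image = [
    [0, 0, 9, 9],
    [0, 0, 9, 9],
    [0, 0, 9, 9],
]
vertical_edge = [
    [-1, 1],
    [-1, 1],
]
print(conv2d(image, vertical_edge))  # strong response only where the edge is
```

A CNN stacks many such filters, and deeper layers combine simple responses like edges into patterns such as limbs and body outlines.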

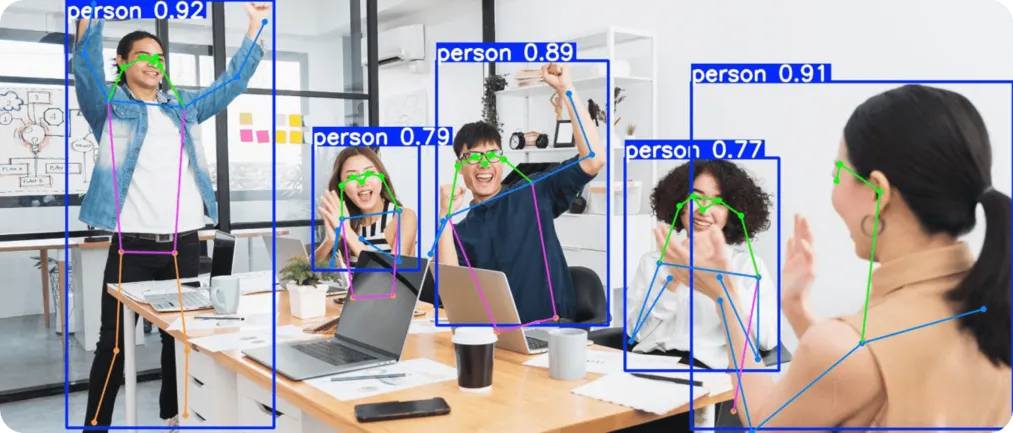

Widely used state-of-the-art computer vision models such as Ultralytics YOLO11 build on these advances. YOLO11 supports tasks such as object detection, instance segmentation, tracking people across video frames, and human pose estimation, which makes it well suited to human activity recognition.

Ultralytics YOLO11 is a Vision AI model designed for both speed and precision. Three of its core capabilities are especially useful for human activity recognition: object detection, object tracking, and pose estimation.

Object detection identifies and locates people in a scene, tracking follows their movements across video frames to recognize action sequences, and pose estimation maps key human body joints to distinguish between similar activities or detect sudden changes like a fall.
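Pose estimation returns a set of body keypoints for each person, and simple geometry on those points already separates some similar postures. The sketch below computes the knee angle from the hip, knee, and ankle, assuming the 17-keypoint COCO layout that COCO-trained pose models output (index 11 is the left hip, 13 the left knee, 15 the left ankle). A nearly straight leg is consistent with standing and a sharply bent one with sitting. The helper names and the 160° and 120° thresholds are hypothetical, not part of the Ultralytics API.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float]  # (x, y) in image pixels

# Indices in the 17-keypoint COCO layout (assumed input format).
LEFT_HIP, LEFT_KNEE, LEFT_ANKLE = 11, 13, 15


def joint_angle(a: Point, b: Point, c: Point) -> float:
    """Angle at point b, in degrees, between the segments b->a and b->c."""
    v1 = (a[0] - b[0], a[1] - b[1])
    v2 = (c[0] - b[0], c[1] - b[1])
    norm = math.hypot(*v1) * math.hypot(*v2)
    if norm == 0:
        raise ValueError("keypoints must be distinct")
    cos_theta = (v1[0] * v2[0] + v1[1] * v2[1]) / norm
    # Clamp to [-1, 1] to guard against floating-point drift before acos.
    return math.degrees(math.acos(max(-1.0, min(1.0, cos_theta))))


def leg_posture(keypoints: Sequence[Point], straight_deg: float = 160.0, bent_deg: float = 120.0) -> str:
    """Hypothetical rule: label the left leg as standing, sitting, or uncertain."""
    angle = joint_angle(keypoints[LEFT_HIP], keypoints[LEFT_KNEE], keypoints[LEFT_ANKLE])
    if angle >= straight_deg:
        return "standing"
    if angle <= bent_deg:
        return "sitting"
    return "uncertain"


def make_pose(hip: Point, knee: Point, ankle: Point) -> List[Point]:
    """Build a 17-point pose where only the left-leg keypoints matter for this demo."""
    kps = [(0.0, 0.0)] * 17
    kps[LEFT_HIP], kps[LEFT_KNEE], kps[LEFT_ANKLE] = hip, knee, ankle
    return kps


standing = make_pose((100, 200), (100, 300), (100, 400))  # leg fully straight
sitting = make_pose((100, 200), (200, 200), (200, 300))   # thigh horizontal, shin vertical
print(leg_posture(standing), leg_posture(sitting))
```

Real keypoints are noisy and sometimes missing, so a practical system would check keypoint confidence and smooth angles over several frames before acting on them.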

For example, insights from the model can be used to tell the difference between someone sitting quietly, then standing up, and finally raising their arms to cheer. These simple everyday actions may appear similar at a glance, but carry very different meanings when analyzed in a sequence.
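Once each frame has an action label, for example from a classifier running on pose keypoints, the sequence can be summarized by merging consecutive identical labels and discarding very short runs, which are often prediction noise. The sketch below does this with run-length grouping. The `action_segments` function and its `min_frames` parameter are hypothetical; in practice the minimum run length would be tuned to the video’s frame rate.

```python
from itertools import groupby
from typing import List, Sequence, Tuple


def action_segments(frame_labels: Sequence[str], min_frames: int = 3) -> List[Tuple[str, int]]:
    """Merge consecutive identical labels into (action, frame_count) segments.

    Runs shorter than `min_frames` are dropped as noise, and neighboring
    segments that then share a label are merged. Frame counts include only
    frames from kept runs.
    """
    segments: List[Tuple[str, int]] = []
    for label, run in groupby(frame_labels):
        length = sum(1 for _ in run)
        if length < min_frames:
            continue  # ignore brief flickers in the per-frame predictions
        if segments and segments[-1][0] == label:
            segments[-1] = (label, segments[-1][1] + length)
        else:
            segments.append((label, length))
    return segments


# Hypothetical per-frame predictions with a short flicker after "sitting".
frames = ["sitting"] * 5 + ["standing"] + ["sitting"] * 2 + ["standing"] * 4 + ["arms_raised"] * 6
print(action_segments(frames))  # sitting, then standing, then arms_raised
```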

Next, let’s take a closer look at how human activity recognition powered by computer vision is applied in real-world use cases that impact our daily lives.

In healthcare, small changes in movement can provide useful insights into a person’s condition. For instance, a stumble by an elderly patient or the angle of a limb during rehabilitation may reveal risks or progress. These signs are easy to miss with traditional methods such as periodic checkups.

YOLO11 can help by using pose estimation and image analysis to monitor patients in real time. It can be used to detect falls, track rehabilitation exercises, and observe daily activities such as walking or stretching. Because it relies on camera footage rather than sensors or wearable devices, it offers a simple way to gather information that supports patient care without asking patients to wear anything.
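One simple way to turn pose and detection output into a fall alert is a rule over a short history of frames: a fall often shows up as the hips dropping quickly while the person’s bounding box becomes wider than it is tall. The sketch below encodes that heuristic. The `FrameObservation` structure, its fields, and the thresholds are hypothetical and untuned; a real system would validate such a rule on actual footage or replace it with a trained classifier.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass
class FrameObservation:
    """Hypothetical per-frame measurements for one tracked person (y grows downward)."""
    hip_y: float       # vertical position of the hip center, in pixels
    box_width: float   # bounding-box width, in pixels
    box_height: float  # bounding-box height, in pixels


def looks_like_fall(
    history: Sequence[FrameObservation],
    fps: float,
    min_drop_speed: float = 300.0,  # pixels per second; illustrative value
    min_aspect: float = 1.2,        # width / height once the person is down
) -> bool:
    """Flag a fall if the hips dropped fast and the body now lies roughly horizontal."""
    if len(history) < 2 or fps <= 0:
        return False
    first, last = history[0], history[-1]
    duration_s = (len(history) - 1) / fps
    # Positive speed means the hips moved down the image.
    drop_speed = (last.hip_y - first.hip_y) / duration_s
    aspect = last.box_width / last.box_height if last.box_height > 0 else 0.0
    return drop_speed >= min_drop_speed and aspect >= min_aspect


# Ten frames at 30 fps: hips drop 150 px while the box goes from tall to wide.
fall = [
    FrameObservation(300 + i * 150 / 9, 80 + i * 120 / 9, 200 - i * 100 / 9)
    for i in range(10)
]
walking = [FrameObservation(300, 80, 200) for _ in range(10)]
lying_still = [FrameObservation(450, 200, 100) for _ in range(10)]
print(looks_like_fall(fall, fps=30), looks_like_fall(walking, fps=30), looks_like_fall(lying_still, fps=30))
```

Requiring both a fast drop and a horizontal posture keeps the rule from firing when someone simply lies down slowly or is already resting in bed.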

Security systems rely on detecting unusual human activities quickly, such as someone loitering, running in a restricted area, or showing sudden aggression. These signs are often missed in busy environments where security guards can’t manually watch everything. That’s where computer vision and YOLO11 come in.

YOLO11 makes security monitoring easier by powering real-time video surveillance that can detect suspicious movements and send instant alerts. It supports crowd safety in public spaces and strengthens intrusion detection in private areas.
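Because tracking gives each person a persistent ID across frames, a rule like loitering detection becomes easy to express: if the same ID stays inside a watched zone longer than a time limit, raise an alert. Below is a minimal sketch, assuming tracked detections arrive as (frame index, track ID, center x, center y) tuples. The zone, the time limit, and the `loitering_ids` function are hypothetical.

```python
from typing import Dict, Iterable, Set, Tuple

Detection = Tuple[int, int, float, float]  # (frame_index, track_id, center_x, center_y)
Zone = Tuple[float, float, float, float]   # (x_min, y_min, x_max, y_max)


def in_zone(x: float, y: float, zone: Zone) -> bool:
    """True if the point lies inside the axis-aligned zone (edges included)."""
    x_min, y_min, x_max, y_max = zone
    return x_min <= x <= x_max and y_min <= y <= y_max


def loitering_ids(detections: Iterable[Detection], zone: Zone, fps: float, max_seconds: float) -> Set[int]:
    """Return track IDs that stayed in the zone for longer than max_seconds.

    Stepping outside the zone resets a person's timer; frames where the
    person is simply not detected do not.
    """
    entered_at: Dict[int, int] = {}  # track_id -> frame where the current stay began
    flagged: Set[int] = set()
    for frame, track_id, x, y in sorted(detections):
        if in_zone(x, y, zone):
            start = entered_at.setdefault(track_id, frame)
            if (frame - start) / fps > max_seconds:
                flagged.add(track_id)
        else:
            entered_at.pop(track_id, None)
    return flagged


zone = (0.0, 0.0, 100.0, 100.0)
dets = (
    [(f, 1, 50.0, 50.0) for f in range(70)]                             # stays 7 s
    + [(f, 2, 50.0 if f < 20 else 150.0, 50.0) for f in range(70)]      # passes through
    + [(f, 3, 50.0 if f != 40 else 150.0, 50.0) for f in range(70)]     # steps out briefly
)
print(loitering_ids(dets, zone, fps=10, max_seconds=5))  # only track 1 exceeds 5 s
```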

With this approach, security guards can work alongside computer vision systems: the system flags unusual activity, and people decide how to respond. This human-computer partnership enables faster responses to suspicious activities.

Here are some of the advantages of using computer vision for human activity recognition:

While there are many benefits to using computer vision for HAR, there are also limitations to consider. Here are some factors to keep in mind:

Artificial intelligence and computer vision are making it possible for machines to recognize human actions more accurately and in real time. By analyzing video frames and patterns of movement, these systems can identify both everyday gestures and sudden changes. As the technology continues to improve, human activity recognition is moving beyond research labs and becoming a practical tool for healthcare, security, and everyday applications.

Explore more about AI by visiting our GitHub repository and joining our community. Check out our solution pages to learn about AI in robotics and computer vision in manufacturing. Discover our licensing options to get started with Vision AI.