Find out how self-supervised learning denoises images, removes noise, and enhances clarity using AI techniques for photography, medical, and vision systems.


Images are part of our daily lives, from the photos we take to the videos recorded by cameras in public places. They contain insightful information, and cutting-edge technology makes it possible to analyze and interpret this data.

In particular, computer vision, a branch of artificial intelligence (AI), enables machines to process visual information and understand what they see, much like humans do. However, in real-world applications, images are often far from perfect.

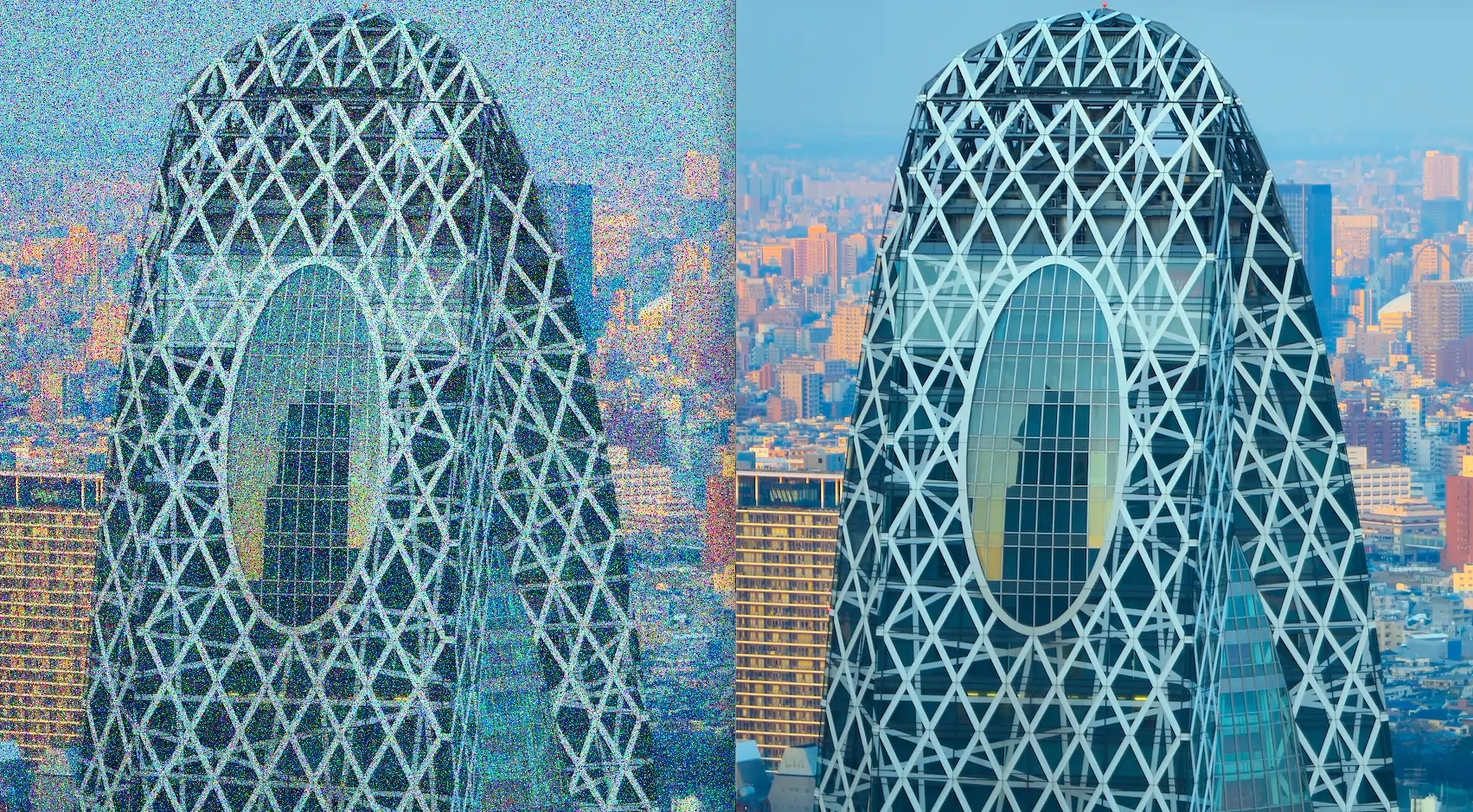

Image noise caused by rain, dust, low light, or sensor limitations can hide important details, making it harder for Vision AI models to detect objects or interpret scenes accurately. Image denoising helps reduce this noise, making it possible for Vision AI models to see details more clearly and make better predictions.

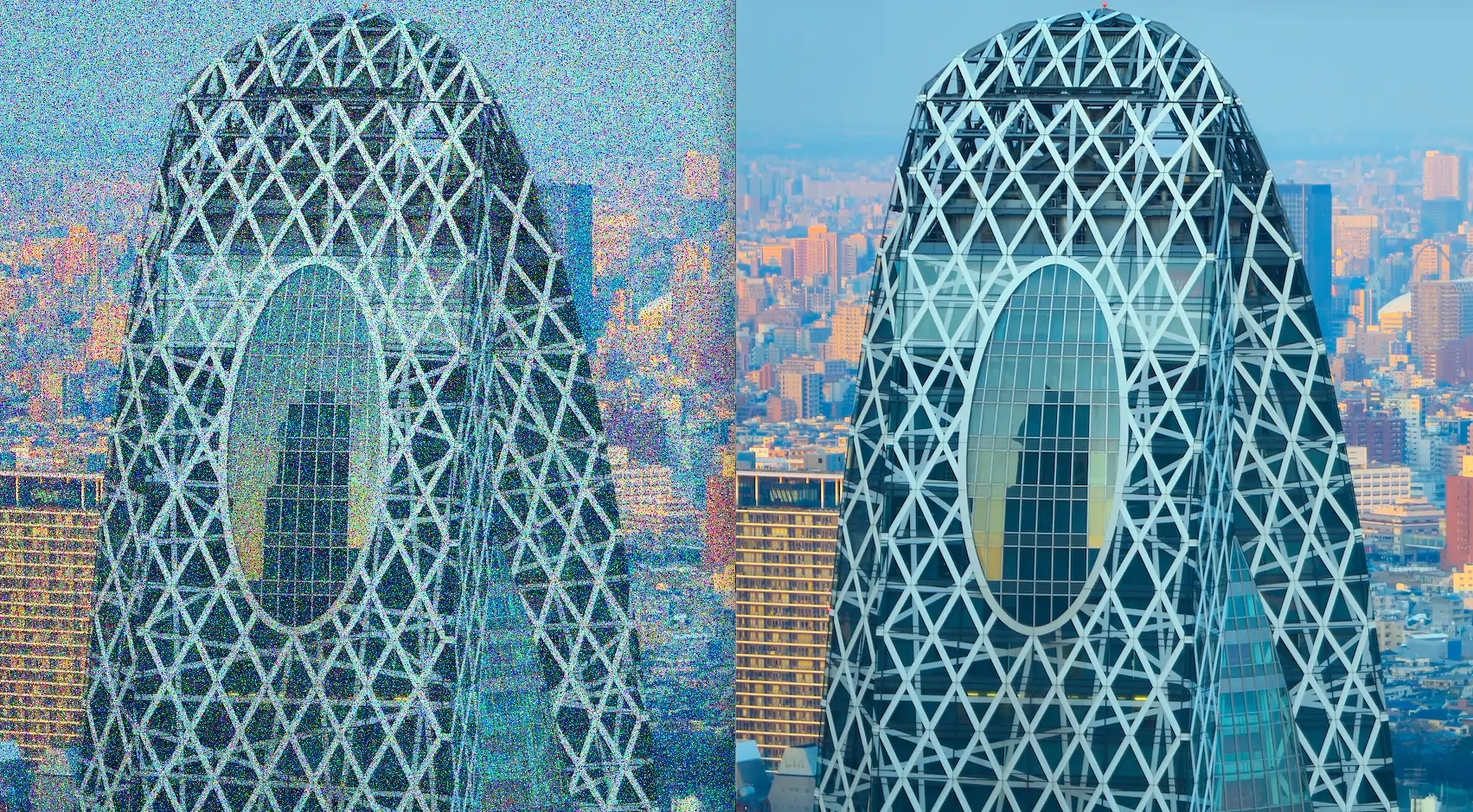

Traditionally, image denoising has relied on supervised learning, where models are trained using pairs of noisy and clean images to learn how to remove noise. However, collecting perfectly clean reference images isn’t always practical.

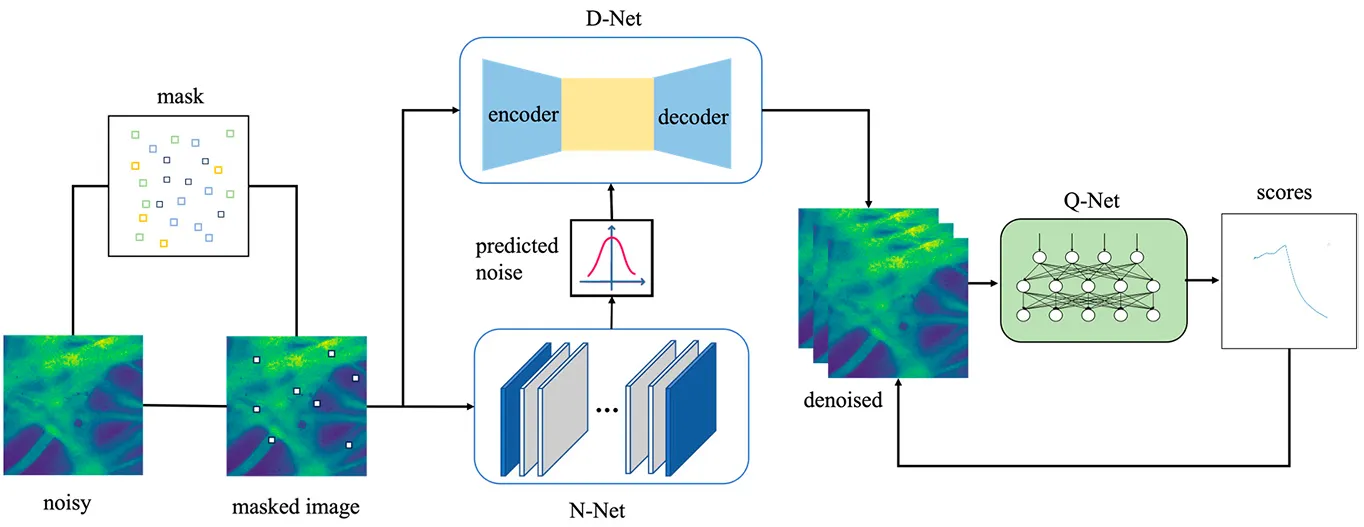

To tackle this challenge, researchers have developed self-supervised image denoisers. These models learn directly from noisy data, generating their own training signals so they can remove noise and keep important details without needing clean reference images.

In this article, we’ll take a closer look at self-supervised image denoisers, how they work, the key techniques behind them, and their real-world applications. Let’s get started!

Noisy images can make it difficult for Vision AI models to interpret what’s in a picture. A photo taken in low-light conditions, for instance, may appear grainy or blurred, hiding subtle features that help a model identify objects accurately.

In supervised learning–based denoising, models are trained using pairs of images, one noisy and one clean, to learn how to remove unwanted noise. While this approach works well, collecting perfectly clean reference data is often time-consuming and difficult in real-world scenarios.

That’s why researchers have turned to self-supervised image denoising. Self-supervised image denoising builds on the concept of self-supervised learning, where models teach themselves by creating their own learning signals from the data.

Since this method doesn’t depend on large datasets of paired noisy and clean images, self-supervised denoising is quicker to set up, more scalable, and easier to apply across domains such as low-light photography, medical imaging, and satellite image analysis, where clean reference images are often unavailable.

Instead of relying on clean reference images, this approach trains directly on noisy data by predicting masked pixels or reconstructing missing parts. Through this process, the model learns to tell the difference between meaningful image details and random noise, leading to clearer and more accurate outputs.
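As a rough sketch of what "predicting masked pixels" can look like, the NumPy snippet below follows the masking idea popularized by the Noise2Void method: a few random pixels in a noisy image are overwritten with the value of a randomly chosen neighbor, and the training loss is computed only at those hidden pixels. The helper names (`make_blind_spot_batch`, `masked_mse`), the neighborhood `radius`, and the number of masked pixels are illustrative assumptions, and `prediction` is only a placeholder for the output of a real denoising network.

```python
import numpy as np

# Hypothetical helpers illustrating Noise2Void-style masking; not a library API.
def make_blind_spot_batch(noisy: np.ndarray, n_masked: int,
                          rng: np.random.Generator, radius: int = 2):
    """Hide random pixels by overwriting each with a randomly chosen neighbor.

    Returns (net_input, target, mask): the corrupted network input, the untouched
    noisy image used as the target, and a boolean mask of the hidden pixels.
    Assumes the image is larger than 2 * radius in each dimension.
    """
    h, w = noisy.shape
    net_input = noisy.copy()
    mask = np.zeros((h, w), dtype=bool)
    for _ in range(n_masked):
        y, x = int(rng.integers(0, h)), int(rng.integers(0, w))
        # Draw a non-zero offset so a pixel's own value is never copied back.
        dy, dx = 0, 0
        while dy == 0 and dx == 0:
            dy, dx = (int(v) for v in rng.integers(-radius, radius + 1, size=2))
        # Reflect the offset at the borders; clipping could land back on (y, x).
        ny = y + dy if 0 <= y + dy < h else y - dy
        nx = x + dx if 0 <= x + dx < w else x - dx
        net_input[y, x] = noisy[ny, nx]  # read from the original noisy image
        mask[y, x] = True
    return net_input, noisy, mask


def masked_mse(prediction: np.ndarray, target: np.ndarray, mask: np.ndarray) -> float:
    """Squared error computed only at the hidden pixels."""
    return float(np.mean((prediction[mask] - target[mask]) ** 2))


rng = np.random.default_rng(0)
noisy = rng.normal(0.5, 0.1, size=(64, 64))  # stand-in for a real noisy image
net_input, target, mask = make_blind_spot_batch(noisy, n_masked=64, rng=rng)

prediction = net_input  # placeholder for a network's output; here it just copies its input
print(masked_mse(noisy, target, mask))       # copying the unmasked image: zero loss, nothing learned
print(masked_mse(prediction, target, mask))  # copying the masked input: the hidden values are missing
```

Without the mask, a network could reach a perfect loss simply by copying its input. Hiding exactly the pixels it is scored on is what forces it to infer them from the surrounding context.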

While it might seem similar to unsupervised learning, self-supervised learning is actually a special case of it. The key distinction is that in self-supervised learning, the model creates its own labels or training signals from the data to learn a specific task. In contrast, unsupervised learning focuses on finding hidden patterns or structures in data without any explicit task or predefined goal.

Self-supervised denoisers can learn in several ways. Some fill in masked or missing pixels, while others compare multiple noisy versions of the same image to find the details that stay consistent across them.
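The "compare multiple noisy versions" idea, known from the Noise2Noise method, rests on a short argument. Assume two observations of the same scene $s$, namely $y_1 = s + n_1$ and $y_2 = s + n_2$, where $n_2$ has zero mean and is independent of $s$ and $n_1$ (an assumption that does not hold for every kind of real-world noise). Training a denoiser $f$ to map $y_1$ to $y_2$ then gives:

$$
\begin{aligned}
\mathbb{E}\,\lVert f(y_1) - y_2 \rVert^2
&= \mathbb{E}\,\lVert (f(y_1) - s) - n_2 \rVert^2 \\
&= \mathbb{E}\,\lVert f(y_1) - s \rVert^2 - 2\,\mathbb{E}\big[(f(y_1) - s)^\top n_2\big] + \mathbb{E}\,\lVert n_2 \rVert^2 \\
&= \mathbb{E}\,\lVert f(y_1) - s \rVert^2 + \mathbb{E}\,\lVert n_2 \rVert^2 .
\end{aligned}
$$

The cross term vanishes because $f(y_1) - s$ depends only on $s$ and $n_1$, while $n_2$ is independent, zero-mean noise. The remaining term $\mathbb{E}\,\lVert n_2 \rVert^2$ does not depend on $f$, so the denoiser that best predicts the second noisy copy is also the one that best predicts the clean scene.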

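For example, a popular method known as blind-spot learning trains the denoiser to ignore the pixel it is reconstructing and rely on the surrounding context instead. This works because random noise is typically assumed to vary independently from pixel to pixel, so it cannot be predicted from neighboring pixels, while real image structure can. Over time, the model learns to rebuild high-quality images while preserving essential textures, edges, and colors.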
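To make the blind-spot idea concrete, here is a minimal NumPy sketch that uses the simplest possible blind-spot "model": predict every pixel as the average of its eight neighbors, never the pixel itself. It assumes zero-mean noise that is independent from pixel to pixel. Under that assumption, the result behind Noise2Self says that, in expectation, the loss measured against the noisy image equals the loss against the clean image plus the noise variance, so the first can be minimized as a proxy for the second. The synthetic scene, the noise level `sigma`, and the helper name `neighbor_mean` are illustrative assumptions.

```python
import numpy as np

def neighbor_mean(img: np.ndarray) -> np.ndarray:
    """Simplest blind-spot predictor: the mean of each pixel's 8 neighbors (hypothetical helper)."""
    # "reflect" mirrors without repeating the edge value, so no pixel becomes its own neighbor.
    p = np.pad(img, 1, mode="reflect")
    h, w = img.shape
    total = np.zeros((h, w))
    for dy in (-1, 0, 1):
        for dx in (-1, 0, 1):
            if dy == 0 and dx == 0:
                continue  # the blind spot: the center pixel is never used
            total += p[1 + dy : 1 + dy + h, 1 + dx : 1 + dx + w]
    return total / 8.0


rng = np.random.default_rng(0)
yy, xx = np.mgrid[0:256, 0:256] / 256.0
clean = 0.5 + 0.25 * np.sin(6 * xx) * np.cos(4 * yy)  # smooth synthetic scene (assumption)
sigma = 0.1
noisy = clean + rng.normal(0.0, sigma, clean.shape)   # independent, zero-mean noise

pred = neighbor_mean(noisy)
self_loss = np.mean((pred - noisy) ** 2)  # computable from the noisy image alone
true_loss = np.mean((pred - clean) ** 2)  # needs the clean image, unavailable in practice
print(f"self-supervised loss - sigma^2: {self_loss - sigma**2:.5f}")
print(f"loss against the clean image:   {true_loss:.5f}")
```

The two printed numbers should be close, even though only the first can be computed without a clean image. A trained blind-spot network replaces the fixed neighbor average with a learned function of a larger neighborhood, but the center pixel's own value stays excluded.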

Next, we’ll explore the process behind how self-supervised learning removes noise.

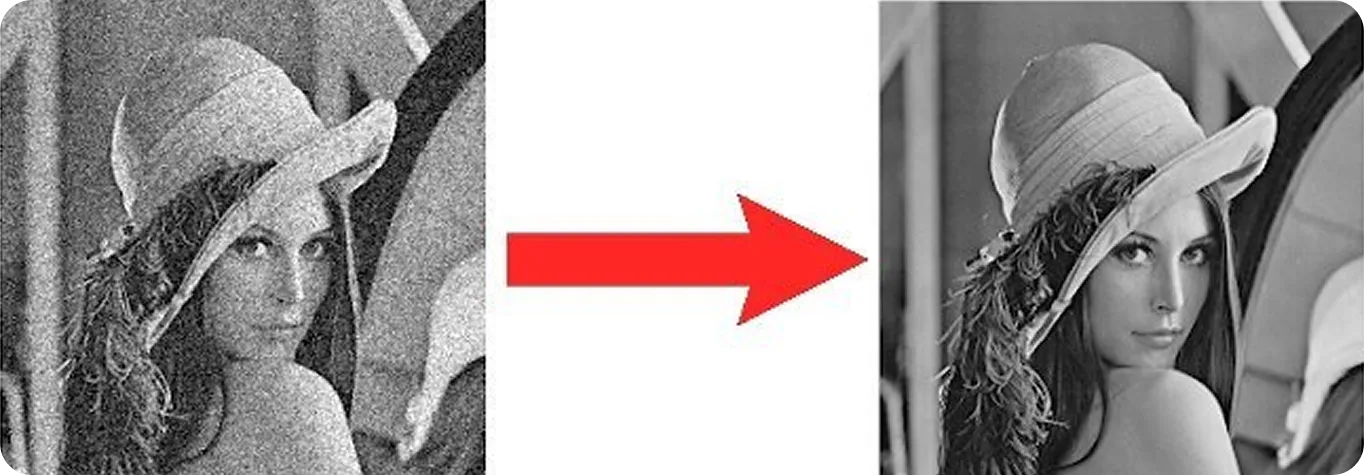

The process of self-supervised denoising typically begins by feeding noisy images into the denoising model. The model analyzes nearby pixels to estimate what each unclear or masked pixel should look like, gradually learning to tell the difference between noise and real visual details.

Consider an image of a dark, grainy sky. The model looks at nearby stars and surrounding patterns to predict what each noisy patch should look like without the noise. By repeating this process across the entire image, it learns to separate random noise from meaningful features, producing a clearer and more accurate result.

In other words, the model predicts a cleaner version of the image based on context, without ever needing a perfectly clean reference. This process can be implemented using different types of models, each with unique strengths in handling noise.

Here’s a quick look at the types of models commonly used for self-supervised image denoising:

Training these models with images taken in different lighting and ISO settings helps them work well in many real-world situations. In digital cameras, ISO settings control how much the camera brightens the image by amplifying the signal it receives.

A higher ISO makes photos brighter in dark places but also increases noise and reduces detail. By learning from images taken at different ISO levels, the models get better at telling real details apart from noise, leading to clearer and more accurate results.
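One way to expose a denoiser to many ISO levels is to synthesize them. The sketch below assumes a simplified Poisson-Gaussian sensor model, not a calibrated camera model: raising the ISO by a factor k is treated as collecting k times fewer photons (a darker scene or a shorter exposure), adding fixed read noise, and then amplifying the result by k so the image ends up just as bright on average. The function name `simulate_capture` and the parameters `base_iso`, `photons_at_base`, and `read_noise` are hypothetical.

```python
import numpy as np

def simulate_capture(scene: np.ndarray, iso: float, rng: np.random.Generator,
                     base_iso: float = 100.0, photons_at_base: float = 1000.0,
                     read_noise: float = 2.0) -> np.ndarray:
    """Simulate a photo of `scene` (values in [0, 1]) at a given ISO.

    Simplified Poisson-Gaussian model with hypothetical parameters: an ISO that is
    k times higher collects k times fewer photons, and the sensor output is then
    amplified k times so the final image is, on average, just as bright.
    """
    gain = iso / base_iso
    expected_photons = scene * photons_at_base / gain
    # Shot noise (Poisson) on the collected photons, plus Gaussian read noise.
    electrons = rng.poisson(expected_photons) + rng.normal(0.0, read_noise, scene.shape)
    return electrons * gain / photons_at_base  # amplify and rescale so the mean matches the scene


rng = np.random.default_rng(0)
scene = np.full((128, 128), 0.5)  # flat mid-gray patch, so any variation is noise
noise_std = {}
for iso in (100, 800, 3200):
    shot = simulate_capture(scene, iso, rng)
    noise_std[iso] = float(shot.std())
    print(f"ISO {iso:4d}: mean {shot.mean():.3f}, noise std {noise_std[iso]:.3f}")
```

Because fewer photons are collected and the gain then amplifies the noise along with the signal, the measured noise grows with ISO while the average brightness stays the same. Pairing the same scenes at several simulated ISO levels is one way to build the kind of varied training data described above.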

Denoisers learn to tell noise from real image details through different training techniques, which are separate from the model types used for denoising. Model types such as CNNs, autoencoders, and transformers describe the structure of the network and how it processes visual information.

Training techniques, on the other hand, define how the model learns. Some methods use context-based prediction, where the model fills in missing or masked pixels by using information from nearby areas.

Others use reconstruction-based learning, where the model compresses an image into a simpler form and then rebuilds it, helping it recognize meaningful structures like edges and textures while filtering out random noise.
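Reconstruction-based learning can be illustrated with the simplest possible autoencoder: a linear one. For a squared-error loss, the optimal linear autoencoder with a k-unit bottleneck projects its input onto the top k principal components, so the sketch below computes it in closed form with an SVD instead of training a network. The synthetic 8x8 patches (built from two ramps and an edge), the helper name, and `k=3` are illustrative assumptions; a real denoising autoencoder would be a nonlinear neural network trained by gradient descent.

```python
import numpy as np

def linear_autoencoder_denoise(patches: np.ndarray, k: int) -> np.ndarray:
    """Compress each row to k numbers and rebuild it (closed-form linear autoencoder).

    Hypothetical helper: for squared error, the best linear autoencoder with a
    k-unit bottleneck projects onto the top-k principal components, found here by SVD.
    """
    mean = patches.mean(axis=0)
    centered = patches - mean
    _, _, vt = np.linalg.svd(centered, full_matrices=False)
    components = vt[:k]                # k x d: shared encoder/decoder weights
    codes = centered @ components.T    # encode: each patch becomes k numbers
    return codes @ components + mean   # decode: rebuild the full patch


rng = np.random.default_rng(0)
t = np.linspace(0.0, 1.0, 8)
yy, xx = np.meshgrid(t, t, indexing="ij")
# Illustrative 8x8 structure: a horizontal ramp, a vertical ramp, and a vertical edge.
basis = np.stack([xx.ravel(), yy.ravel(), (xx > 0.5).ravel().astype(float)])
clean = rng.normal(size=(2000, 3)) @ basis           # 2000 clean patches, 64 values each
noisy = clean + rng.normal(0.0, 0.1, clean.shape)    # independent noise in all 64 dimensions

denoised = linear_autoencoder_denoise(noisy, k=3)
print(f"MSE before: {np.mean((noisy - clean) ** 2):.4f}")
print(f"MSE after:  {np.mean((denoised - clean) ** 2):.4f}")
```

The model is fitted on the noisy patches alone. Because the random noise is spread across all 64 dimensions while the structure lives in only a few, squeezing each patch through the bottleneck keeps most of the structure and discards most of the noise.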

Together, the model type and the training technique determine how effectively a denoiser can clean images. By combining the right architecture with the right learning approach, self-supervised denoisers can adapt to many types of noise and produce clearer, more accurate images even without clean reference data.

Key techniques in self-supervised AI image denoising

Here are some of the most widely used training techniques that enable effective self-supervised image denoising:

Image denoising is a careful balance between two goals: reducing noise and keeping fine details intact. Too much denoising can make an image look soft or blurry, while too little can leave unwanted grain or artifacts behind.
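The trade-off is easy to see numerically. The sketch below denoises a noisy 1-D wave with a moving average of increasing width: a width of 1 does nothing, moderate widths average the noise away, and very wide windows start to flatten the wave itself. The signal, noise level, and widths are illustrative assumptions, and the moving average is a simple stand-in for any denoiser with a strength setting, not a self-supervised model.

```python
import numpy as np

rng = np.random.default_rng(0)
n = np.arange(1024)
clean = np.sin(2 * np.pi * n / 64)                 # "detail": a wave with a 64-sample period
noisy = clean + rng.normal(0.0, 0.2, n.shape)


def box_smooth(signal: np.ndarray, width: int) -> np.ndarray:
    """Moving-average denoiser: a larger width means stronger denoising."""
    kernel = np.ones(width) / width
    return np.convolve(signal, kernel, mode="same")


widths = (1, 3, 5, 9, 17, 33, 51)
inner = slice(25, -25)  # skip the borders, where the zero padding of mode="same" distorts results
errors = {w: float(np.mean((box_smooth(noisy, w)[inner] - clean[inner]) ** 2)) for w in widths}
best = min(errors, key=errors.get)
for w in widths:
    print(f"width {w:2d}: MSE {errors[w]:.4f}")
print("best width:", best)
```

The error is high at both ends of the sweep: too little smoothing leaves the noise, and too much erases the wave along with it.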

To understand how well a model strikes this balance, researchers use evaluation metrics that measure both image clarity and detail preservation. These metrics show how well a model cleans up an image without losing important visual information.

Here are common evaluation metrics that help measure image quality and denoising performance:
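One widely used metric is peak signal-to-noise ratio (PSNR), defined in decibels as $10 \log_{10}(\text{MAX}^2 / \text{MSE})$, where MAX is the largest possible pixel value and MSE is the mean squared error against a clean reference. A minimal NumPy version follows, assuming pixel values scaled to [0, 1]:

```python
import numpy as np

def psnr(reference: np.ndarray, estimate: np.ndarray, data_range: float = 1.0) -> float:
    """Peak signal-to-noise ratio in dB; higher means closer to the clean reference."""
    mse = np.mean((np.asarray(reference, dtype=float) - np.asarray(estimate, dtype=float)) ** 2)
    if mse == 0:
        return float("inf")  # identical images
    return float(10.0 * np.log10(data_range ** 2 / mse))


reference = np.full((4, 4), 0.5)
estimate = reference + 0.1  # uniform error of 0.1, so MSE is 0.01
print(f"{psnr(reference, estimate):.1f} dB")  # 10 * log10(1 / 0.01) = 20.0 dB
```

Because PSNR needs a clean reference, it is typically computed on benchmark images where one exists. Libraries such as scikit-image also provide `skimage.metrics.peak_signal_noise_ratio` and `skimage.metrics.structural_similarity` (SSIM), which is often reported alongside PSNR because it compares local structure rather than raw pixel differences.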

Now that we have a better understanding of what denoising is, let’s explore how self-supervised image denoising is applied in real-world scenarios.

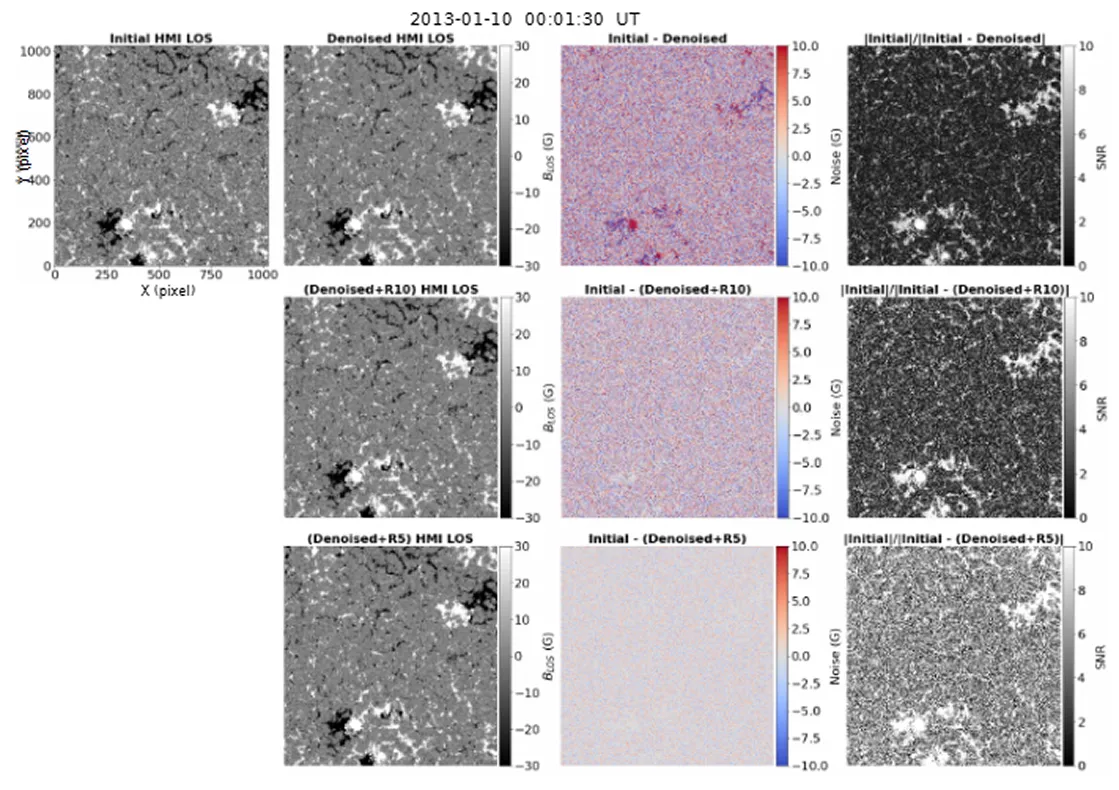

Taking clear photos of stars and galaxies isn’t easy. The night sky is dark, so cameras often require long exposure times, which can introduce unwanted noise. This noise can blur fine cosmic details and make faint signals harder to detect.

Traditional denoising tools can reduce noise, but they often remove important details along with it. Self-supervised denoising offers an alternative: by learning directly from noisy images, the AI model can recognize patterns that represent real features and separate them from random noise.

The result can be much clearer images of celestial objects such as stars, galaxies, and the Sun, revealing faint details that might otherwise go unnoticed and making the data more useful for scientific research.

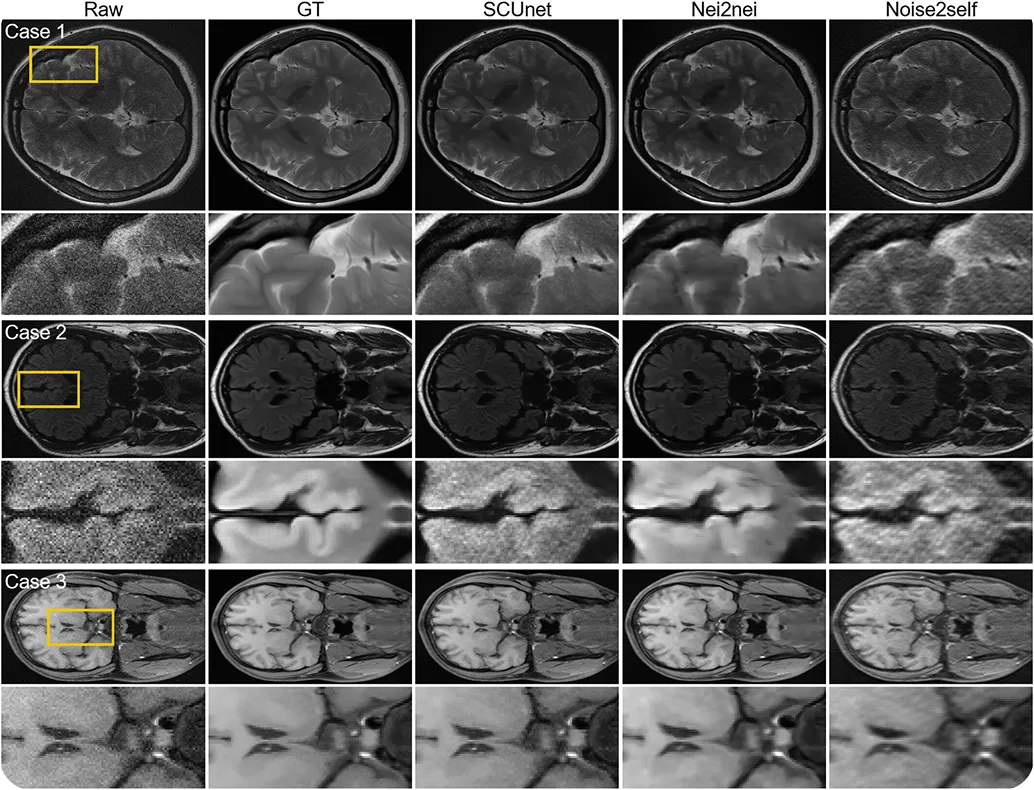

Medical scans like MRIs, CTs, and microscopy images often pick up noise that can make small details harder to see. This can be a problem when doctors need to spot early signs of illness or track changes over time.

Image noise can come from patient movement, low signal strength, or limits on how much radiation can be used. To make medical scans clearer, researchers have explored self-supervised denoising methods like Noise2Self and other similar approaches.

These models were trained directly on noisy brain MRI images, learning the noise patterns on their own and removing them without needing perfectly clean examples. The processed images showed sharper textures and better contrast, making fine structures easier to identify. AI-powered denoisers like these can help streamline diagnostic imaging workflows and make real-time analysis more efficient.

Denoising can have a significant impact across a wide range of computer vision applications. By removing unwanted noise and distortions, it produces cleaner and more consistent input data for Vision AI models to process.

Clearer images lead to improved performance in computer vision tasks such as object detection, instance segmentation, and image recognition. Here are some examples of applications where Vision AI models, such as Ultralytics YOLO11 and Ultralytics YOLO26, can benefit from denoising:

Here are some key benefits of using self-supervised denoising in imaging systems:

Despite its benefits, self-supervised denoising also comes with certain limitations. Here are a few factors to consider:

Self-supervised denoising helps AI models learn directly from noisy images, producing clearer results while preserving fine details. It can work well in challenging scenarios such as low-light, high-ISO, and highly detailed imagery. As AI continues to evolve, such techniques will likely play an essential role in various computer vision applications.

Join our community and explore our GitHub repository to discover more about AI. If you're looking to build your own Vision AI project, check out our licensing options. Explore more about applications like AI in healthcare and Vision AI in retail by visiting our solutions pages.