Explore how vision AI can be used for smarter driver attention monitoring to detect fatigue, prevent drowsy driving, and make roads safer for everyone.

A long stretch of highway, a late-night drive, or a busy day can leave any driver feeling tired. But even a brief lapse in attention can make a big difference when it comes to road safety.

That’s why many car manufacturers are turning to new technology to help keep drivers alert and focused. From sensors that track steering patterns to cameras that watch for signs of fatigue, today’s vehicles are getting smarter about recognizing when a driver’s attention starts to drift.

In particular, thanks to computer vision, a branch of artificial intelligence (AI), machines can now interpret images and video much as humans do. In vehicles, driver attention monitoring systems use computer vision to analyze a driver’s posture, facial expressions, and eye movements.

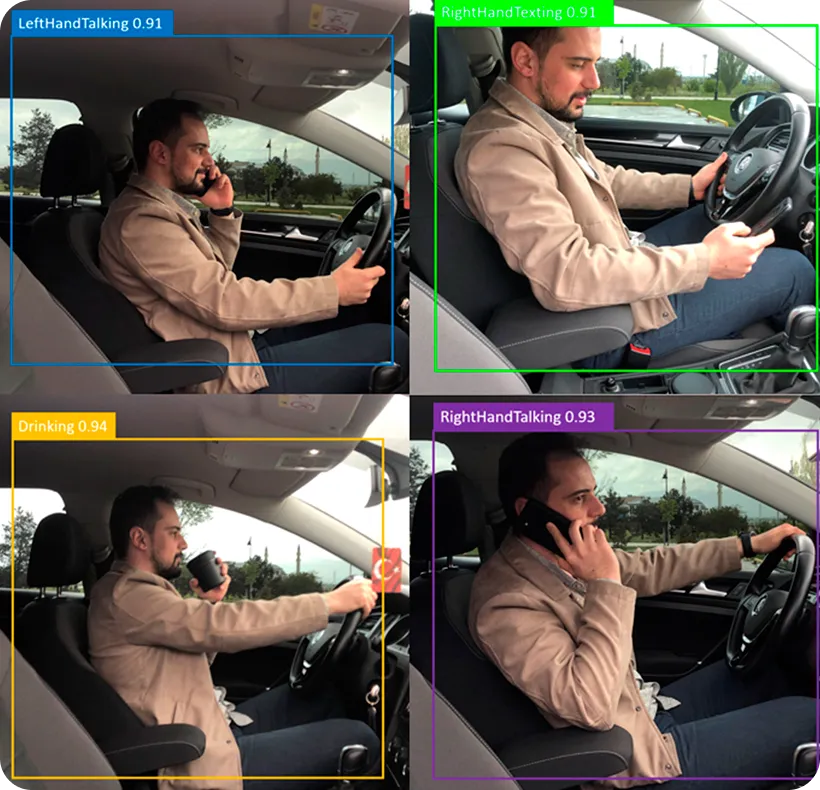

For instance, vision AI models like Ultralytics YOLO11 and Ultralytics YOLO26 support computer vision tasks such as object detection (identifying and locating objects in an image), object tracking (following those objects across frames), and instance segmentation (separating individual objects from the background). These tasks can be applied to monitor a driver’s posture, head position, and overall attentiveness.

In this article, we’ll explore what causes driver inattention and drowsiness, how Vision AI powers driver attention monitoring systems, and how these systems are shaping a safer future for drivers everywhere.

Staying focused behind the wheel requires constant attention. Every second, drivers process road signs, traffic signals, and the movement of surrounding vehicles. When that focus slips, even briefly, the risk of an accident rises sharply.

Studies show that drivers who repeatedly take their eyes off the road or frequently press in-car buttons are more likely to be involved in a crash or near miss, even if the risk does not exactly double.

These findings highlight how easily attention can drift, a problem known as driver inattention. This occurs when a driver’s eyes or mind wander from the road, whether by checking a phone, changing the music, or getting lost in thought. Even a quick two-second glance away can be enough to miss a slowing car or a red light ahead.
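To put a number on that two-second glance, here is a quick back-of-the-envelope sketch. The function name and the example speeds are our own illustrative choices, not figures from a study. It simply converts speed to meters per second and multiplies by the glance duration.

```python
def distance_during_glance(speed_kmh: float, glance_s: float) -> float:
    """Return the meters traveled while the driver's eyes are off the road."""
    meters_per_second = speed_kmh * 1000 / 3600
    return meters_per_second * glance_s


if __name__ == "__main__":
    for speed_kmh in (50, 100, 130):  # illustrative speeds only
        meters = distance_during_glance(speed_kmh, glance_s=2.0)
        print(f"{speed_kmh} km/h for 2 s: about {meters:.1f} m traveled")
```

At 100 km/h, that works out to roughly 55 meters covered while the driver is not watching the road.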

Meanwhile, driver drowsiness develops gradually, often caused by tiredness or lack of sleep. A sleepy driver may blink slowly, nod their head, or loosen their grip on the wheel. As fatigue sets in, reaction times slow, and maintaining control becomes more difficult.

Common signs of driver inattention or drowsiness include slow blinking, head nodding, a loosening grip on the wheel, and a gaze that drifts away from the road.

These signs may start small but become more noticeable over time, especially on long trips or at night. Recognizing them early helps drivers stay alert, focused, and safe on the road.

Even with advanced features like lane-keeping assist, which helps keep the vehicle centered, or adaptive cruise control, which maintains a safe following distance, accidents can still happen if the driver’s attention slips. Technology can enhance safety, but staying alert is still the driver’s responsibility.

To help reduce these risks, newer systems use cameras powered by Vision AI to monitor the driver’s face and eyes. These technologies, known as driver attention monitoring systems, are designed to detect early signs of fatigue or distraction and help keep drivers alert and safe.

When the system notices warning cues, such as slower blinking, head nodding, or gaze drifting away from the road, it typically sends gentle alerts, like a visual or audible warning, prompting the driver to refocus before danger increases.

Driver attention monitoring systems are designed to recognize when a driver begins to lose focus, which can happen gradually without the driver even realizing it. These systems typically use a small camera mounted near the steering wheel or on the dashboard to continuously observe the driver’s face and eyes.

To perform reliably across a wide range of conditions, many systems use infrared cameras that can capture facial details clearly even at night or in low light and poor weather. Because timing is critical in driver attention monitoring, the footage captured by the cameras must be analyzed in real time to detect and respond to signs of fatigue or distraction.
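One way to reason about "real time" is as a per-frame time budget. The sketch below is a minimal illustration under our own assumptions (a hypothetical 30 FPS camera and made-up latency measurements). It is not how any specific production system is tuned.

```python
def frame_budget_ms(fps: float) -> float:
    """Time available to process one frame before the next one arrives."""
    return 1000.0 / fps


def keeps_up(latencies_ms: list[float], fps: float) -> bool:
    """True when the slowest measured frame still fits inside the budget."""
    return max(latencies_ms) <= frame_budget_ms(fps)


if __name__ == "__main__":
    camera_fps = 30.0                          # assumed camera frame rate
    measured_ms = [12.4, 18.9, 25.1, 16.0]     # made-up per-frame latencies
    print(f"Budget per frame: {frame_budget_ms(camera_fps):.1f} ms")
    print("Keeps up with the camera:", keeps_up(measured_ms, camera_fps))
```

Checking the slowest frame rather than the average is a deliberately conservative choice here, since a single long stall is exactly when a warning could arrive late.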

So how do these systems actually work? Cameras capture live video that is analyzed by Vision AI models trained to interpret human posture and facial behavior. These models detect subtle visual cues that reveal how alert or distracted the driver may be.

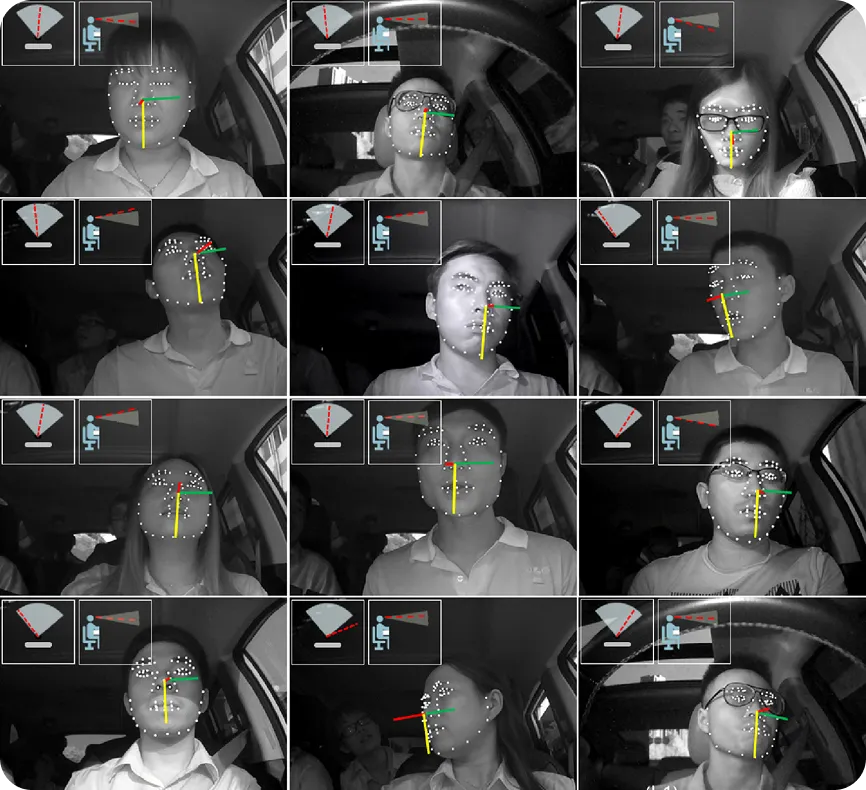

A Vision AI system integrated with these models can detect and track the driver’s face and overall body posture using pose estimation techniques. It identifies key points such as the eyes, nose, mouth, shoulders, and head position, allowing it to monitor movement and alignment even when lighting changes or the driver shifts position.
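To make the idea of key points more concrete, here is a small sketch of how posture cues could be derived once a pose model has produced them. The keypoint dictionary format, the function names, and the confidence cutoff are all hypothetical choices for illustration. A real pose model would supply the coordinates.

```python
import math
from typing import Optional

# Hypothetical keypoint format: name -> (x_pixels, y_pixels, confidence)
Keypoints = dict[str, tuple[float, float, float]]


def shoulder_tilt_deg(kp: Keypoints, min_conf: float = 0.5) -> Optional[float]:
    """Angle of the shoulder line relative to horizontal, in degrees."""
    a, b = kp["left_shoulder"], kp["right_shoulder"]
    if min(a[2], b[2]) < min_conf:
        return None  # skip frames where the shoulders are not reliably seen
    (x1, y1, _), (x2, y2, _) = sorted((a, b))  # order the points left to right
    return math.degrees(math.atan2(y2 - y1, x2 - x1))


def head_offset_ratio(kp: Keypoints, min_conf: float = 0.5) -> Optional[float]:
    """Horizontal nose offset from the shoulder midpoint, in shoulder widths."""
    nose, ls, rs = kp["nose"], kp["left_shoulder"], kp["right_shoulder"]
    if min(nose[2], ls[2], rs[2]) < min_conf:
        return None
    width = abs(ls[0] - rs[0])
    if width == 0:
        return None
    return (nose[0] - (ls[0] + rs[0]) / 2) / width


if __name__ == "__main__":
    leaning = {
        "nose": (380.0, 170.0, 0.9),
        "left_shoulder": (440.0, 360.0, 0.9),
        "right_shoulder": (200.0, 300.0, 0.9),
    }
    print("Shoulder tilt (deg):", round(shoulder_tilt_deg(leaning), 1))
    print("Head offset (shoulder widths):", head_offset_ratio(leaning))
```

Normalizing the head offset by shoulder width keeps the measure comparable when the driver sits closer to or farther from the camera.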

Once the driver’s face is detected, the system estimates their head’s orientation to determine where the driver is looking, whether straight ahead at the road, downward toward a device, or off to the side. The system then focuses on the eyes, analyzing blink frequency, blink duration, and gaze direction to assess attention.
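The two steps described above can be sketched in a few lines. This is a simplified illustration, assuming an upstream model already provides head yaw and pitch in degrees and a per-frame eye openness score between 0 and 1. The thresholds, the sign convention (negative pitch means looking down), and the function names are our own assumptions.

```python
def gaze_zone(yaw_deg: float, pitch_deg: float,
              yaw_limit: float = 20.0, pitch_limit: float = 15.0) -> str:
    """Coarsely classify where the head is pointing."""
    if pitch_deg < -pitch_limit:   # assumed convention: negative pitch = down
        return "down"
    if abs(yaw_deg) > yaw_limit:
        return "side"
    return "road"


def blink_durations(openness: list[float], fps: float,
                    closed_below: float = 0.2) -> list[float]:
    """Return the length in seconds of each run of closed-eye frames."""
    durations, run = [], 0
    for value in openness:
        if value < closed_below:
            run += 1
        elif run:
            durations.append(run / fps)
            run = 0
    if run:  # eyes were still closed when the clip ended
        durations.append(run / fps)
    return durations


if __name__ == "__main__":
    # Made-up clip at 30 FPS: one quick blink, then one long closure.
    clip = [1.0] * 10 + [0.1] * 3 + [1.0] * 10 + [0.1] * 15 + [1.0] * 2
    durations = blink_durations(clip, fps=30.0)
    per_minute = len(durations) / (len(clip) / 30.0) * 60
    print("Gaze zone:", gaze_zone(yaw_deg=5.0, pitch_deg=-25.0))
    print("Blink durations (s):", durations)
    print("Blinks per minute:", round(per_minute, 1))
```

Blink duration tends to be the more telling number here: a normal blink is brief, while a closure lasting half a second or more is the kind of cue the article describes as a sign of tiredness.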

Slow blinking, closed eyes, or eyes that drift away from the road can indicate tiredness or distraction. Some systems also use machine learning to track posture and movement patterns over time, comparing them with normal, attentive behavior to identify gradual signs such as head nodding, slouching, or slower reactions.
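Tracking patterns over time can be as simple as a rolling window. The sketch below uses a PERCLOS-style measure (the share of recent frames in which the eyes are closed), which is a technique we are swapping in for illustration rather than something the systems described here are confirmed to use. The class name, window size, and thresholds are hypothetical.

```python
from collections import deque


class ClosureMonitor:
    """Rolling share of frames with closed eyes over a fixed window."""

    def __init__(self, window_frames: int = 900, closed_below: float = 0.2,
                 alert_ratio: float = 0.15) -> None:
        self.closed_below = closed_below    # openness below this = closed
        self.alert_ratio = alert_ratio      # assumed drowsiness threshold
        self.window = deque(maxlen=window_frames)

    def update(self, openness: float) -> float:
        """Add one frame and return the current closed-eye ratio."""
        self.window.append(openness < self.closed_below)
        return self.closed_ratio()

    def closed_ratio(self) -> float:
        return sum(self.window) / len(self.window) if self.window else 0.0

    def is_drowsy(self) -> bool:
        """Only judge once the window is full, to avoid early false alarms."""
        full = len(self.window) == self.window.maxlen
        return full and self.closed_ratio() >= self.alert_ratio


if __name__ == "__main__":
    monitor = ClosureMonitor(window_frames=10)
    for score in [1.0] * 8 + [0.1] * 2:     # made-up openness scores
        ratio = monitor.update(score)
    print("Closed-eye ratio:", ratio, "| drowsy:", monitor.is_drowsy())
```

Because the window has a fixed length, old frames fall out automatically, so the ratio reflects recent behavior rather than the whole trip.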

When early signs of fatigue or inattention are detected, the system sends gentle alerts to help the driver refocus. These alerts can take the form of a soft chime, a vibration in the steering wheel, or a reminder displayed on the dashboard. If the driver does not respond, the warnings become more noticeable until the driver starts paying attention to the road again.
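The escalation behavior described above maps naturally onto a small state machine. Here is a minimal sketch, assuming a per-frame "attentive" flag from the vision model. The alert levels, their ordering, and the three-second step are illustrative assumptions, not the behavior of any particular vehicle.

```python
from enum import IntEnum


class AlertLevel(IntEnum):
    NONE = 0
    REMINDER = 1    # message on the dashboard
    CHIME = 2       # soft audible alert
    VIBRATION = 3   # steering wheel vibration


class AlertEscalator:
    """Raise the alert level the longer the driver stays inattentive."""

    def __init__(self, step_s: float = 3.0) -> None:
        self.step_s = step_s          # assumed seconds between escalations
        self.inattentive_s = 0.0

    def update(self, attentive: bool, dt_s: float) -> AlertLevel:
        if attentive:
            self.inattentive_s = 0.0  # driver refocused: clear all alerts
            return AlertLevel.NONE
        self.inattentive_s += dt_s
        level = 1 + int(self.inattentive_s // self.step_s)
        return AlertLevel(min(level, int(AlertLevel.VIBRATION)))


if __name__ == "__main__":
    escalator = AlertEscalator()
    for second in range(1, 9):
        level = escalator.update(attentive=False, dt_s=1.0)
        print(f"t={second}s -> {level.name}")
    print("After refocusing ->", escalator.update(True, 1.0).name)
```

Resetting the timer as soon as the driver looks back at the road keeps the alerts from lingering once attention has returned.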

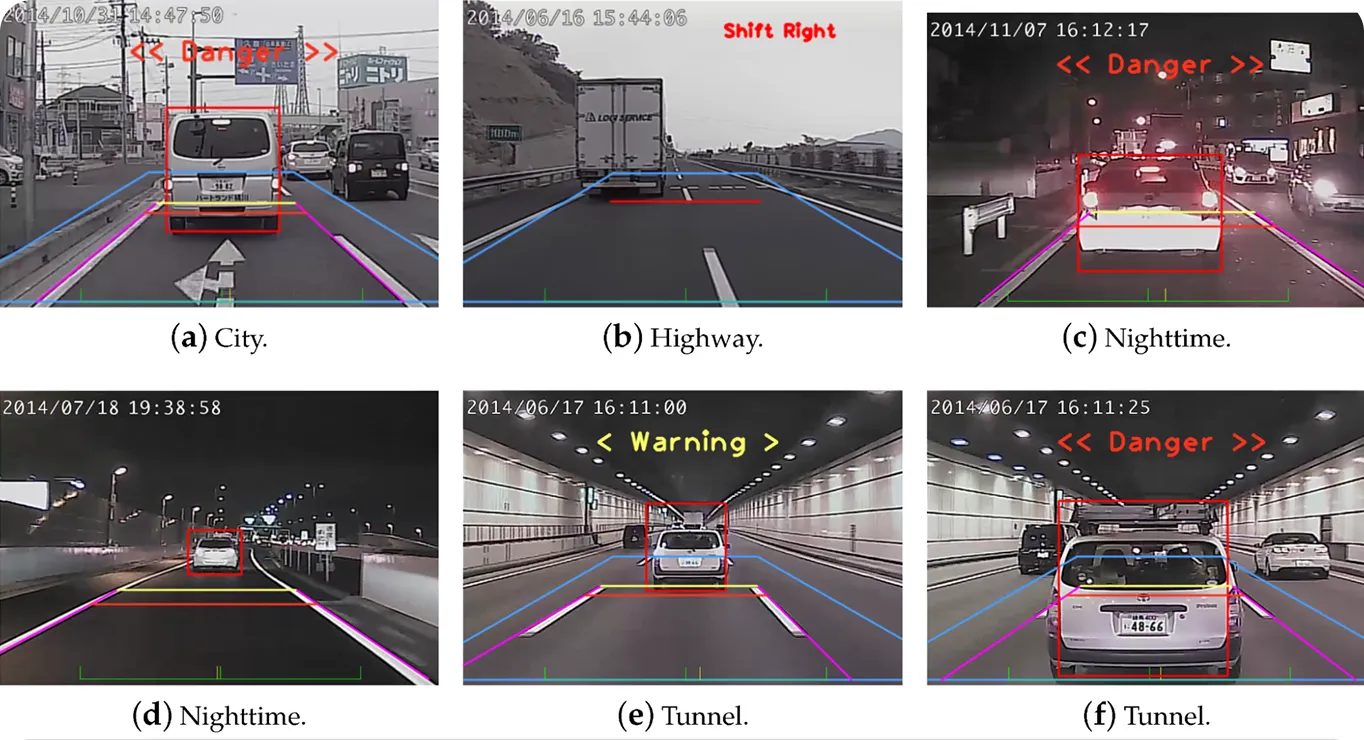

In addition to monitoring driver attention, Vision AI can enhance a range of safety and driver assistance features found in today’s vehicles. For example, when the system detects signs of distraction or drowsiness, it can share that information with other onboard systems, making it possible for the car to adjust its behavior and help maintain a safe, steady drive.
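As a rough illustration of what sharing driver state with another onboard system might look like, the sketch below widens a cruise control following gap when the driver appears distracted or drowsy. Everything here is hypothetical, including the `DriverState` fields, the function name, and the added time margins. Real vehicles define this behavior through their own engineering and safety processes.

```python
from dataclasses import dataclass


@dataclass
class DriverState:
    """Hypothetical summary published by the driver monitoring system."""
    drowsy: bool
    distracted: bool


def following_gap_s(base_gap_s: float, state: DriverState) -> float:
    """Return a time gap to the car ahead, widened for a less alert driver."""
    gap = base_gap_s
    if state.distracted:
        gap += 0.5   # assumed extra margin for distraction
    if state.drowsy:
        gap += 1.0   # assumed extra margin for drowsiness
    return gap


if __name__ == "__main__":
    alert = DriverState(drowsy=False, distracted=False)
    tired = DriverState(drowsy=True, distracted=False)
    print("Alert driver gap (s):", following_gap_s(1.8, alert))
    print("Drowsy driver gap (s):", following_gap_s(1.8, tired))
```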

Here are a few other systems designed to assist a driver:

Driver attention monitoring systems are not just experimental innovations; they are already being put into production. Fatigue and distraction are everyday challenges for long-haul truck drivers, who often spend long hours on the road with few breaks.

Vision AI is now helping to tackle this issue by monitoring a driver’s alertness in real time. A good example is the upgraded Driver Alert Support system introduced by Volvo Trucks. It combines an inward-facing eye-tracking camera with a forward-facing camera that monitors lane position and driving patterns. Beyond this, other automakers are integrating driver-monitoring systems powered by computer vision and AI to help keep drivers alert and focused.

Here are some key advantages of using driver attention monitoring systems:

Staying alert behind the wheel is essential for safe driving. Even a brief moment of distraction or fatigue can raise the risk of an accident.

Driver attention monitoring systems powered by Vision AI help reduce that risk by tracking visual cues such as eye movements, blink patterns, and head position. By recognizing early signs of drowsiness or inattention, these systems can alert drivers in time to refocus and stay safe on the road.

Explore more about AI by joining our community and visiting our GitHub repository. Check out our solution pages to read about AI in robotics and computer vision in healthcare. Discover our licensing options and start building with Vision AI today!