Learn how pose estimation works, its real-world applications, and how models like Ultralytics YOLO11 enable machines to interpret body movement and posture.

When you see someone slouched over or standing tall with their shoulders back, it’s immediately clear whether they have poor or confident posture. No one needs to explain it to you. That’s because, over time, we’ve naturally learned to interpret body language.

Through experience and observation, our brains have become very good at recognizing the posture of various objects, including humans. Thanks to recent advancements in artificial intelligence (AI) and computer vision, a field that enables machines to interpret visual information from the world, machines are now beginning to learn and replicate this ability as well.

Pose estimation is a computer vision task that helps machines figure out the position and orientation of a person or object by looking at images or video. It does this by identifying key points on the body, such as joints, to understand how someone, or even something, is positioned and moving.

This technology is widely used across fields like fitness, healthcare, and animation. In workplace environments, for example, it can be used to monitor employee posture and support safety and wellness initiatives. Computer vision models like Ultralytics YOLO11 make this possible by estimating human poses in real time.

In this article, we’ll take a closer look at pose estimation and how it works, along with real-world use cases where it’s making a difference. Let’s get started!

Research into pose estimation started back in the late 1960s and 1970s. Over the years, approaches to this computer vision task have shifted from basic math and geometry to more advanced methods driven by artificial intelligence.

Initially, techniques depended on fixed camera angles and known reference points. Later, they evolved to include 3D models and feature matching. Today, deep learning models like YOLO11 can detect body positions in real time from images or video, making pose estimation faster and more accurate than ever before.

As technology improved, researchers saw the potential of monitoring and tracking the poses of various objects, especially humans and animals. Pose estimation matters because it enables AI tools to understand and measure posture and movement in ways that weren’t possible before.

For example, it allows computers to recognize gestures for hands-free interaction, analyze athletes’ movements to improve performance, power realistic animations in video games, and even support healthcare by tracking patients’ recovery progress.

Pose estimation is different from other computer vision tasks like object detection and instance segmentation. These tasks focus primarily on identifying and locating objects within an image.

Object detection, for instance, draws bounding boxes around items like people, vehicles, or animals to indicate their presence and position. Instance segmentation takes this a step further by outlining the precise shape of each object at the pixel level.

However, both of these methods are mainly concerned with what the object is and where it is. They don’t provide any information about how the object is positioned or what it might be doing. That’s where pose estimation becomes crucial.

By identifying key points on the body, such as elbows, knees, or even a tail, pose estimation can interpret posture and movement. This allows for a deeper understanding of actions, gestures, and body dynamics, including motion in 3D space.
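To make the contrast concrete, here is a minimal sketch of what a pose result adds on top of a detection box. It assumes the common 17-keypoint COCO layout for human poses. The `Pose` class and the sample coordinates are hypothetical and made up for illustration; they are not part of any library.

```python
from dataclasses import dataclass

# The 17 COCO keypoint names, in their standard order.
COCO_KEYPOINTS = [
    "nose", "left_eye", "right_eye", "left_ear", "right_ear",
    "left_shoulder", "right_shoulder", "left_elbow", "right_elbow",
    "left_wrist", "right_wrist", "left_hip", "right_hip",
    "left_knee", "right_knee", "left_ankle", "right_ankle",
]


@dataclass
class Pose:
    """Hypothetical container: a box plus one (x, y, confidence) triple per keypoint."""

    box: tuple       # (x1, y1, x2, y2) in pixels: all that object detection provides
    keypoints: list  # 17 (x, y, confidence) triples: what pose estimation adds

    def named(self, min_conf=0.5):
        """Return {name: (x, y)} for keypoints at or above min_conf."""
        return {
            name: (x, y)
            for name, (x, y, conf) in zip(COCO_KEYPOINTS, self.keypoints)
            if conf >= min_conf
        }


# Made-up example: only the nose and left shoulder are confidently located.
kpts = [(0.0, 0.0, 0.0)] * 17
kpts[0] = (120.0, 40.0, 0.95)
kpts[5] = (100.0, 90.0, 0.88)
pose = Pose(box=(60, 20, 180, 300), keypoints=kpts)
print(pose.named())
```

The box alone says where the person is; the named keypoints are what let downstream code reason about posture.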

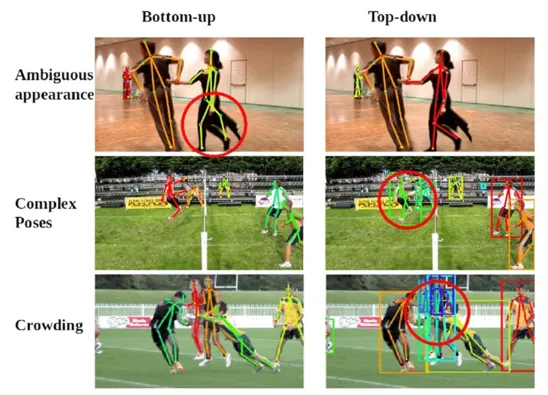

Pose estimation models generally follow two main approaches: bottom-up and top-down. In the bottom-up approach, the model first detects individual key points, like elbows, knees, or shoulders, and then groups them to figure out which person or object they belong to. In contrast, the top-down approach starts by detecting each object first (such as a person in the image) and then locates the key points for that specific object.
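The grouping step of the bottom-up approach can be illustrated with a toy sketch. It assumes the key points have already been detected and pairs each shoulder with the nearest unclaimed elbow. Real bottom-up models rely on learned association cues rather than raw distance, so treat `group_by_distance` as a hypothetical stand-in, not an actual algorithm from any model.

```python
import math


def group_by_distance(shoulders, elbows):
    """Greedily pair each shoulder with the closest unclaimed elbow.

    Toy stand-in for the grouping step of a bottom-up model.
    Returns {shoulder_index: elbow_index}.
    """
    # Every candidate pair, shortest distance first.
    candidates = sorted(
        (math.dist(s, e), i, j)
        for i, s in enumerate(shoulders)
        for j, e in enumerate(elbows)
    )
    pairs, used_shoulders, used_elbows = {}, set(), set()
    for _, i, j in candidates:
        if i not in used_shoulders and j not in used_elbows:
            pairs[i] = j
            used_shoulders.add(i)
            used_elbows.add(j)
    return pairs


# Made-up detections for two people standing apart (pixel coordinates).
shoulders = [(100, 100), (300, 110)]
elbows = [(310, 170), (95, 160)]
print(group_by_distance(shoulders, elbows))
```

A top-down pipeline never needs this step, because each key point is predicted inside a box that already belongs to one person.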

Some newer models, such as YOLO11, blend the benefits of both approaches. They detect people and estimate their poses together in a single, streamlined pass. This keeps the speed associated with bottom-up methods without needing a separate grouping step, while retaining the per-person precision of top-down systems.
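As a rough sketch of what this single pass looks like in practice, the function below runs a pretrained YOLO11 pose model through the Ultralytics Python package. It assumes the package is installed and that the `yolo11n-pose.pt` weights can be downloaded on first use; the function name `estimate_poses` is a hypothetical wrapper, not part of the library.

```python
def estimate_poses(image_path, weights="yolo11n-pose.pt"):
    """Run a pretrained YOLO11 pose model on one image.

    Returns one list of [x, y] pixel coordinates per detected person.
    """
    # Imported lazily so the function can be defined without the package installed.
    from ultralytics import YOLO

    model = YOLO(weights)             # loads (and, if needed, downloads) the weights
    results = model(image_path)       # one Results object per input image
    keypoints = results[0].keypoints  # boxes and key points come from the same pass
    return keypoints.xy.tolist()      # nested list: people x key points x 2
```

Calling `estimate_poses("path/to/image.jpg")` with an image of your own should return an empty list when nobody is detected, and one list of key point coordinates per person otherwise.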

As we walk through how pose estimation models work, you might be wondering: how do these models actually learn to estimate the pose of different objects? That’s where the idea of custom training enters the picture.

Custom training means teaching a model to recognize specific key points using your own data. Since building a model from scratch requires a large number of labeled images and significant time, many people opt for transfer learning. This involves starting with a model that has already been trained on a large dataset, such as the YOLO11 pose estimation model, which is pre-trained on the COCO-Pose dataset, and then fine-tuning it with your own data for a specific task or use case.

Let’s say you’re working with yoga poses. You can fine-tune YOLO11 using images where each pose is labeled with key points specific to that activity. To do this, you’ll need a custom dataset of annotated images that the model can learn from.
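To give a sense of what one annotation looks like, here is a minimal sketch that converts a pixel-space box and key points into a single text label line. It assumes the plain-text pose label layout described in the Ultralytics dataset documentation: class index, normalized box center and size, then a normalized x, y, and visibility flag per key point. The helper `to_pose_label` and the two-key-point example are hypothetical.

```python
def to_pose_label(class_id, box, keypoints, img_w, img_h):
    """Format one annotated object as a YOLO-style pose label line.

    box: (x1, y1, x2, y2) in pixels.
    keypoints: list of (x, y, visibility) in pixels, where visibility is
    0 (not labeled), 1 (labeled but hidden), or 2 (labeled and visible).
    """
    x1, y1, x2, y2 = box
    box_values = [
        (x1 + x2) / 2 / img_w,  # box center x, normalized to 0..1
        (y1 + y2) / 2 / img_h,  # box center y, normalized to 0..1
        (x2 - x1) / img_w,      # box width, normalized
        (y2 - y1) / img_h,      # box height, normalized
    ]
    fields = [str(class_id)] + [f"{v:.6f}" for v in box_values]
    for x, y, vis in keypoints:
        fields += [f"{x / img_w:.6f}", f"{y / img_h:.6f}", str(vis)]
    return " ".join(fields)


# Made-up annotation with two key points on a 400x500 image.
line = to_pose_label(
    0, (100, 50, 300, 450), [(200, 100, 2), (150, 250, 1)], img_w=400, img_h=500
)
print(line)
```

A real dataset would have one such line per person or object in each image, with the same number of key points on every line.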

During training, you can adjust settings like batch size (the number of images processed at once), learning rate (how large a step the model takes each time it updates its weights), and epochs (how many times the model cycles through the dataset) to improve accuracy. This makes it much easier to build pose estimation models tailored to your specific needs.
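These settings map onto training arguments in the Ultralytics Python package roughly as sketched below. The argument names `data`, `epochs`, `batch`, and `lr0` follow the package's training settings; the file name `yoga-pose.yaml`, the chosen values, and the helper functions are hypothetical placeholders.

```python
def build_train_args(data_yaml, epochs=100, batch=16, lr0=0.01):
    """Collect the settings discussed above into keyword arguments for training."""
    return {
        "data": data_yaml,  # dataset config file (hypothetical path)
        "epochs": epochs,   # full passes over the dataset
        "batch": batch,     # images processed per training step
        "lr0": lr0,         # initial learning rate
    }


def fine_tune(train_args, weights="yolo11n-pose.pt"):
    """Fine-tune pretrained pose weights on a custom dataset (transfer learning)."""
    # Imported lazily; requires the ultralytics package to be installed.
    from ultralytics import YOLO

    model = YOLO(weights)  # start from the pretrained pose model
    return model.train(**train_args)


args = build_train_args("yoga-pose.yaml", epochs=50, batch=8)
print(args)
```

Passing `args` to `fine_tune` would start a training run, provided the dataset file exists and describes your annotated images.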

Now that we’ve discussed what pose estimation is and how it works, let’s take a closer look at some of its real-world use cases.

Pose estimation is gradually becoming a reliable tool in the healthcare industry, especially in physical therapy. Using AI and computer vision, these systems can track posture and movements in real time and provide feedback, similar to what a physiotherapist would offer.

For example, a patient recovering from knee surgery can use a pose estimation system to make sure they’re doing their rehab exercises correctly. The system can spot any incorrect movements and offer suggestions for improvement, helping the patient stay on track and avoid injury.

Beyond rehabilitation, pose estimation is also making its way into fitness apps. For example, someone working out at home can use the app to check their form during exercises. The app can give real-time feedback, like adjusting the angle of a squat or making sure your back is straight during a deadlift. This helps users improve their form and prevent injuries without needing a trainer.
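Form feedback of this kind often comes down to measuring the angle at a joint from three key points. The sketch below computes the knee angle from hip, knee, and ankle coordinates and applies a simple depth rule. The 90-degree threshold, the function names, and the sample coordinates are assumptions for illustration, not values taken from any particular app.

```python
import math


def joint_angle(a, b, c):
    """Angle in degrees at point b, formed by the segments b->a and b->c."""
    v1 = (a[0] - b[0], a[1] - b[1])
    v2 = (c[0] - b[0], c[1] - b[1])
    dot = v1[0] * v2[0] + v1[1] * v2[1]
    norm = math.hypot(*v1) * math.hypot(*v2)
    if norm == 0:
        raise ValueError("key points must not coincide")
    # Clamp to guard against floating-point drift outside [-1, 1].
    cos_angle = max(-1.0, min(1.0, dot / norm))
    return math.degrees(math.acos(cos_angle))


def squat_feedback(hip, knee, ankle, target_deg=90.0):
    """Hypothetical rule: the knee angle should reach target_deg or less."""
    angle = joint_angle(hip, knee, ankle)
    return "good depth" if angle <= target_deg else "go lower"


# Made-up pixel coordinates (image y grows downward).
standing = squat_feedback(hip=(200, 200), knee=(200, 300), ankle=(200, 400))
deep = squat_feedback(hip=(100, 310), knee=(200, 300), ankle=(200, 400))
print(standing, "|", deep)
```

In a real app, the three points would come from the pose model's output for each video frame, and low-confidence key points would need to be skipped before computing angles.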

Pose estimation has changed the way motion capture works in entertainment, making it simpler and more accessible. In the past, motion capture required placing markers on a person’s body and tracking them with special cameras, which could be tricky and expensive.

Now, with advances in AI and computer vision, we can use regular cameras and algorithms to track body movements without markers, making the process cheaper and more efficient, even in real time.

A great example of this is Disney's AR (Augmented Reality) Poser. This fun tool lets you take a photo with your phone and have a digital character copy your pose in augmented reality. It works by analyzing your pose in the picture and matching it to a 3D character, creating a fun, personalized AR selfie.

Studying animal behavior helps scientists understand how animals communicate, find mates, care for their young, and live in groups. This knowledge is vital for protecting wildlife and gaining a deeper understanding of the natural world.

Pose estimation simplifies this process by tracking animal movements and posture using images and videos, without attaching sensors or tags to the animals. These systems can automatically monitor their poses, providing insights into behaviors like grooming, playing, or fighting.

An interesting example of this is scientists using pose estimation to study ape behavior. In fact, researchers have compiled datasets like OpenApePose, which contains over 71,000 labeled images from six ape species.

Here are some of the key benefits that pose estimation can bring to various industries:

While the advantages of pose estimation are clear across various fields, there are also some challenges to consider. Here are a few key limitations to keep in mind:

Pose estimation has come a long way from its early days, evolving from systems that used markers to impactful tools driven by deep learning models like YOLO11. Whether it's improving physical therapy, powering interactive AR experiences, or helping with wildlife research, pose estimation is changing the way machines understand movement and posture. As technology keeps advancing, addressing its limitations will be key to unlocking even more practical uses and making machines better at understanding how we and other living beings move.

Curious about AI? Explore our GitHub repository, connect with our community, and check out our licensing options to jumpstart your computer vision project. Learn more about innovations like AI in retail and computer vision in the logistics industry on our solutions pages.