Discover why pruning and quantization are essential for optimizing computer vision models and enabling faster performance on edge devices.

Discover why pruning and quantization are essential for optimizing computer vision models and enabling faster performance on edge devices.

Edge devices are becoming increasingly common with advancing technology. From smartwatches that track your heart rate to aerial drones that monitor streets, edge systems can process data in real time locally within the device itself.

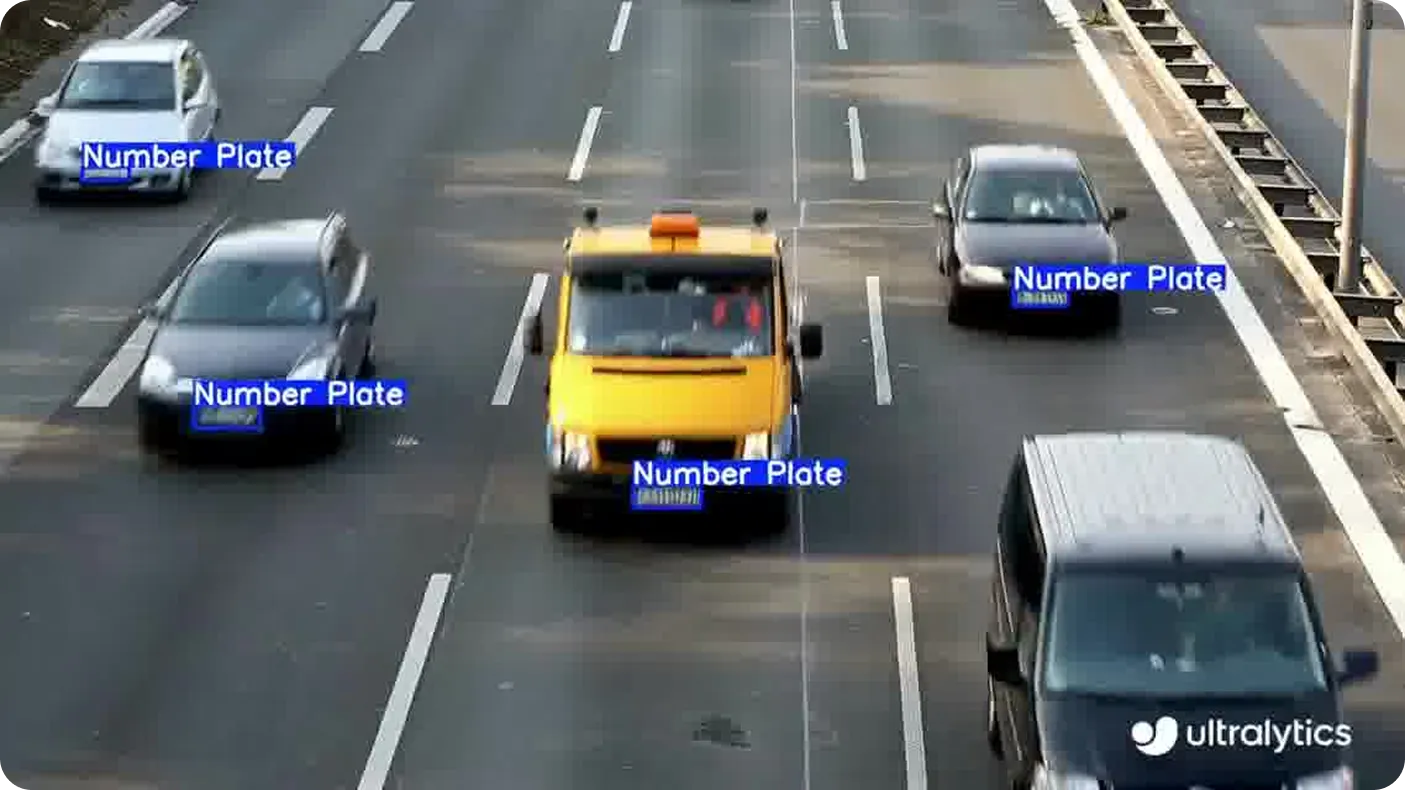

This method is often faster and more secure than sending data to the cloud, especially for applications involving personal data, such as license plate detection or gesture tracking. These are examples of computer vision, a branch of artificial intelligence (AI) that enables machines to interpret and understand visual information.

However, an important consideration is that such applications require Vision AI models capable of handling heavy computation, using minimal resources, and operating independently. Most computer vision models are developed for high-performance systems, making them less suitable for direct deployment on edge devices.

To bridge this gap, developers often apply targeted optimizations that adapt the model to run efficiently on smaller hardware. These adjustments are critical for real-world edge deployments, where memory and processing power are limited.

Interestingly, computer vision models like Ultralytics YOLO11 are already designed with edge efficiency in mind, making them great for real-time tasks. However, their performance can be further enhanced using model optimization techniques such as pruning and quantization, enabling even faster inference and lower resource usage on constrained devices.

In this article, we’ll take a closer look at what pruning and quantization are, how they work, and how they can help YOLO models perform in real-world edge deployments. Let’s get started!

When preparing Vision AI models for deployment on edge devices, one of the key goals is to make the model lightweight and reliable without sacrificing performance. This often involves reducing the model’s size and computational demands so it can operate efficiently on hardware with limited memory, power, or processing capacity. Two common ways to do this are pruning and quantization.

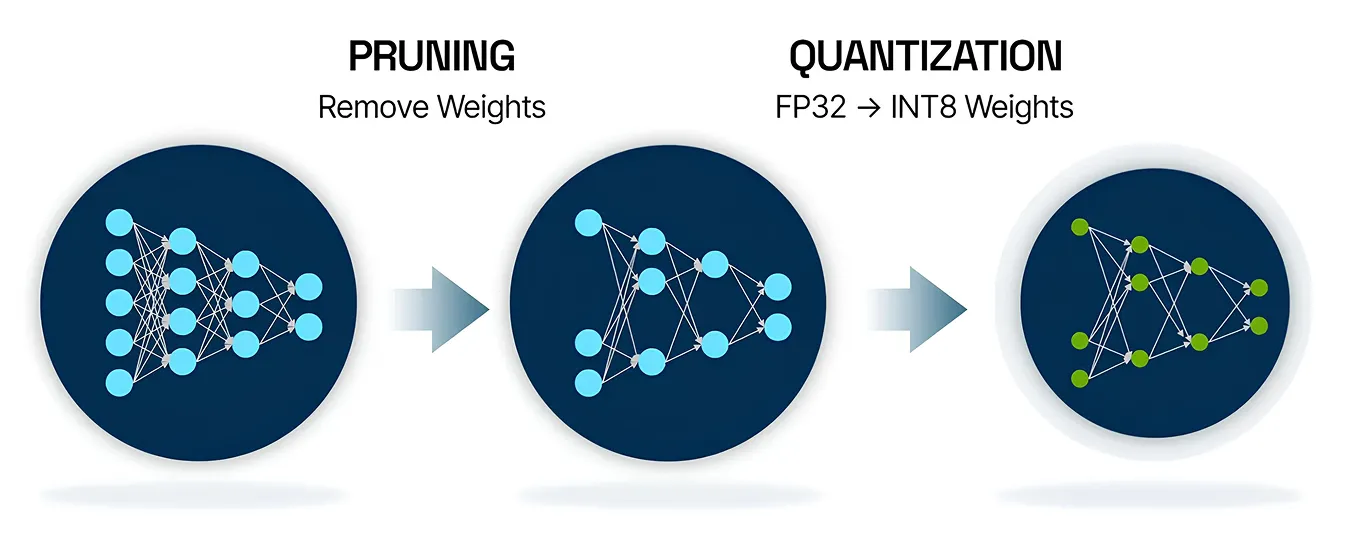

Pruning is an AI model optimization technique that helps make neural networks smaller and more efficient. In many cases, parts of a model, such as certain connections or nodes, don’t contribute much to its final predictions. Pruning works by identifying and removing these less important parts, which reduces the model’s size and speeds up its performance.

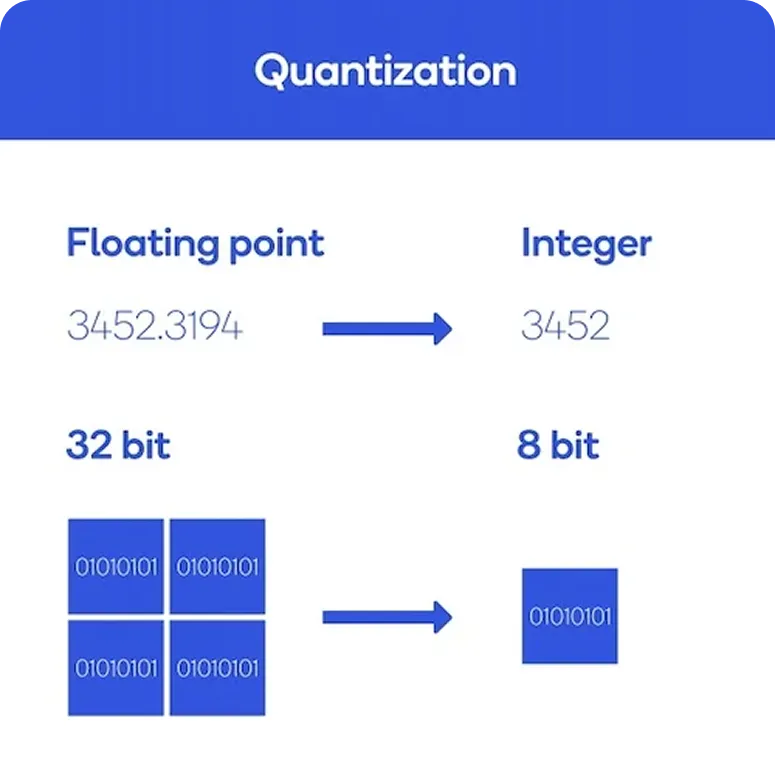

On the other hand, quantization is an optimization technique that reduces the precision of the numbers a model uses. Instead of relying on high-precision 32-bit floating-point numbers, the model switches to smaller, more efficient formats like 8-bit integers. This change helps lower memory usage and speeds up inference, the process where the model makes predictions.

Now that we have a better understanding of what pruning and quantization are, let’s walk through how they both work.

Pruning is done using a process known as sensitivity analysis. It identifies which parts of the neural network models, such as certain weights, neurons, or channels, contribute the least to the final output prediction. These parts can be removed with minimal effect on accuracy. After pruning, the model is usually retrained to fine-tune its performance. This cycle can be repeated to find the right balance between its size and accuracy.

Meanwhile, model quantization focuses on how the model handles data. It begins with calibration, where the model runs on sample data to learn the range of values it needs to process. Those values are then converted from 32-bit floating point to lower precision formats like 8-bit integers.

There are several tools available that make it easier to use pruning and quantization in real-world AI projects. Most AI frameworks, such as PyTorch and TensorFlow, include built-in support for these optimization techniques, allowing developers to integrate them directly into the model deployment process.

Once a model is optimized, tools like ONNX Runtime can help run it efficiently across various hardware platforms like servers, desktops, and edge devices. Also, Ultralytics offers integrations that allow YOLO models to be exported in formats suitable for quantization, making it easier to reduce model size and boost performance.

Ultralytics YOLO models like YOLO11 are widely recognized for their fast, single-step object detection, making them ideal for real-time Vision AI tasks. They are already designed to be lightweight and efficient enough for edge deployment. However, the layers responsible for processing visual features, called convolutional layers, can still demand considerable computing power during inference.

You might wonder: if YOLO11 is already optimized for edge use, why does it need further optimization? Simply put, not all edge devices are the same. Some run on very minimal hardware, like tiny embedded processors that consume less power than a standard LED light bulb.

In these cases, even a streamlined model like YOLO11 needs additional optimization to guarantee smooth, reliable performance. Techniques like pruning and quantization help reduce the model’s size and speed up inference without significantly impacting accuracy, making them ideal for such constrained environments.

To make it easier to apply these optimization techniques, Ultralytics supports various integrations that can be used to export YOLO models into multiple formats like ONNX, TensorRT, OpenVINO, CoreML, and PaddlePaddle. Each format is designed to work well with specific types of hardware and deployment environments.

For example, ONNX is often used in quantization workflows due to its compatibility with a wide range of tools and platforms. TensorRT, on the other hand, is highly optimized for NVIDIA devices and supports low-precision inference using INT8, making it ideal for high-speed deployment on edge GPUs.

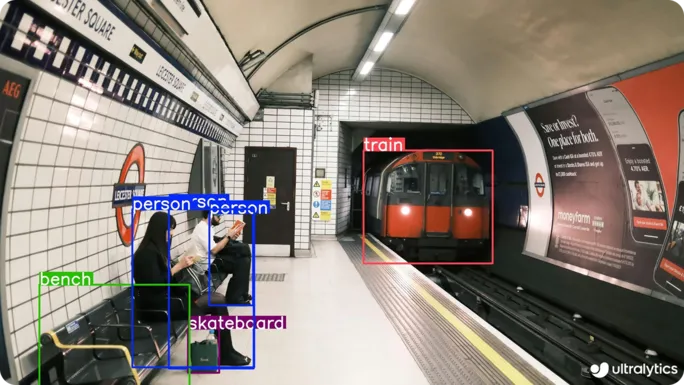

As computer vision continues to expand into various real-world applications, optimized YOLO models make it possible to run tasks like object detection, instance segmentation, and object tracking on smaller, faster hardware. Next, let’s discuss a couple of use cases where pruning and quantization make these computer vision tasks more efficient and practical.

Many industrial spaces, as well as public areas, depend on real-time monitoring to stay safe and secure. Places like transit stations, manufacturing sites, and large outdoor facilities need Vision AI systems that can detect people or vehicles quickly and accurately. Often, these locations operate with limited connectivity and hardware constraints, which makes it difficult to deploy large models.

In such cases, an optimized Vision AI model like YOLO11 is a great solution. Its compact size and fast performance make it perfect for running on low-power edge devices, such as embedded cameras or smart sensors. These models can process visual data directly on the device, enabling real-time detection of safety violations, unauthorized access, or abnormal activity, without relying on constant cloud access.

Construction sites are fast-paced and unpredictable environments, filled with heavy machinery, moving workers, and constant activity. Conditions can change quickly due to shifting schedules, equipment movement, or even sudden changes in weather. In such a dynamic setting, worker safety can feel like a continuous challenge.

Real-time monitoring plays a crucial role, but traditional systems often rely on cloud access or expensive hardware that may not be practical on-site. This is where models like YOLO11 can be impactful. YOLO11 can be optimized to run on small, efficient edge devices that work directly at the site without needing an internet connection.

For instance, consider a large construction site such as a highway expansion that spans several acres. In this type of setting, manually tracking every vehicle or piece of equipment can be difficult and time-consuming. A drone equipped with a camera and an optimized YOLO11 model can help by automatically detecting and following vehicles, monitoring traffic flow, and identifying safety issues like unauthorized access or unsafe driving behavior.

Here are some key advantages that computer vision model optimization methods like pruning and quantization offer:

While pruning and quantization offer many advantages, they also come with certain trade-offs that developers should consider when optimizing models. Here are some limitations to keep in mind:

Pruning and quantization are useful techniques that help YOLO models perform better on edge devices. They reduce the size of the model, lower its computing needs, and speed up predictions, all without a noticeable loss in accuracy.

These optimization methods also give developers the flexibility to adjust models for different types of hardware without needing to rebuild them completely. With some tuning and testing, it becomes easier to apply Vision AI in real-world situations.

Join our growing community! Explore our GitHub repository to learn more about AI. Ready to start your computer vision projects? Check out our licensing options. Discover AI in agriculture and Vision AI in healthcare by visiting our solutions pages!