Learn how image matching in Vision AI works and explore the core technologies that help machines detect, compare, and understand visual data.

Learn how image matching in Vision AI works and explore the core technologies that help machines detect, compare, and understand visual data.

When you look at two pictures of the same object, such as a painting and a photograph of a car, it is easy to notice what they have in common. For machines, however, this isn’t so straightforward.

To make such comparisons, machines rely on computer vision, a branch of artificial intelligence (AI) that helps them interpret and understand visual information. Computer vision enables systems to detect objects, understand scenes, and extract patterns from images or videos.

In particular, some visual tasks go beyond analyzing a single image. They involve comparing images to find similarities, spot differences, or track changes over time.

Vision AI spans a wide set of techniques, and one essential capability, known as image matching, focuses on identifying similarities between images, even when lighting, angles, or backgrounds vary. This technique can be used in various applications, including robotics, augmented reality, and geo-mapping.

In this article, we’ll explore what image matching is, its core techniques, and some of its real-world applications. Let’s get started!

Image matching makes it possible for a computer system to understand if two images contain similar content. Humans can do this intuitively by noticing shapes, colors, and patterns.

Computers, on the other hand, rely on numerical data. They analyze images by investigating each pixel, which is the smallest unit of a digital image.

Every image is stored as a grid of pixels, and each pixel typically holds values for red, green, and blue (RGB). These values can change when an image is rotated, resized, viewed from a different angle, or captured under different lighting conditions. Because of these variations, comparing images pixel-by-pixel is often unreliable.

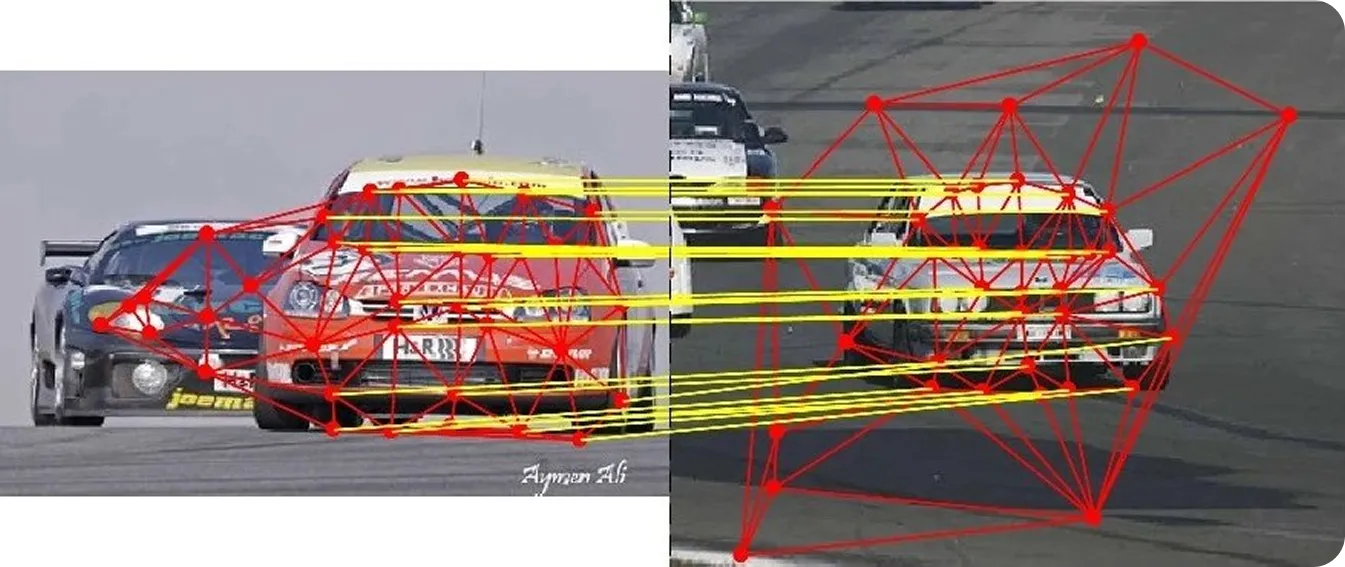

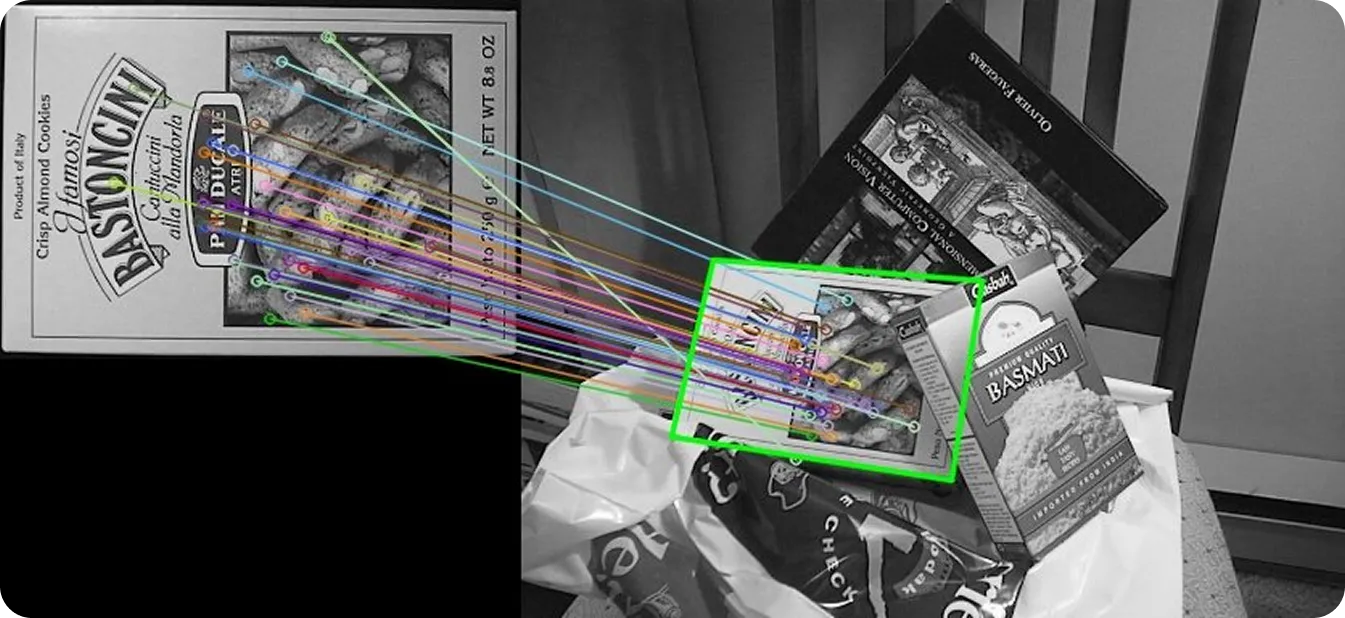

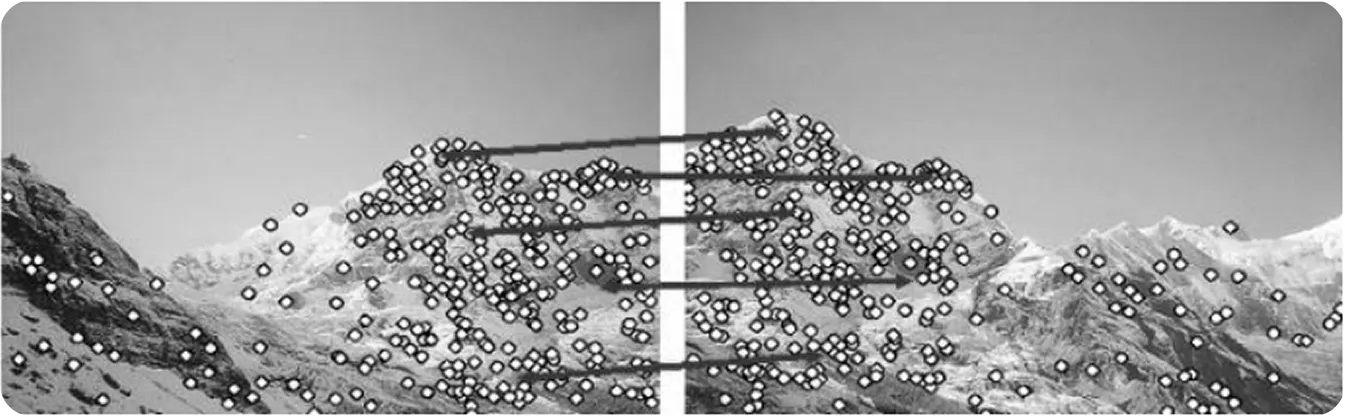

To make comparisons more consistent, image matching focuses on local features or corners, edges, and textured regions that tend to stay stable even when an image changes slightly. By detecting these features, or keypoints, across multiple images, a system can compare them with much greater accuracy.

This process is widely used in use cases like navigation, localization, augmented reality, mapping, 3D reconstruction, and visual search. When systems identify the same points across different images or multiple frames, they can track movement, understand scene structure, and make reliable decisions in dynamic environments.

Image matching involves several key steps that help systems identify and compare similar regions within images. Each step improves accuracy, consistency, and robustness under different conditions.

Here’s a step-by-step look at how image matching works:

Before we explore the real-world applications of image matching, let’s first take a closer look at the image-matching techniques used in computer vision systems.

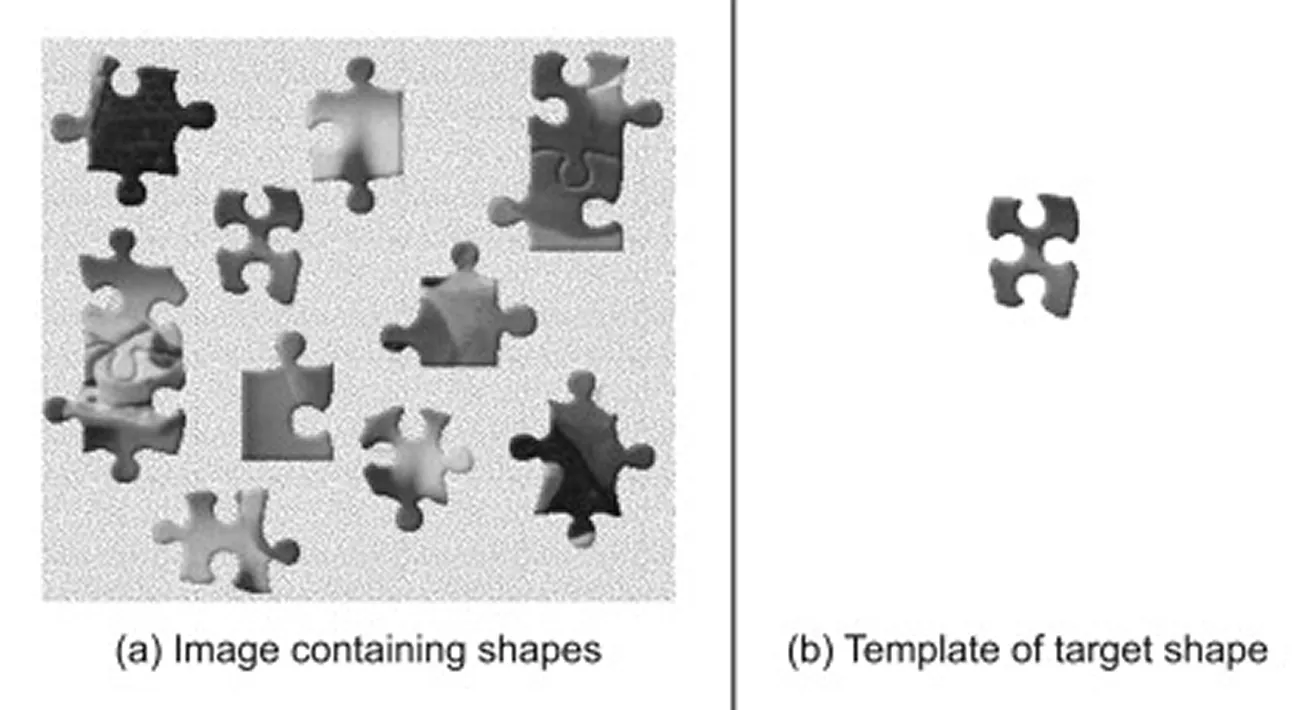

Template matching is one of the most straightforward image-matching methods. It is generally considered an image-processing technique rather than a modern computer-vision method because it relies on direct pixel comparisons and doesn’t extract deeper visual features.

It is used to locate a smaller reference image, or template, within a larger scene. It works using an algorithm that slides a template across the main image and calculates a similarity score at each position to measure how closely the two regions match. The area with the highest score is considered the best match, indicating where the object is most likely to appear in the scene.

This technique works well when the object’s scale, rotation, and lighting remain consistent, making it a good choice for controlled environments or baseline comparisons. However, its performance declines when the object looks different from the template, such as when its size changes, it is rotated, partially occluded, or appears against a noisy or complex background.

Before deep learning became widely adopted, image matching mostly relied on classical computer vision algorithms that detected distinctive keypoints in an image. Instead of comparing every pixel, these methods analyze image gradients, or changes in intensity, to highlight corners, edges, and textured regions that stand out.

Each detected keypoint is then represented using a compact numerical summary called a descriptor. When comparing two images, a matcher evaluates these descriptors to find the most similar pairs.

A strong similarity score usually indicates that the same physical point appears in both images. Matchers also use specific distance metrics or scoring rules to judge how closely features align, improving overall reliability.

Here are some of the key classical computer vision algorithms used for image matching:

Unlike classical methods that rely on specific rules, deep learning automatically learns features from large datasets, which are collections of visual data that AI models learn patterns from. These models typically run on GPUs (Graphics Processing Units), which provide the high computational power needed to process large batches of images and train complex neural networks efficiently.

This gives AI models the ability to handle real-world changes such as lighting, camera angles, and occlusions. Some models also combine all steps into a single workflow, supporting robust performance in challenging conditions.

Here are some deep learning-based approaches for image feature extraction and matching:

Now that we have a better understanding of how image matching works, let’s look at some real-world applications where it plays an important role.

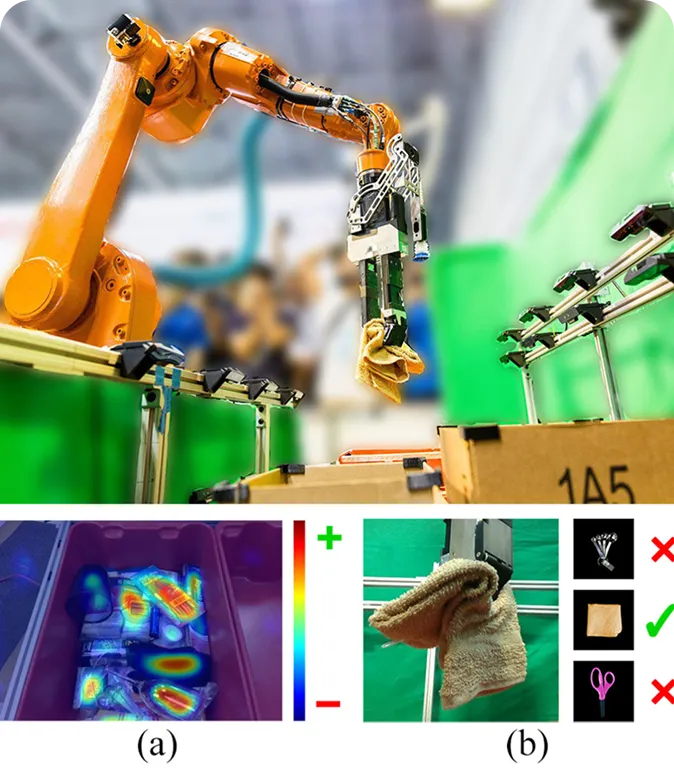

Robots often operate in busy and changing environments, where they need to understand which objects are present and how they are placed. Image matching can help robots understand objects they see by comparing them with stored or reference images. This makes it easier for these robots to recognize objects, track their movement, and adapt even when lighting or camera angles change.

For instance, in a warehouse, a robotic pick-and-place system can use image matching to identify and handle different items. The robot first grabs an object, then compares its image with reference samples to identify it.

Once the match is found, the robot knows how to sort or place it correctly. This approach allows robots to recognize both familiar and new objects without retraining the entire system. It also helps them make better real-time decisions, like organizing shelves, assembling parts, or rearranging items.

In areas such as drone mapping, virtual reality, and building inspection, systems often need to reconstruct a 3D model from multiple 2D images. To do this, they rely on image matching to identify common keypoints, like corners or textured regions, that appear across several images.

These shared points help the system understand how the images relate to one another in 3D space. This idea is closely related to Structure from Motion (SfM), a technique that builds 3D structures by identifying and matching keypoints across images captured from different viewpoints.

If the matching isn’t accurate, the resulting 3D model can appear distorted or incomplete. For this reason, researchers have been working to improve the reliability of image matching for 3D reconstruction, and recent advancements have shown promising results.

One interesting example is HashMatch, a faster and more robust image-matching algorithm. HashMatch converts image details into compact patterns called hash codes, which makes it easier to identify correct matches and remove outliers, even when lighting or viewpoints vary.

When tested on large-scale datasets, HashMatch produced cleaner and more realistic 3D reconstruction models with fewer alignment errors. This makes it especially useful for applications like drone mapping, AR systems, and cultural heritage preservation, where precision is critical.

When it comes to augmented reality (AR), keeping virtual objects aligned with the real world is often a challenge. Outdoor environments can change constantly depending on environmental conditions, such as sunlight and weather. Subtle differences in the real world can make virtual elements appear unstable or slightly out of place.

To solve this issue, AR systems use image matching to interpret their surroundings. By comparing live camera frames with stored reference images, they can understand where the user is and how the scene has changed.

For instance, in a study involving military-style outdoor AR training with XR (Extended Reality) glasses, researchers used SIFT and other feature-based methods to match visual details between real and reference images. Accurate matches kept the virtual elements correctly aligned with the real world, even when the user moved quickly or the lighting changed.

Image matching is a core component of computer vision, enabling systems to understand how different images relate to one another or how a scene changes over time. It plays a critical role in robotics, augmented reality, 3D reconstruction, autonomous navigation, and many other real-world applications where precision and stability are essential.

With advanced AI models like SuperPoint and LoFTR, today’s systems are becoming far more robust than earlier methods. As machine learning techniques, specialized vision modules, neural networks, and datasets continue to advance, image matching will likely become faster, more accurate, and more adaptable.

Join our growing community and explore our GitHub repository for hands-on AI resources. To build with Vision AI today, explore our licensing options. Learn how AI in agriculture is transforming farming and how Vision AI in healthcare is shaping the future by visiting our solutions pages.