Explore what artificial intelligence is and discover its main branches, like machine learning, computer vision, and more, that power today’s intelligent systems.

Technology is always improving, and as a society, we’re constantly looking for new ways to make our lives more efficient, safer, and easier. From the invention of the wheel to the rise of the internet, each advancement has changed how we live and work. The latest key technology in this effort is artificial intelligence (AI).

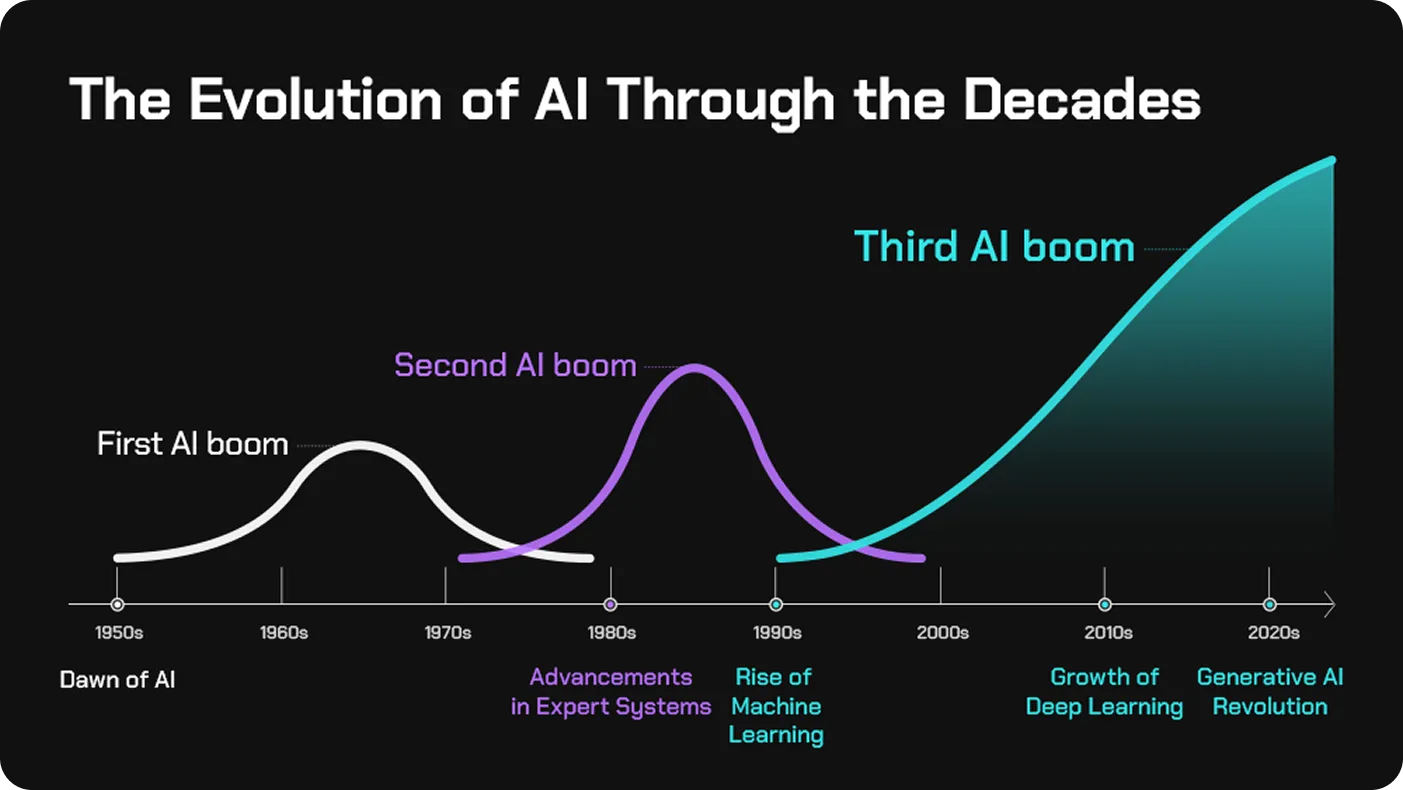

We are currently in what is referred to as the "AI boom" - a period of rapid growth and adoption of AI technologies across industries. However, this isn't the first time AI has seen a surge in interest. There were earlier waves of enthusiasm in the 1950s and again in the 1980s, but today's boom is driven by massive computing power, large datasets, and increasingly capable machine learning models.

Every week, new discoveries and innovations are being introduced by researchers, startups, and tech giants alike, pushing the boundaries of what AI can do. From improving healthcare diagnostics to powering smart assistants, AI is becoming deeply integrated into our daily lives. In fact, by 2033, the global AI market value is expected to reach $4.8 trillion.

In this article, we’ll take a closer look at what artificial intelligence really is, break down its key branches, and discuss how it’s transforming the world.

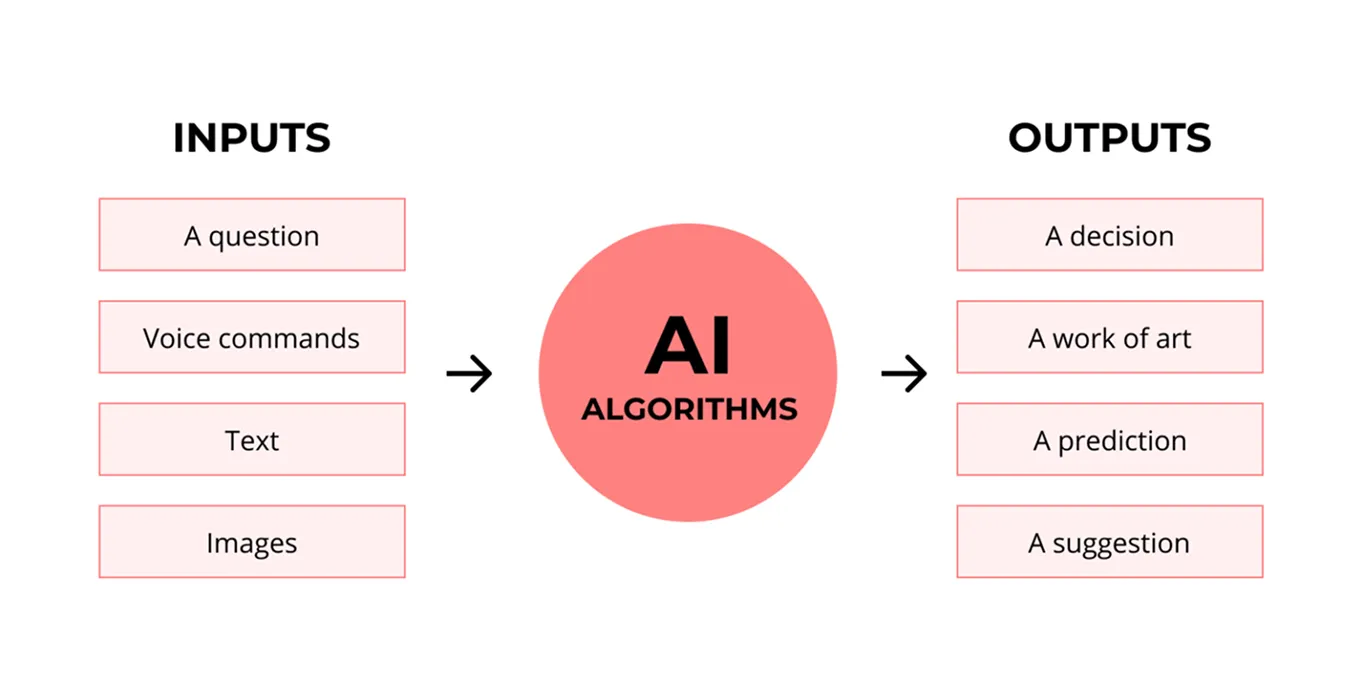

Artificial intelligence is one of the most talked-about technologies today, but what does it actually mean? At its core, AI refers to machines or computer systems that are built to perform tasks that typically require human intelligence. These tasks might include understanding language, recognizing images, making decisions, or learning from experience.

While the idea of thinking machines may sound futuristic, AI is already widely used all around us. For instance, AI is at the heart of applications like recommendation systems, voice assistants, and smart cameras.

Most of the AI solutions we use today fall under what's called narrow or weak AI. Each of these systems is designed to do one task, and do it really well. For example, one AI system might be trained just to recognize faces in a photo, while another is built to recommend movies based on your viewing history. These systems don't actually think like humans or understand the world; they simply follow patterns in data to complete specific jobs.

To make all this happen, AI systems rely on models. You can think of an AI model as a digital brain that learns from large amounts of data. Models are trained using algorithms (sets of step-by-step instructions) to spot patterns, make predictions, or even generate content. In general, the more relevant data they have and the better they're trained, the more accurate and useful they become.
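To make the idea of "training" a bit more concrete, here is a minimal Python sketch, assuming the simplest possible model: a straight line with two learnable numbers, `w` and `b`. The algorithm used here is gradient descent, a common step-by-step procedure for nudging a model's parameters to reduce its error. The example data and the function names (`train`, `predict`) are made up for illustration, and real AI models have millions or billions of parameters rather than two.

```python
# A toy "model" with two learnable parameters: y is approximated by w * x + b.
def train(xs, ys, learning_rate=0.01, epochs=2000):
    w, b = 0.0, 0.0  # start with a model that knows nothing
    n = len(xs)
    for _ in range(epochs):
        # Gradients of the mean squared error with respect to w and b
        grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
        # Step against the gradient to reduce the error a little each time
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b


def predict(w, b, x):
    return w * x + b


# Hypothetical training data that follows the hidden pattern y = 2x + 1
xs = [0, 1, 2, 3, 4]
ys = [1, 3, 5, 7, 9]

w, b = train(xs, ys)
print(round(w, 2), round(b, 2))
print(round(predict(w, b, 10), 1))
```

After training, `w` and `b` should end up very close to 2 and 1, the pattern hidden in the example data, which lets the model make a prediction for an input it never saw, such as `x = 10`.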

Here’s a quick look back at how AI has developed over the decades, from early theories about machine thinking to the impactful tools we use today:

The term AI can be thought of as an umbrella that covers several different areas or branches, each focusing on a specific ability - like learning from data, understanding language, or interpreting visuals. These branches often work together to help AI systems perform useful, real-world tasks.

Here’s a quick overview of some of the core branches of AI:

Each of these branches plays a different role, but together, they enable the development of smart systems that are becoming a part of our everyday lives.

Now that we've introduced the core branches of AI, let’s take a closer look at each one. We’ll walk through how these different areas work and where you might see them in action.

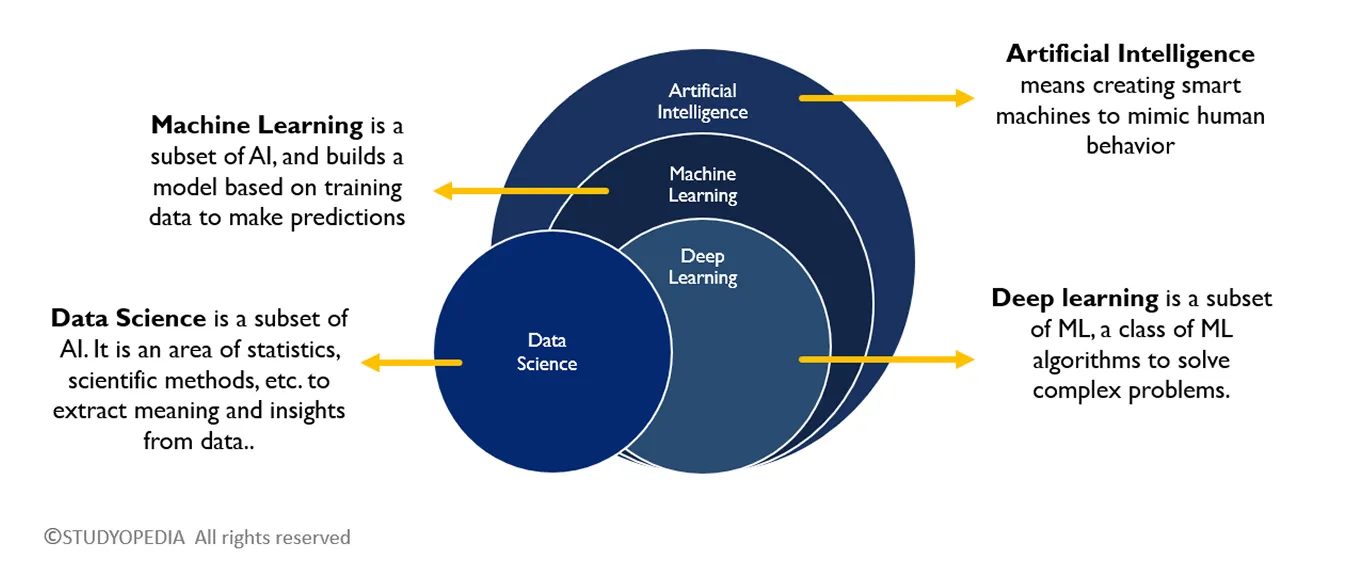

Data science is often confused with machine learning, but they are not the same thing. Data science is focused on understanding and analyzing data to look for trends, create visualizations, and help people make informed decisions. Its goal is to interpret information and tell stories with data.

Machine learning, on the other hand, is centered around building systems that can learn from data and make predictions or decisions without being explicitly programmed. While data science asks, “What does this data tell us?”, machine learning asks, “How can a system use this data to improve automatically over time?”

A good example of machine learning in action is Spotify's "Discover Weekly" playlist. Spotify, an audio streaming and media service provider, doesn't just track which songs you play. It learns from what you like, skip, or save, and compares that behavior to millions of other users.

Then, it uses machine learning models to predict and recommend songs you’re likely to enjoy. This personalized experience is made possible because the system keeps learning and adapting, helping you discover music you didn’t even know you were looking for.
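A heavily simplified Python sketch of the "compare your behavior to other users" idea is shown here. It uses user-based collaborative filtering with cosine similarity, a textbook recommendation technique chosen as an assumption for illustration; it is not Spotify's actual system, and the listening data and the `recommend` function are hypothetical.

```python
import math

# Hypothetical listening data: 1 = liked or saved, 0 = skipped or not played
listens = {
    "you":   {"song_a": 1, "song_b": 1, "song_c": 0, "song_d": 0},
    "user1": {"song_a": 1, "song_b": 1, "song_c": 1, "song_d": 0},
    "user2": {"song_a": 0, "song_b": 0, "song_c": 0, "song_d": 1},
}


def cosine(u, v):
    """Similarity between two users' tastes (1.0 = identical, 0.0 = nothing shared)."""
    songs = set(u) | set(v)
    dot = sum(u.get(s, 0) * v.get(s, 0) for s in songs)
    norm_u = math.sqrt(sum(x * x for x in u.values()))
    norm_v = math.sqrt(sum(x * x for x in v.values()))
    if norm_u == 0 or norm_v == 0:
        return 0.0
    return dot / (norm_u * norm_v)


def recommend(target, data):
    """Score songs the target hasn't liked yet, weighted by how similar each other user is."""
    mine = data[target]
    scores = {}
    for other, theirs in data.items():
        if other == target:
            continue
        sim = cosine(mine, theirs)
        for song, liked in theirs.items():
            if liked and not mine.get(song, 0):
                scores[song] = scores.get(song, 0.0) + sim * liked
    ranked = sorted(scores, key=scores.get, reverse=True)
    return [song for song in ranked if scores[song] > 0]


print(recommend("you", listens))
```

Because `user1` shares your taste for `song_a` and `song_b`, the song they liked that you haven't played (`song_c`) gets recommended, while `user2`, who has nothing in common with you, contributes nothing.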

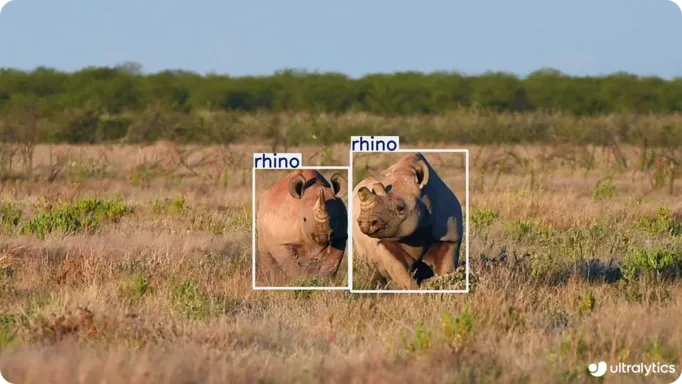

Computer vision models like Ultralytics YOLO11 help machines understand images and videos by identifying objects, people, and scenes. These models are trained using labeled pictures so they can learn what different things look like.

Once trained, they can be used for tasks like object detection (finding and locating things in an image), image classification (figuring out what an image shows), and tracking movement. This allows AI systems to see and respond to the world around them - whether it’s in a self-driving car, a medical scanner, or a security camera.

For instance, one interesting use of computer vision is in wildlife conservation. Drones equipped with cameras and models like YOLO11 can be used to monitor endangered animals in remote areas. They can count how many animals are in a group, track their movement, and even spot threats like poachers, all without disturbing the environment.
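Once a detector has produced its predictions, counting animals is mostly bookkeeping. The Python sketch below assumes the model's output (from a model such as YOLO11) has already been converted into a simple list of labels, confidence scores, and bounding boxes. The `Detection` class, the example values, and the 0.5 confidence threshold are hypothetical and are not the Ultralytics API.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class Detection:
    label: str          # class name predicted by the detector
    confidence: float   # model's confidence score between 0 and 1
    box: tuple          # (x1, y1, x2, y2) pixel coordinates of the bounding box


def count_by_class(detections, min_confidence=0.5):
    """Count detections per class, ignoring low-confidence predictions."""
    return Counter(d.label for d in detections if d.confidence >= min_confidence)


# Hypothetical detector output for a single drone frame
frame = [
    Detection("elephant", 0.91, (10, 20, 120, 200)),
    Detection("elephant", 0.84, (130, 25, 240, 210)),
    Detection("elephant", 0.32, (300, 40, 360, 120)),  # too uncertain, so it is ignored
    Detection("person", 0.77, (500, 60, 540, 180)),
]

counts = count_by_class(frame)
print(counts)
```

Filtering on confidence is a simple design choice that trades a few missed animals for fewer false alarms; the threshold would need tuning for a real deployment.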

It's a great example of how computer vision is not just a high-tech tool, but something that's making a real contribution to protecting the planet.

Just as computer vision focuses on visual data, natural language processing (NLP) focuses on one type of data: language. NLP helps machines understand and work with human language in both written and spoken forms. It allows computers to read text, understand meaning, recognize speech, and even respond in a way that feels natural. This is the technology behind tools like voice assistants (Siri, Alexa), chatbots, translation apps, and email filters.
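Email filters are one of the oldest everyday uses of NLP. As a minimal sketch, here is a naive Bayes spam classifier in Python, a classic text-classification technique chosen for illustration. Modern filters and assistants use far more sophisticated models, and the tiny training set and function names here are hypothetical.

```python
import math
from collections import Counter


def tokenize(text):
    return text.lower().split()


def train(examples):
    """examples: list of (text, label) pairs, where label is 'spam' or 'ham'."""
    word_counts = {"spam": Counter(), "ham": Counter()}
    label_counts = Counter()
    for text, label in examples:
        label_counts[label] += 1
        word_counts[label].update(tokenize(text))
    vocab = set(word_counts["spam"]) | set(word_counts["ham"])
    return word_counts, label_counts, vocab


def classify(text, model):
    word_counts, label_counts, vocab = model
    total_docs = sum(label_counts.values())
    best_label, best_score = None, -math.inf
    for label in ("spam", "ham"):
        # Log prior: how common this label is in the training data
        score = math.log(label_counts[label] / total_docs)
        total_words = sum(word_counts[label].values())
        for word in tokenize(text):
            # Laplace smoothing so an unseen word doesn't zero out the probability
            score += math.log((word_counts[label][word] + 1) / (total_words + len(vocab)))
        if score > best_score:
            best_label, best_score = label, score
    return best_label


training_data = [
    ("win a free prize now", "spam"),
    ("claim your free money", "spam"),
    ("meeting moved to monday", "ham"),
    ("lunch with the team tomorrow", "ham"),
]
model = train(training_data)
print(classify("free prize money", model))
print(classify("team meeting tomorrow", model))
```

The model never "understands" the emails; it simply learns which words tend to appear in each kind of message, which is the same pattern-following idea described earlier for narrow AI.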

For example, Duolingo, the popular language learning app, uses a language model to simulate real-life conversations - like ordering food or booking a hotel. The AI model understands what you’re trying to say, corrects your mistakes, and explains grammar in simple, easy-to-understand terms, just like a real tutor. This makes language learning more interactive and engaging, showcasing how NLP helps people communicate more effectively with the support of AI.

Much of the recent worldwide surge in AI interest is driven by generative AI. Unlike traditional AI systems that analyze or classify data, generative AI learns patterns from huge datasets and uses that knowledge to produce original content. These models don't just follow instructions; they generate new material based on what they've learned, often mimicking human creativity and style.
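To illustrate the idea of learning patterns and then producing something new, here is a toy Python text generator built on word bigrams (a simple Markov chain). It is a deliberately simplified stand-in, far simpler than the large neural networks behind tools like ChatGPT, but it shows the same basic loop: learn which words tend to follow which, then repeatedly sample a likely next word. The corpus and function names are made up for illustration.

```python
import random
from collections import defaultdict


def build_bigrams(text):
    """Record which words follow each word in the training text."""
    words = text.split()
    followers = defaultdict(list)
    for current, nxt in zip(words, words[1:]):
        followers[current].append(nxt)
    return followers


def generate(followers, start, length=8, seed=None):
    """Produce new text by repeatedly sampling a plausible next word."""
    rng = random.Random(seed)
    output = [start]
    for _ in range(length - 1):
        options = followers.get(output[-1])
        if not options:  # dead end: this word never had a follower in training
            break
        output.append(rng.choice(options))
    return " ".join(output)


corpus = "the cat sat on the mat the dog sat on the rug the cat ran to the dog"
followers = build_bigrams(corpus)
print(generate(followers, "the", length=8, seed=42))
```

Every word pair in the output appeared somewhere in the training text, yet the full sentence may be one the corpus never contained, which is a very small-scale version of "new material based on what they've learned."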

One of the most popular examples is ChatGPT, which can write essays, answer questions, and hold natural conversations. More recently, similar advanced tools like xAI’s Grok-3 have been introduced.

Beyond this, in fields like entertainment and gaming, generative AI is opening up new creative possibilities. Game developers are using AI to create dynamic storylines, dialog, and characters that respond to players in real time.

Likewise, in film and media, generative tools help design visual effects, write scripts, and even compose music. As these technologies continue to evolve, they’re not just assisting creators - they’re becoming creative partners in shaping immersive, personalized experiences.

Many people picture AI as the kind of robot seen in the movie The Terminator, but in reality, AI is nowhere near that advanced yet. While science fiction often imagines fully autonomous machines that think and act like humans, today's robots are much more practical and task-focused.

Robotics, as a branch of AI, combines mechanical systems with intelligent software to help machines move, sense their surroundings, and take action in the real world. These robots often use other areas of AI, like computer vision to see and machine learning to adapt, so that they can complete specific tasks safely and efficiently.

Take, for example, Boston Dynamics' robot, Stretch, which is designed for warehouse automation. Stretch can scan its surroundings, identify boxes, and move them onto trucks or shelves with minimal human input. It uses AI to make real-time decisions about how to move and where to place objects, making it a reliable tool in logistics and supply chain operations.

Alongside the recent enthusiasm and interest in AI, there are also many important conversations happening around its ethical implications. As AI becomes more advanced and deeply embedded in daily life, people are raising concerns about how it’s used, who controls it, and what safeguards are in place.

One major issue is bias in AI systems; since these technologies learn from real-world data, they can pick up and reinforce existing human prejudices. This can lead to inaccurate results, especially in sensitive areas like hiring or law enforcement.
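One simple way teams check for this kind of bias is to compare how often a model selects people from different groups. The Python sketch below computes per-group selection rates and the ratio of the lowest rate to the highest. The hiring data is hypothetical, and the 0.8 cutoff reflects the "four-fifths rule," a rule of thumb from US employment guidelines that serves only as a rough screening signal.

```python
def selection_rates(decisions):
    """decisions: list of (group, selected) pairs, where selected is True or False."""
    totals, selected = {}, {}
    for group, was_selected in decisions:
        totals[group] = totals.get(group, 0) + 1
        selected[group] = selected.get(group, 0) + int(was_selected)
    return {g: selected[g] / totals[g] for g in totals}


def disparate_impact_ratio(rates):
    """Lowest group selection rate divided by the highest (1.0 means equal rates)."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was selected, so no group is favored
    return min(rates.values()) / highest


# Hypothetical outputs of a hiring model for two applicant groups
decisions = (
    [("group_a", True)] * 6 + [("group_a", False)] * 4
    + [("group_b", True)] * 3 + [("group_b", False)] * 7
)

rates = selection_rates(decisions)
ratio = disparate_impact_ratio(rates)
print(rates, round(ratio, 2))
if ratio < 0.8:
    print("Warning: selection rates differ substantially between groups")
```

A low ratio doesn't prove the model is unfair on its own, but it flags a pattern worth investigating, such as training data that reflected past hiring prejudices.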

There’s also concern over the lack of transparency, as many AI systems operate like “black boxes,” making decisions that even their creators can’t fully explain. Another growing issue is the misuse of generative AI, which can create fake news, deepfake videos, or misleading images that are difficult to distinguish from real ones.

As AI continues to evolve, there’s a need for responsible development, which means building systems that are fair, accountable, and respectful of privacy and human rights. Governments, companies, and researchers are now working together to create guidelines that ensure AI benefits everyone while minimizing harm.

Artificial intelligence is growing fast and becoming a bigger part of our daily lives. It's helping with tasks like recognizing images, understanding language, and making smart decisions in real time. From manufacturing to agriculture, AI is making everyday tasks easier and more efficient.

In the future, we might see even bigger changes with the rise of Artificial General Intelligence (AGI), where machines could learn and think more like humans. As AI technology improves, it will likely become more connected, more useful, and more responsible. It's an exciting time, and there’s a lot to look forward to as AI continues to evolve.
