Explore the SIFT algorithm. Learn what SIFT is, its powerful features for scale-invariant computer vision. Enhance your image processing.

Explore the SIFT algorithm. Learn what SIFT is, its powerful features for scale-invariant computer vision. Enhance your image processing.

For a visual walkthrough of the concepts covered in this article, watch the video below.

These days, many of the smart devices we use, from phones and cameras to smart home systems, come with AI solutions that can recognize faces, objects, and even entire visual scenes. This capability comes from computer vision, a field of artificial intelligence that enables machines to understand and interpret images and videos.

For example, if you take a photo of the Eiffel Tower from any angle or distance, your device can typically still recognize it using computer vision and organize it into the correct folder in your gallery. While this sounds straightforward, recognizing objects is not always simple. Images can look very different depending on their size, angle, scale, or lighting, which makes it difficult for machines to identify them consistently.

To help solve this problem, researchers developed a computer vision algorithm called Scale Invariant Feature Transform, or SIFT. This algorithm makes it possible to detect objects across different viewing conditions. Created by David Lowe in 1999, SIFT was designed to find and describe unique keypoints in an image, such as corners, edges, or patterns that remain recognizable even when the image is resized, rotated, or lit differently.

Before deep learning–driven computer vision models like Ultralytics YOLO11 became popular, SIFT was a widely used technique in computer vision. It was a standard approach for tasks such as object recognition, where the goal is to identify a specific item in a photo, and image matching, where photos are aligned by finding overlapping image features.

In this article, we’ll explore SIFT with a quick overview of what it is, how it works at a high level, and why it is important in the evolution of computer vision. Let’s get started!

In an image, an object can appear in many different ways. For instance, a coffee mug might be photographed from above, from the side, in bright sunlight, or under a warm lamp. The same mug can also look larger when it is close to the camera and smaller when it is farther away.

All these differences make teaching a computer to recognize an object a complicated task. This computer vision task, known as object detection, requires Vision AI models to identify and locate objects accurately, even when their size, angle, or lighting conditions change.

To make this possible, computer vision relies on a process called feature extraction or detection. Instead of trying to understand the entire image at once, a model looks for distinctive image features such as sharp corners, unique patterns, or textures that remain recognizable across angles, scales, and lighting conditions.

In particular, this is what the Scale Invariant Feature Transform, or SIFT, was designed to do. SIFT is a feature detection and description algorithm that can reliably identify objects in images, no matter how they are captured.

The SIFT algorithm has a few important properties that make it useful for object recognition. One of the key properties is called scale invariance. This means SIFT can recognize various parts of an object, whether it looks big and is close to the camera or small and far away. Even if the object isn’t completely visible, the algorithm can still pick out the same key points.

It does this using a concept called scale-space theory. Simply put, the image is blurred at different levels to create multiple versions. SIFT then looks through these versions to find patterns and details that stay the same, regardless of how the image changes in size or sharpness.

For instance, a road sign photographed from a few meters away will look much bigger than the same sign captured at a distance, but SIFT can still detect the same distinctive features. This makes it possible to match the two images correctly, even though the sign appears at very different scales.

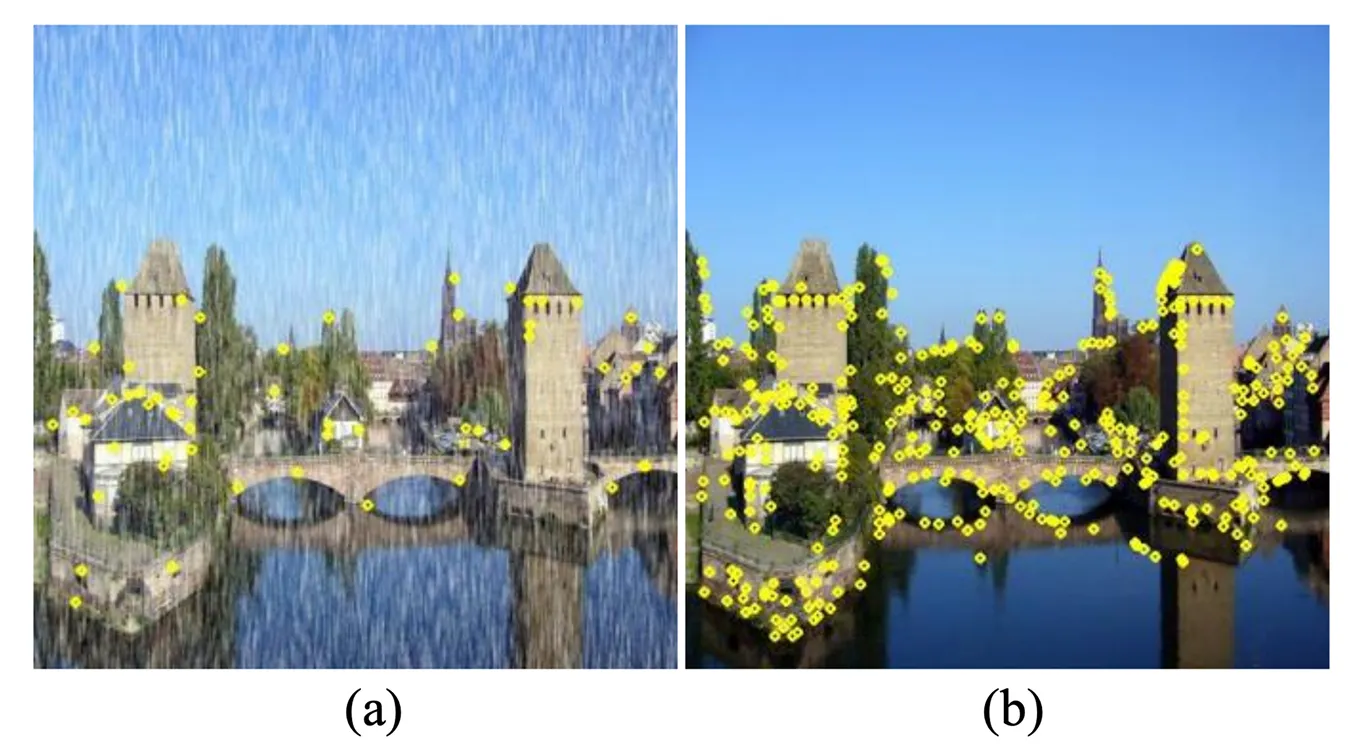

Objects in images can also appear rotated, sometimes even upside down. SIFT handles this through a property called rotation invariance. For every keypoint it detects, the algorithm assigns a consistent orientation based on the local image gradients. This way, the same object can be recognized no matter how it is rotated.

You can think of it like marking each keypoint with a small arrow that shows which direction it faces. By aligning features to these orientations, SIFT ensures that keypoints match correctly even when the object is rotated. For example, a landmark captured in a landscape photo can still be identified correctly even if another photo of it is taken with the camera tilted at an angle.

Beyond size and rotation, images can also change in other ways, like illumination changes. The lighting on an object might go from bright to dim, the camera angle might shift slightly, or the image could be blurred or noisy.

SIFT is built to handle these kinds of variations. It does this by focusing on keypoints that are distinctive and high in contrast, since these features are less affected by changes in lighting or small shifts in viewpoint. As a result, SIFT tends to be more reliable than simple edge or corner detection methods, which often fail when conditions change.

Consider a painting in a gallery. It can still be recognized whether it is photographed under soft daylight, under bright artificial spotlights, or even with slight motion blur from a handheld camera. The keypoints remain stable enough for accurate matching despite these differences.

Next, let’s take a look at how the SIFT algorithm works. This process can be broken down into four main steps: keypoint detection, keypoint localization, orientation assignment, and keypoint description.

The first step is to find and detect keypoints, which are distinctive spots in the image, such as corners or sharp changes in texture, that help track or recognize an object.

To make sure these potential keypoints can be recognized at any size, SIFT builds what is called a scale space. This is a collection of images created by gradually blurring the original image with a Gaussian filter, which is a smoothing technique, and grouping the results into layers called octaves. Each octave contains the same image at increasing levels of blur, while the next octave is a smaller version of the image.

By subtracting one blurred image from another, SIFT calculates the Difference of Gaussians (DoG), which highlights areas where brightness changes sharply. These areas are chosen as candidate keypoints because they remain consistent when the image is zoomed in or out.

Not all candidate keypoints are useful because some may be weak or unstable. To refine them, SIFT uses a mathematical method called the Taylor Series Expansion, which helps estimate the exact position of a keypoint with greater accuracy.

During this step, unreliable points are removed. Keypoints with low contrast, which blend into their surroundings, are discarded, as well as those that lie directly on edges, since they can shift too easily. This filtering step leaves behind only the most stable and distinctive keypoints.

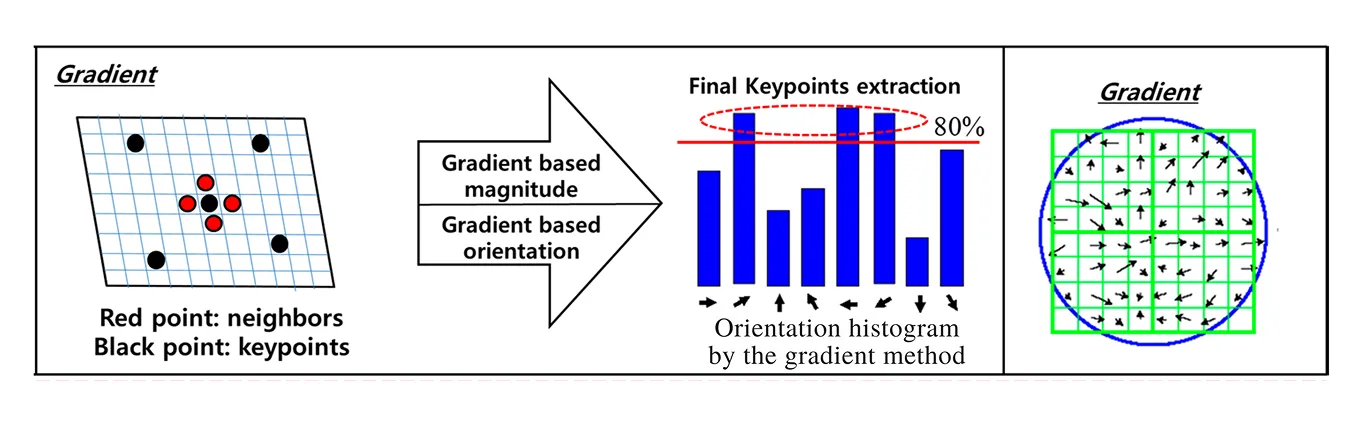

Once stable keypoints are identified, SIFT makes them rotation invariant, which means they can still be matched even if the image is turned sideways or upside down. To do this, SIFT analyzes how brightness changes around each keypoint, which is known as the gradient. Gradients show both the direction and strength of change in pixel intensity, and together they capture the local structure around the point.

For each keypoint, SIFT considers the gradients within a surrounding region and groups them into a histogram of orientations. The tallest peak in this histogram indicates the dominant direction of intensity change, which is then assigned as the keypoint’s orientation. Both the gradient directions, which show where the intensity is changing, and the gradient magnitudes, which indicate how strong that change is, are used to build this histogram

If there are other peaks that are nearly as strong, SIFT assigns multiple orientations to the same keypoint. This prevents important features from being lost when objects appear at unusual angles. By aligning each keypoint with its orientation, SIFT ensures that the descriptors generated in the next step remain consistent.

In other words, even if two images of the same object are rotated differently, the orientation-aligned keypoints will still match correctly. This step is what gives SIFT its strong ability to handle rotation and makes it far more robust than earlier feature detection methods.

The last step in SIFT is to create a description of each keypoint so that it can be recognized in other images.

SIFT achieves this by looking at a small square patch around each keypoint, about 16 by 16 pixels in size. This patch is first aligned to the keypoint’s orientation so rotation does not affect it. The patch is then divided into a grid of 4 by 4 smaller squares.

In each small square, SIFT measures how the brightness changes in different directions. These changes are stored in something called a histogram, which is like a chart showing which directions are most common. Each square gets its own histogram, and together the 16 squares produce 16 histograms.

Finally, these histograms are combined into a single list of numbers, 128 in total. This list is called a feature vector, and it acts like a fingerprint for the keypoint. Because it captures the unique texture and structure around the point, this fingerprint makes it possible to match the same keypoint across different images, even if they are resized, rotated, or lit differently.

Now that we have a better understanding of what SIFT is and how it works, let’s explore some of its real-world applications in computer vision.

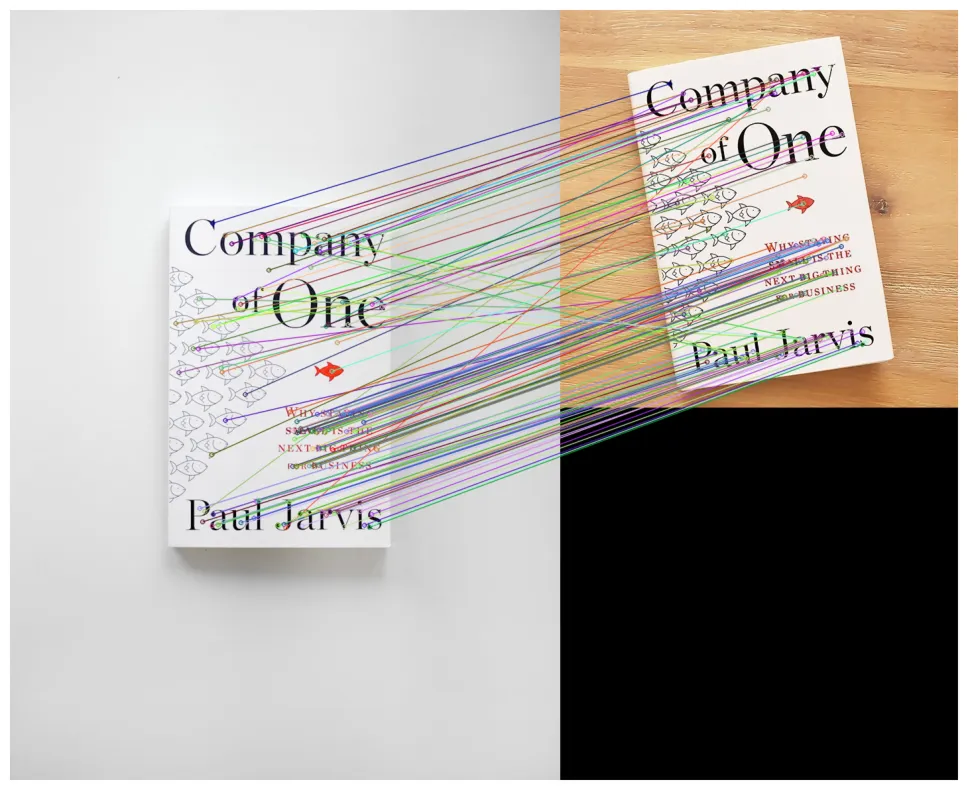

One of the main uses of SIFT is in object recognition and detection. This involves teaching a computer to recognize and locate objects in images, even when the objects do not always look the same. For example, SIFT can detect a book regardless of whether it is close to the camera, farther away, or rotated at an angle.

The reason this works is that SIFT extracts keypoints that are highly distinctive and stable. When these keypoints are paired with SIFT descriptors, they form SIFT features, which provide a reliable way to match the same object across different images. These features capture unique details of the object that remain consistent, enabling reliable feature matching across images even when the object’s size, position, or orientation changes.

Before deep learning became popular, SIFT was one of the most reliable methods for building object recognition systems. It was widely used in research and applications that required matching objects across large image datasets, even though it often demanded significant computational resources.

SIFT can also be used to create panoramic images, which are wide photos made by stitching several pictures together. Using SIFT, distinctive keypoints are found in the overlapping parts of different images and then matched with one another. These matches act like anchors, guiding the stitching process on how the photos should be aligned.

Once the matching is complete, stitching algorithms can be used to calculate the correct alignment, often using geometric transformations that map one image onto another. The images are then blended so that the seams disappear. The final result is a seamless panorama that looks like a single wide photo, even though it was created from multiple shots.

Another interesting application of SIFT is in 3D reconstruction, where multiple 2D photos taken from different angles are combined to build a three-dimensional model. SIFT works by finding and matching the same points across these images.

Once the matches are made, the 3D positions of those points can be estimated using triangulation, a method that calculates depth from different viewpoints. This process is part of structure from motion (SfM), a technique that uses multiple overlapping images to estimate the 3D shape of a scene along with the positions of the cameras that took the photos.

The result is usually a 3D point cloud, a collection of points in space that outlines the object or environment. SIFT was one of the first tools that made structure-from-motion practical. While newer techniques are faster and more common today, SIFT continues to be applied when accuracy is more important than speed.

SIFT has also been used in robotics, particularly in visual SLAM (Simultaneous Localization and Mapping). SLAM allows a robot to figure out where it is while building a map of its surroundings at the same time.

SIFT keypoints act as reliable landmarks that a robot can recognize across frames, even when lighting or angles change. By tracking these landmarks, the robot can estimate its position and update its map on the fly. Although faster feature detectors are used more often in robotics today, SIFT played an important role in early SLAM systems and is still key in cases where robustness is more critical than speed.

While the SIFT algorithm has been widely used in computer vision and is known for being a reliable method, it also comes with some trade-offs. That’s why it’s important to weigh its pros and cons before deciding if it’s the right fit for a project. Next, let’s walk through its key strengths and limitations.

Here are some of the pros of using the SIFT algorithm:

Here are some of the cons of using the SIFT algorithm:

While exploring the pros and cons of SIFT, you might notice that many of its limitations paved the way for more advanced techniques. Specifically, convolutional neural networks (CNNs) emerged as a powerful alternative.

A CNN is a type of deep learning model inspired by how the human visual system works. It processes an image in layers, starting from simple patterns like edges and textures, and gradually building up to more complex shapes and objects. Unlike SIFT’s handcrafted feature rules, CNNs learn feature representations directly from data.

This data-driven learning means CNNs can outperform SIFT in descriptor matching and classification tasks. CNNs are also more expressive and robust, adapting better to the variability and complexity of visual data.

For example, CNN-based models have achieved breakthrough results on ImageNet, a massive benchmark dataset containing millions of labeled images across thousands of categories. Designed to test how well algorithms can recognize and classify objects, ImageNet is able to highlight the gap between older feature-based methods and deep learning.

CNNs quickly surpassed SIFT by learning far richer and more flexible representations, allowing them to recognize objects under changing lighting, from different viewpoints, and even when partially hidden, scenarios where SIFT often struggles.

The Scale Invariant Feature Transform algorithm holds an important place in the history of computer vision. It provided a reliable way to detect features even in changing environments and influenced many of the methods used today.

While newer techniques are faster and more efficient, SIFT laid the groundwork for them. SIFT showcases where today’s progress in computer vision began and highlights how far cutting-edge AI systems have come.

Join our global community and check our GitHub repository to learn more about computer vision. Explore our solutions pages to discover innovations such as AI in agriculture and computer vision in retail. Check out our licensing options and get started with building your own computer vision model.