Edge detection in image processing. Learn Sobel, Canny, and other edge detection algorithms to accurately detect edges and achieve robust edge recognition.

Edge detection in image processing. Learn Sobel, Canny, and other edge detection algorithms to accurately detect edges and achieve robust edge recognition.

As humans, we naturally recognize the edges of objects, follow their curves, and notice the textures on their surfaces when looking at an image. For a computer, however, understanding begins at the level of individual pixels.

A pixel, the smallest unit of a digital image, stores color and brightness at a single point. By tracking changes in these pixel values across an image, a computer can detect patterns that reveal key details.

In particular, image processing uses pixel data to emphasize essential features and remove distractions. One common image processing technique is edge detection, which identifies points where brightness or color changes sharply to outline objects, mark boundaries, and add structure.

This enables computers to separate shapes, measure dimensions, and interpret how parts of a scene connect. Edge detection is often the first step in advanced image analysis.

In this article, we’ll take a look at what edge detection is, how it works, and its real-world applications. Let's get started!

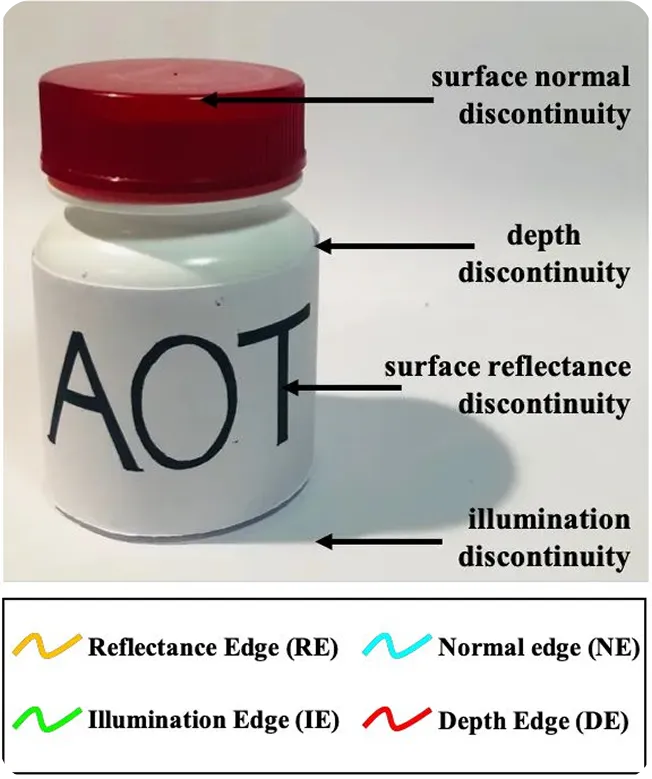

Edge detection focuses on looking for places in an image where brightness or color changes noticeably from one point to the next. If the change is small, the area appears smooth. If the change is sharp, it often marks the boundary between two different regions.

Here are some of the reasons why these pixel changes occur:

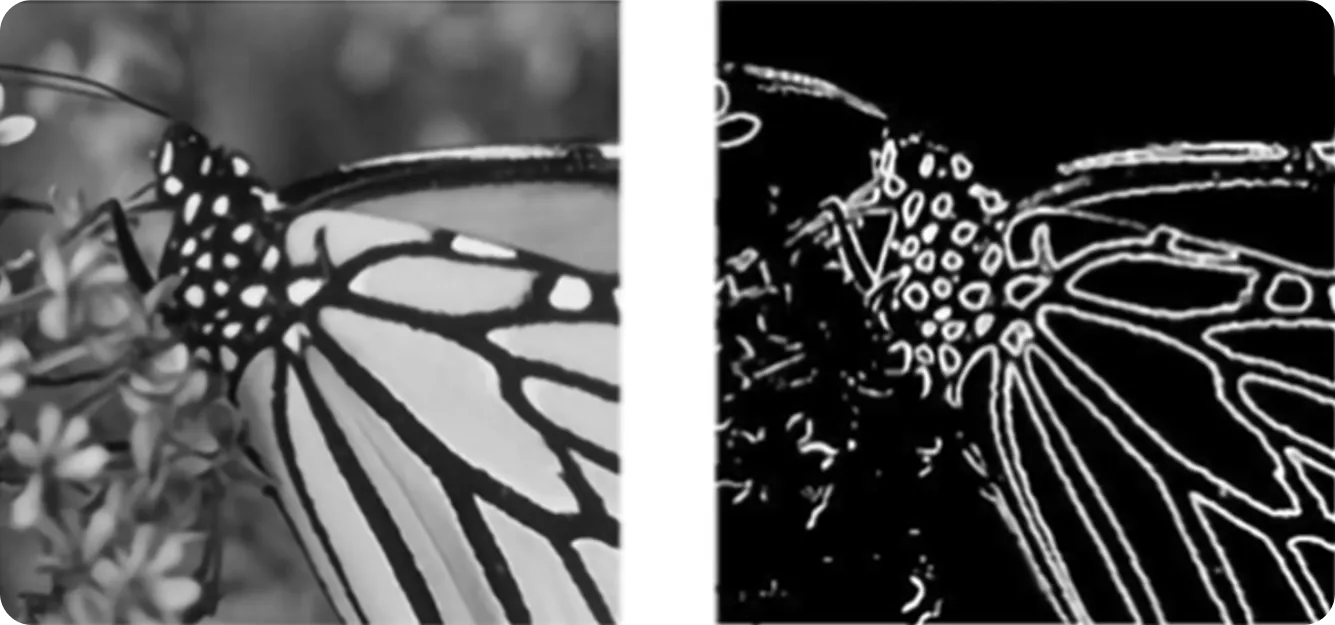

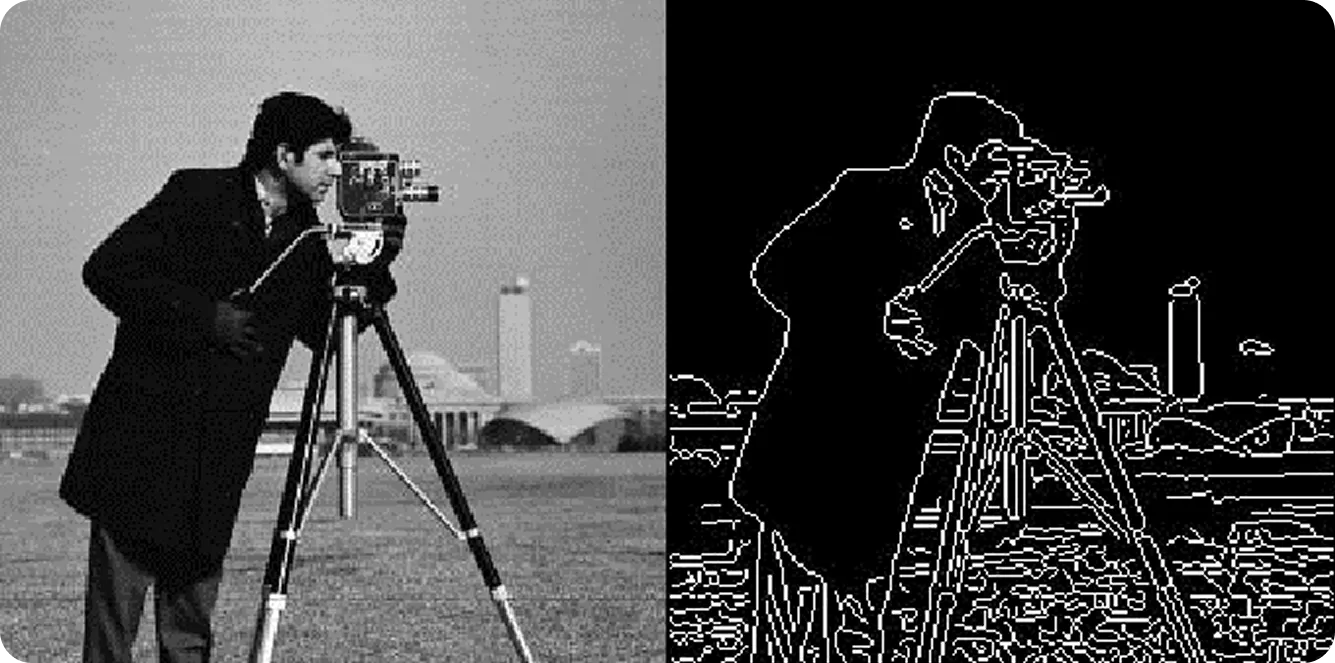

Edge detection usually starts by turning a color image into a grayscale image, so each point only shows brightness. This makes it easier for the algorithm to focus on light and dark differences instead of color.

Next, special filters can scan the image to find places where brightness changes suddenly. These filters calculate how steeply the brightness changes, called the gradient. A higher gradient is caused by a bigger difference between nearby points, which often signals an edge.

The algorithm then keeps refining the image, removing small details and keeping only the most important lines and shapes. The result is a clear outline and output image that can be used for further analysis.

Before we dive into edge detection in more detail, let’s discuss how it developed over time.

Image processing began with simple, rule-based methods like thresholding and filtering to clean up and improve pictures. In the analog era, this meant working with photographs or film using optical filters, magnifiers, or chemical treatments to bring out details.

Techniques like contrast adjustment, noise reduction, adjusting image intensity, and basic edge detection helped make input images clearer and highlight shapes and textures. In the 1960s and 70s, the shift from analog to digital processing opened the way for modern analysis in areas like astronomy, medical imaging, and satellite monitoring.

By the 1980s and 90s, faster computers made it possible to tackle more complex tasks such as feature extraction, shape detection, and basic object recognition. Algorithms like Sobel operator and Canny offered more precise edge detection, while pattern recognition found applications in everything from industrial automation to reading printed text through optical character recognition.

Today, steady advances in technology have led to the development of computer vision. Vision AI, or computer vision, is a branch of AI that focuses on teaching machines to interpret and understand visual information.

While traditional image processing, like double thresholding (which makes images clearer by keeping strong edges and removing weak ones) and edge detection, followed fixed rules and could handle only specific tasks, computer vision uses data-driven models that can learn from examples and adapt to new situations.

Nowadays, imaging systems go far beyond just enhancing images or detecting edges. They can recognize objects, track movement, and understand the context of an entire scene.

One of the key techniques that makes this possible is convolution. A convolution operation is a process where small filters (also called kernels) scan an image to find important patterns like edges, corners, and textures. These patterns become the building blocks that computer vision models use to recognize and understand objects.

For instance, computer vision models like Ultralytics YOLO11 use these convolution-based features to perform advanced tasks such as instance segmentation. This is closely related to edge detection because instance segmentation requires accurately outlining the boundaries of each object in an image.

While edge detection focuses on finding intensity changes in edge pixels to mark object edges, instance segmentation builds on that idea to detect edges, classify, and separate each object into its own region.

Even with the growth of computer vision, image processing is still an important part of many applications. That’s because computer vision often builds on basic image preprocessing steps.

Before detecting objects or understanding a scene, systems usually clean up the image, reduce noise, and find edges to make key details stand out. These steps make advanced models more accurate and efficient.

Next, let’s explore some of the most common image processing algorithms used to detect edges and how they work.

Sobel edge detection is a key method used to find the outlines of objects in an image. Instead of analyzing every detail at once, it focuses on areas where the brightness changes sharply from one pixel to the next neighboring pixel.

These sudden shifts usually mark the point where one object ends and another begins, or where an object meets the background. By isolating these edges, Sobel transforms a complex image into a cleaner outline that is easier for other systems to process for tasks like tracking movement, detecting shapes, or recognizing objects.

You can think of Sobel edge detection as a gradient detector that measures how intensity changes across an image. At its core, this works through a convolution operation: sliding small matrices, called kernels, across the image and computing weighted sums of neighboring pixel values.

These kernels are designed to emphasize changes in brightness along horizontal and vertical directions. Unlike deep learning models, where kernels are learned from data, Sobel uses fixed kernels to efficiently highlight edges without requiring training.

Here’s a closer look at how the Sobel edge detection method works:

Canny edge detection is another popular method for finding edges in an image. It is known for producing clean and precise outlines. Unlike basic edge detection techniques, it follows a series of carefully designed steps to filter out noise, sharpen boundaries, and focus on the most important edges.

Here’s a quick overview of how a Canny edge detector works:

Because it delivers accurate results while filtering out noise, Canny edge detection is widely used in areas where precision matters. For instance, it is used in industries like medical imaging, satellite mapping, document scanning, and robotic vision.

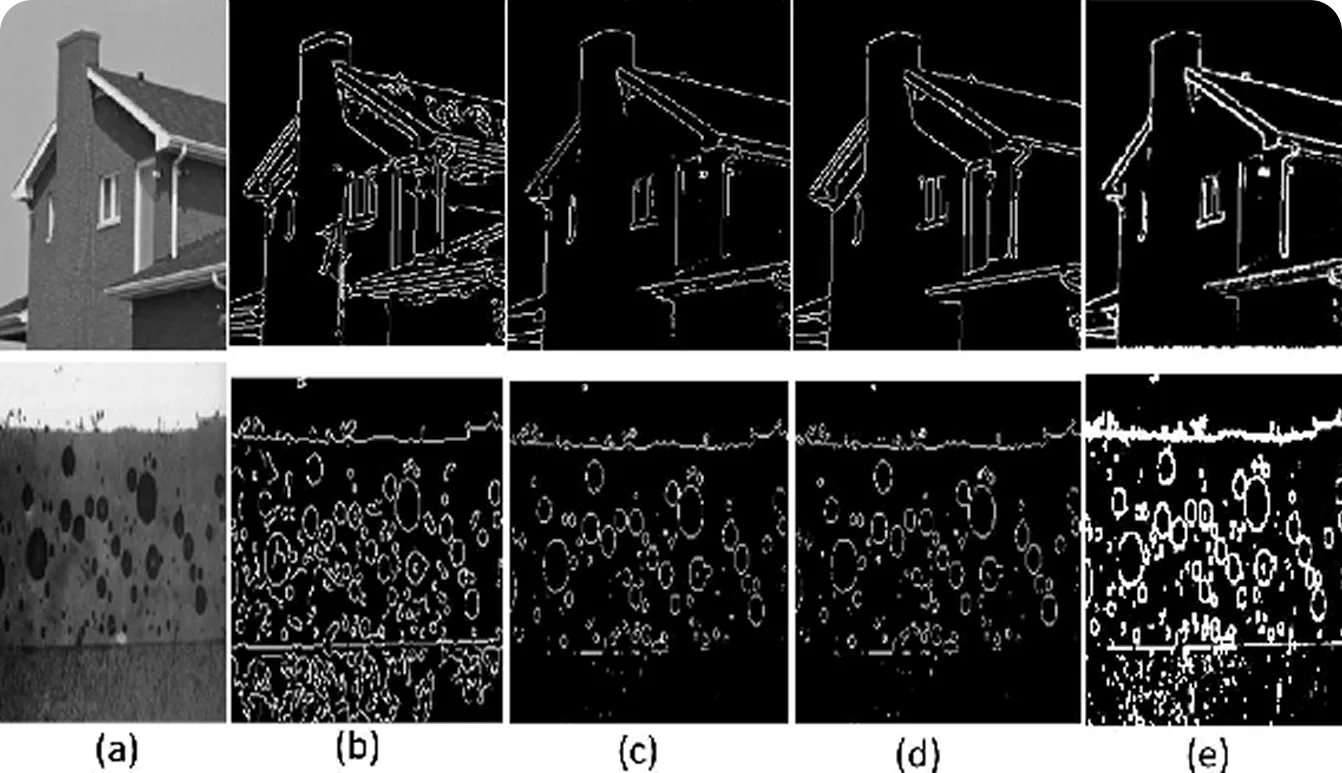

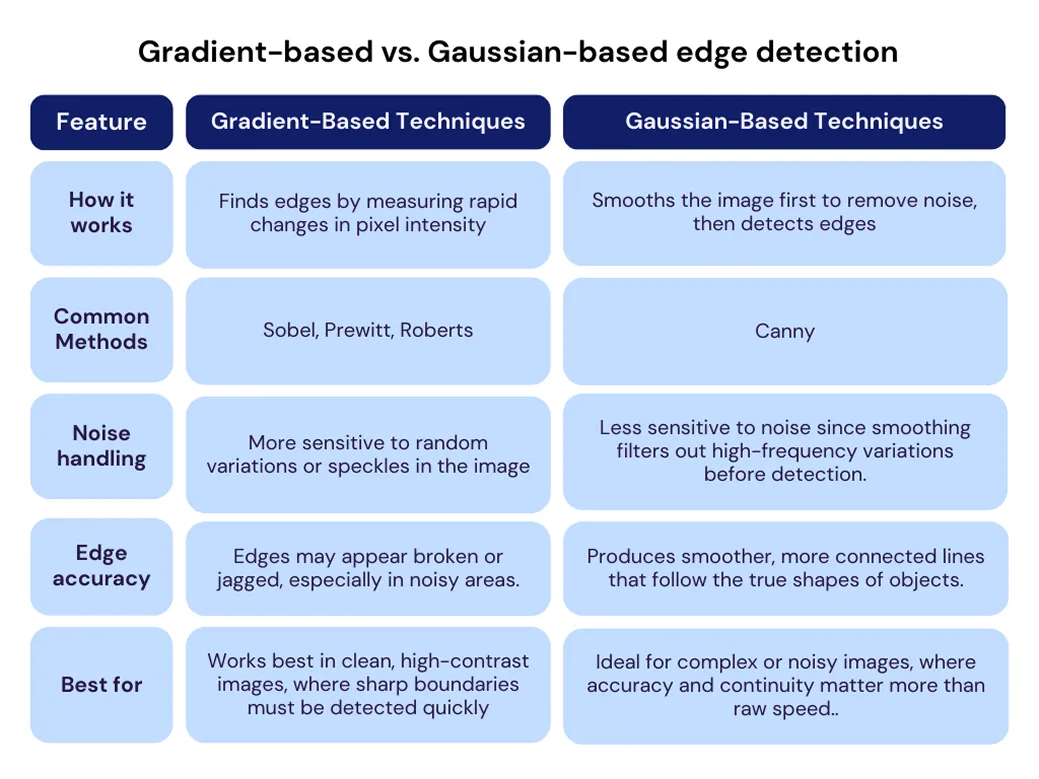

So far, the two examples of edge detection we looked at are Sobel and Canny. While both aim to find edges, they approach the problem differently.

Gradient-based methods (like Sobel, Prewitt, and Scharr) detect edges by looking for sharp changes in brightness, known as the gradient. They scan the image and mark places where this change is strongest. These methods are simple, fast, and work well when images are clear. However, they are sensitive to noise - tiny variations in brightness can be mistaken for edges.

Gaussian-based methods (like Canny or Laplacian of Gaussian) add an extra step to handle this problem: blurring the image first. This smoothing, often done with a Gaussian filter, reduces small variations that could create false edges. After smoothing, these methods still look for sharp brightness changes, but the results are cleaner and more accurate for noisy or low-quality images.

With a better understanding of how edge detection works, let’s explore how it’s applied in real-world situations.

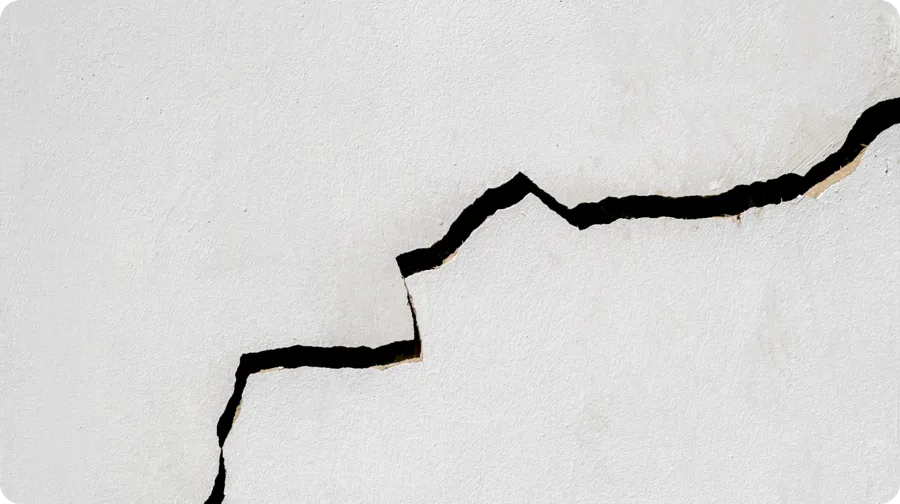

Inspecting large concrete structures, such as bridges and high-rise buildings, is often a challenging and hazardous task. These structures can span long distances or reach high elevations, making traditional inspections slow, expensive, and risky. These inspections also typically require scaffolding, rope access, manual close-up measurements, or photography.

An interesting approach was explored in 2019, when researchers tested a safer and faster method using drones equipped with high-resolution cameras to capture detailed input images of concrete surfaces. These images were then processed with various edge detection techniques to automatically identify cracks.

The study showed that this method significantly reduced the need for direct human access to hazardous areas and sped up inspections. However, its accuracy still depended on factors such as lighting conditions, image clarity, and stable drone operation. In some cases, human review was still needed to eliminate false positives.

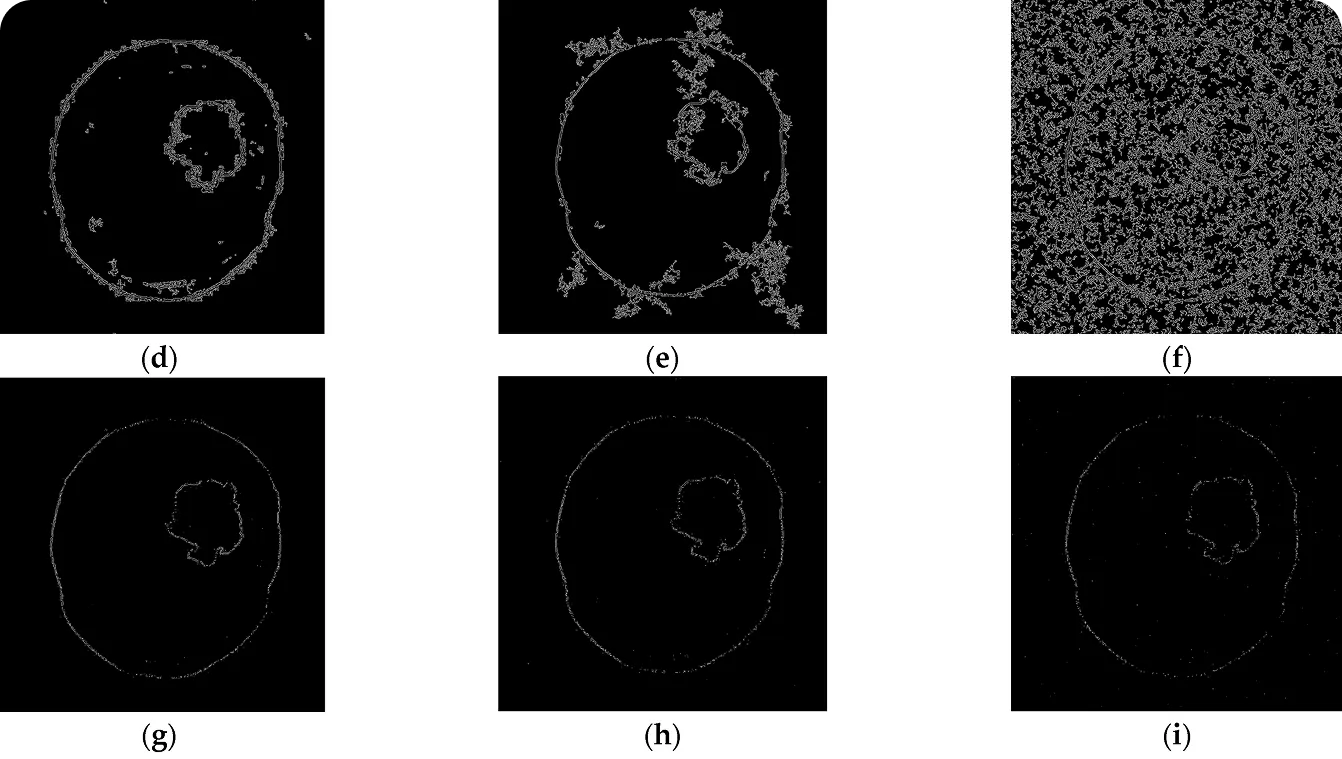

X-rays and MRIs often contain visual disturbances known as noise, which can make fine details harder to see. This becomes a challenge for doctors when trying to spot the edges of a tumor, trace the outline of an organ, or monitor subtle changes over time.

A recent medical imaging study tested how well common edge detection methods, like Sobel, Canny, Prewitt, and Laplacian, handle noisy images. The researchers added different types and levels of noise to images and checked how accurately each method could outline important features.

Canny usually produced the cleanest edges, even when noise was heavy, but it wasn’t the best in every case. Some methods worked better with certain noise patterns, so there’s no single perfect solution.

This highlights why technologies like computer vision are so important. By combining advanced algorithms and Vision AI models, such solutions can go beyond basic edge detection to deliver more accurate, reliable results even in challenging conditions.

Here are some of the benefits of using edge detection and image processing:

While there are many benefits to edge detection in image processing, it also comes with a few challenges. Here are some of the key limitations to consider:

Edge detection is inspired by how our eyes and brain work together to make sense of the world. When it comes to human vision, specialized neurons in the visual cortex are highly sensitive to edges, lines, and boundaries.

These visual cues help us quickly determine where one object ends and another begins. This is why even a simple line drawing can be instantly recognizable - our brain relies heavily on edges to identify shapes and objects.

Computer vision aims to mimic this ability but takes it a step further. Models like Ultralytics YOLO11 go beyond basic edge highlighting and image enhancement. They can detect objects, outline them with precision, and track movement in real time. This deeper level of understanding makes them essential in scenarios where edge detection alone isn’t enough.

Here are some key computer vision tasks supported by YOLO11 that build on and go beyond edge detection:

A good example of computer vision enhancing an application that traditionally relied on edge detection is crack detection in infrastructure and industrial assets. Computer vision models like YOLO11 can be trained to accurately identify cracks on roads, bridges, and pipelines. The same technique can also be applied in aircraft maintenance, building inspections, and manufacturing quality control, helping speed up inspections and improve safety.

Edge detection has come a long way, from simple early methods to advanced techniques that can spot even subtle boundaries in complex images. It helps bring out important details, highlight key areas, and prepare images for deeper analysis, making it a core part of image processing.

In computer vision, edge detection plays an important role in defining shapes, separating objects, and extracting useful information. It is used in many areas such as medical imaging, industrial inspections, autonomous driving, and security to deliver accurate and reliable visual understanding.

Join our community and explore our GitHub repository to discover more about AI. If you're looking to start your own Vision AI project, check out our licensing options. Discover more about applications like AI in healthcare and Vision AI in retail by visiting our solutions pages.