See how ensemble learning boosts AI model performance through techniques like bagging, boosting, and stacking to deliver more accurate, stable predictions.

See how ensemble learning boosts AI model performance through techniques like bagging, boosting, and stacking to deliver more accurate, stable predictions.

For a visual walkthrough of the concepts covered in this article, watch the video below.

AI innovations like recommendation engines and fraud detection systems rely on machine learning algorithms and models to make predictions and decisions based on data. These models can identify patterns, forecast trends, and help automate complex tasks.

However, a single model can struggle to capture all the details in real-world data. It might perform well in some cases but fall short in others, such as a fraud detection model missing new types of transactions.

This limitation is something AI engineers often face when building and deploying machine learning models. Some models overfit by learning the training data too closely, while others underfit by missing important patterns. Ensemble learning is an AI technique that helps address these challenges by combining multiple models, known as base learners, into a single, more powerful system.

You can think of it like a team of experts working together to solve a problem. In this article, we’ll explore what ensemble learning is, how it works, and where it can be used. Let’s get started!

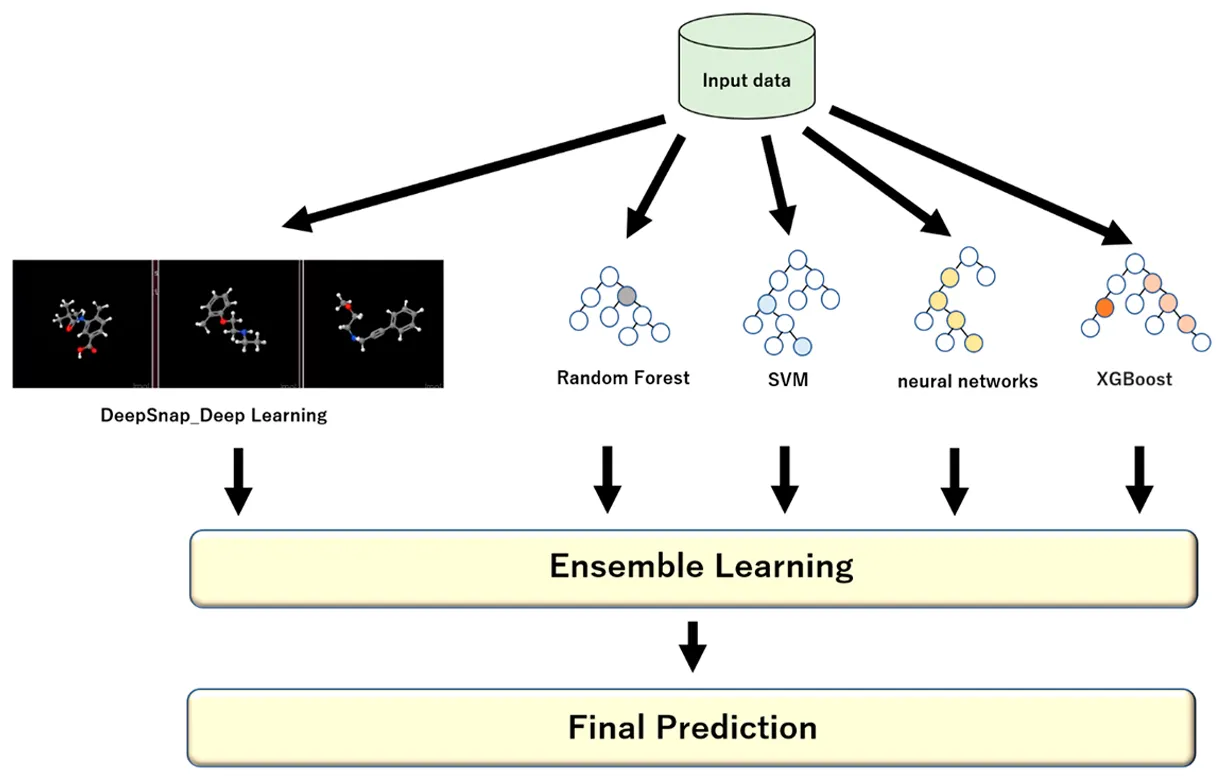

Ensemble learning refers to a set of techniques that combine multiple models to solve the same problem and produce a single, improved result. It can be applied in both supervised learning (where models learn from labeled data) and unsupervised learning (where models find patterns in unlabeled data).

Instead of relying on one model to make predictions, an ensemble uses several models that each look at the data in their own way. When their outputs are combined, the result is often more accurate, stable, and generalizable than what any single model could achieve on its own.

You can compare it to a panel of analysts addressing the same problem. Each analyst or individual model interprets the data differently.

One may focus on patterns, another on anomalies, and another on context. By bringing their perspectives together, the group can make a decision that is more balanced and reliable than any individual judgment.

This approach also helps address two of the biggest challenges in machine learning: bias and variance. A model with high bias is too simple and overlooks important patterns, while one with high variance is overly sensitive and fits the training data too closely. By combining models, ensemble learning finds a balance between the two, improving how well the system performs on new, unseen data.

Each model in an ensemble is known as a base learner or base model. These can either be the same type of algorithm or a mix of different algorithms, depending on the ensemble technique being used.

Here are some common examples of the different models used in ensemble learning:

A combined model ensemble is generally called a strong learner because it integrates the strengths of the base learners (also referred to as weak models) while minimizing their weaknesses. It does so by combining the predictions of each model in a structured way, using majority voting for classification tasks or weighted averaging for regression tasks to produce a more accurate final result.

Before we dive into various ensemble learning techniques, let’s take a step back and understand when this type of approach should be used in a machine learning or AI project.

Ensemble learning is most impactful when a single model struggles to make accurate or consistent predictions. It can also be used in situations where data is complex, noisy, or unpredictable.

Here are some common cases where ensemble methods are particularly effective:

It is also simpler to train, easier to interpret, and faster to maintain. Before using an ensemble, it is important to weigh the benefit of higher accuracy against the additional time, computing power, and complexity it requires.

Next, let’s look at the main ways ensemble learning can be applied in machine learning projects. There are several core techniques used to combine models, each improving performance in its own way. The most common ensemble methods are bagging, boosting, stacking, and blending.

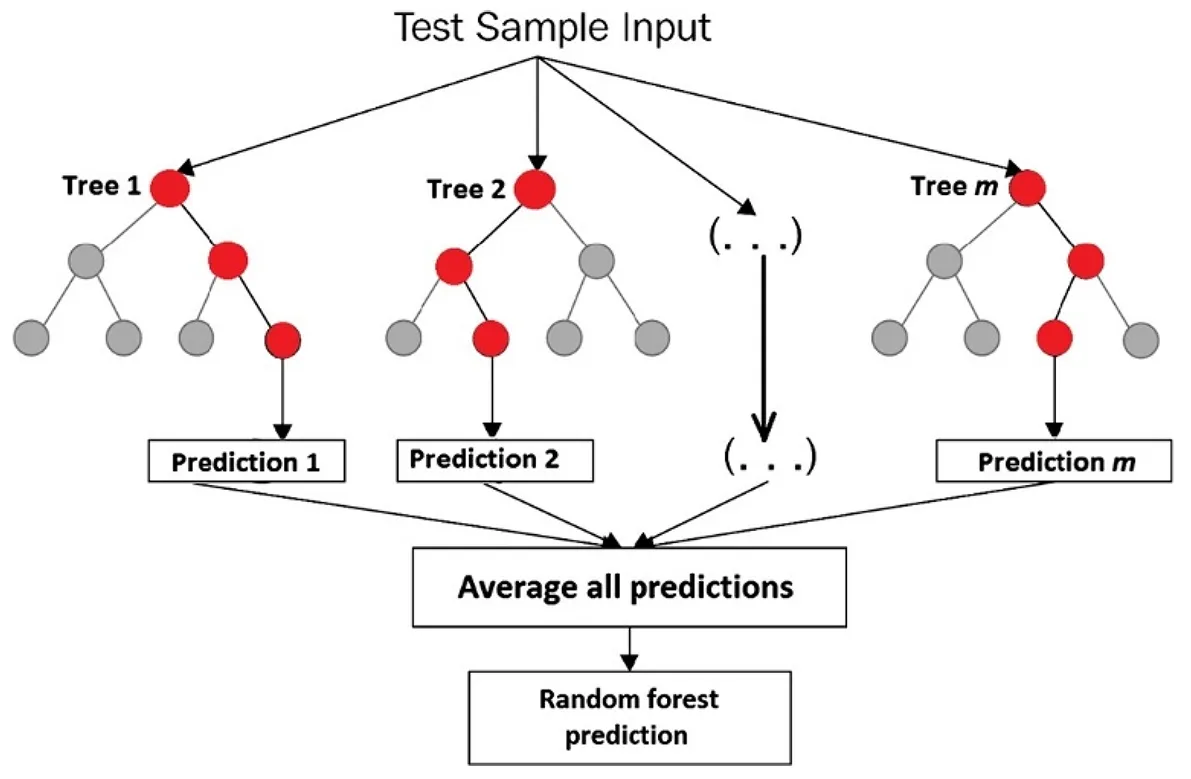

Bagging, short for bootstrap aggregating, is an ensemble learning method that helps improve model stability and accuracy by training multiple versions of the same model on different parts of the data.

Each subset is created using a process called bootstrap sampling, where data points are randomly selected with replacement. This means that after a data point is chosen, it is put back into the pool before the next one is picked, so the same point can appear more than once, while others might be left out. This randomness ensures that each model trains on a slightly different version of the dataset.

During inference, all the trained models run in parallel to make predictions on new, unseen data. Each model produces its own output based on what it learned, and these individual predictions are then combined to form the final result.

For regression tasks, such as predicting house prices or sales forecasts, this usually means averaging the outputs of all models to get a smoother estimate. For classification tasks, like identifying whether a transaction is fraudulent or not, the ensemble often takes a majority vote to decide the final class.

A good example of where bagging works well is with decision trees, which can easily overfit when trained on a single dataset. By training many trees on slightly different samples and combining their results, bagging reduces overfitting and improves reliability.

Consider the Random Forest algorithm. It is an ensemble of decision trees, where each tree is trained on a random subset of the training dataset as well as a random subset of features.

This feature randomness helps ensure that the trees are less correlated and that the overall model is more stable and accurate. A Random Forest algorithm can be used to classify images, detect fraud, predict customer churn, forecast sales, or estimate property prices.

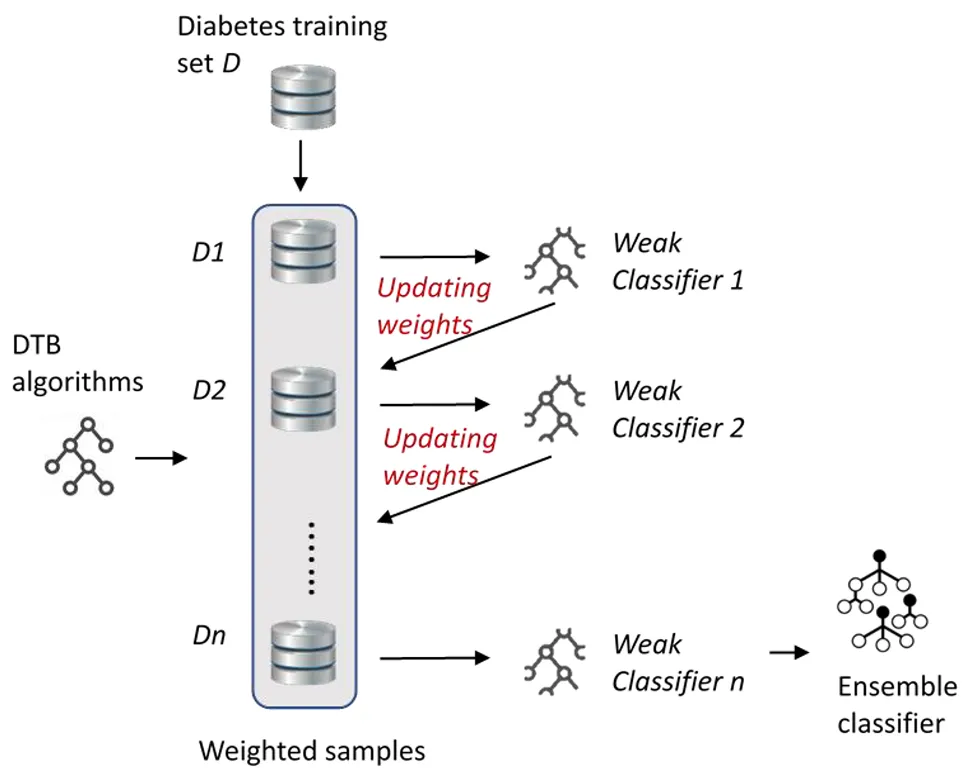

Boosting is another ensemble learning technique that focuses on improving weak learners (models) by training them sequentially, one after another, instead of in parallel. The core concept of boosting is that each new model learns from the mistakes of the previous ones, gradually improving overall model performance.

Unlike bagging, which reduces variance by averaging independent models, boosting reduces bias by making each new model pay more attention to difficult cases that the earlier models struggled with.

Since boosting models are trained sequentially, the way their predictions are combined at the end differs slightly from other ensemble methods. Each model contributes to the final prediction in proportion to its performance during training, with more accurate models receiving greater weight.

For regression tasks, the final result is usually a weighted sum of all the model predictions. For classification tasks, the algorithm combines the weighted votes from the models to decide the final class. This approach helps boosting create a strong overall model by giving more weight to the models that are more accurate while still learning from the others.

Here are some common types of boosting algorithms:

Stacking, also called stacked generalization, takes things a step further by using the predictions from several models as input for a final model known as a meta learner. You can think of it like having a group of experts who each share their opinion, and then a final decision maker learns how to weigh those opinions to make the best possible call.

For example, one model might be great at spotting fraud while another is better at predicting customer churn. The meta learner studies how each performs and uses their strengths together to make a more accurate final prediction.

Blending works in a similar way to stacking because it also combines the predictions from several models to make a final decision, but it takes a simpler and faster approach. Instead of using cross-validation (a method that splits the data into several parts and rotates them between training and testing to make the model more reliable), like stacking does, blending sets aside a small portion of the data, called a holdout set.

The base models are trained on the remaining data and then make predictions on the holdout set, which they have not seen before. This produces two key pieces of information: the actual answers, or true labels, and the predictions made by each base model.

These predictions are then passed to another model called the blending model or meta model. This final model studies how accurate each base model’s predictions are and learns how to combine them in the best possible way.

Because blending relies on just one train-and-test split instead of repeating the process several times, it runs faster and is easier to set up. The trade-off is that it has slightly less information to learn from, which can make it a bit less precise.

An important part of ensemble learning is evaluating how well a model performs on data it has not seen before. No matter how advanced a technique is, it must be tested to ensure it can generalize, meaning it should make accurate predictions on new, real-world examples rather than just memorizing the training data.

Here are some common performance metrics used to evaluate AI models:

So far, we’ve explored how ensemble learning works and the techniques behind it. Now let’s look at where this approach is making an impact.

Here are some key areas where ensemble learning is commonly applied:

While ensemble learning is most commonly used with structured or tabular data, like spreadsheets containing numerical or categorical information, it can also be applied to unstructured data such as text, images, audio, and video.

These data types are more complex and harder for models to interpret, but ensemble methods help improve accuracy and reliability. For instance, in computer vision, ensembles can enhance tasks like image classification and object detection.

By combining the predictions of multiple vision models, such as convolutional neural networks (CNNs), the system can recognize objects more accurately and handle variations in lighting, angle, or background that might confuse a single model.

An interesting example of using ensemble learning in computer vision is when an engineer combines multiple object detection models to improve accuracy. Imagine an engineer working on a safety monitoring system for a construction site, where lighting, angles, and object sizes constantly change.

A single model might miss a worker in the shadows or confuse machinery in motion. By using an ensemble of models, each with different strengths, the system becomes more reliable and less likely to make those errors.

In particular, models like Ultralytics YOLOv5 go hand in hand with model ensembling. Engineers can combine different YOLOv5 variants, such as YOLOv5x and YOLOv5l6, to make predictions together. Each model analyzes the same image and produces its own detections, which are then averaged to generate a stronger and more accurate final result.

Here are some key benefits of using ensemble learning:

While ensemble learning brings various advantages to the table, there are also some challenges to consider. Here are a few factors to keep in mind:

Ensemble learning shows how combining multiple models can make AI systems more accurate and reliable. It helps reduce errors and improve performance across different kinds of tasks. As machine learning and AI continue to grow, techniques like this are driving wider adoption and more practical, high-performing AI solutions.

Join our growing community and GitHub repository to find out more about Vision AI. Explore our solutions pages to learn about applications of computer vision in agriculture and AI in logistics. Check out our licensing options to get started with your own computer vision model today!