Learn how to train an AI model step-by-step with this quick guide for beginners. Discover essential workflows, datasets, and tools to get started.

Learn how to train an AI model step-by-step with this quick guide for beginners. Discover essential workflows, datasets, and tools to get started.

ChatGPT, image generators, and other artificial intelligence (AI) tools are becoming an integral part of daily life in schools, workplaces, and even on our personal devices. But have you ever wondered how they actually work?

At the heart of these systems is a process called training, where an AI model learns from large amounts of data to recognize patterns and make decisions. For years, training an AI model was a very complicated process, and although it remains complex, it has become far more approachable.

It required powerful computers that could process huge amounts of data, along with specialized datasets that had to be collected and labeled by experts. Setting up the right environment, installing frameworks, and running experiments was time-consuming, costly, and complex.

Today, open-source tools, easy-to-use platforms, and accessible datasets have made this process much simpler. Students, engineers, AI enthusiasts, data scientists, and even beginners can now experiment with model training without needing advanced hardware or deep expertise.

In this article, we’ll walk through the steps of how to train an AI model, explain each stage of the process, and share best practices. Let’s get started!

Training an AI model involves teaching a computer system to learn from examples, rather than providing it with a list of rules to follow. Instead of saying “if this, then that,” we show it lots of data and let it figure out patterns on its own.

At the core of this process are three key components working together: the dataset, the algorithm, and the training process. The dataset is the information the model studies.

The algorithm is the method that helps it learn from the data, and the training process is how it continually practices, makes predictions, identifies mistakes, and improves each time.

An important part of this process is the use of training and validation data. Training data helps the model learn patterns, while validation data, a separate portion of the dataset, is used to test how well the model is learning. Validation ensures the model isn’t just memorizing examples but can make reliable predictions on new, unseen data.

For instance, a model trained on house prices might use details such as location, size, number of rooms, and neighborhood trends to predict property values. The model studies historical data, identifies patterns, and learns how these factors influence price.

Similarly, a computer vision model might be trained on thousands of labeled images to distinguish cats from dogs. Each image teaches the model to recognize shapes, textures, and features, like ears, fur patterns, or tails, that set one apart from the other. In both cases, the model learns by analyzing training data, validating its performance on unseen examples, and refining its predictions over time.

Let’s take a closer look at how model training actually works.

When a trained AI model is used to make predictions, it takes in new data, like an image, a sentence, or a set of numbers, and produces an output based on what it has already learned. This is referred to as inference, which simply means the model is applying what it learned during training to make decisions or predictions on new information.

However, before a model can perform inference effectively, it first needs to be trained. Training is the process by which the model learns from examples so it can recognize patterns and make accurate predictions later.

During training, we feed the model labeled examples. For instance, an image of a cat with the correct label “cat.” The model processes the input and generates a prediction. Its output is then compared to the correct label, and the difference between the two is calculated using a loss function. The loss value represents the model’s prediction error or how far off its output is from the desired result.

To reduce this error, the model relies on an optimizer, such as stochastic gradient descent (SGD) or Adam. The optimizer adjusts the model’s internal parameters, known as weights, in the direction that minimizes the loss. These weights determine how strongly the model responds to different features in the data.

This process, making predictions, calculating the loss, updating the weights, and repeating, occurs over many iterations and epochs. With each cycle, the model refines its understanding of the data and gradually reduces its prediction error. When trained effectively, the loss eventually stabilizes, which often indicates that the model has learned the main patterns present in the training data.

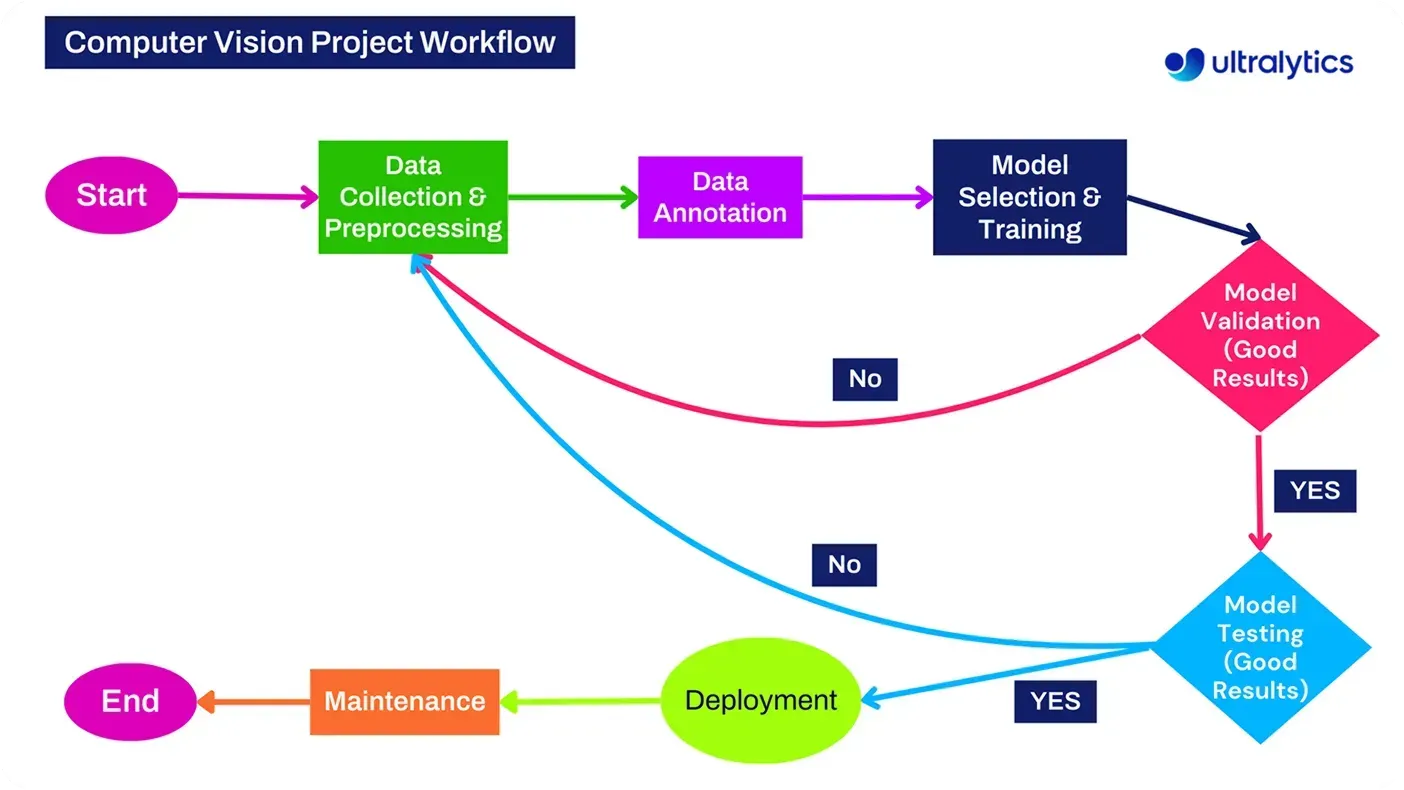

Training an AI model can seem complicated at first, but breaking it down into simple steps makes the process much easier to understand. Each stage builds on the previous one, helping you move from an idea to a working solution.

Next, we’ll explore the key steps beginners can focus on: defining the use case, collecting and preparing data, choosing a model and algorithm, setting up the environment, training, validating and testing, and finally deploying and iterating.

The first step in training an AI model is to clearly define the problem you want your AI solution to solve. Without a well-defined goal, the process can easily lose focus, and the model may not deliver meaningful results. A use case is simply a specific scenario where you expect the model to make predictions or classifications.

For example, in computer vision, a branch of AI that allows machines to interpret and understand visual information, a common task is object detection. This can be applied in various ways, such as identifying products on shelves, monitoring road traffic, or detecting defects in manufacturing.

Likewise, in finance and supply chain management, forecasting models help predict trends, demand, or future performance. Also, in natural language processing (NLP), text classification enables systems to sort emails, analyze customer feedback, or detect sentiment in reviews.

In general, when you start with a clear goal, it becomes much easier to choose the right dataset, the learning method, and the model that will work best.

Once you’ve defined your use case, the next step is to gather data. Training data is the foundation of every AI model, and the quality of this data directly impacts model performance. It’s essential to keep in mind that data is the backbone of model training, and an AI system is only as good as the data it learns from. Biases or gaps in that data will inevitably affect its predictions.

The type of data you collect depends on your use case. For instance, medical image analysis requires high-resolution scans, while sentiment analysis uses text from reviews or social media. This data can be sourced from open datasets shared by the research community, internal company databases, or through different collection methods such as scraping or sensor data.

After collection, the data can be preprocessed. This includes cleaning errors, standardizing formats, and labeling information so the algorithm can learn from it. Data cleaning or preprocessing ensures the dataset is accurate and reliable.

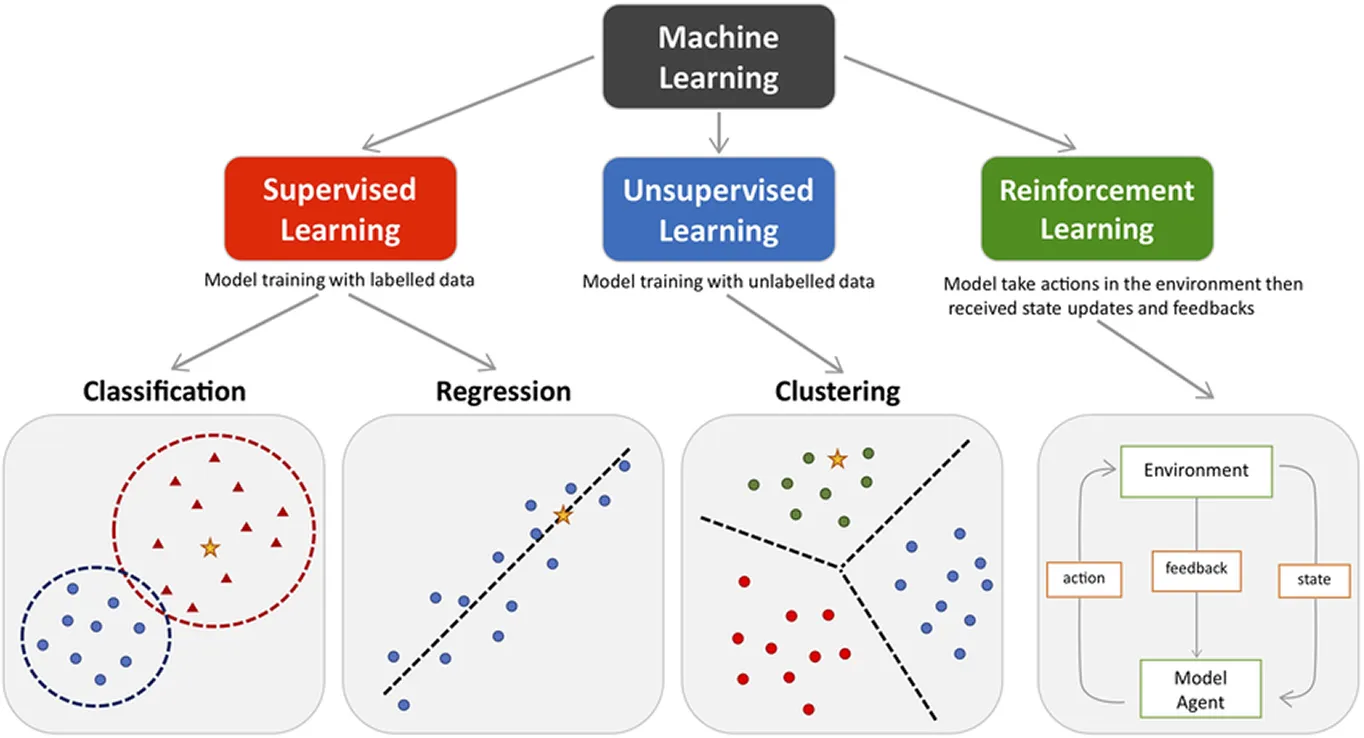

Once your data is ready, the next step is to choose the right model and learning method. Machine learning methods generally fall into three categories: supervised, unsupervised, and reinforcement learning.

In supervised learning, models learn from labeled data and are used for tasks such as price prediction, image recognition, or email classification. In contrast, unsupervised learning works with unlabeled data to find hidden patterns or groupings, such as clustering customers or discovering trends. Whereas reinforcement learning trains an agent through feedback and rewards, it is commonly used in robotics, games, and automation.

In practice, this step is closely tied to data collection because the kind of model you choose often depends on the data available, and the data you collect is usually shaped by the model’s requirements.

You can think of it as the classic chicken-and-egg question; which comes first depends on your application. Sometimes you already have data and want to find the best way to make use of it. Other times, you start with a problem to solve and need to collect or create new data to train your model effectively.

Let’s assume, in this case, you already have a dataset and want to choose the most suitable model for supervised learning. If your data is made up of numbers, you might train a regression model to predict outcomes such as prices, sales, or trends.

Similarly, if you are working with images, you might use a computer vision model like Ultralytics YOLO11 or Ultralytics YOLO26 that supports tasks like instance segmentation and object detection.

On the other hand, when your data is text, a language model might be the best choice. So how do you decide which learning method or algorithm to use? That depends on several factors, including the size and quality of your dataset, the complexity of the task, the computing resources available, and the level of accuracy you need.

To learn more about these factors and explore different AI concepts, check out the Guides section of our blog.

Setting up the right environment is an important step before training your AI model. The right setup helps ensure your experiments run smoothly and efficiently.

Here are the key aspects to consider:

Once your environment is ready, it’s time to start training. This is the stage where the model learns from your dataset by recognizing patterns and improving over time.

Training involves repeatedly showing the data to the model and adjusting its internal parameters until its predictions become more accurate. Each complete pass through the dataset is known as an epoch.

To improve performance, you can use optimization techniques such as hyperparameter tuning. Adjusting settings like the learning rate, batch size, or number of epochs can make a significant difference in how well your model learns.

Throughout training, it’s important to monitor progress using performance metrics. Metrics such as accuracy, precision, recall, and loss indicate whether the model is improving or needs adjustments. Most machine learning and AI libraries include dashboards and visual tools that make it easy to track these metrics in real time and identify potential issues early.

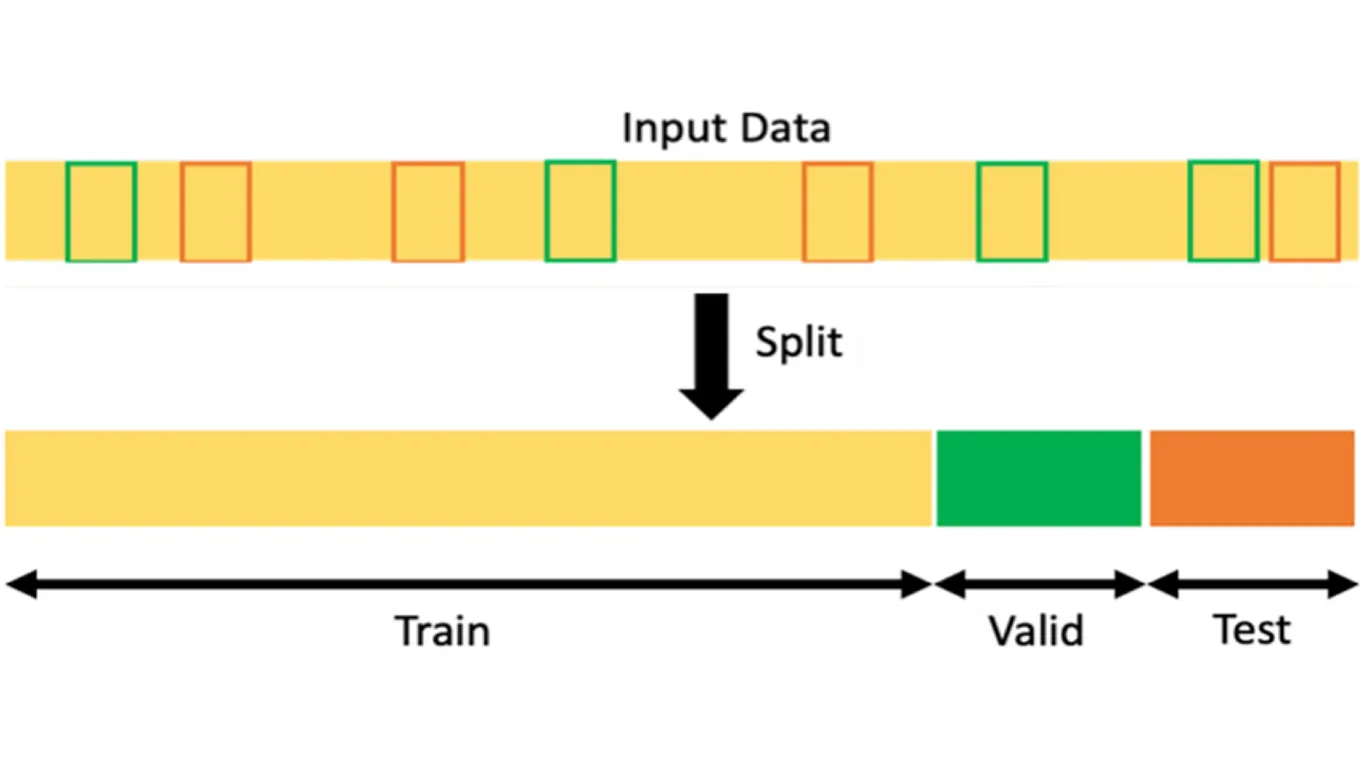

After you’ve trained your model, you can evaluate and validate it. This involves testing it on data it has not seen before to check if it can handle real-world scenarios. You might be wondering where this new data actually comes from.

In most cases, the dataset is divided before training into three parts: a training set, a validation set, and a test set. The training set teaches the model to recognize patterns in the data.

On the other hand, the validation set is used during training to fine-tune parameters and prevent overfitting (when a model learns the training data too closely and performs poorly on new, unseen data).

Conversely, the test set is used afterward to measure how well the model performs on completely unseen data. When a model performs consistently well across both the validation and test sets, it’s a strong indication that it has learned meaningful patterns rather than simply memorizing examples.

After a model has been validated and tested, it can be deployed for actual real-world use. This simply means putting the model into use so it can make predictions in the real world. For example, a trained model might be integrated into a website, an app, or a machine where it can process new data and give results automatically.

Models can be deployed in different ways depending on the application. Some models are shared through APIs, which are simple software connections that allow other applications to access the model’s predictions. Others are hosted on cloud platforms, where they can be easily scaled and managed online.

In some cases, models run on edge devices such as cameras or sensors. These models make predictions locally without relying on an internet connection. The best deployment method depends on the use case and available resources.

It’s also crucial to monitor and update the model regularly. Over time, new data or changing conditions can affect performance. Continuous evaluation, retraining, and optimization ensure the model stays accurate, reliable, and effective in real-world applications.

Training an AI model involves several steps, and following a few best practices can make the process smoother and the results more reliable. Let’s take a look at a few key practices that can help you build better, more accurate models.

Start by using balanced datasets so that all categories or classes are represented fairly. When one category appears much more often than others, the model can become biased and struggle to make accurate predictions.

Next, leverage techniques such as hyperparameter tuning, which involves adjusting settings like the learning rate or batch size to improve accuracy. Even small changes can have a big impact on how effectively the model learns.

Throughout training, monitor key performance metrics such as precision, recall, and loss. These values help you determine whether the model is learning meaningful patterns or simply memorizing the data.

Finally, always make it a habit to document your workflow. Keep track of the data you used, the experiments you ran, and the results you achieved. Clear documentation makes it easier to reproduce successful outcomes and continuously refine your training process over time.

AI is a technology that’s being widely adopted across different industries and applications. From text and images to sound and time-based data, the same core principles of using data, algorithms, and iterative learning apply everywhere.

Here are some of the key areas where AI models are trained and used:

Despite recent technological advancements, training an AI model still comes with certain challenges that can impact performance and reliability. Here are some key limitations to keep in mind as you build and refine your models:

Traditionally, training an AI model required large teams, powerful hardware, and complex infrastructure. Today, however, cutting-edge tools and platforms have made the process much simpler, faster, and more accessible.

These solutions reduce the need for deep technical expertise and make it possible for individuals, students, and businesses to build and deploy custom models with ease. In fact, getting started with AI training has never been easier.

For instance, the Ultralytics Python package is a great place to start. It provides everything you need to train, validate, and run inference with Ultralytics YOLO models, and to export them for deployment in various applications.

Other popular tools, such as Roboflow, TensorFlow, Hugging Face, and PyTorch Lightning, also simplify different parts of the AI training workflow, from data preparation to deployment. With these platforms, AI development has become more accessible than ever, empowering developers, businesses, and even beginners to experiment and innovate.

Training an AI model may seem complex, but with the right tools, data, and approach, anyone can get started today. By understanding each step, from defining your use case to deployment, you can turn ideas into real-world AI solutions that make a difference. As AI technology continues to evolve, the opportunities to learn, build, and innovate are more accessible than ever.

Join our growing community and explore our GitHub repository for hands-on AI resources. To build with Vision AI today, explore our licensing options. Learn how AI in agriculture is transforming farming and how Vision AI in robotics is shaping the future by visiting our solutions pages.