Meet Ultralytics YOLO26: A better, faster, smaller YOLO model

Explore the latest Ultralytics YOLO model, Ultralytics YOLO26, and its cutting-edge features that support an optimal balance of speed, accuracy, and deployability.

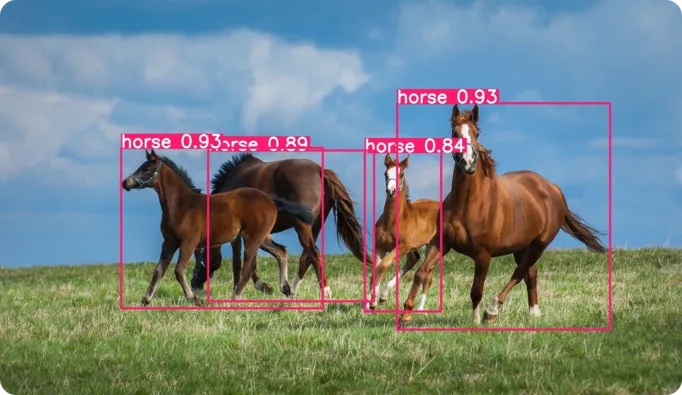

On September 25th, at our annual hybrid event, YOLO Vision 2025 (YV25) in London, Glenn Jocher, our Founder & CEO, officially announced the latest breakthrough in the Ultralytics YOLO model series, Ultralytics YOLO26! Our new computer vision model, YOLO26, can analyze and interpret images and video with a streamlined architecture that balances speed, accuracy, and ease of deployment.

While Ultralytics YOLO26 simplifies aspects of the model’s design and adds new enhancements, it also continues to offer the familiar features users expect from Ultralytics YOLO models. For example, Ultralytics YOLO26 is easy to use, supports a range of computer vision tasks, and provides flexible integration and deployment options.

This makes switching to Ultralytics YOLO26 hassle-free, and we can’t wait to see users try it for themselves when it becomes publicly available at the end of October.

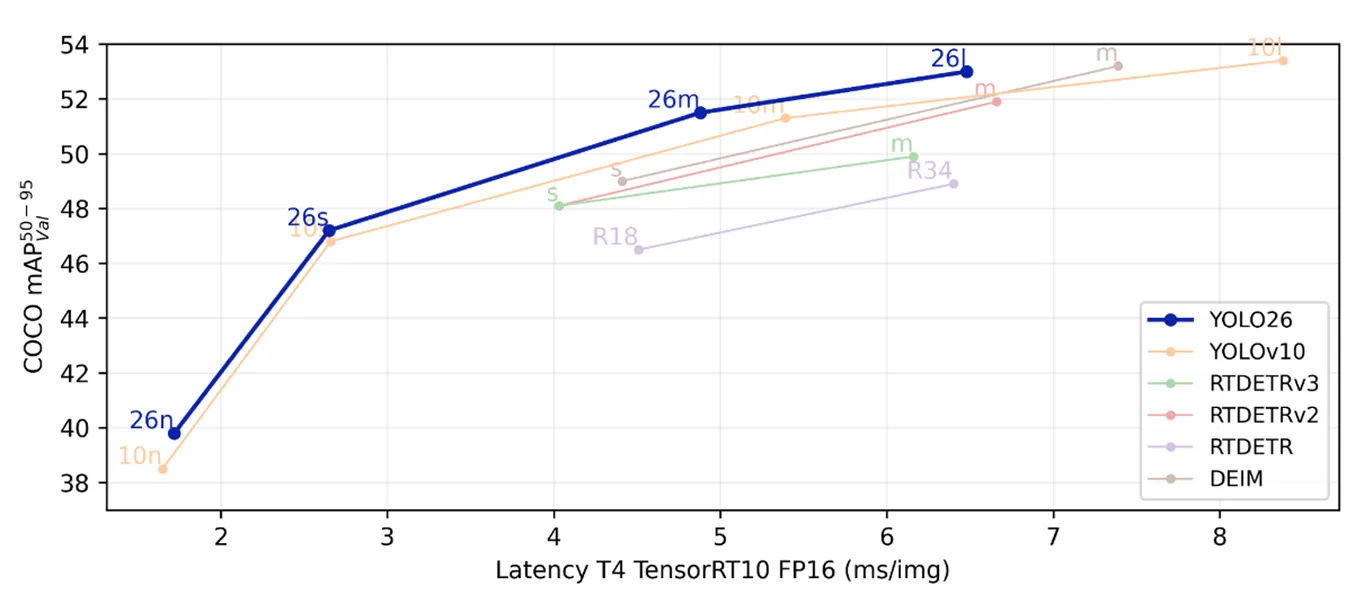

Simply put, Ultralytics YOLO26 is a better, faster, and smaller Vision AI model. In this article, we’ll explore the key features of Ultralytics YOLO26 and what it brings to the table. Let’s get started!

Before we dive into the key features of Ultralytics YOLO26 and the applications it makes possible, let’s take a step back and discuss the inspiration and motivation that drove the development of this model.

At Ultralytics, we’ve always believed in the power of innovation. From the very beginning, our mission has been twofold. On one hand, we want to make Vision AI accessible so anyone can use it without barriers. On the other hand, we are equally committed to keeping it at the cutting edge, pushing the boundaries of what computer vision models can achieve.

A key factor behind this mission is that the AI space is always evolving. For instance, edge AI, which involves running AI models directly on devices instead of relying on the cloud, is being adopted rapidly across industries.

From smart cameras to autonomous systems, devices at the edge are now expected to process information in real time. This shift demands models that are lighter and faster, while still delivering the same high level of accuracy.

That’s why there is a constant need to keep improving our Ultralytics YOLO models. As Glenn Jocher puts it, “One of the biggest challenges was making sure users can get the most out of YOLO26 while still delivering top performance.”

YOLO26 is available out of the box in five different model variants, giving you the flexibility to leverage its capabilities in applications of any scale.

All of these model variants support multiple computer vision tasks, just like previous Ultralytics YOLO models. This means that no matter which size you choose, you can rely on YOLO26 to deliver a wide range of capabilities, much like Ultralytics YOLO11.

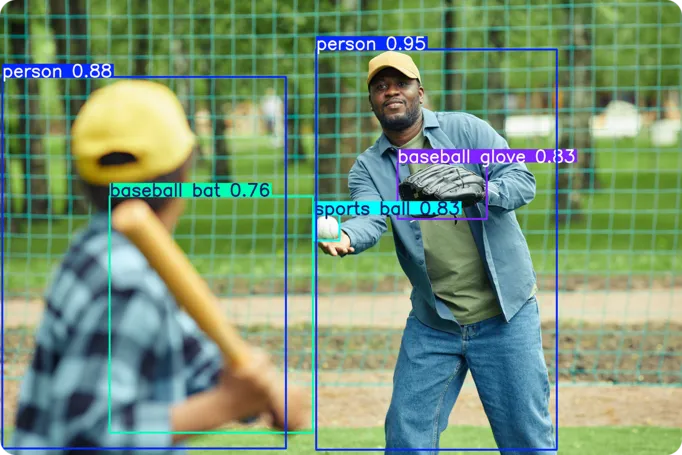

Like YOLO11, YOLO26 supports object detection, instance segmentation, image classification, pose estimation, and oriented bounding box (OBB) detection.

Now that we have a better understanding of what YOLO26 is capable of, let’s walk through some of the innovations in its architecture.

The model’s design has been streamlined by removing the Distribution Focal Loss (DFL) module, which previously slowed down inference and limited bounding box regression.
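For readers unfamiliar with DFL, the minimal sketch below contrasts the two decoding styles. In earlier Ultralytics detection heads (such as YOLOv8 and YOLO11), each box side is predicted as logits over a fixed number of bins and decoded as the softmax-weighted expected bin index. A DFL-free head instead regresses the four distances directly. The bin count of 16, the non-negative clamp, and all function names are illustrative assumptions; this is not YOLO26’s actual implementation.

```python
import math
from typing import List, Sequence

REG_MAX = 16  # assumed number of distribution bins per box side


def softmax(logits: Sequence[float]) -> List[float]:
    """Numerically stable softmax over a list of logits."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def dfl_decode_side(logits: Sequence[float]) -> float:
    """DFL-style decoding: the distance is the expected bin index
    under the softmax distribution over REG_MAX bins."""
    if len(logits) != REG_MAX:
        raise ValueError(f"expected {REG_MAX} logits, got {len(logits)}")
    probs = softmax(logits)
    return sum(i * p for i, p in enumerate(probs))


def dfl_decode_box(side_logits: Sequence[Sequence[float]]) -> List[float]:
    """Decode (left, top, right, bottom) distances from 4 x REG_MAX logits."""
    return [dfl_decode_side(side) for side in side_logits]


def direct_decode_box(raw: Sequence[float]) -> List[float]:
    """DFL-free decoding (sketch): the head outputs the 4 distances directly.
    Clamping to non-negative values is an assumption for this illustration."""
    return [max(0.0, r) for r in raw]


if __name__ == "__main__":
    # A distribution sharply peaked at bin 3 decodes to a distance close to 3.
    peaked = [10.0 if i == 3 else 0.0 for i in range(REG_MAX)]
    print([round(d, 2) for d in dfl_decode_box([peaked] * 4)])
    # The direct head needs 4 numbers per box instead of 4 x REG_MAX logits.
    print(direct_decode_box([3.0, 3.0, 3.0, 3.0]))
```

The direct path drops the per-side softmax and the extra bin dimension, which is the kind of simplification that can make a head cheaper to run and easier to export.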

The prediction process has also been simplified with an end-to-end (E2E) inference option, which lets the model skip the traditional Non-Maximum Suppression (NMS) step. This enhancement reduces complexity and lets the model deliver results more quickly, making deployment easier in real-world applications.
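The NMS step that the end-to-end option skips is, in its classic greedy form, a post-processing loop like the sketch below: sort candidate boxes by score, keep the best one, discard any remaining box whose intersection over union (IoU) with it exceeds a threshold, and repeat. A head trained to emit roughly one box per object can reduce post-processing to a simple confidence filter. This is a class-agnostic, plain-Python illustration with an assumed IoU threshold of 0.5, not the Ultralytics implementation.

```python
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def greedy_nms(boxes: Sequence[Box], scores: Sequence[float],
               iou_thresh: float = 0.5) -> List[int]:
    """Classic greedy NMS: keep the highest-scoring box, drop every remaining
    box overlapping it by more than iou_thresh, and repeat.
    Returns kept indices in descending score order."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep: List[int] = []
    while order:
        best = order.pop(0)
        keep.append(best)
        order = [i for i in order if iou(boxes[best], boxes[i]) <= iou_thresh]
    return keep


def confidence_filter(scores: Sequence[float], conf: float) -> List[int]:
    """End-to-end style post-processing (sketch): with no duplicate boxes
    expected, just keep predictions whose score clears the threshold."""
    return [i for i, s in enumerate(scores) if s >= conf]


if __name__ == "__main__":
    boxes = [(0.0, 0.0, 10.0, 10.0), (1.0, 1.0, 11.0, 11.0), (20.0, 20.0, 30.0, 30.0)]
    scores = [0.9, 0.8, 0.7]
    print(greedy_nms(boxes, scores))  # the near-duplicate second box is suppressed
```

Because each kept box is compared against the remaining candidates, this loop adds work that depends on how many raw predictions the model produces, which is why removing it can simplify and speed up deployment.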

Other improvements make the model smarter and more reliable. Progressive Loss Balancing (ProgLoss) helps stabilize training and improve accuracy, while Small-Target-Aware Label Assignment (STAL) helps the model detect small objects more effectively. On top of that, a new MuSGD optimizer improves training convergence and boosts overall performance.

In fact, the smallest version of YOLO26, the nano model, now runs up to 43% faster on standard CPUs, making it especially well-suited for mobile apps, smart cameras, and other edge devices where speed and efficiency are critical.

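In short, here’s what users can look forward to with YOLO26: a simpler, DFL-free architecture, an NMS-free end-to-end inference option, more stable training and better small-object detection from ProgLoss and STAL, improved convergence with the MuSGD optimizer, and up to 43% faster CPU inference for the nano model.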

Whether you are working on mobile apps, smart cameras, or enterprise systems, deploying YOLO26 is simple and flexible. The Ultralytics Python package supports a growing number of export formats, making it easy to integrate YOLO26 into existing workflows and run it on almost any platform.

Export options include TensorRT for maximum GPU acceleration, ONNX for broad compatibility, CoreML for native iOS apps, TFLite for Android and edge devices, and OpenVINO for optimized performance on Intel hardware. This flexibility makes it straightforward to take YOLO26 from development to production without extra hurdles.
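As a rough illustration of how these targets map onto the Ultralytics export API, the sketch below pairs each platform mentioned in this section with the `format` string that the Ultralytics `export` method accepts for it. The `EXPORT_FORMATS` table, the target names, and the `export_format_for` helper are hypothetical conveniences, and the YOLO26 weight file name in the usage comment is an assumption.

```python
"""Hypothetical helper mapping a deployment target to an Ultralytics export format."""

# Format strings accepted by `YOLO.export(format=...)` for the targets named in the text.
EXPORT_FORMATS = {
    "nvidia_gpu": "engine",  # TensorRT, for maximum GPU acceleration
    "generic": "onnx",       # ONNX, for broad runtime compatibility
    "ios": "coreml",         # CoreML, for native iOS apps
    "android": "tflite",     # TFLite, for Android and edge devices
    "intel": "openvino",     # OpenVINO, for optimized performance on Intel hardware
}


def export_format_for(target: str) -> str:
    """Return the export format string for a target, or raise ValueError."""
    try:
        return EXPORT_FORMATS[target]
    except KeyError:
        known = ", ".join(sorted(EXPORT_FORMATS))
        raise ValueError(f"unknown target {target!r}; expected one of: {known}") from None


# Usage with the Ultralytics package (not executed here; requires `pip install ultralytics`,
# and the YOLO26 weight file name "yolo26n.pt" is an assumption):
#   from ultralytics import YOLO
#   model = YOLO("yolo26n.pt")
#   model.export(format=export_format_for("android"))  # writes a TFLite model
```

Keeping the target-to-format choice in one table is just one way to let a single training pipeline produce artifacts for several devices.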

Another crucial part of deployment is making sure models run efficiently on devices with limited resources. This is where quantization comes in. Thanks to its simplified architecture, YOLO26 handles this exceptionally well. It supports INT8 deployment (representing the model’s numbers as 8-bit integers to reduce size and improve speed with minimal accuracy loss) as well as half-precision (FP16) for faster inference on supported hardware.
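To show what INT8 quantization does numerically, here is a minimal sketch of symmetric per-tensor quantization: values are divided by a scale chosen so the largest magnitude maps to 127, rounded to integers, and multiplied back by the scale when used. Production toolchains typically calibrate scales on representative data, so treat this as an illustration rather than what Ultralytics does internally. The function names are hypothetical, and the weight file name in the usage comment is an assumption.

```python
from typing import Dict, List, Sequence, Tuple


def quantize_int8(values: Sequence[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor INT8 quantization: map [-max|x|, max|x|] onto [-127, 127]."""
    max_abs = max((abs(v) for v in values), default=0.0)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    # Round to the nearest integer and clamp to the symmetric INT8 range.
    q = [max(-127, min(127, round(v / scale))) for v in values]
    return q, scale


def dequantize_int8(q: Sequence[int], scale: float) -> List[float]:
    """Recover approximate float values; the error per value is at most scale / 2."""
    return [x * scale for x in q]


BYTES_PER_VALUE: Dict[str, int] = {"fp32": 4, "fp16": 2, "int8": 1}


def storage_bytes(num_params: int, dtype: str) -> int:
    """Raw parameter storage: INT8 is 4x smaller than FP32, FP16 is 2x smaller."""
    return num_params * BYTES_PER_VALUE[dtype]


# Requesting these precisions through the Ultralytics package (not executed here;
# the weight file name "yolo26n.pt" is an assumption):
#   from ultralytics import YOLO
#   model = YOLO("yolo26n.pt")
#   model.export(format="engine", int8=True)  # INT8 TensorRT engine
#   model.export(format="engine", half=True)  # FP16 TensorRT engine

if __name__ == "__main__":
    weights = [0.5, -1.27, 0.0, 1.27, 0.333]
    q, scale = quantize_int8(weights)
    print(q, scale)
    print(dequantize_int8(q, scale))
```

The rounding error is bounded by half the scale, which is why a tensor with a tight value range quantizes with little accuracy loss.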

Most importantly, YOLO26 delivers consistent performance across these quantization levels, so you can rely on it whether it’s running on a powerful server or a compact edge device.

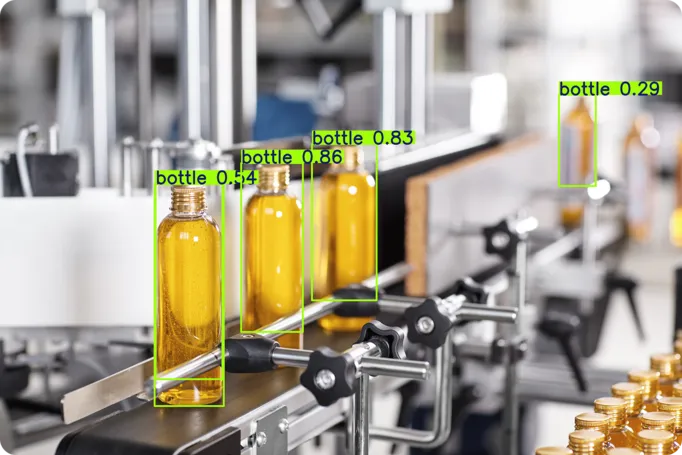

YOLO26 can be used in a wide variety of computer vision applications across many different industries and use cases. From robotics to manufacturing, it can make a significant impact by improving workflows and enabling faster, more accurate decision-making.

For instance, in robotics, YOLO26 can help robots interpret their surroundings in real time. This makes navigation smoother, object handling more precise, and collaboration with people safer.

Another example is manufacturing, where the model can be used for defect detection. It can automatically identify flaws on production lines more quickly and accurately than manual inspection.

In general, because YOLO26 is better, faster, and lighter, it adapts easily to a wide range of environments, from lightweight edge devices to large enterprise systems. This makes it a practical choice for industries looking to improve efficiency, accuracy, and reliability.

Ultralytics YOLO26 is a better, faster, and lighter computer vision model that remains easy to use while delivering strong performance. It works across a wide range of tasks and platforms and will be available to everyone by the end of October. We can’t wait to see how the community uses it to create new solutions and push the boundaries of computer vision.

Join our growing community! Explore our GitHub repository to learn more about AI. Discover innovations like computer vision in retail and AI in the automotive industry by visiting our solution pages. To start building with computer vision today, check out our licensing options.