Traffic video detection at nighttime: A look at why accuracy is key

From poor visibility to glare, learn what makes nighttime traffic video detection accuracy a challenge, and how computer vision improves safety and flow.


Roads appear different in the dark, not just to drivers, but also to the systems responsible for keeping them safe. Poor visibility, headlight glare, and fast-moving reflections can make nighttime vehicle detection more challenging.

Traditional methods, such as manual observation or motion-based cameras, mainly rely on human judgment and simple motion cues. This can be unreliable in low-light or complex traffic conditions. These methods may misidentify vehicles or pedestrians, leading to false detections or missed observations.

Artificial intelligence (AI), deep learning, and computer vision can bridge the gap by automating vehicle detection and recognition. Computer vision, in particular, is the branch of AI that enables machines to see and interpret visual data. For nighttime traffic video detection, Vision AI models can go beyond brightness or motion cues, learning to recognize complex patterns that improve accuracy.

For instance, computer vision models like Ultralytics YOLO26 and Ultralytics YOLO11 are known for their speed and precision. They can process video and image data in real time, detecting and classifying multiple objects within a frame and tracking them across sequences.

These capabilities come from vision tasks such as object detection and instance segmentation. These tasks let a model identify, classify, and separate individual objects, even when headlights, shadows, or overlapping vehicles make detection difficult.

In this article, we’ll explore the challenges of nighttime traffic monitoring and see how computer vision addresses them. We’ll also look at where Vision AI is being applied in real-world traffic monitoring systems. Let’s get started!

Before exploring how computer vision solves the challenges of nighttime traffic detection, let’s look at why spotting vehicles after dark is so difficult. Here are a few factors to consider:

Computer vision models are trained using large collections of images known as datasets. These datasets are carefully labeled with the objects they contain, such as cars, trucks, pedestrians, and bicycles, and serve as the foundation for training. By studying these labeled examples, the model learns to recognize patterns, enabling it to identify and detect objects in new video footage.
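Labels are stored in a fixed text format. As one illustration, the Ultralytics YOLO format stores one line per object: a class index, then the box center, width, and height, all normalized by the image size. The sketch below converts a pixel-space box into such a line. The class IDs and frame size are hypothetical.

```python
def to_yolo_label(class_id, box, img_w, img_h):
    """Convert a pixel box (x_min, y_min, x_max, y_max) into a YOLO-format label line.

    The line holds: class x_center y_center width height, each normalized to [0, 1].
    """
    x_min, y_min, x_max, y_max = box
    if not (0 <= x_min < x_max <= img_w and 0 <= y_min < y_max <= img_h):
        raise ValueError("box must lie inside the image and have positive size")
    x_c = (x_min + x_max) / 2 / img_w
    y_c = (y_min + y_max) / 2 / img_h
    w = (x_max - x_min) / img_w
    h = (y_max - y_min) / img_h
    return f"{class_id} {x_c:.6f} {y_c:.6f} {w:.6f} {h:.6f}"


# Hypothetical class IDs for a nighttime traffic dataset.
CLASSES = {"car": 0, "truck": 1, "pedestrian": 2, "bicycle": 3}

# A car occupying pixels (100, 200) to (300, 400) in a 1280x720 frame.
print(to_yolo_label(CLASSES["car"], (100, 200, 300, 400), 1280, 720))
# 0 0.156250 0.416667 0.156250 0.277778
```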

During training, the model extracts features from the data and uses them to improve detection accuracy. This process helps reduce missed detections and false alarms when the model is exposed to real-world traffic scenes.

Building datasets for nighttime conditions, however, is much more challenging. Poor video quality makes labeling time-consuming and error-prone. Rare but important events, such as accidents or unusual driving behavior, are also difficult to capture at night. As a result, models have only a limited number of training examples to learn from.

To address this issue, researchers have developed specialized benchmark datasets. A benchmark dataset is more than just a collection of images. It includes standardized labels, evaluation protocols, and performance metrics such as precision, recall, and mean average precision (mAP). These metrics make it possible to test and compare different algorithms under the same conditions, ensuring fair and consistent evaluation.
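To make these metrics concrete, here is a minimal sketch of how precision, recall, and average precision (AP) can be computed for a single object class. It rests on a few assumptions:

- Boxes are in (x_min, y_min, x_max, y_max) pixel format.
- A prediction counts as correct at an IoU threshold of 0.5.
- Predictions are greedily matched to the best still-unmatched ground-truth box.
- AP uses all-point interpolation of the precision-recall curve.

Official benchmarks define their own protocols (COCO, for example, averages AP over several IoU thresholds), so numbers from this sketch are not directly comparable to published results.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x_min, y_min, x_max, y_max)."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def average_precision(preds, gts, iou_thr=0.5):
    """AP for one class. preds: [(image_id, confidence, box)]; gts: {image_id: [box, ...]}."""
    n_gt = sum(len(boxes) for boxes in gts.values())
    if n_gt == 0:
        return 0.0
    matched = {img: [False] * len(boxes) for img, boxes in gts.items()}
    tp = fp = 0
    precisions, recalls = [], []
    # Visit predictions from most to least confident.
    for img, _, box in sorted(preds, key=lambda p: p[1], reverse=True):
        # Find the best-overlapping ground-truth box that is still unmatched.
        best_iou, best_j = 0.0, -1
        for j, gt in enumerate(gts.get(img, [])):
            overlap = iou(box, gt)
            if not matched[img][j] and overlap > best_iou:
                best_iou, best_j = overlap, j
        if best_j >= 0 and best_iou >= iou_thr:
            matched[img][best_j] = True
            tp += 1  # true positive
        else:
            fp += 1  # false positive (no match, or overlap too small)
        precisions.append(tp / (tp + fp))
        recalls.append(tp / n_gt)
    # All-point interpolation: replace each precision with the maximum precision at
    # the same or any later rank (equal or higher recall), then sum over recall steps.
    for i in range(len(precisions) - 2, -1, -1):
        precisions[i] = max(precisions[i], precisions[i + 1])
    ap, prev_recall = 0.0, 0.0
    for p, r in zip(precisions, recalls):
        ap += p * (r - prev_recall)
        prev_recall = r
    return ap


def mean_average_precision(per_class, iou_thr=0.5):
    """mAP as the mean of per-class AP. per_class: {class_name: (preds, gts)}."""
    aps = [average_precision(p, g, iou_thr) for p, g in per_class.values()]
    return sum(aps) / len(aps) if aps else 0.0


# Toy example: two ground-truth cars and three predictions, one of them a false positive.
gts = {"img1": [(0, 0, 10, 10)], "img2": [(20, 20, 40, 40)]}
preds = [
    ("img1", 0.9, (0, 0, 10, 10)),
    ("img2", 0.8, (50, 50, 60, 60)),
    ("img2", 0.7, (20, 20, 40, 40)),
]
print(mean_average_precision({"car": (preds, gts)}))
```

In the toy example, the final precision is 2/3 and recall is 1.0, and the AP works out to about 0.83. With more classes, mAP is simply the average of their APs.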

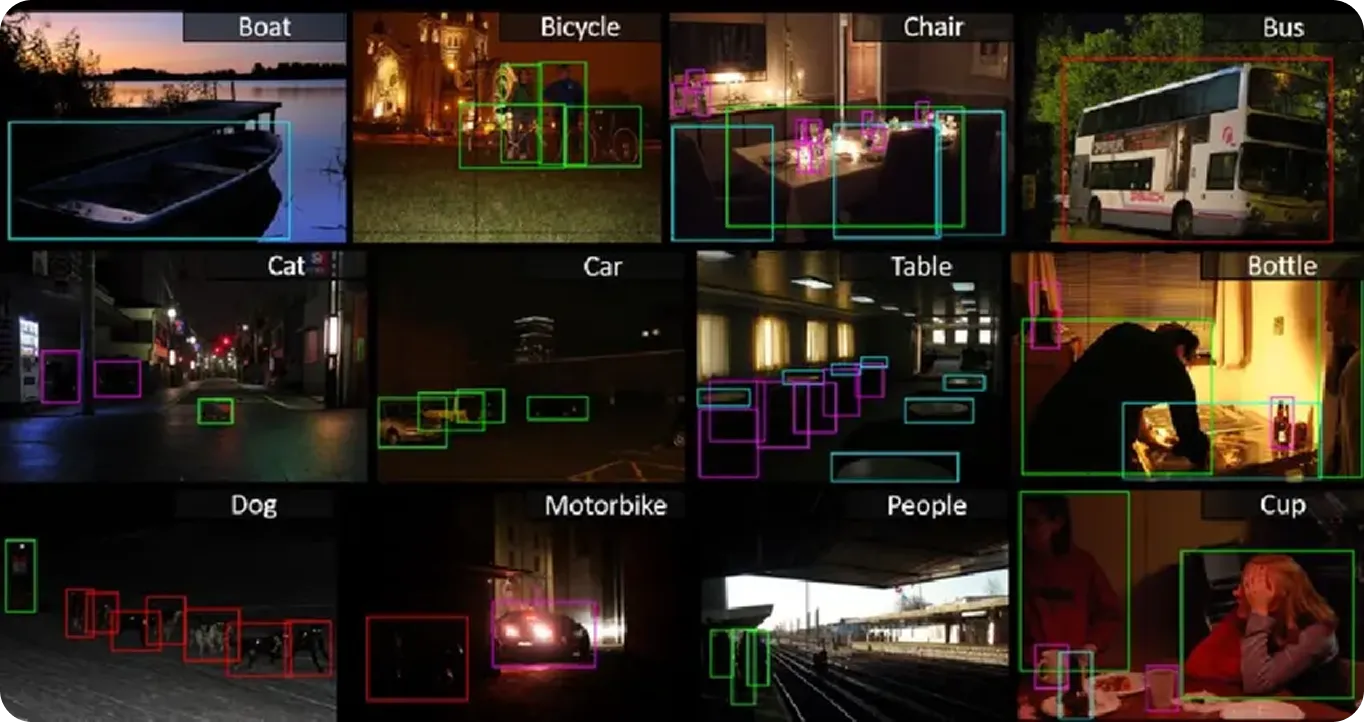

For example, the ExDark benchmark dataset contains 7,363 low-light images across 12 object categories (bike, boat, bottle, bus, car, cat, chair, cup, dog, motorbike, people, table). Each image is annotated with bounding boxes, and the dataset is widely used in research to evaluate object detection and classification in poor illumination.

Similarly, the NightOwls benchmark dataset provides around 115,000 nighttime images with approximately 279,000 pedestrian annotations. It has become a key resource for evaluating pedestrian detection systems, which play a vital role in road safety and advanced driver assistance systems (ADAS).

Now that we have a better understanding of the challenges involved and the need for datasets, let’s take a closer look at how vision-based systems can interpret nighttime traffic videos.

Models like YOLO11 and YOLO26 support computer vision tasks such as object detection, tracking, and instance segmentation, which make it possible to analyze traffic even under low-light conditions. Here’s an overview of the Vision AI tasks these models support for analyzing nighttime traffic:

We discussed model training, the need for datasets, and the tasks that models like YOLO11 and YOLO26 support. But to put it all together, there’s one more concept to walk through: how these models are actually applied to nighttime monitoring.

Out of the box, Ultralytics YOLO models are available as pre-trained versions, meaning they have already been trained on large, general-purpose datasets, such as the COCO dataset, which covers many everyday objects. This gives the model a strong baseline ability to detect and classify objects.

However, because these datasets contain relatively few low-light or nighttime examples, pre-trained models often struggle with glare, shadows, and poor contrast. To make them effective for nighttime traffic video detection, they need to be fine-tuned on specialized datasets that reflect real-world nighttime conditions.

Fine-tuning, or custom training, means further training the model on additional labeled images captured in low light, so it learns the distinctive features of nighttime scenes. Examples include vehicles under headlight glare, pedestrians in crosswalks, and crowded intersections at night.
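With Ultralytics YOLO, fine-tuning is typically driven by a small YAML file that points to the image folders and lists the class names. The helper below builds such a config as text. The dataset root, folder layout, and class list are hypothetical placeholders for a nighttime traffic dataset.

```python
def dataset_yaml_text(root, class_names, train="images/train", val="images/val"):
    """Build an Ultralytics-style dataset config (root, split folders, class names) as YAML text."""
    lines = [f"path: {root}", f"train: {train}", f"val: {val}", "names:"]
    # Class indices must match the IDs used in the label files.
    lines += [f"  {i}: {name}" for i, name in enumerate(class_names)]
    return "\n".join(lines) + "\n"


# Hypothetical dataset root and classes for nighttime traffic footage.
config = dataset_yaml_text("datasets/night-traffic", ["car", "truck", "pedestrian", "bicycle"])
print(config)
```

You could save the text to a file (for example, a hypothetical `night_traffic.yaml`) and pass it to `YOLO("yolo11n.pt").train(data="night_traffic.yaml", epochs=100, imgsz=640)` from the `ultralytics` package. The epoch count and image size here are placeholder values, not tuned recommendations.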

Once custom-trained, the model can support tasks like detection, tracking, segmentation, or classification with much greater accuracy in low-light conditions. This allows traffic authorities to apply such models for practical use cases such as vehicle counting, speed estimation, adaptive signal control, and accident prevention at night.
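Vehicle counting, for instance, is often built on top of tracking: once each vehicle has a persistent ID, it is counted when its centroid crosses a virtual line. The sketch below assumes a tracker has already produced tracks as lists of (x, y) centroids in image coordinates, where y grows downward. The counting-line position and the sample tracks are hypothetical.

```python
def count_line_crossings(tracks, line_y):
    """Count tracks whose centroid crosses a horizontal line at y = line_y.

    tracks: {track_id: [(x, y), ...]} in frame order, with y growing downward.
    Returns (downward, upward); each track counts at most once per direction.
    """
    down, up = set(), set()
    for track_id, points in tracks.items():
        for (_, y0), (_, y1) in zip(points, points[1:]):
            if y0 < line_y <= y1:
                down.add(track_id)  # moved from above the line to on/below it
            elif y1 < line_y <= y0:
                up.add(track_id)    # moved from on/below the line to above it
    return len(down), len(up)


# Hypothetical tracks: one vehicle heading down the frame, one heading up, one not crossing.
tracks = {
    1: [(100, 300), (102, 340), (104, 380)],
    2: [(500, 420), (498, 390), (497, 350)],
    3: [(800, 100), (805, 150)],
}
print(count_line_crossings(tracks, line_y=360))  # (1, 1)
```

Using sets means a vehicle that jitters back and forth across the line is still counted only once per direction.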

Next, let’s look at how computer vision can be adopted in real-world nighttime traffic systems.

Urban intersections are some of the hardest areas to manage, especially at night when visibility drops and traffic flow becomes less predictable. Traditional signals run on fixed timers that can’t respond to real-time changes. Research shows that this leads to avoidable delays for drivers and unnecessary fuel consumption.

This is where computer vision can help. Vision systems can monitor vehicle movements and count vehicles in real time, then feed that data into adaptive traffic control systems so signal timing follows actual road conditions. For example, if one lane is crowded while another is empty, green time can be shifted toward the busy lane to clear the bottleneck.
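One simple way to turn per-approach vehicle counts into signal timing is to split a fixed cycle’s green time in proportion to demand, with a guaranteed minimum for each approach. This is a hypothetical proportional rule for illustration, not a specific adaptive control algorithm. Real controllers also account for yellow and all-red intervals, pedestrian phases, and safety constraints, all of which are ignored here.

```python
def split_green_time(queue_counts, cycle_s=90.0, min_green_s=10.0):
    """Split one cycle's green time across approaches in proportion to detected vehicles.

    queue_counts: {approach_name: vehicles detected}. Every approach gets at least
    min_green_s seconds; the remaining time is shared by demand.
    """
    n = len(queue_counts)
    if n == 0:
        return {}
    if min_green_s * n > cycle_s:
        raise ValueError("cycle too short for the minimum green times")
    spare = cycle_s - min_green_s * n
    total = sum(queue_counts.values())
    green = {}
    for approach, count in queue_counts.items():
        # With no vehicles detected anywhere, fall back to an even split.
        share = count / total if total else 1.0 / n
        green[approach] = min_green_s + spare * share
    return green


# Hypothetical counts from a camera watching a four-way intersection at night.
print(split_green_time({"north": 12, "south": 4, "east": 0, "west": 4}))
```

Here the busy northbound approach gets about 40 seconds, while the empty eastbound approach keeps only its 10-second minimum.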

Driving at night is riskier than during the day because reduced visibility, uneven lighting, and glare make it harder for drivers to judge distances. In fact, studies show the fatal accident rate per kilometer at night can be up to three times higher than in daylight.

Conventional monitoring methods, such as fixed surveillance cameras, road patrols, vehicle presence sensors, and fixed-time signal systems, operate reactively and can fail to detect hazardous situations early enough to prevent them from occurring.

Computer vision solutions can address this by analyzing live video streams and detecting unusual patterns on roads and freeways. Using object tracking, these systems can monitor vehicle behavior in real time and raise alerts when something appears abnormal.
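At its simplest, tracking links detections across frames by how much their boxes overlap. The class below is a minimal greedy IoU tracker written for illustration. It is not the tracker Ultralytics ships (the `ultralytics` package offers ByteTrack and BoT-SORT), and it ignores the motion prediction and appearance cues that help when glare makes boxes jitter. The thresholds are hypothetical defaults.

```python
from itertools import count


def iou(a, b):
    """Intersection over union of two boxes given as (x_min, y_min, x_max, y_max)."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


class IouTracker:
    """Minimal greedy IoU tracker (illustrative only)."""

    def __init__(self, iou_thr=0.3, max_missed=5):
        self.iou_thr = iou_thr
        self.max_missed = max_missed
        self.tracks = {}  # track_id -> {"box": last box, "missed": frames without a match}
        self._next_id = count(1)

    def update(self, detections):
        """Associate one frame's boxes with existing tracks; return [(track_id, box), ...]."""
        # Score every (track, detection) pair and consider the highest overlaps first.
        pairs = sorted(
            ((iou(t["box"], d), tid, di)
             for tid, t in self.tracks.items()
             for di, d in enumerate(detections)),
            reverse=True,
        )
        used_tracks, used_dets, output = set(), set(), []
        for score, tid, di in pairs:
            if score < self.iou_thr:
                break  # all remaining pairs overlap even less
            if tid in used_tracks or di in used_dets:
                continue
            used_tracks.add(tid)
            used_dets.add(di)
            self.tracks[tid] = {"box": detections[di], "missed": 0}
            output.append((tid, detections[di]))
        # Age unmatched tracks and drop those missing for too many frames.
        for tid in list(self.tracks):
            if tid not in used_tracks:
                self.tracks[tid]["missed"] += 1
                if self.tracks[tid]["missed"] > self.max_missed:
                    del self.tracks[tid]
        # Any leftover detection starts a new track.
        for di, d in enumerate(detections):
            if di not in used_dets:
                tid = next(self._next_id)
                self.tracks[tid] = {"box": d, "missed": 0}
                output.append((tid, d))
        return output


tracker = IouTracker()
print(tracker.update([(0, 0, 10, 10), (100, 100, 120, 120)]))  # two new tracks
print(tracker.update([(102, 100, 122, 120), (1, 0, 11, 10)]))  # same IDs, boxes moved slightly
```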

For instance, if a vehicle is repeatedly switching lanes, moving too fast in a congested area, or slowing down suddenly, the system can flag it immediately. Authorities can then respond quickly and help prevent accidents before they happen.
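Given a track, speeding and sudden slowing can be flagged from how far the vehicle moves between frames. This sketch assumes a single meters-per-pixel scale for the whole road. That is a simplification; real deployments usually calibrate a perspective transform. The speed limit and the 6 m/s² braking threshold are hypothetical values.

```python
import math


def speeds_kmh(points_px, fps, meters_per_pixel):
    """Speed (km/h) between successive centroid positions of one track."""
    speeds = []
    for (x0, y0), (x1, y1) in zip(points_px, points_px[1:]):
        meters = math.hypot(x1 - x0, y1 - y0) * meters_per_pixel
        speeds.append(meters * fps * 3.6)  # m/frame * frames/s = m/s; * 3.6 gives km/h
    return speeds


def flag_behavior(points_px, fps, meters_per_pixel, speed_limit_kmh=50.0, max_decel_ms2=6.0):
    """Return (frame_index, reason) flags for speeding and hard braking.

    frame_index is the position in points_px where the flagged motion ends.
    """
    v = speeds_kmh(points_px, fps, meters_per_pixel)
    flags = [(i + 1, "speeding") for i, s in enumerate(v) if s > speed_limit_kmh]
    for i in range(1, len(v)):
        # Consecutive speed samples are 1/fps seconds apart.
        decel_ms2 = (v[i - 1] - v[i]) / 3.6 * fps
        if decel_ms2 > max_decel_ms2:
            flags.append((i + 1, "hard braking"))
    return flags


# Hypothetical track sampled at 10 fps, with 0.1 m per pixel.
track = [(0, 0), (15, 0), (30, 0), (44, 0)]
print(flag_behavior(track, fps=10, meters_per_pixel=0.1))
```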

For autonomous vehicles and ADAS, nighttime driving brings its own challenges. Low visibility and unpredictable traffic patterns make it harder for traditional sensors to perform reliably, which raises safety concerns.

Computer vision enhances these systems by handling tasks such as object detection, lane tracking, and segmentation, enabling vehicles to recognize pedestrians, other cars, and obstacles even in poor lighting conditions. When combined with radar or LiDAR (Light Detection and Ranging), which map the surroundings in 3D, the added visual layer helps ADAS provide early warnings and gives autonomous vehicles the awareness they need to navigate more safely at night.
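A common building block for such early warnings is time-to-collision (TTC): the current gap divided by the closing speed, assuming both objects keep a constant velocity. In the sketch below, the distance is assumed to come from a range sensor such as radar or LiDAR. The 2.5-second warning threshold is a hypothetical value.

```python
import math


def time_to_collision(distance_m, ego_speed_ms, lead_speed_ms):
    """Constant-velocity TTC in seconds; math.inf if the gap is not closing."""
    closing_speed = ego_speed_ms - lead_speed_ms
    if closing_speed <= 0:
        return math.inf
    return distance_m / closing_speed


def forward_collision_warning(distance_m, ego_speed_ms, lead_speed_ms, ttc_threshold_s=2.5):
    """True when the TTC drops below a (hypothetical) warning threshold."""
    return time_to_collision(distance_m, ego_speed_ms, lead_speed_ms) < ttc_threshold_s


# Ego car at 20 m/s (72 km/h); a pedestrian is detected 30 m ahead, not moving along the lane.
print(time_to_collision(30.0, 20.0, 0.0))          # 1.5
print(forward_collision_warning(30.0, 20.0, 0.0))  # True
```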

Speeding is responsible for one in three traffic fatalities worldwide, and the risk only gets worse at night. Darkness makes it harder for traffic police to catch violations, since details that are clear in daylight often blur after dark.

That’s why offenses like speeding on empty roads, running red lights, or drifting into the wrong lane often go unpunished. Computer vision systems paired with infrared cameras can spot these violations even in low-light conditions. They define detection zones in the camera view and record incidents only within those zones, which cuts down on false positives and provides clear, verifiable evidence. For transportation authorities, this means fewer blind spots and less reliance on manual checks.
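A detection zone can be as simple as a polygon in the camera view, checked with a point-in-polygon test. The sketch below flags red-light violations for tracked vehicles whose bottom-center point lies inside a zone just past the stop line while the signal is red. The zone coordinates, the detections, and the source of the signal state are hypothetical.

```python
def point_in_polygon(x, y, polygon):
    """Ray-casting test: True if (x, y) lies inside the polygon given as [(x, y), ...]."""
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        # Only edges that straddle the horizontal line through y can be crossed.
        if (y1 > y) != (y2 > y):
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


def red_light_violations(detections, zone, signal_is_red):
    """Track IDs whose bottom-center point is inside the zone while the light is red.

    detections: [(track_id, (x_min, y_min, x_max, y_max)), ...] for one frame.
    """
    if not signal_is_red:
        return []
    hits = []
    for track_id, (x0, y0, x1, y1) in detections:
        # The bottom-center of the box approximates where the vehicle touches the road.
        foot_x, foot_y = (x0 + x1) / 2, y1
        if point_in_polygon(foot_x, foot_y, zone):
            hits.append(track_id)
    return hits


# Hypothetical zone covering the intersection just past the stop line (pixel coordinates).
ZONE = [(400, 300), (900, 300), (950, 500), (350, 500)]
dets = [(7, (600, 350, 700, 450)), (8, (100, 350, 200, 450))]
print(red_light_violations(dets, ZONE, signal_is_red=True))  # [7]
```

Restricting checks to the zone is what keeps vehicles waiting behind the stop line (like track 8) from being recorded.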

Here are some advantages of using Vision AI for nighttime traffic video detection.

Despite its benefits, nighttime traffic detection using Vision AI also comes with certain limitations. Here are some factors to keep in mind:

As cities grow and roads get busier, nighttime traffic detection is moving toward more intelligent and responsive systems. For example, thermal cameras and infrared sensors can detect heat signatures from people and vehicles, making it possible to see even in complete darkness. This reduces errors that occur when standard cameras struggle with glare or low light.

Another growing approach is camera-LiDAR fusion. Cameras provide detailed images of the road, while LiDAR generates a precise 3D map. Used together, they improve the accuracy of detecting lanes, vehicles, and obstacles, particularly in low light or moderate fog.
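One common way to combine the two sensors is to project LiDAR points into the image and attach a depth to each camera detection. The sketch below makes several assumptions:

- The points have already been transformed into the camera’s coordinate frame (X right, Y down, Z forward).
- A pinhole model with hypothetical intrinsics `fx`, `fy`, `cx`, `cy` describes the camera.
- Lens distortion and time synchronization between the sensors are ignored.

```python
import statistics


def project_point(point_cam, fx, fy, cx, cy):
    """Pinhole projection of a 3D camera-frame point (X, Y, Z) to pixel (u, v).

    Returns None for points at or behind the camera plane.
    """
    X, Y, Z = point_cam
    if Z <= 0:
        return None
    return fx * X / Z + cx, fy * Y / Z + cy


def box_depth(box, lidar_points_cam, fx, fy, cx, cy):
    """Median depth (m) of LiDAR points whose projections fall inside a 2D box, or None."""
    x0, y0, x1, y1 = box
    depths = []
    for p in lidar_points_cam:
        uv = project_point(p, fx, fy, cx, cy)
        if uv is not None and x0 <= uv[0] <= x1 and y0 <= uv[1] <= y1:
            depths.append(p[2])
    # The median is less sensitive than the mean to stray points from the background.
    return statistics.median(depths) if depths else None


# Hypothetical intrinsics and a handful of LiDAR returns already in the camera frame.
points = [(0.5, 0.2, 20.0), (0.6, 0.1, 21.0), (0.4, 0.3, 19.0), (-5.0, 0.0, 10.0), (0.0, 0.0, -3.0)]
car_box = (600, 300, 700, 420)
print(box_depth(car_box, points, fx=1000, fy=1000, cx=640, cy=360))  # 20.0
```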

At the same time, advances in low-light image enhancement, pedestrian recognition, and license plate identification are expanding the capabilities of computer vision. With these improvements, even poorly lit roads and intersections can be monitored with greater reliability and fewer errors.
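Learned low-light enhancement methods are beyond a short example. Gamma correction, a classic baseline, shows the basic idea: brighten dark pixel values more than bright ones before running detection. The sketch works on a flat list of 8-bit grayscale values for simplicity, whereas real pipelines would apply it to full images. The gamma value of 0.5 is a hypothetical choice.

```python
def gamma_lut(gamma):
    """Lookup table for 8-bit gamma correction: out = 255 * (in / 255) ** gamma.

    gamma < 1 lifts dark values strongly while leaving bright values nearly unchanged.
    """
    return [round(255 * (i / 255) ** gamma) for i in range(256)]


def enhance(pixels, gamma=0.5):
    """Apply gamma correction to a sequence of 8-bit pixel values."""
    lut = gamma_lut(gamma)
    return [lut[p] for p in pixels]


# Dark, mid, and saturated values from a hypothetical nighttime frame.
print(enhance([0, 16, 64, 255], gamma=0.5))  # [0, 64, 128, 255]
```

A lookup table is used because an 8-bit image has only 256 possible values, so each one is computed once rather than per pixel.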

Detecting vehicles at night has always been a challenge for traffic monitoring, but computer vision is making it more manageable. By reducing the impact of glare and handling complex traffic scenes, it provides a more accurate picture of how roads behave after dark. As these systems continue to advance, they are paving the way for safer, smarter, and more efficient transportation at night.

Ready to integrate Vision AI into your projects? Join our active community and explore innovations like AI in the automotive industry and Vision AI in robotics. Visit our GitHub repository to learn more. To get started with computer vision today, check out our licensing options.