Explore how neuro-symbolic AI aims to combine learning and logic to build systems that understand context and provide more transparent, explainable decisions.

Thanks to the rapid growth of artificial intelligence (AI) and the increasing availability of computing power, advanced AI models are being released faster than ever before, and AI is driving innovation across many industries.

For instance, in healthcare, AI systems are being used to assist with tasks such as analyzing medical images for early diagnosis. However, like any technology, AI has its limitations.

One major concern is transparency. For example, an object detection model may accurately locate a tumor in an MRI brain scan, but it can be difficult to understand how the model arrived at that conclusion. This lack of explainability makes it harder for doctors and researchers to fully trust or validate AI results.

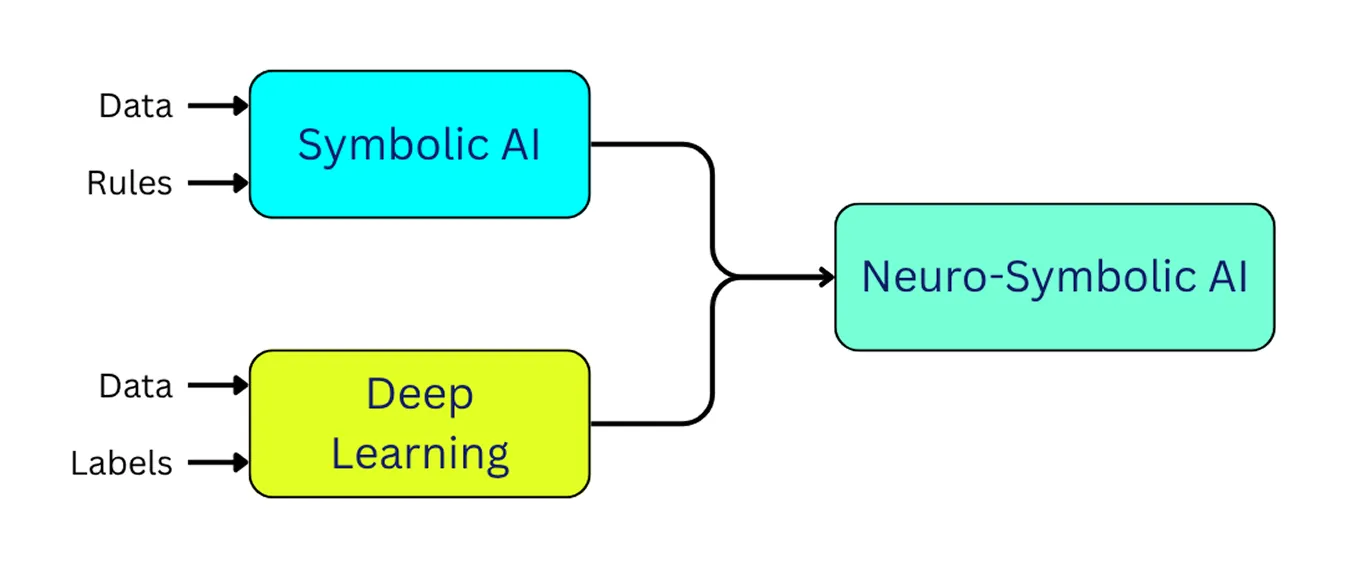

That's exactly why there's growing interest in the emerging field of neuro-symbolic AI. Neuro-symbolic AI combines the pattern-recognition strengths of deep learning with the structured, rule-based reasoning found in symbolic AI. The goal is to create systems that not only make accurate predictions but can also explain their reasoning in a way humans can understand.

In this article, we’ll explore how neuro-symbolic artificial intelligence works and how it brings learning and reasoning together to build more transparent, context-aware systems. Let's get started!

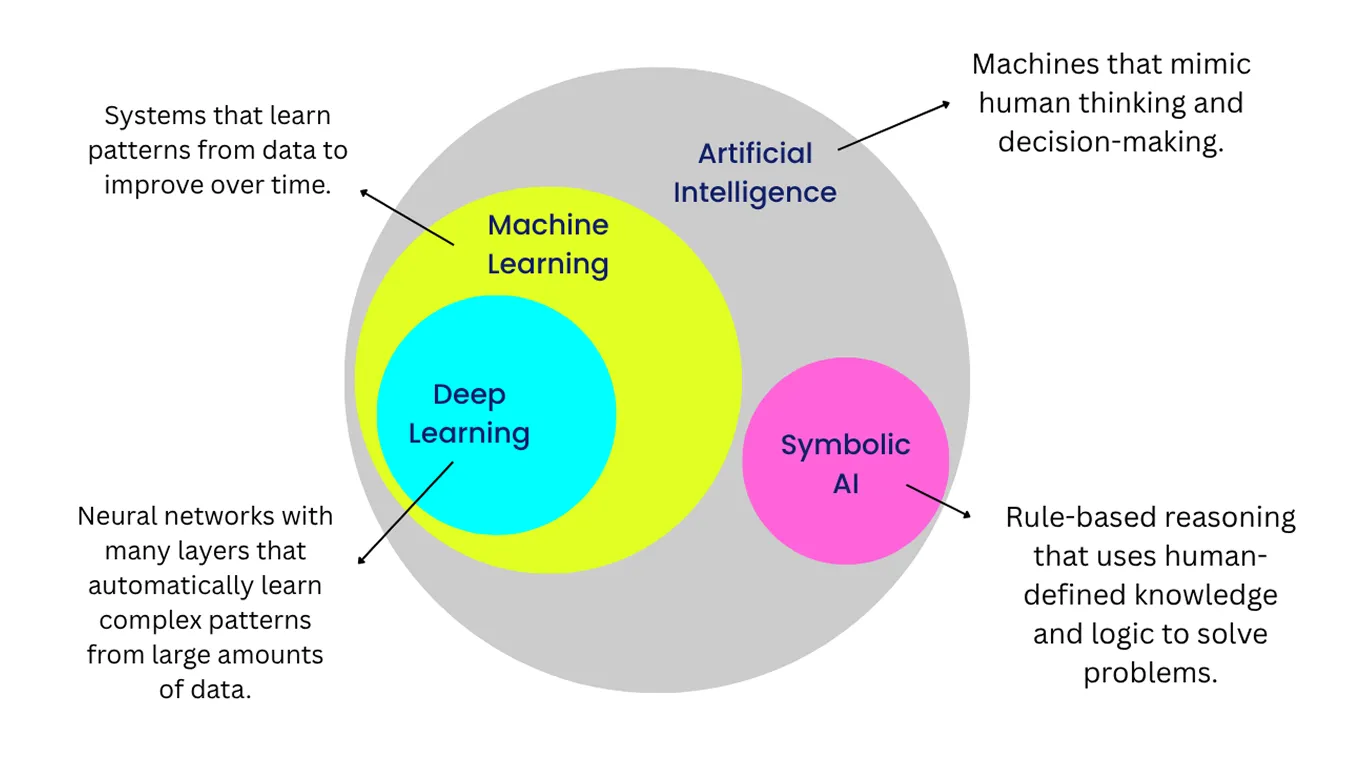

Before we dive into neuro-symbolic AI, let’s take a closer look at the two subfields it brings together: deep learning and symbolic AI.

Deep learning focuses on recognizing patterns in data, while symbolic AI uses rules, logic, or common sense to reason through problems. Each has strengths, but also limitations. By combining them, neuro-symbolic AI creates systems that can both learn from data and explain their decisions more clearly.

Deep learning is a branch of machine learning that uses artificial neural networks, loosely inspired by how the brain processes information. These networks learn by analyzing large amounts of data and adjusting their internal connections to improve performance.

This allows them to recognize patterns in images, sounds, and text without needing handcrafted rules for every situation. Because of this, deep learning is highly effective for perception-focused tasks such as image recognition, speech processing, and language translation.

A good example is a computer vision model trained to segment objects in images. With enough labeled examples, it can learn to separate roads, vehicles, and pedestrians in real-time traffic footage.

However, despite their accuracy, deep learning models often struggle to clearly explain how they arrived at a specific result. This challenge, commonly referred to as the black box problem, makes it harder for users to interpret or verify the model’s decisions, especially in sensitive areas like healthcare or finance. This matters because responsible AI requires transparency, trust, and the ability to understand why a model made a certain prediction.

Symbolic AI takes a more structured approach to intelligence and decision-making. It represents knowledge using symbols and applies logical rules to work with that knowledge, similar to how we use reasoning and language to solve problems. Each step in the reasoning process is defined, which makes symbolic AI’s decisions transparent and easier to explain.

Symbolic AI works especially well in tasks that follow clear and well-defined rules, such as planning, scheduling, or managing structured knowledge. However, it struggles with unstructured data or situations that don’t fit neatly into predefined categories.

A common example of symbolic approaches in action is early chess programs. They followed hand-crafted rules and fixed strategies rather than learning from previous games or adapting to different opponents. As a result, their gameplay tended to be rigid and predictable.

In the 2010s, as deep learning became more widely adopted, researchers began looking for ways to move beyond simple pattern recognition and toward understanding relationships and context. This shift made it possible for AI models not only to detect objects in a scene, like a cat and a mat, but also to interpret how those objects relate, such as recognizing that the cat is sitting on the mat.

However, this progress also highlighted a core limitation. Deep learning models can recognize patterns extremely well, but they often struggle to explain their reasoning or handle unfamiliar situations. This renewed attention to reasoning led researchers back to a field that has existed since the 1980s: neuro-symbolic AI.

Neuro-symbolic AI integrates deep learning and symbolic AI. It enables models to learn from examples in the same way deep learning does, while also applying logic and reasoning as symbolic AI does.

Simply put, neuro-symbolic AI can recognize information, understand context, and provide clearer explanations for its decisions. This approach brings us closer to developing AI systems that behave in a more reliable and human-like way.

A neuro-symbolic architecture brings learning and reasoning together within a single framework. It typically includes three main parts: a neural perception layer that interprets raw data, a symbolic reasoning layer that applies logic, and an integration layer that connects the two. Next, we'll take a closer look at each layer.

The neural perception component processes unstructured data, such as images, video, text, or audio, and converts it into internal representations that the system can work with. It typically uses deep learning models to detect patterns and identify objects or features in the input. At this stage, the system recognizes what is present in the data, but it doesn't yet reason about meaning, relationships, or context.

Here are some common types of deep learning models used in this layer:

Ultimately, these neural models extract and represent meaningful features from raw data. This output then becomes the input for the symbolic reasoning layer, which interprets and reasons about what the system has detected.

The symbolic reasoning layer takes the information produced by the neural perception layer and makes sense of it using logic. Instead of working from just patterns, it relies on things like rules, knowledge graphs, knowledge bases, and ontologies (organized descriptions of concepts and how they relate to each other). These help the system understand how different elements fit together and what actions make sense in a given situation.

For example, in a self-driving car, the neural perception layer may recognize a red traffic light in the camera feed. The symbolic reasoning layer can then apply a rule such as: “If the light is red, the vehicle must stop.” Because the reasoning is based on clear rules, the system’s decisions are easier to explain and verify, which is especially important in situations where safety and accountability matter.
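To make the traffic-light example concrete, here is a minimal sketch of how a symbolic layer might apply if-then rules to facts handed over by a perception model. The `Rule` class, the fact strings, the rule base, and the cautious default action are all hypothetical choices made for illustration, not part of any specific library or production system.

```python
from dataclasses import dataclass
from typing import Callable, List, Set, Tuple


@dataclass(frozen=True)
class Rule:
    """A hypothetical if-then rule: if `condition` holds for the facts, take `action`."""
    name: str
    condition: Callable[[Set[str]], bool]
    action: str


# Illustrative rule base, checked in order (earlier rules take priority).
RULES: List[Rule] = [
    Rule("red_light_stop", lambda facts: "traffic_light_red" in facts, "stop"),
    Rule("green_light_go", lambda facts: "traffic_light_green" in facts, "proceed"),
]


def decide(facts: Set[str]) -> Tuple[str, str]:
    """Return (action, explanation) for the first rule whose condition matches."""
    for rule in RULES:
        if rule.condition(facts):
            return rule.action, f"rule '{rule.name}' fired"
    # No rule matched: fall back to a cautious default (an assumption of this sketch).
    return "slow_down", "no rule matched; default action"


# Facts as they might arrive from the perception layer.
action, why = decide({"traffic_light_red", "vehicle_ahead"})
print(action, "-", why)
```

Because every decision is returned together with the name of the rule that produced it, the explanation comes for free, which is the property that makes this style of reasoning easier to audit.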

The integration layer connects the neural perception layer and the symbolic reasoning layer, ensuring that learning and reasoning operate together. In one direction, it converts outputs from neural models (such as detecting a pedestrian) into symbolic representations that describe the object and its attributes.

In the other direction, it takes symbolic rules (for example, “a vehicle must stop if a pedestrian is in a crosswalk”) and translates them into signals that guide the neural models. This may involve highlighting relevant areas of an image, influencing attention, or shaping the model’s decision pathways.

This two-way exchange forms a feedback loop. The neural side gains structure and interpretability from symbolic rules, while the symbolic side can adapt more effectively based on real-world data. Techniques such as logical neural networks (LNNs) help enable this interaction by embedding logical constraints directly into neural architectures.
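The two directions of this exchange can be sketched in a few lines. The example below is an assumption-laden illustration, not how LNNs or any particular framework implement it: `to_facts` turns hypothetical detector outputs into named facts, and `implication_violation` scores how strongly a rule of the form "A implies B" is violated, using the Łukasiewicz fuzzy implication. A penalty like this could, in principle, be added to a training loss to nudge a neural model toward rule-consistent outputs.

```python
from typing import Dict, List, Tuple

# Hypothetical detector output: (label, confidence) pairs.
Detection = Tuple[str, float]


def to_facts(detections: List[Detection], threshold: float = 0.5) -> Dict[str, float]:
    """Neural -> symbolic: keep confident detections as named facts with a truth degree."""
    facts: Dict[str, float] = {}
    for label, confidence in detections:
        if confidence >= threshold:
            # Keep the highest confidence seen for each label.
            facts[label] = max(confidence, facts.get(label, 0.0))
    return facts


def implication_violation(antecedent: float, consequent: float) -> float:
    """Symbolic -> neural: degree to which 'antecedent implies consequent' is violated.

    Uses the Lukasiewicz implication, whose truth value is min(1, 1 - a + b),
    so the violation is 1 - truth = max(0, a - b).
    """
    return max(0.0, antecedent - consequent)


detections = [("pedestrian_in_crosswalk", 0.9), ("bicycle", 0.3)]
facts = to_facts(detections)

# Suppose a (hypothetical) policy network currently assigns probability 0.4 to "stop".
p_stop = 0.4
penalty = implication_violation(facts.get("pedestrian_in_crosswalk", 0.0), p_stop)
print(facts)
print(round(penalty, 2))
```

Here the rule "a vehicle must stop if a pedestrian is in a crosswalk" is strongly supported by perception but only weakly reflected in the model's output, so the penalty is large. When the model's "stop" probability is at least as high as the pedestrian confidence, the penalty drops to zero.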

By linking perception and reasoning in this way, neuro-symbolic AI can produce decisions that are both accurate and easier to interpret. Many researchers see this approach as a promising step toward more reliable and human-aligned AI, and potentially as a foundation for future progress toward artificial general intelligence (AGI).

Now that we have a better understanding of what neuro-symbolic AI is and how it works, let’s take a look at some of its real-world use cases.

Autonomous vehicles need to understand their surroundings to operate safely. They use technologies like computer vision to detect pedestrians, vehicles, lane markings, and traffic signs.

While deep learning models can identify these objects accurately, they do not always understand what those objects mean in context or how they relate to one another in a real-world situation. For instance, a neural model might recognize a pedestrian on a crosswalk but can’t tell if they’re about to cross or just standing and waiting.

Neuro-symbolic AI attempts to bridge this gap by enabling self-driving vehicles to combine visual recognition with logical reasoning, so they can interpret situations rather than just identify objects. Recent AI research has shown that systems combining neural perception with symbolic rules can improve pedestrian behavior prediction.

In these systems, the neural component analyzes visual cues such as a pedestrian’s posture, movement, and position. The symbolic component then applies logical rules, considering factors like whether the person is near a crosswalk or what the current traffic signal indicates.

By combining these two perspectives, the neuro-symbolic system can do more than simply detect a pedestrian. It can make a reasonable prediction about whether the pedestrian is likely to cross, and it can explain why it made that decision. This leads to safer and more transparent behavior in autonomous vehicles.
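As a rough illustration of that combination, the sketch below merges a neural cue with two symbolic facts and returns both a prediction and the reasons behind it. The `PedestrianObservation` fields, the 0.5 threshold, and the rule itself are hypothetical and chosen only to show the pattern; they are not taken from the research mentioned above.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class PedestrianObservation:
    """Hypothetical per-pedestrian output passed from perception to reasoning."""
    moving_toward_road: float  # neural score in [0, 1]
    near_crosswalk: bool       # symbolic fact, e.g. from map or scene geometry
    walk_signal_on: bool       # symbolic fact from the traffic-signal state


def predict_crossing(obs: PedestrianObservation) -> Tuple[bool, List[str]]:
    """Return (will_cross, reasons) so the prediction carries its own explanation."""
    reasons: List[str] = []
    heading_in = obs.moving_toward_road >= 0.5  # illustrative threshold
    if heading_in:
        reasons.append(
            f"neural cue: moving toward road (score {obs.moving_toward_road:.2f})"
        )
    if obs.near_crosswalk:
        reasons.append("rule input: pedestrian is near a crosswalk")
    if obs.walk_signal_on:
        reasons.append("rule input: walk signal is on")
    # Illustrative rule: predict a crossing when the pedestrian is heading toward
    # the road AND is either near a crosswalk or has a walk signal.
    will_cross = heading_in and (obs.near_crosswalk or obs.walk_signal_on)
    return will_cross, reasons


will_cross, reasons = predict_crossing(
    PedestrianObservation(moving_toward_road=0.8, near_crosswalk=True, walk_signal_on=False)
)
print(will_cross)
for reason in reasons:
    print(" -", reason)
```

A pedestrian who is standing still next to the crosswalk would produce a low `moving_toward_road` score, so the same rule would predict no crossing, and the list of reasons would show which inputs were and were not present.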

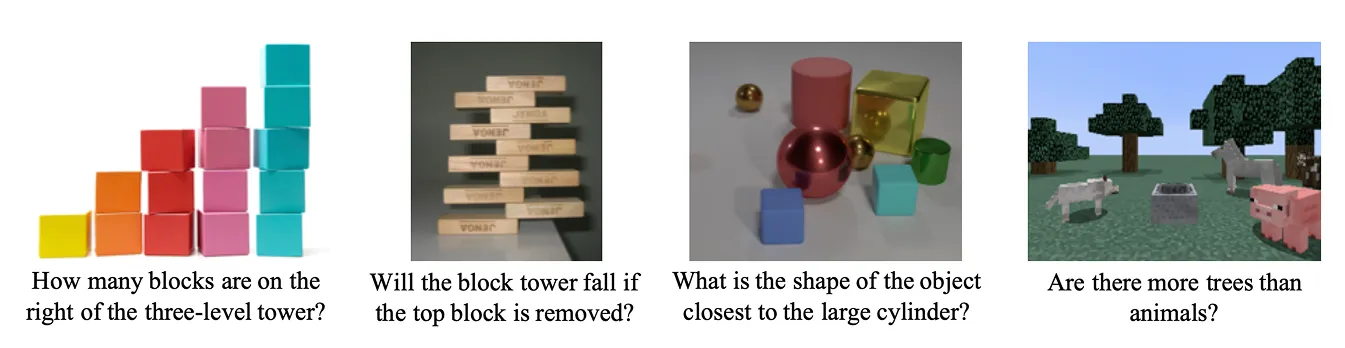

Another important application of neuro-symbolic AI is visual question answering (VQA). VQA systems are designed to answer questions about images.

VQA brings together large language models (LLMs) and visual models to perform multimodal reasoning, combining what the system sees with what it understands. For example, if a VQA system is shown an image and asked, “Is the cup on the table?”, it has to recognize the objects but also understand the relationship between them. It needs to determine whether the cup is actually located on top of the table in the scene.
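One simple way to picture the relational step is a geometric check over bounding boxes. The sketch below is a deliberately naive 2D heuristic, assuming a detector has already produced boxes for the cup and the table; the `Box` class, the `is_on` function, and the pixel tolerance are hypothetical, and a real system would also need to account for depth and perspective.

```python
from dataclasses import dataclass


@dataclass
class Box:
    """Axis-aligned bounding box in image coordinates (y grows downward)."""
    label: str
    x_min: float
    y_min: float
    x_max: float
    y_max: float


def is_on(top: Box, bottom: Box, tolerance: float = 10.0) -> bool:
    """Heuristic 'on' relation between two detected objects.

    True when the boxes overlap horizontally and the bottom edge of `top`
    lies within `tolerance` pixels of the top edge of `bottom`.
    """
    overlaps_horizontally = top.x_min < bottom.x_max and top.x_max > bottom.x_min
    rests_on_surface = abs(top.y_max - bottom.y_min) <= tolerance
    return overlaps_horizontally and rests_on_surface


# Hypothetical detections for the question "Is the cup on the table?"
cup = Box("cup", 120, 80, 160, 140)
table = Box("table", 50, 138, 400, 300)
print(is_on(cup, table))
```

The neural model supplies the boxes, while the explicit rule defines what "on" means, so the answer can be traced back to a specific, inspectable condition.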

A recent study demonstrated how neuro-symbolic AI can enhance VQA by integrating neural perception with symbolic reasoning. In the proposed system, the neural network first analyzes the image to recognize objects and their attributes, such as color, shape, or size.

The symbolic reasoning component then applies logical rules to interpret how these objects relate to one another and to answer the question. If asked “How many gray cylinders are in the scene?”, the neural part identifies all the cylinders and their colors, and the symbolic part filters them based on the criteria and counts the correct ones.
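The gray-cylinder question can be sketched as a small symbolic program that filters and counts over a scene description. The scene contents, the attribute names, and the `filter_objects` and `count_matching` helpers below are assumptions made for illustration; in a real system the scene description would come from the neural perception model rather than being written by hand.

```python
from typing import Dict, List, Tuple

# Hypothetical scene description, as a perception model might produce it.
scene: List[Dict[str, str]] = [
    {"shape": "cylinder", "color": "gray", "size": "large"},
    {"shape": "cube", "color": "gray", "size": "small"},
    {"shape": "cylinder", "color": "red", "size": "small"},
    {"shape": "cylinder", "color": "gray", "size": "small"},
]


def filter_objects(objects: List[Dict[str, str]], attribute: str, value: str) -> List[Dict[str, str]]:
    """Keep only the objects whose `attribute` equals `value`."""
    return [obj for obj in objects if obj.get(attribute) == value]


def count_matching(objects: List[Dict[str, str]], **criteria: str) -> Tuple[int, List[str]]:
    """Apply each filter in turn and return (count, trace of reasoning steps)."""
    trace = [f"start: {len(objects)} objects"]
    for attribute, value in criteria.items():
        objects = filter_objects(objects, attribute, value)
        trace.append(f"filter {attribute}={value}: {len(objects)} left")
    return len(objects), trace


# "How many gray cylinders are in the scene?"
answer, steps = count_matching(scene, color="gray", shape="cylinder")
print(answer)
for step in steps:
    print(" -", step)
```

The returned trace records each filtering step, which is the kind of step-by-step justification that makes the final count easy to check.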

Such research showcases how neuro-symbolic VQA can move beyond simply providing answers. Because the model can show the steps it took to reach a conclusion, it supports explainable AI, where systems make predictions and justify their reasoning in a way people can understand.

Here are some of the key benefits of using neuro-symbolic AI:

Despite its potential, neuro-symbolic AI is still evolving and comes with certain practical challenges. Here are some of its key limitations:

Neuro-symbolic AI represents an important step toward building AI systems that can not only perceive the world but also reason about it and explain their decisions. Unlike traditional deep learning systems, which rely mostly on patterns learned from data, neuro-symbolic AI combines statistical learning with structured logic and knowledge. Rather than replacing deep learning, it builds on top of it, moving us a little closer to developing AI that can understand and reason in a more human-like way.
