Learn how pose estimation tools can be used to detect body keypoints in images and video, estimate 2D and 3D poses, and power various Vision AI applications.

Learn how pose estimation tools can be used to detect body keypoints in images and video, estimate 2D and 3D poses, and power various Vision AI applications.

As humans, we read movement instinctively. When someone leans forward, turns their head, or lifts an arm, you can immediately infer what they’re doing. It’s a quiet, almost subconscious skill that shapes how we interact with people and explore the world.

As technology becomes a bigger part of daily life, it’s natural to want our devices to understand movement as smoothly as we do. Recent advances in artificial intelligence, especially deep learning-based advancements, are making that possible. In particular, computer vision helps machines extract meaning from images and video and is driving this progress.

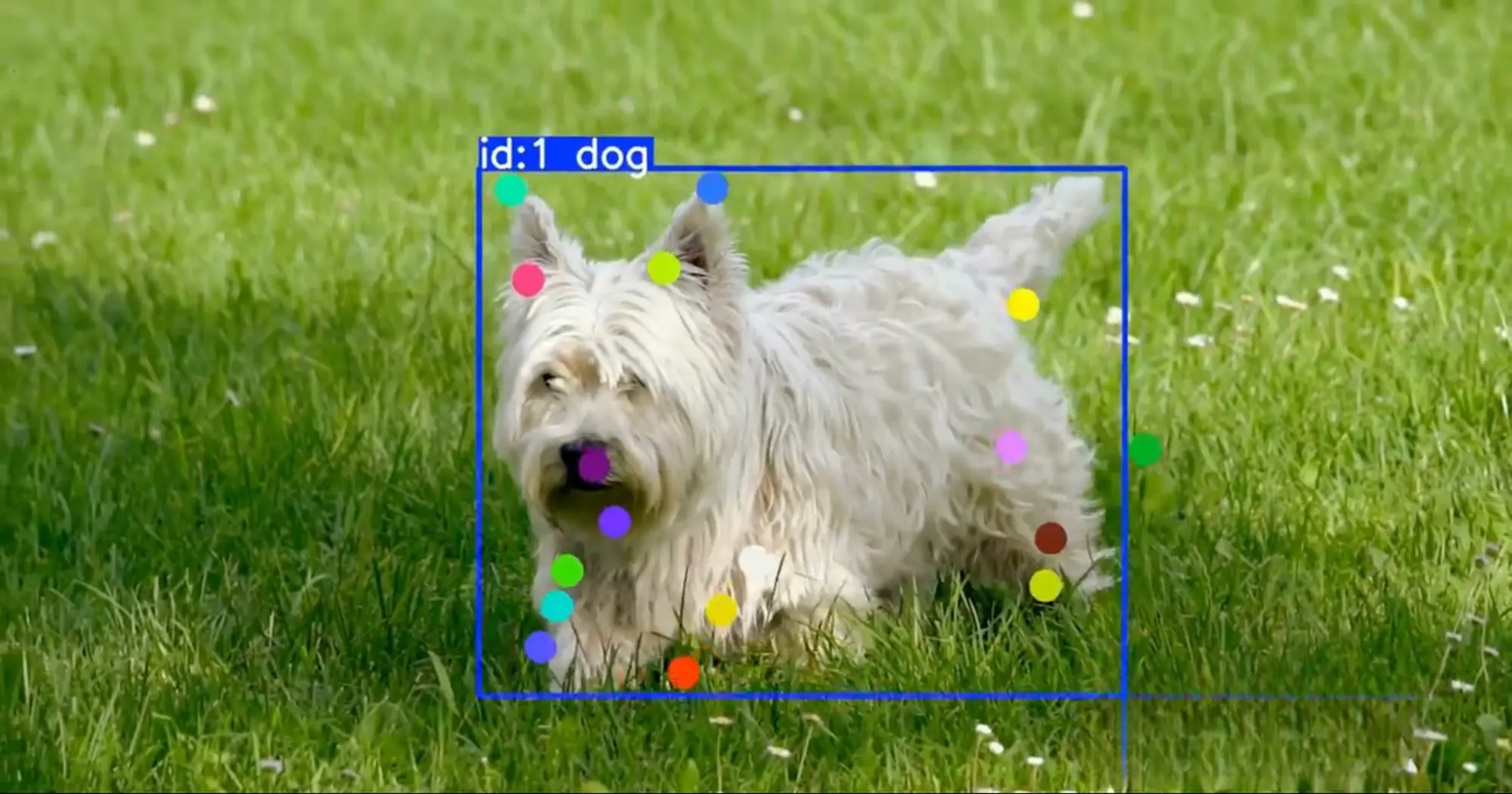

For example, pose estimation is a common computer vision task that predicts the locations of predefined body keypoints (such as the shoulders, elbows, hips, and knees) in an image or video frame. These keypoints can be connected using a fixed skeleton definition to form a simplified pose representation.

Computer vision models such as Ultralytics YOLO11 and the upcoming Ultralytics YOLO26 support tasks like pose estimation and can be used to power real-time applications, including form feedback in fitness and sports, safety monitoring, and interactive augmented reality experiences.

.webp)

In this article, we’ll take a deep dive into pose estimation tools and see how pose estimation works, where it’s used, and some of the top models and libraries available today. Let’s get started!

Pose estimation is a computer vision technique that helps a system understand how a person or object is positioned in an image or video. Rather than analyzing every pixel equally, it predicts a set of consistent landmarks, such as the head, shoulders, elbows, hips, knees, and ankles.

Most models output the coordinates of these keypoints and a score that reflects how likely each prediction is to be correct. These keypoints can then be connected using a predefined skeleton layout to form a simple pose representation.

When applied frame by frame in videos, the resulting keypoints can be associated over time to estimate motion. This enables applications like form checks, movement analysis, and gesture-based interaction.

.webp)

Human movement carries a lot of information. The way someone bends, reaches, or shifts their weight can reveal intent, effort, fatigue, or even injury risk. Until recently, capturing that level of detail typically required specialized sensors, motion-capture suits, or controlled lab environments.

Pose estimation changes that. Extracting key body landmarks from regular images and video lets computers analyze movement using standard cameras. This makes movement analysis more accessible, scalable, and practical to use in real-world settings.

Here are a few ways pose estimation can create an impact:

The idea of estimating poses has been around for many years. Early approaches used simple geometric models and hand-crafted rules, and they typically worked only in controlled conditions.

For example, a system might perform well when a person stands still in a fixed position, but break down when they start walking, turning, or interacting with objects in real-world scenes. These methods often struggled with natural movement, changing camera angles, cluttered backgrounds, and partial occlusion.

Modern pose estimation relies on deep learning to handle these challenges. By training convolutional neural networks on large labeled datasets, models learn visual patterns that help them detect keypoints more reliably across different poses, people, and environments.

With more examples, the model improves its predictions and becomes better at generalizing to new scenes. Because of this progress, pose estimation now supports a wide range of practical use cases, including workplace monitoring and ergonomics, and sports analytics, where coaches and analysts study how athletes move.

Pose estimation comes in a few different forms, depending on the setting and what you need to measure. Here are the main types you will come across:

.webp)

Pose estimation can be applied to many kinds of objects, but to keep things simple, let’s focus on human pose estimation.

Most human pose estimation systems are trained on annotated datasets where key body parts are labeled across large collections of images and video frames. Using these examples, the model learns visual patterns linked to human body landmarks like the shoulders, elbows, hips, knees, and ankles, so it can predict keypoints accurately in new scenes.

Another key aspect is the model’s inference architecture, which determines how it detects keypoints and assembles them into full poses. Some systems detect each person first and then estimate keypoints within each person’s region, while others detect keypoints across the entire image and then group them into individuals. Newer single-stage designs can predict poses in one pass, balancing speed and accuracy for real-time use.

Next, let’s walk through different pose estimation approaches in detail.

In a bottom-up approach, the model looks at the whole image and finds body keypoints first, like the head, shoulders, elbows, hips, knees, and ankles. At this stage, it isn’t trying to separate people. It is simply detecting all keypoints or body joints defined by the pose skeleton across the scene.

After that, the system does a second step to connect the dots. It links keypoints that belong together and groups them into complete skeletons, one per person. Since it doesn’t need to detect each person first, bottom-up methods often work well in crowded scenes where people overlap, appear at different sizes, or are partly hidden.

In contrast, top-down systems start by detecting each person in the image first. They place a bounding box around every individual and treat each box as its own region to analyze.

Once a person is isolated, the model predicts the body keypoints within that region. This step-by-step setup often produces very accurate results, especially when there are only a few people in the scene, and each person is clearly visible.

Single-stage, sometimes called hybrid, models predict poses in one pass. Instead of running person detection first and keypoint estimation second, they output the person location and the body keypoints at the same time.

Because everything happens in a single module, these models are often faster and more efficient, which makes them a strong fit for real-time uses like live motion tracking and motion capture. Models such as Ultralytics YOLO11 are built around this idea, aiming to balance speed with reliable keypoint predictions.

Regardless of the approach used, a pose estimation model still needs to be trained and tested carefully before it’s reliable in the real world. It typically learns from large sets of images (and sometimes video) where body keypoints are labeled, helping it handle different poses, camera angles, and environments.

Some well-known pose estimation datasets include COCO Keypoints, MPII Human Pose, CrowdPose, and OCHuman. When these datasets don’t reflect the conditions the model will face in deployment, engineers often collect and label additional images from the target setting, such as a factory floor, gym, or clinic.

.webp)

After training, the model’s performance is evaluated on standard benchmarks to measure accuracy and robustness and to guide further tuning for real-world use. Results are often reported using mean average precision, commonly referred to as mAP, which summarizes performance across different confidence thresholds by comparing predicted poses to the labeled ground truth.

In many pose benchmarks, a predicted pose is matched to a ground-truth pose using Object Keypoint Similarity (OKS). OKS measures how close the predicted keypoints are to the annotated keypoints, while accounting for factors like the person’s scale and the typical localization difficulty of each keypoint.

Pose models also output confidence scores for detected people and for individual keypoints. These scores reflect the model’s confidence and are used to rank and filter predictions, which is especially important in challenging conditions such as occlusion, motion blur, or unusual camera angles.

Many pose estimation tools are available today, each balancing speed, accuracy, and ease of use. Here are some of the most widely used tools and libraries:

Pose estimation is increasingly being used to turn ordinary videos into useful movement insights. By tracking body keypoints frame by frame, these systems can infer posture, motion, and physical behavior from camera feeds, making such technology practical in many real-world settings.

For example, in healthcare and rehabilitation, pose tracking can help clinicians see and measure how a patient moves during therapy and recovery. By extracting body landmarks from ordinary video recordings, it can be used to assess posture, range of motion, and overall movement patterns over time. These measurements can support and optimize traditional clinical evaluations and, in some cases, make it easier to track progress without needing wearable sensors or specialized equipment.

Similarly, in sports and broadcasting, pose estimation can analyze how athletes move directly from video feeds. An interesting example is Hawk-Eye, a camera-based tracking system used in professional sports for officiating and broadcast graphics. It also provides skeletal tracking by estimating an athlete’s body keypoints from camera views.

Choosing the right pose estimation tool starts with understanding your computer vision project’s needs. Some applications prioritize real-time speed, while others require higher accuracy and detail.

The target deployment device also makes a difference. Mobile apps and edge devices typically require lightweight, efficient models, while larger models are often a better fit for servers or cloud environments.

In addition to this, ease of use can play a role. Good documentation, smooth deployment, and support for custom training can streamline your project.

Simply put, different tools excel in different areas. For instance, Ultralytics YOLO models provide a practical balance of speed, accuracy, and ease of deployment for many real-world pose estimation applications.

Pose estimation helps computers understand human movement by detecting body keypoints in images and video. Models like YOLO11 and YOLO26 make it easier to build real-time applications for areas like sports, healthcare, workplace safety, and interactive experiences. As models keep getting faster and more accurate, pose estimation is likely to become a common feature in many Vision AI systems.

Want to know more about AI? Check out our community and GitHub repository. Explore our solution pages to learn about AI in robotics and computer vision in manufacturing. Discover our licensing options and start building with computer vision today!