Explore how OpenPose can be used for pose estimation in computer vision applications. Learn about its features and its significance in Vision AI.


Cameras are everywhere nowadays: built into our phones, our homes, and even public spaces. We rely on them not just to capture moments, but to help us understand and interact with the world around us.

Behind the scenes, computer vision, a subfield of artificial intelligence (AI), makes this possible by enabling machines to interpret visual data. It allows systems to detect objects, recognize faces, and track movement, playing a key role in many of the technologies we use every day.

Thanks to recent advancements in AI, computer vision models can now analyze and extract more complex data and insights. One example of this is pose estimation, a computer vision task focused on understanding human movement.

It works by identifying keypoints on the body, such as the shoulders, elbows, and knees, in images or video. This makes it possible to analyze how people move, enabling applications in fitness tracking, animation, healthcare, and more.

Among the many tools developed for pose estimation, OpenPose stands out as a major breakthrough. Created by researchers at the Perceptual Computing Lab at Carnegie Mellon University, it was one of the first open-source systems to detect full-body poses for multiple people in real time using just a standard camera, with up to 135 keypoints per person covering the body, hands, feet, and face.

In this article, we’ll explore OpenPose, how it works, and its significance as a milestone in computer vision.

Before AI was widely adopted, tracking human movement in videos involved using specialized equipment. In industries like film and animation, actors often wore suits with reflective markers so cameras could capture their movements in a controlled studio environment.

While these marker-based motion capture techniques were accurate, they were also expensive and limited to specific setups. As computer vision advanced, researchers looked for ways to track body movement without markers. These markerless approaches relied on hand-crafted cues such as edges, contours, and templates to find human shapes in images.

These early systems worked in simple, controlled scenes but struggled in real-world conditions. They often gave poor results when people moved in unexpected ways or when more than one person appeared in a frame.

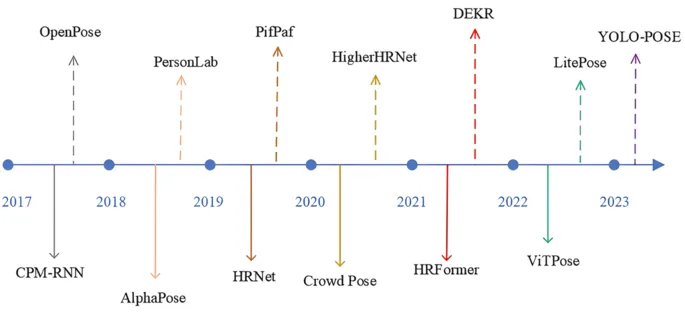

In the late 2010s, deep learning brought a major shift to pose estimation. Vision AI models could be trained on large datasets of human poses. Instead of relying on edges and templates, the models learned to recognize body joints and structure by studying thousands of labeled images. This made pose estimation more accurate, flexible, and impactful in a wider range of settings.

OpenPose was first released in 2017 and could estimate the poses of multiple people simultaneously in a single image. Unlike older systems, OpenPose doesn’t require special suits or markers. It works with standard cameras and can process images and video in real time. These features made pose estimation more accessible to developers and researchers.

The foundation OpenPose laid paved the way for newer architectures across many computer vision applications. Today, Vision AI models that support pose estimation, such as Ultralytics YOLOv8 and Ultralytics YOLO11, offer faster results and lower latency.

However, OpenPose is a great place to start if you're curious about how pose estimation has evolved. It introduced key ideas that many newer systems still rely on today.

Now that we have a better understanding of why OpenPose matters, let's take a closer look at what it can actually do.

At the heart of OpenPose’s capabilities is keypoint detection. Keypoints are specific landmarks on the human body, such as the nose, shoulders, elbows, wrists, hips, knees, and ankles. OpenPose can detect up to 135 of these points per person, including detailed areas like fingers and facial features.
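Under the hood, OpenPose's network predicts one confidence map (a heatmap) per keypoint type, where each pixel scores how likely that body part is to be there. Turning a map into candidate keypoints is essentially peak-finding. The sketch below is a minimal NumPy version, assuming the heatmap is already a 2D array at image resolution; `find_peaks`, the 4-neighbour local-maximum test, and the 0.1 threshold are illustrative choices, not OpenPose's API, and OpenPose's own implementation may differ (for example, in how it smooths or upsamples the maps).

```python
import numpy as np


def find_peaks(heatmap, threshold: float = 0.1) -> list[tuple[int, int, float]]:
    """Return (x, y, score) for local maxima above `threshold` in a 2D confidence map.

    Illustrative helper (hypothetical name), not part of OpenPose.
    """
    heatmap = np.asarray(heatmap, dtype=float)
    h, w = heatmap.shape
    # Pad with -inf so pixels on the border can still count as local maxima
    padded = np.pad(heatmap, 1, mode="constant", constant_values=-np.inf)
    center = padded[1:-1, 1:-1]
    is_peak = center > threshold
    # Keep a pixel only if it is >= its 4 neighbours (up, down, left, right)
    for dy, dx in [(-1, 0), (1, 0), (0, -1), (0, 1)]:
        neighbour = padded[1 + dy : 1 + dy + h, 1 + dx : 1 + dx + w]
        is_peak &= center >= neighbour
    ys, xs = np.nonzero(is_peak)
    return [(int(x), int(y), float(heatmap[y, x])) for y, x in zip(ys, xs)]


# Usage: a toy 10x10 "wrist" heatmap with two people, i.e. two peaks
hm = np.zeros((10, 10))
hm[2, 3] = 0.9
hm[7, 6] = 0.5
print(find_peaks(hm))  # candidate (x, y, score) tuples, one per detected wrist
```

Each peak becomes a candidate keypoint; at this stage the system does not yet know which person it belongs to.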

When these points are connected, they form a simplified representation of the human body that you can think of as a digital skeleton. This skeletal outline shows not just where a person is, but how they are posed: whether they’re sitting, standing, waving, smiling, or walking. Computers can interpret human movement visually using these skeletons, much like we instinctively understand someone's body language.
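Connecting keypoints into a skeleton amounts to drawing a line for each predefined pair of body parts (a "limb") whenever both ends were detected with enough confidence. The sketch below assumes keypoints arrive as a dictionary of `(x, y, confidence)` tuples keyed by hypothetical part names; OpenPose's real models use fixed, index-based layouts (for example 18 or 25 body keypoints), so `LIMBS`, the names, and the 0.3 threshold are illustrative only.

```python
from typing import Dict, List, Tuple

Keypoint = Tuple[float, float, float]  # (x, y, confidence)
Segment = Tuple[Tuple[float, float], Tuple[float, float]]

# Illustrative subset of limbs with hypothetical names, not OpenPose's layout
LIMBS = [
    ("neck", "right_shoulder"),
    ("right_shoulder", "right_elbow"),
    ("right_elbow", "right_wrist"),
    ("neck", "left_shoulder"),
    ("left_shoulder", "left_elbow"),
    ("left_elbow", "left_wrist"),
    ("neck", "right_hip"),
    ("right_hip", "right_knee"),
    ("neck", "left_hip"),
    ("left_hip", "left_knee"),
]


def build_skeleton(keypoints: Dict[str, Keypoint], min_conf: float = 0.3) -> List[Segment]:
    """Return line segments for every limb whose two endpoints are confidently detected."""
    segments: List[Segment] = []
    for a, b in LIMBS:
        if a in keypoints and b in keypoints:
            (xa, ya, ca), (xb, yb, cb) = keypoints[a], keypoints[b]
            # Skip limbs with a low-confidence endpoint (e.g. an occluded elbow)
            if ca >= min_conf and cb >= min_conf:
                segments.append(((xa, ya), (xb, yb)))
    return segments


kps = {
    "neck": (50.0, 20.0, 0.9),
    "right_shoulder": (40.0, 22.0, 0.8),
    "right_elbow": (35.0, 40.0, 0.2),  # too uncertain to draw
}
print(build_skeleton(kps))
```

Dropping low-confidence endpoints keeps the skeleton from drawing limbs to body parts the model could not actually see.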

Skeletal tracking is especially useful because it strips away background noise and distractions, letting the system focus purely on human posture and motion. Instead of analyzing every pixel, OpenPose concentrates on meaningful points that tell the story of how a person is moving or interacting.

By extracting this structured information from everyday images or video, OpenPose makes it possible to build applications that respond to gestures, monitor physical activity, assess emotional cues, or even animate digital characters.
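A simple example of turning keypoints into something an application can act on is a joint angle, such as the elbow angle formed by the shoulder, elbow, and wrist. The sketch below assumes 2D `(x, y)` keypoints from any pose estimator; `joint_angle` is a hypothetical helper, not part of OpenPose.

```python
import math


def joint_angle(a, b, c) -> float:
    """Angle at point b, in degrees, formed by a-b-c (e.g. shoulder-elbow-wrist)."""
    v1 = (a[0] - b[0], a[1] - b[1])
    v2 = (c[0] - b[0], c[1] - b[1])
    norm = math.hypot(*v1) * math.hypot(*v2)
    if norm == 0:
        raise ValueError("keypoints must be distinct")
    cos_theta = (v1[0] * v2[0] + v1[1] * v2[1]) / norm
    # Clamp to [-1, 1] to guard against floating-point drift before acos
    return math.degrees(math.acos(max(-1.0, min(1.0, cos_theta))))


shoulder, elbow, wrist = (0.0, 0.0), (1.0, 0.0), (1.0, 1.0)
print(joint_angle(shoulder, elbow, wrist))  # a bent arm, roughly a right angle
```

In a fitness app, for instance, a repetition might be counted each time the elbow angle drops below some threshold and rises again; that threshold is an assumption that would need tuning per exercise.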

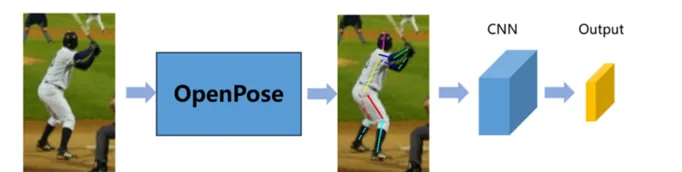

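At a high level, OpenPose detects and connects keypoints in two steps. First, a convolutional neural network predicts confidence maps that show where each type of body part is likely to be, along with Part Affinity Fields (PAFs) that encode the location and direction of limbs. Then the detected body parts are grouped into one skeleton per person by following the PAFs.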
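OpenPose's key idea for connecting keypoints across multiple people is the Part Affinity Field (PAF): for each limb type, the network predicts a 2D vector field that points along the limb wherever that limb appears. A candidate connection between two detected parts is scored by sampling the field along the segment joining them and averaging its dot product with the segment's direction, so pairs that really form a limb score high. The sketch below assumes the PAF is given as two NumPy arrays (`paf_x`, `paf_y`) in image coordinates; the function names, the 10-sample default, and `min_score` are illustrative, and the matching is greedy (highest score first), which is simpler than an optimal bipartite assignment.

```python
import numpy as np


def paf_limb_score(paf_x, paf_y, p1, p2, num_samples: int = 10) -> float:
    """Average alignment between the PAF and the segment p1 -> p2 (points as (x, y))."""
    p1 = np.asarray(p1, dtype=float)
    p2 = np.asarray(p2, dtype=float)
    vec = p2 - p1
    length = np.linalg.norm(vec)
    if length == 0:
        return 0.0
    unit = vec / length
    # Sample points uniformly along the segment from p1 to p2
    ts = np.linspace(0.0, 1.0, num_samples)
    points = p1[None, :] + ts[:, None] * vec[None, :]
    xs = np.clip(np.round(points[:, 0]).astype(int), 0, paf_x.shape[1] - 1)
    ys = np.clip(np.round(points[:, 1]).astype(int), 0, paf_x.shape[0] - 1)
    # Dot product between the field vector and the limb direction at each sample
    dots = paf_x[ys, xs] * unit[0] + paf_y[ys, xs] * unit[1]
    return float(dots.mean())


def greedy_match(cands_a, cands_b, paf_x, paf_y, min_score: float = 0.05):
    """Pair candidates of part A with part B, best PAF score first, each used once."""
    scored = []
    for i, pa in enumerate(cands_a):
        for j, pb in enumerate(cands_b):
            s = paf_limb_score(paf_x, paf_y, pa, pb)
            if s > min_score:
                scored.append((s, i, j))
    scored.sort(reverse=True)
    used_a, used_b, pairs = set(), set(), []
    for s, i, j in scored:
        if i not in used_a and j not in used_b:
            pairs.append((i, j, s))
            used_a.add(i)
            used_b.add(j)
    return pairs


# Toy field: every limb of this type points in the +x direction
paf_x, paf_y = np.ones((20, 20)), np.zeros((20, 20))
wrists = [(2, 5), (2, 15)]   # two people
elbows = [(12, 5), (12, 15)]
print(greedy_match(wrists, elbows, paf_x, paf_y))
```

Even though a diagonal wrist-to-elbow pairing is geometrically possible, it aligns less well with the field, so each wrist is matched to the elbow of the same person.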

OpenPose was one of the first advanced tools that made pose estimation practical for a variety of real-world use cases. While it's not commonly used in real-time computer vision solutions today, it played an important role in shaping early work across fields like sports, entertainment, education, and safety.

Let’s take a closer look at how it helped pave the way in these areas.

When you watch baseball, it's easy to understand what's happening: you can instantly recognize a pitch, a swing, or a stolen base. As humans, we intuitively read body movements and make sense of them without much effort. But for machines, recognizing these actions is far more complex. They need precise information about how each part of the body moves through space.

OpenPose was a substantial step forward in this area of computer vision, giving researchers a practical tool for analyzing athletic form in a variety of settings.

Many research projects used OpenPose to break down movements like swings and jumps, even classifying specific baseball actions based on how players moved. Because it worked in open environments with standard video, it allowed researchers to test how such systems might function in real-world training or coaching scenarios.
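Before keypoints can feed an action classifier, they usually need to be made independent of where the player stands in the frame and how large they appear. A common approach, assumed here rather than taken from any specific study, is to center each pose on a reference joint and scale it by torso length. The sketch below assumes each frame is an array of `(x, y, confidence)` rows; `normalize_pose`, `sequence_features`, and the choice of hip and neck as references are hypothetical.

```python
import numpy as np


def normalize_pose(keypoints, hip_idx: int, neck_idx: int) -> np.ndarray:
    """Center a pose on the hip, scale by hip-to-neck distance, and flatten to a vector."""
    pts = np.asarray(keypoints, dtype=float)[:, :2]  # drop confidence column
    origin = pts[hip_idx]
    torso = np.linalg.norm(pts[neck_idx] - origin)
    if torso == 0:
        raise ValueError("neck and hip keypoints coincide")
    return ((pts - origin) / torso).ravel()


def sequence_features(frames, hip_idx: int, neck_idx: int) -> np.ndarray:
    """Stack normalized poses over time: shape (num_frames, 2 * num_keypoints)."""
    return np.stack([normalize_pose(f, hip_idx, neck_idx) for f in frames])


# Toy 3-keypoint pose (hip, neck, wrist) seen at two positions and scales
pose = np.array([[10, 20, 0.9], [10, 10, 0.8], [20, 10, 0.7]])
moved = pose.copy()
moved[:, :2] = pose[:, :2] * 2 + 5
print(sequence_features([pose, moved], hip_idx=0, neck_idx=1))  # two identical rows
```

A sequence of such vectors (for example, the frames of a swing) could then be passed to any classifier; which model to use is outside the scope of this sketch.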

These early studies helped lay the groundwork for the performance tracking tools now used in advanced sports technology.

Similarly, researchers used OpenPose to explore how video-based pose tracking could support safety monitoring. It was tested on detecting behaviors such as falls, unusual gestures, or atypical movement patterns in public areas.

Because it worked with standard cameras, OpenPose made early experimentation more accessible in environments like hospitals and transportation hubs. These studies helped drive the development of newer models now used in surveillance, fall detection, and emergency response systems.
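To make the fall-detection idea concrete, here is a deliberately simple heuristic, not the approach used in the studies mentioned above: flag a fall when the hip keypoint drops by a large fraction of the person's height within a short time window. It assumes per-frame hip `y` coordinates in image space (where `y` grows downward); `is_fall`, `window_s`, and `drop_ratio` are hypothetical names and values that would need tuning on real data.

```python
from typing import Sequence


def is_fall(hip_y: Sequence[float], body_height: float, fps: float,
            window_s: float = 0.5, drop_ratio: float = 0.4) -> bool:
    """Flag a fall if the hip moves down by more than drop_ratio * body_height
    within window_s seconds. Image y grows downward, so a fall increases y."""
    window = max(1, int(round(window_s * fps)))
    for start in range(len(hip_y)):
        end = min(len(hip_y), start + window + 1)
        # Largest downward displacement reached within the window from this frame
        drop = max(hip_y[start:end]) - hip_y[start]
        if drop > drop_ratio * body_height:
            return True
    return False


sudden = [100, 100, 110, 140, 170, 180, 180]  # hip drops 80 px in ~0.4 s
slow_sit = [100 + 5 * i for i in range(20)]  # same total drop, spread out
print(is_fall(sudden, body_height=150, fps=10))
print(is_fall(slow_sit, body_height=150, fps=10))
```

Looking at speed rather than final position is what separates a fall from someone slowly sitting down; production systems typically combine several such cues or learn them from data.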

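OpenPose's main advantages follow from the points above: it is open source, works with standard cameras instead of suits or markers, estimates the poses of multiple people in a single image, runs in real time, and detects up to 135 keypoints per person across the body, hands, feet, and face.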

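Although OpenPose was a major step forward, it also has technical limitations worth keeping in mind. Most notably, newer models such as Ultralytics YOLO11 deliver faster results with lower latency and lighter computational requirements, which is why OpenPose is rarely used in real-time computer vision solutions today.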

OpenPose played an important role in making pose estimation more accessible. It showed that tracking body movements could be done with a simple camera, without relying on suits or specialized equipment.

It laid the foundation for many practical applications across healthcare, education, entertainment, and research. While newer models now offer faster speeds and lower computational cost, OpenPose remains a key reference point for understanding how pose estimation has evolved.

Join our community and visit our GitHub repository to learn more about AI. If you are looking to build your own computer vision solutions, explore our licensing options. Also, check out how computer vision in healthcare and AI in logistics are making an impact!