See how self-supervised learning for denoising works, why images get noisy, and the key methods and steps used to recover clean visual details.

See how self-supervised learning for denoising works, why images get noisy, and the key methods and steps used to recover clean visual details.

Cameras don’t always capture the world the way we see it. A portrait taken in low light or a photo of a fast-moving car can look grainy, blurred, or distorted.

Slow sensors, dark environments, and motion can introduce tiny speckles of noise that soften edges and hide important details. When this clarity is lost, even advanced AI and machine learning systems can struggle to understand what an image contains, because many smart systems rely on those fine details to work well.

For instance, computer vision is a branch of artificial intelligence that enables machines to interpret images and video. But to do this accurately, Vision AI models need clean, high-quality visual data to learn from.

Specifically, models like Ultralytics YOLO11 and the upcoming Ultralytics YOLO26 support tasks such as object detection, instance segmentation, and pose estimation, and can be custom-trained for different use cases. These tasks rely on clear visual cues like edges, textures, colors, and fine structural details.

When noise obscures these features, the model receives weaker training signals, making it harder to learn accurate patterns. As a result, even small amounts of noise can reduce performance in real applications.

Previously, we looked at how self-supervised learning denoises images. In this article, we'll dive deeper into how self-supervised denoising techniques work and how they help recover meaningful visual information. Let’s get started!

Before we explore how self-supervised learning is used in image denoising, let's first revisit why images become noisy in the first place.

Images of real-world objects and scenes are rarely perfect. Low lighting, limited sensor quality, and fast motion can introduce random disturbances in individual pixels across the image. These pixel-level disruptions, known as noise, reduce overall clarity and make important details harder to see.

When noise hides edges, textures, and subtle patterns, computer vision systems struggle to recognize objects or interpret scenes accurately. Different conditions produce different types of noise, each affecting the image in its own way.

.webp)

Here are some of the most common types of noise found in images:

So, what makes self-supervised denoising special? It shines in situations where clean, ground-truth images simply don’t exist or are too difficult to capture.

This often happens in low-light photography, high-ISO imaging, medical and scientific imaging, or any environment where noise is unavoidable and collecting perfect reference data is unrealistic. Instead of needing clean examples, the model learns directly from the noisy images you already have, making it adaptable to the specific noise patterns of your camera or sensor.

Self-supervised denoising is also a great option when you want to boost the performance of downstream computer vision tasks, but your dataset is filled with inconsistent or noisy images. By recovering clearer edges, textures, and structures, these methods help models like YOLO detect, segment, and understand scenes more reliably. In short, if you’re working with noisy data and clean training images aren’t available, self-supervised denoising often offers the most practical and effective solution.

As we’ve previously seen, self-supervised denoising is a deep-learning–based AI approach that allows models to learn directly from noisy images without relying on clean labels. It builds on the principles of self-supervised learning, where models generate their own training signals from the data itself.

In other words, a model can teach itself by using noisy images as both the input and the source of its learning signal. By comparing different corrupted versions of the same image or predicting masked pixels, the model learns which patterns represent real structure and which are just noise. Through iterative optimization and pattern recognition, the network gradually improves its ability to distinguish meaningful image content from random variation.

.webp)

This is made possible through specific learning strategies that guide the model to separate stable image structure from random noise. Next, let’s take a closer look at the core techniques and algorithms that streamline this process and how each approach helps models reconstruct cleaner, more reliable images.

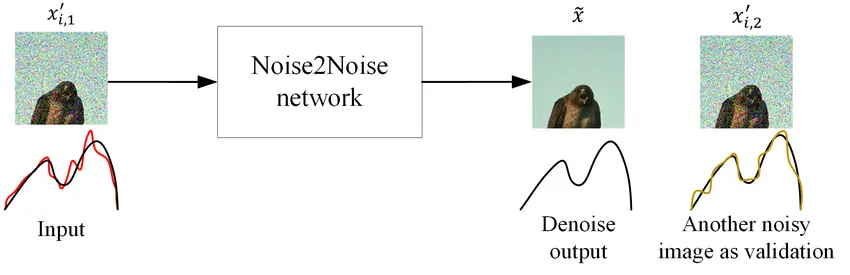

Many early self-supervised learning methods for denoising worked by comparing two noisy versions of the same image. Since noise changes randomly each time an image is captured or corrupted, but the real structure stays the same, these differences can be used as a learning signal for a model.

These approaches are commonly referred to as pairwise image denoising methods because they rely on using or generating pairs of noisy images during training. For example, the Noise2Noise approach (proposed by Jaakko Lehtinen and his team) trains a model using two independently noisy images of the same scene. Given that the noise patterns differ between the two versions, the model learns to identify the consistent details that represent the actual underlying image.

Over time, this teaches the network to suppress the random noise and preserve real structure, even though it never sees a clean reference image. Consider a simple scenario where you take two photos of a low-light street at night.

Each image contains the same buildings, lights, and shadows, but the grainy noise appears in different places. By comparing these two noisy photos during training, a self-supervised model can learn which visual patterns are stable and which are caused by noise, ultimately improving its ability to reconstruct cleaner images.

While pairwise methods rely on comparing two differently corrupted versions of the same image, blind-spot methods take a different approach. They let a model learn from a single noisy image by hiding selected pixels so the network can’t see their corrupted values.

The model must then predict the hidden pixels using only the surrounding context. The core idea is that noise is random, but the underlying structure of an image is not.

By preventing the model from copying a pixel’s noisy value, blind-spot methods encourage it to infer what that pixel should be based on stable image patterns such as nearby edges, textures, or color gradients. Techniques like Noise2Void (introduced by Alexander Krull and his team) and Noise2Self (developed by Joshua Batson and Loïc Royer) implement this principle by masking individual pixels or small neighborhoods and training the model to reconstruct them.

More advanced approaches, including Noise2Same and PN2V, improve robustness by enforcing consistent predictions across multiple masked versions or by explicitly modeling the noise distribution to estimate uncertainty. As these methods require only a single noisy image, they are especially useful in domains where capturing clean or paired images is impractical or impossible, such as microscopy, astronomy, biomedical imaging, or low-light photography.

Most pairwise and blind-spot self-supervised denoising methods rely on convolutional neural networks (CNNs) or denoising networks. CNNs are a great option for these approaches because they focus on local patterns, namely edges, textures, and small details.

Architectures like U-Net are widely used since they combine fine-grained features with multi-scale information. However, CNNs mainly operate within limited neighborhoods, which means they can miss important relationships that span larger regions of an image.

Transformer-supported state-of-the-art denoising methods were introduced to address this limitation. Instead of only looking at nearby pixels, the proposed method uses attention mechanisms to understand how different parts of an image relate to one another.

Some models use full global attention, while others use window-based or hierarchical attention to reduce computation, but in general, they are designed to capture long-range structure that CNNs by themselves can't. This broader view helps the model restore repeating textures, smooth surfaces, or large objects that require information from across the image.

Aside from self-supervised techniques, there are also several other ways to clean up noisy images. Traditional methods, like bilateral filtering, wavelet denoising, and non-local means, use simple mathematical rules to smooth noise while trying to keep important details.

Meanwhile, deep-learning approaches also exist, including supervised models that learn from clean–noisy image pairs and generative adversarial networks (GANs) that generate sharper, more realistic results. However, these methods usually require better image quality for training.

Since we just walked through several different techniques, you might be wondering whether each one works in a completely different way, given that they use their own architectures. However, they all follow a similar pipeline that begins with data preparation and ends with model evaluation.

Next, let's take a closer look at how the overall self-supervised denoising image process works step by step.

Before the model can start learning from noisy images, the first step is to make sure all the images look consistent. Real photos can vary a lot.

Some images may be too bright, others too dark, and some may have colors that are slightly off. If we feed these variations directly into a model, it becomes harder for it to focus on learning what noise looks like.

To handle this, each image goes through normalization and basic preprocessing. This might include scaling pixel values to a standard range, correcting intensity variations, or cropping and resizing. The key is that the model receives clean data that can be used as stable, comparable inputs.

Once the images have been normalized, the next step is to create a training signal that allows the model to learn without ever seeing a clean image. Self-supervised denoising methods do this by ensuring the model can't simply copy the noisy pixel values it receives.

Instead, they create situations where the model must rely on the surrounding context of the image, which contains a stable structure, rather than the unpredictable noise. Different methods achieve this in slightly different ways, but the core idea is the same.

Some approaches temporarily hide or mask certain pixels so the model has to infer them from their neighbors, while others generate a separately corrupted version of the same noisy image so the input and target contain independent noise. In both cases, the target image carries meaningful structural information but prevents the network from accessing the original noisy value of the pixel it is supposed to predict.

Because noise changes randomly while the underlying image remains consistent, this setup naturally encourages the model to learn what the true structure looks like and ignore the noise that varies from one version to another.

With the training signal in place, the model can begin learning how to separate meaningful image structure from noise through model training. Each time it predicts a masked or re-corrupted pixel, it must rely on the surrounding context instead of the noisy value that originally occupied that spot.

Over many iterations or epochs, this teaches the network to recognize the kinds of patterns that remain stable across an image, such as edges, textures, and smooth surfaces. It also learns to ignore the random fluctuations that characterize noise.

For example, consider a low-light photo where a surface looks extremely grainy. Although the noise varies from pixel to pixel, the underlying surface is still smooth. By repeatedly inferring the hidden pixels in such regions, the model gradually becomes better at identifying the stable pattern beneath the noise and reconstructing it more cleanly.

Through the model training process, the network learns an internal representation of the image's structure. This lets the model recover coherent details even when the input is heavily corrupted.

After the model has learned to predict hidden or re-corrupted pixels, the final step is to evaluate how well it performs on full images. During testing, the model receives an entire noisy image and produces a complete denoised version based on what it learned about image structure. To measure how effective this process is, the output is compared against clean reference images or standard benchmark datasets.

Two commonly used metrics are PSNR (Peak Signal-to-Noise Ratio), which measures how close the reconstruction is to the clean ground truth, and SSIM (Structural Similarity Index), which evaluates how well important features such as edges and textures are preserved. Higher scores generally indicate more accurate and visually reliable denoising.

Self-supervised denoising research, appearing in IEEE journals and CVF conferences, among others, CVPR, ICCV, and ECCV, as well as widely distributed on arXiv, often relies on a mix of synthetic and real-world datasets to assess the model performance of deep learning methods under both controlled and practical conditions. On one hand, synthetic datasets begin with clean images and add artificial noise, making it easy to compare methods using metrics like PSNR and SSIM.

Here are some popular datasets commonly used with synthetic noise added for benchmarking:

Real-world noisy datasets, on the other hand, contain images captured directly from camera sensors under low light, high ISO, or other challenging conditions. These datasets test whether a model can handle complex, non-Gaussian noise that can’t be easily simulated.

Here are some popular real-world noisy datasets:

.webp)

Here are some factors and limitations to consider if you are going to train a deep-learning-based self-supervised denoising model:

Self-supervised denoising gives AI enthusiasts a practical way to clean up images using only the noisy data we already have. By learning to recognize real structure beneath the noise, these methods can recover important visual details. As denoising technology continues to improve, it will likely make a wide range of computer vision tasks more reliable in everyday settings.

Become a part of our growing community! Dive into our GitHub repository to learn more about AI. If you're looking to build computer vision solutions, check out our licensing options. Explore the benefits of computer vision in retail and see how AI in manufacturing is making a difference!