Learn about U-Net architecture, how it supports image segmentation, its applications, and why it's significant in the evolution of computer vision.

Learn about U-Net architecture, how it supports image segmentation, its applications, and why it's significant in the evolution of computer vision.

Computer vision is a branch of artificial intelligence (AI) that focuses on analyzing visual data. It has paved the way for many cutting-edge systems, such as automating the process of inspecting products in factories and helping autonomous vehicles navigate roads.

One of the most well-known computer vision tasks is object detection. This task enables models to locate and identify objects within an image using bounding boxes. While bounding boxes are helpful for various applications, they only provide a rough estimate of an object’s location.

However, in fields like healthcare, where precision is crucial, Vision AI use cases depend on more than just identifying an object. Often, they also require information related to the exact shape and position of objects.

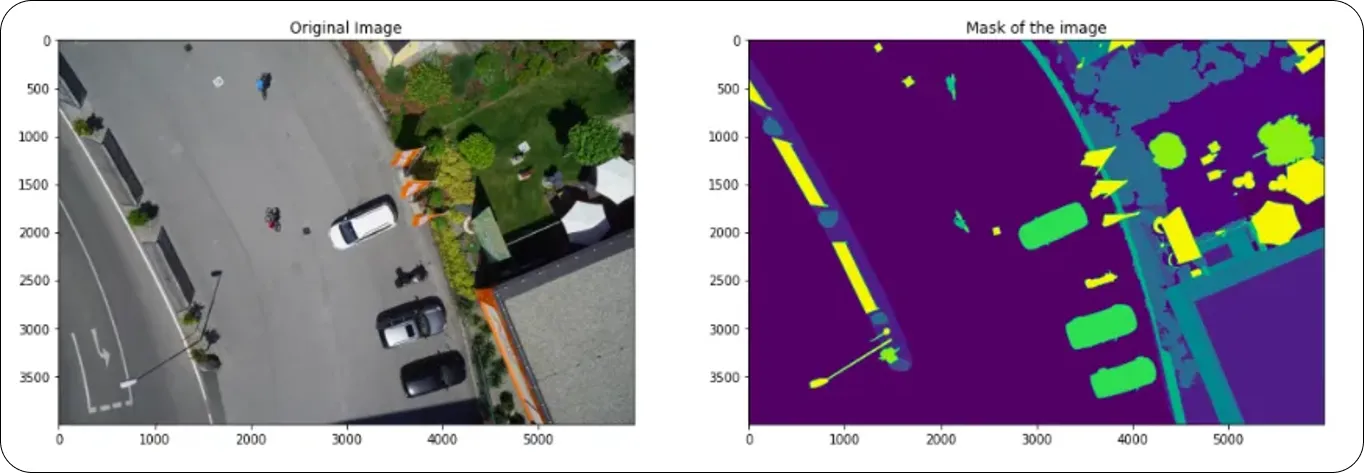

That’s exactly what the computer vision task, segmentation, is designed to do. Instead of using bounding boxes, segmentation models detect objects at the pixel level. Over the years, researchers have developed specialized computer vision models for segmentation.

One such model is U-Net. Although newer, more advanced models have surpassed its performance, U-Net holds a significant place in the history of computer vision. In this article, we’ll take a closer look at the U-Net architecture, how it works, where it has been used, and how it compares to more modern segmentation models available today.

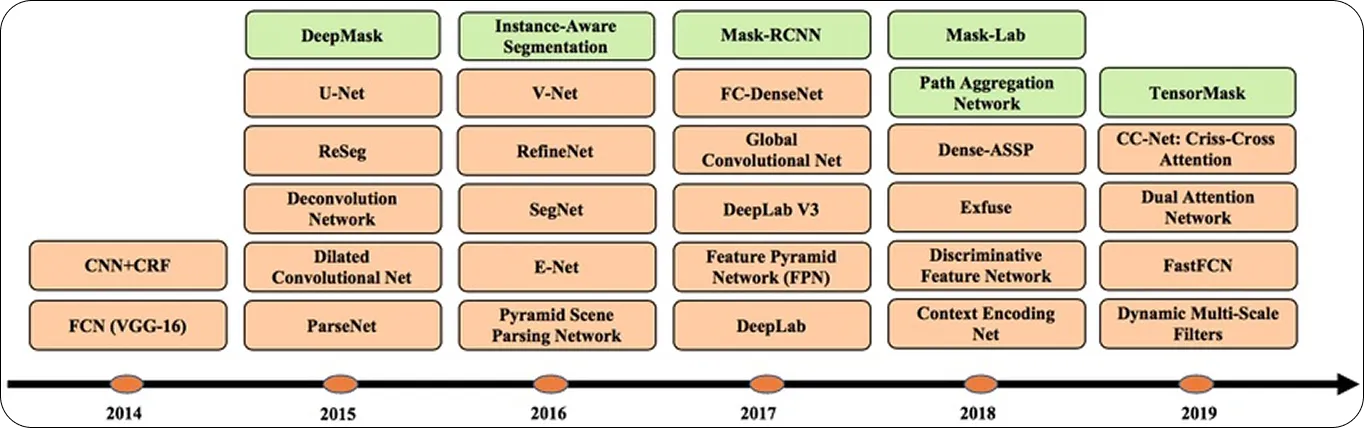

Before we dive into what U-Net is, let’s first get a better idea of how image segmentation models evolved.

Initially, computer vision relied on traditional techniques like edge detection, thresholding, or region growing to separate objects in an image. These techniques were used to detect object boundaries using edges, separate regions by pixel intensity, and group similar pixels. They worked for simple cases but often failed when images had noise, overlapping shapes, or unclear boundaries.

Following the rise of deep learning in 2012, researchers introduced the concept of fully convolutional networks (FCNs) in 2014 for tasks like semantic segmentation. These models replaced certain parts of a convolutional network to allow the computer to look at a whole image at once, instead of breaking it down into smaller pieces. This made it possible for the model to create detailed maps that showcase what’s in an image more clearly.

Building on the FCNs, U-Net was introduced by researchers at the University of Freiburg in 2015. It was originally designed for biomedical image segmentation. In particular, U-Net was designed to perform well in situations where annotated data is limited.

Meanwhile, later versions like UNet++ and TransUNet added upgrades such as attention layers and better feature extraction. The attention layers help the model focus on key regions, while enhanced feature extraction captures more detailed information.

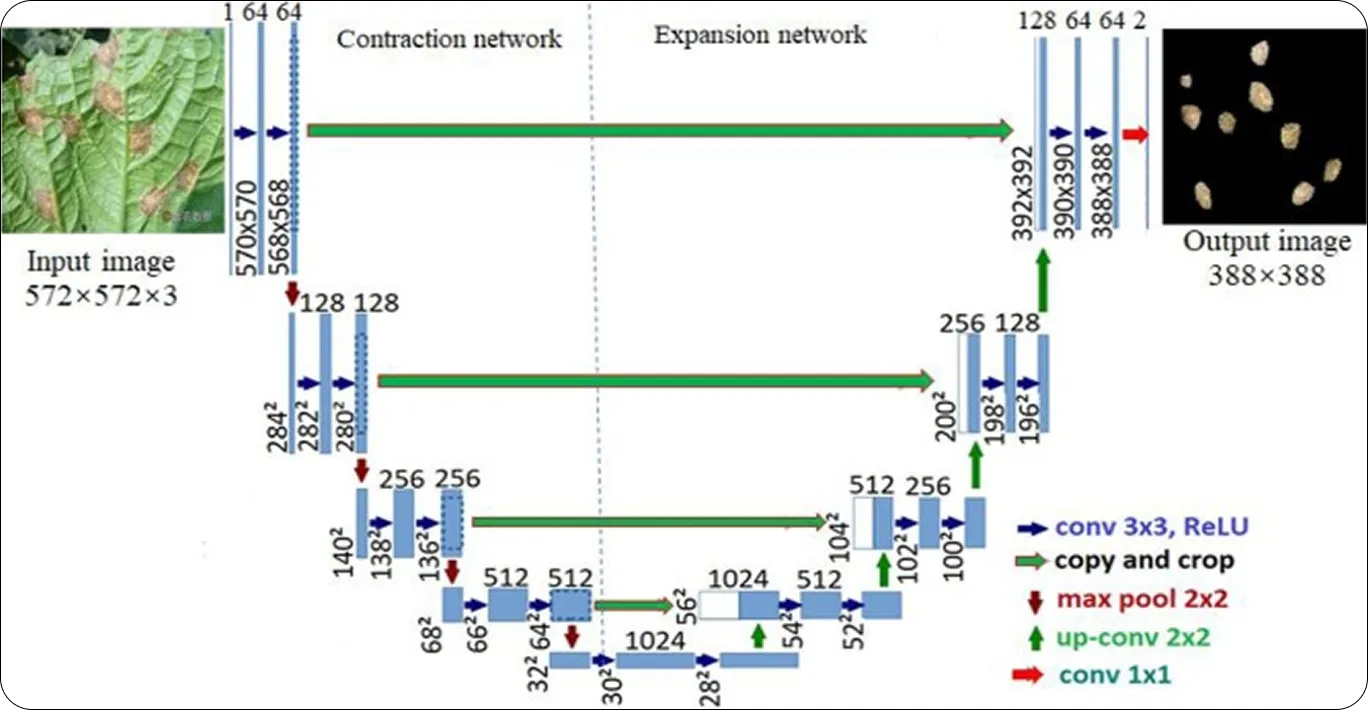

U-Net is a deep learning model built specifically for image segmentation. It takes an image as input and produces a segmentation mask that classifies each pixel according to the object or region it belongs to.

The model gets its name from its U-shaped architecture. It consists of two main parts: an encoder that compresses the image and learns its features, and a decoder that expands it back to the original size. This design creates a symmetrical U-shape, which helps the model understand both the overall structure of an image and its finer details.

One crucial feature of U-Net is the use of skip connections, which allow information from the encoder to be passed directly to the decoder. This means the model can preserve important details that might be lost when the image is compressed.

Here’s a glimpse of how U-Net’s architecture works:

As you explore U-Net, you might be wondering how it differs from other deep learning models, like the Vision Transformer (ViT), which can also perform segmentation tasks. While both models can perform similar tasks, they differ in terms of how they’re built and how they handle segmentation.

U-Net works by processing images at the pixel level through convolutional layers in an encoder-decoder structure. It's often used for tasks that require precise segmentation, like medical scans or self-driving car scenes.

On the other hand, the Vision Transformer (ViT) breaks images into patches and processes them simultaneously through attention mechanisms. It uses self-attention (a mechanism that allows the model to weigh the importance of different parts of the image relative to each other) to capture how different parts of the image relate to each other, unlike U-Net's convolutional approach.

Another important difference is that ViT generally needs more data to work well, but it’s great at picking up complex patterns. U-Net, on the other hand, performs well with smaller datasets and is quicker to train and often requires less training time.

Now that we have a better understanding of what U-Net is and how it works, let's explore how U-Net has been applied across different domains.

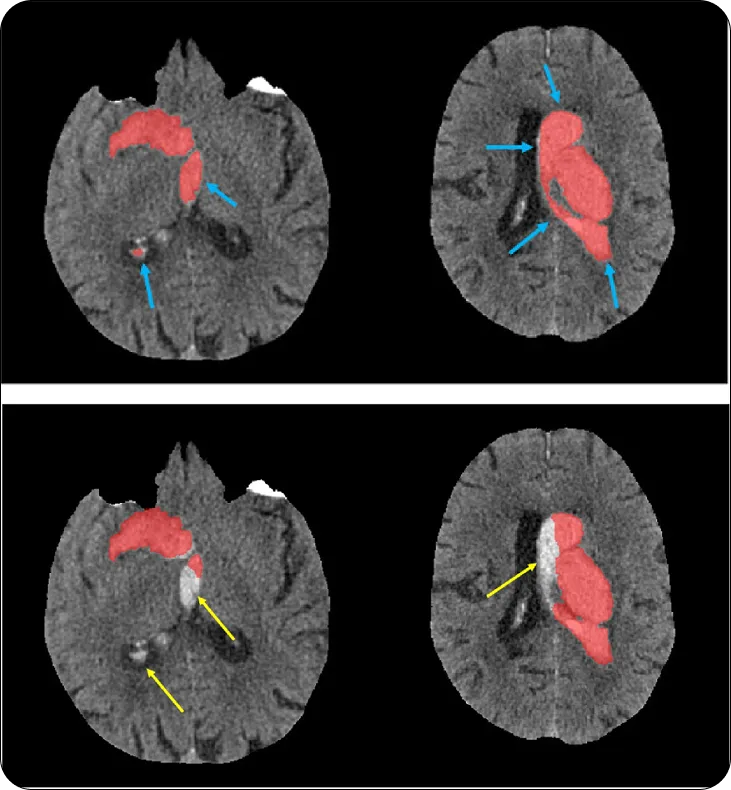

U-Net became a reliable method for pixel-level segmentation of complex medical images, particularly during its prime in research. It was used by researchers to highlight key areas in medical scans, such as tumors and signs of internal bleeding in CT and MRI images. This approach significantly advanced the accuracy of diagnoses and streamlined the analysis of complex medical data in research settings.

One example of U-Net's impact in healthcare research is its use in identifying stroke and brain hemorrhage in medical scans. Researchers could use U-Net to analyze head scans and highlight areas of concern, enabling quicker identification of cases requiring immediate attention.

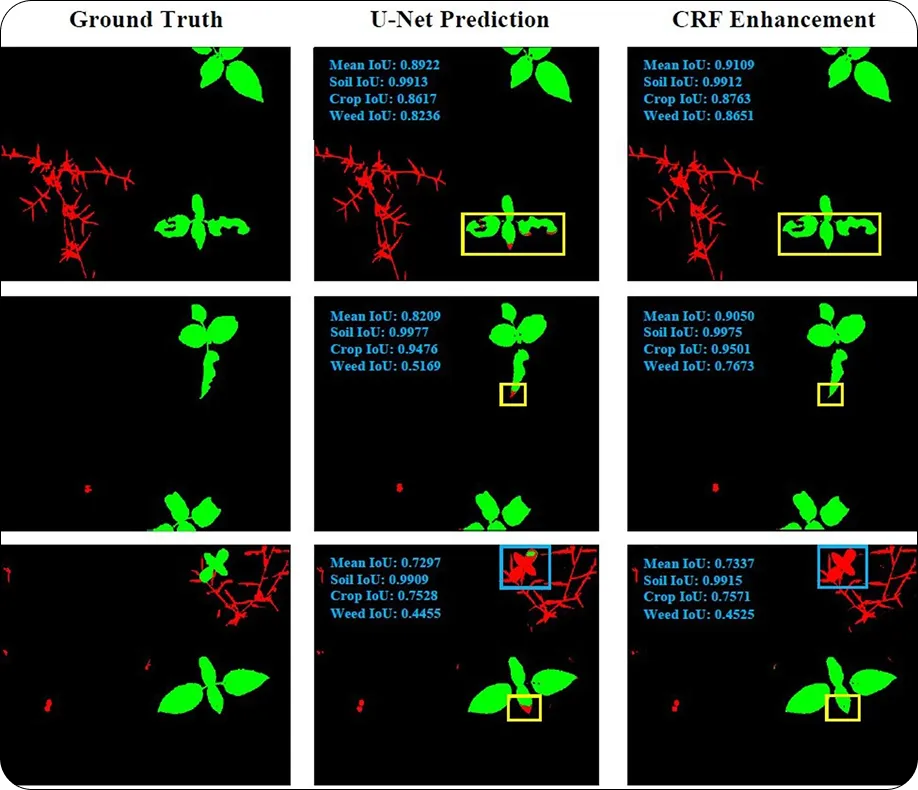

Another area where researchers have used U-Net is in agriculture, particularly for segmenting crops, weeds, and soil. It helps farmers monitor plant health, estimate yields, and make better decisions across large farms. For example, U-Net can separate crops from weeds, making herbicide application more efficient and reducing waste.

To address challenges like motion blur in drone images, researchers have improved U-Net with image deblurring techniques. This ensures clearer segmentation, even when data is collected while moving, such as during aerial surveys.

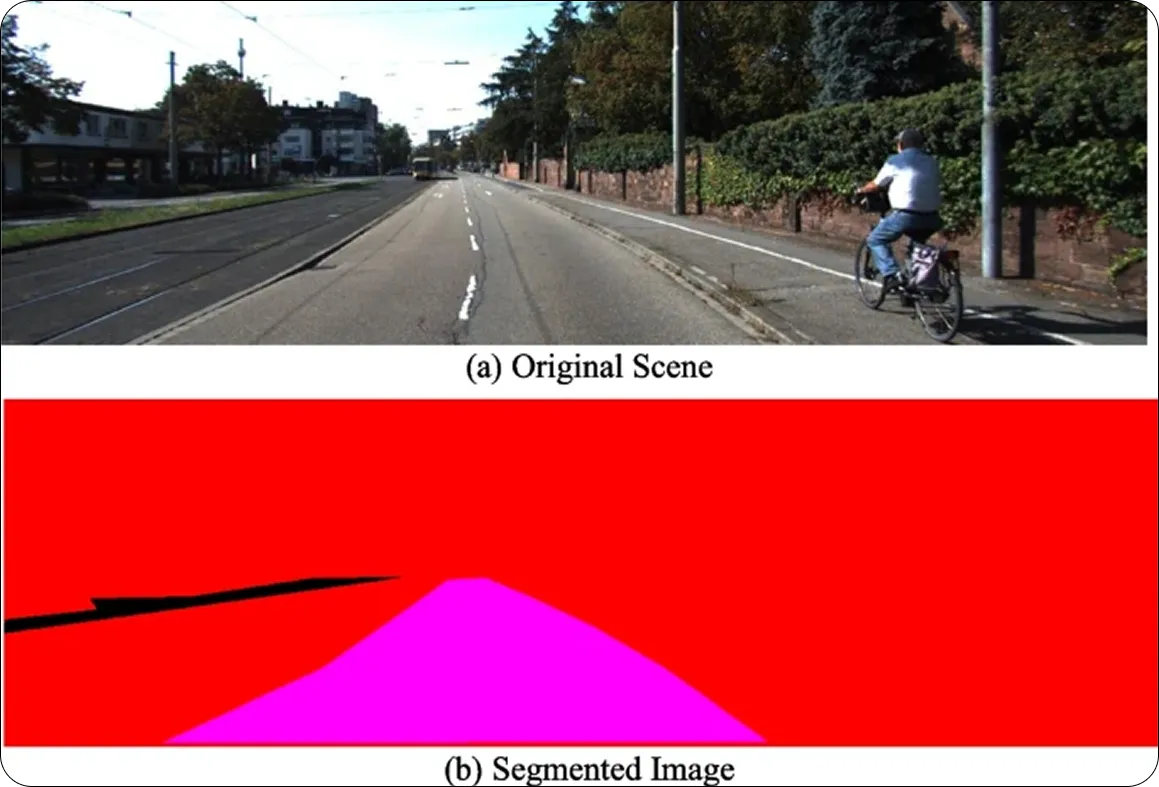

Before more advanced AI models were introduced, U-Net played a vital role in exploring how segmentation could enhance autonomous driving. In autonomous vehicles, U-Net's semantic segmentation can be used to classify each pixel in an image into categories like road, vehicle, pedestrian, and lane markings. This provides the car with a clear view of its surroundings, aiding in safe navigation and effective decision-making.

Even today, U-Net remains a good choice for image segmentation among researchers due to its balance of simplicity, accuracy, and adaptability. Here are some of the key advantages that make it stand out:

While U-Net has many strengths, there are also a few limitations to keep in mind. Here are some factors to consider:

U-Net has been a key milestone in the evolution of image segmentation. It proved that deep learning models can deliver accurate results using smaller datasets, especially in areas like medical imaging.

This breakthrough has paved the way for more advanced applications in various fields. As computer vision continues to evolve, segmentation models like U-Net remain fundamental in enabling machines to understand and interpret visual data with high precision.

Looking to build your own computer vision projects? Explore our GitHub repository to dive deeper into AI and check out our licensing options. Learn how computer vision in healthcare is improving efficiency and explore the impact of AI in retail by visiting our solutions pages! Join our growing community now!