Learn how dataset distillation speeds up model training and cuts computing costs by replacing large datasets with a small, optimized set of synthetic samples.

Learn how dataset distillation speeds up model training and cuts computing costs by replacing large datasets with a small, optimized set of synthetic samples.

Training models might seem like the most time-consuming part of a data scientist’s job. But most of their time, often 60% to 80%, actually goes into getting data ready: collecting it, cleaning it, and organizing it for modeling. As datasets grow larger, that prep time grows too, slowing down experiments and making iteration harder.

To tackle this, researchers have spent years looking for ways to streamline training. Approaches like synthetic data, dataset compression, and better optimization methods all aim to reduce the cost and friction of working with large-scale datasets and to speed up machine learning workflows.

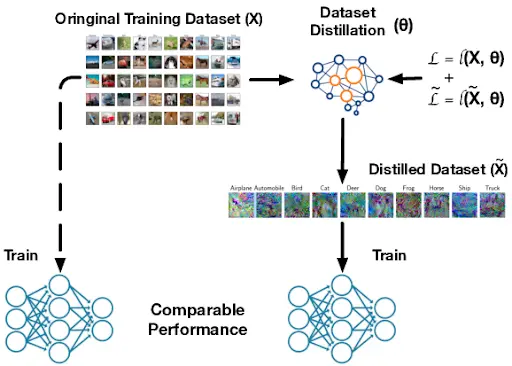

A key question this raises is whether we can drastically shrink a dataset while still achieving the same performance as training a model on the complete data. Dataset distillation is one promising answer.

It creates a compact version of a large training dataset while preserving the essential patterns the model needs to learn effectively. It provides a path to faster training, lower compute needs, and more efficient experimentation. You can think of it like a study cheat sheet for the model, a tiny set of synthetic data examples designed to teach the same core patterns as the full dataset.

In this article, we’ll explore how dataset distillation works and how it supports scalable machine learning and deep learning across real-world applications. Let’s get started!

Dataset distillation is a process where a large training dataset is condensed into a much smaller set of data that still teaches a model almost the same information as the original dataset. Many researchers also refer to this process as dataset condensation because the goal is to capture the essential patterns that appear across the full dataset.

A distilled dataset is different from randomly generated synthetic data or simply picking a smaller subset of real images. It isn’t a random fake dataset or a trimmed copy of the original.

Instead, it is deliberately optimized to capture the most important patterns. During this process, every pixel and feature is adjusted and optimized so that a neural network trained on the distilled data learns almost as if it were trained on the entire dataset.

This idea first appeared in a 2018 arXiv paper by Tongzhou Wang, Jun-Yan Zhu, Antonio Torralba, and Alexei A. Efros. Early tests used simple datasets like MNIST and CIFAR-10, which made it easy to show that a few distilled samples could stand in for thousands of real images.

.webp)

Since then, follow-up work has pushed dataset distillation further, including methods published at ICML and ICLR that make condensation more efficient and scalable.

Dataset distillation improves training efficiency and makes development cycles faster. By reducing the amount of data a model needs to learn from, it lowers the computational requirements.

This is especially useful for continual learning, where models update over time, neural architecture search, where many model designs are tested, and edge training, where models run on small devices with limited memory and power. Overall, these benefits make dataset distillation a great option for rapid initialization, quick fine-tuning, and building early prototypes across many machine learning workflows.

Dataset distillation creates synthetic, or artificially generated, training samples. These samples help a model learn in a way that closely resembles training it on real data. It works by tracking three key factors during normal training.

First is the loss function, which is the model’s error score showing how wrong its predictions are. Second is the model parameters, which are the network’s internal weights that get updated as it learns.

Third is the training trajectory, which describes how the error and weights change step by step over time. The synthetic samples are then optimized so that, when a model trains on them, its error drops and its weights update in the same way they would with the full dataset.

Here’s a closer look at how the dataset distillation process works:

All dataset distillation methods are built on the same core idea, even if they use different algorithms to get there. Most approaches fall into three categories: performance matching, distribution matching, and parameter matching.

Next, let’s look at each one and see how it works.

Performance matching in dataset distillation focuses on creating a tiny, optimized training set that lets a model reach nearly the same accuracy as if it were trained on the full, original dataset. Instead of picking a random subset, the distilled samples are optimized so that a model trained on them ends up with similar predictions, similar loss behavior during training, or similar final accuracy as a model trained on the original dataset.

Meta learning is a common method used to improve this process. The distilled dataset is updated through repeated training episodes, so it becomes effective across many possible situations.

During these episodes, the method simulates how a student model learns from the current distilled samples, checks how well that student performs on real data, and then adjusts the distilled samples to be better teachers. Over time, the distilled set learns to support fast learning and strong generalization, even when the student model starts from different initial weights or uses a different architecture. This makes the distilled dataset more reliable and not tied to a single training run.

.webp)

Meanwhile, distribution matching generates synthetic data that matches the statistical patterns of the real dataset. Instead of focusing only on the final accuracy of a model, this approach focuses on the internal features a neural network generates during learning.

Next, let’s take a look at the two techniques that drive distribution matching.

Single-layer distribution matching concentrates on a single layer of a neural network and compares the features it produces for real versus synthetic data. Those features, also called activations, capture what the model has learned at that point in the network.

By making the synthetic data produce similar activations, the method encourages the distilled dataset to reflect the same important patterns as the original dataset. In practice, the synthetic samples are repeatedly updated until the activations at that chosen layer closely match those from real images.

This approach is relatively simple because it aligns only one level of representation at a time. It can work especially well on smaller datasets or tasks where matching deep, multi-stage feature hierarchies is not necessary. By clearly aligning one feature space, single-layer matching provides a stable and meaningful signal for learning with the distilled dataset.

Multi-layer distribution matching builds on the idea of comparing real and synthetic data by doing it at several layers of a neural network instead of just one. Different layers capture different kinds of information, from simple edges and textures in early layers to shapes and more complex patterns in deeper layers.

By matching features across these layers, the distilled dataset is pushed to reflect what the model learns at multiple levels. Because it aligns features throughout the network, this approach helps the synthetic data preserve richer signals that the model relies on to tell classes apart.

This is especially helpful in computer vision, meaning tasks where models learn to understand images and videos, because useful patterns are spread across many layers. When the feature distributions match well at several depths, the distilled dataset acts as a stronger and more reliable stand-in for the original training data.

Another key category in dataset distillation is parameter matching. Instead of matching accuracy or feature distributions, it matches how a model’s weights change during training. By making training on the distilled dataset produce similar parameter updates to real data training, the model follows a nearly identical learning path.

We’ll walk through the two main parameter matching methods next.

Single-step matching compares what happens to a model’s weights after just one training step on real data. The distilled dataset is then tuned so that a model trained on it for one step produces a very similar weight update. Since it only focuses on this single update, the method is straightforward and fast to run.

The downside is that one step doesn’t reflect the full learning process, especially for harder tasks where the model needs many updates to build richer features. Because of that, single-step matching tends to work best on simpler problems or smaller datasets where useful patterns can be picked up quickly.

In contrast, multi-step parameter matching looks at how a model’s weights change over several training steps, not just one. This sequence of updates is the model’s training trajectory.

The distilled dataset is built so that when a model trains on the synthetic samples, its trajectory closely follows the one it would take on real data. By matching a longer stretch of learning, the distilled set captures more of the structure in the original training process.

Because it reflects how learning unfolds over time, multi-step matching usually works better for bigger or more complex datasets where models need many updates to pick up useful patterns. It does take more computation since it has to track multiple steps, but it often produces distilled datasets that generalize better and give better performance than single-step matching.

With a better understanding of the main distillation approaches, we can now look at how synthetic data is made. In dataset distillation, synthetic samples are optimized to capture the most important learning signal, so a small set can replace a much larger dataset.

Next, we will see how this distilled data is generated and evaluated.

During dataset distillation, the synthetic pixels are updated over many training steps. The neural network learns from the current synthetic images and sends gradient-based feedback, which shows how each pixel should change to match the patterns in the real dataset better.

This works because the process is differentiable (meaning every step is smooth and has well-defined gradients, so small pixel changes lead to predictable changes in the loss), allowing the model to smoothly adjust the synthetic data during gradient descent.

As the optimization continues, the synthetic images start to form meaningful structure, including shapes and textures that the model recognizes. These refined synthetic images are often used for image classification tasks because they capture the key visual cues a classifier needs to learn.

Distilled datasets are evaluated by training models on them and comparing the results to models trained on real data. Researchers measure validation accuracy and check whether the synthetic set preserves the discriminative features (the patterns or signals the model relies on to tell one class from another) needed to separate classes. They also test stability and generalization across different runs or model setups to make sure the distilled data doesn’t lead to overfitting.

Next, we’ll take a closer look at examples showing how distilled datasets speed up training and reduce compute costs while still maintaining strong performance, even when the data is limited or highly specialized.

When it comes to computer vision, the goal is to train models to understand visual data like images and videos. These models learn patterns such as edges, textures, shapes, and objects, and then use those patterns for tasks like image classification, object detection, or segmentation. Because vision problems often have huge variation in lighting, backgrounds, and viewpoints, computer vision systems usually need large datasets to generalize well, which makes training expensive and slow.

When it comes to image classification use cases such as medical scans, wildlife monitoring, or factory defect detection, models often face a tough tradeoff between accuracy and training cost. These tasks typically involve massive datasets.

Dataset distillation can compress the original training set into a small number of synthetic images that still contain the most important visual cues for the classifier. On large benchmarks like ImageNet, distilled sets using only about 4.2% of the original images have been shown to maintain strong classification accuracy. This means a tiny synthetic proxy can replace millions of real samples with far less compute.

Neural architecture search, or NAS, is a technique that automatically explores many possible neural network designs to find one that works best for a task. Because NAS has to train and evaluate a large number of candidate models, running it on full datasets can be slow and very computationally intensive.

Dataset distillation helps by creating a tiny synthetic training set that still contains the main learning signal of the original data, so each candidate architecture can be tested much faster. This lets NAS compare designs efficiently while keeping the rankings of good versus bad architectures reasonably reliable, reducing search cost without sacrificing much final model quality.

Continual learning systems, meaning models that keep updating as new data arrives instead of being trained once, need updates that are fast and memory efficient. Edge devices like cameras, phones, and sensors face similar limits because they have tight compute and storage budgets.

Dataset distillation helps in both cases by compressing a large training set into a tiny synthetic one, so models can adapt or retrain using a small replay set rather than the full dataset. For instance, kernel-based meta-learning work showed that just 10 distilled samples can achieve over 64% accuracy on CIFAR-10, a standard image classification benchmark. Because the replay set is so compact, updates become far quicker and more practical, especially when models need to be refreshed often.

Dataset distillation can also work alongside knowledge distillation for large language models. A small distilled dataset can keep the most important task signals from the teacher model, so a compressed student model can be trained or refreshed more efficiently without losing much performance. Since these datasets are tiny, they are especially helpful for edge or on-device use, where storage and compute are limited but you still want the model to stay accurate after updates.

Here are some benefits of using dataset distillation:

While dataset distillation offers several advantages, here are some limitations to keep in mind:

Dataset distillation makes it possible for a small set of synthetic samples to teach a model almost as effectively as a complete dataset. This makes machine learning faster, more efficient, and easier to scale. As models grow and require more data, distilled datasets offer a practical way to reduce compute costs without sacrificing accuracy.

Join our community and check out our GitHub repository to discover more about AI. If you're looking to build your own Vision AI project, check out our licensing options. Explore more about applications like AI in healthcare and Vision AI in retail by visiting our solutions pages.