Monitoring airport ground operations with Ultralytics YOLO11

See how Ultralytics YOLO11 can enhance airport ground operations by monitoring the tarmac, detecting anomalies, tracking crew activity, and improving safety.



Globally, airports manage over 100,000 flights every day, putting constant pressure on ground crews to keep everything running smoothly. Airports are among the busiest and most complex work environments, and every flight depends on ground operations that follow a precise schedule.

Even small issues, like a delayed cargo load or a missed safety check, can lead to flight disruptions or create serious safety risks on the tarmac. Ground crews are responsible for a wide range of critical tasks to keep airport operations on track.

They guide aircraft, operate support vehicles, manage loading zones, and work within tight turnaround windows. Despite the pace and complexity, many of these tasks still rely on manual checks, outdated systems, and limited automation.

Missteps, like a cart left outside its designated area or a crew member entering an active taxiway, can cause delays or create safety hazards. To better handle these challenges, airports are starting to use computer vision, a subfield of artificial intelligence (AI) that enables computers to analyze and understand images and videos.

With computer vision models like Ultralytics YOLO11, airports can monitor ground operations in real time. For instance, YOLO11 can be used to detect aircraft, vehicles, baggage carts, crew movement, and unexpected objects. This real-time visibility helps airports respond faster to potential issues and make more informed decisions on the ground.

In this article, we’ll explore how Ultralytics YOLO11 can make airport ground operations safer by providing real-time monitoring, enhancing situational awareness, and helping reduce the risk of delays and accidents on the tarmac. Let’s get started!

Airport ground operations refer to all the activities that take place on the tarmac to prepare an aircraft for departure or arrival. These tasks include guiding aircraft to gates, loading and unloading baggage and cargo, refueling, catering, and coordinating support vehicles. Each of these tasks must be completed within a short window to keep flights on schedule.

Because planes often operate on tight turnaround times, ground operations are very time-sensitive. Any delay on the ground, whether it’s a fueling issue, a late baggage transfer, or a safety check that takes too long, can lead to flight disruptions, missed connections, or increased costs for airlines.

Adding to the pressure, these tasks happen in busy, open environments with constant vehicle and personnel movement. Ground crews have to coordinate closely to manage shared spaces safely and efficiently, often while dealing with changing weather conditions or visibility challenges.

Many of these operations still rely on manual processes. Crews use walkie-talkies, visual checks, and verbal communication to track activity, which can make it hard to catch problems early or respond quickly.

As airports handle more flights, ground operations are becoming harder to manage. Manual supervision alone can’t keep up with the speed and precision that today’s airports need.

Computer vision models like Ultralytics YOLO11 can help address these problems by giving airports a streamlined way to analyze, track, and understand what is happening on the ground in real time. In particular, YOLO11 can flag issues as they happen, so crews can act before small problems turn into big ones.

On top of object detection, YOLO11 supports a variety of other Vision AI tasks, such as object tracking, pose estimation, and instance segmentation. Each of these comes up in the airport use cases below.

Airport ground operations involve many moving parts happening at the same time, yet only a few are monitored in real time. It’s often hard to tell which equipment is in use, where support vehicles are located, or whether safety procedures are being followed.

These gaps can slow down operations and increase the risk of error. Next, let’s walk through some use cases where YOLO11 can optimize ground operations.

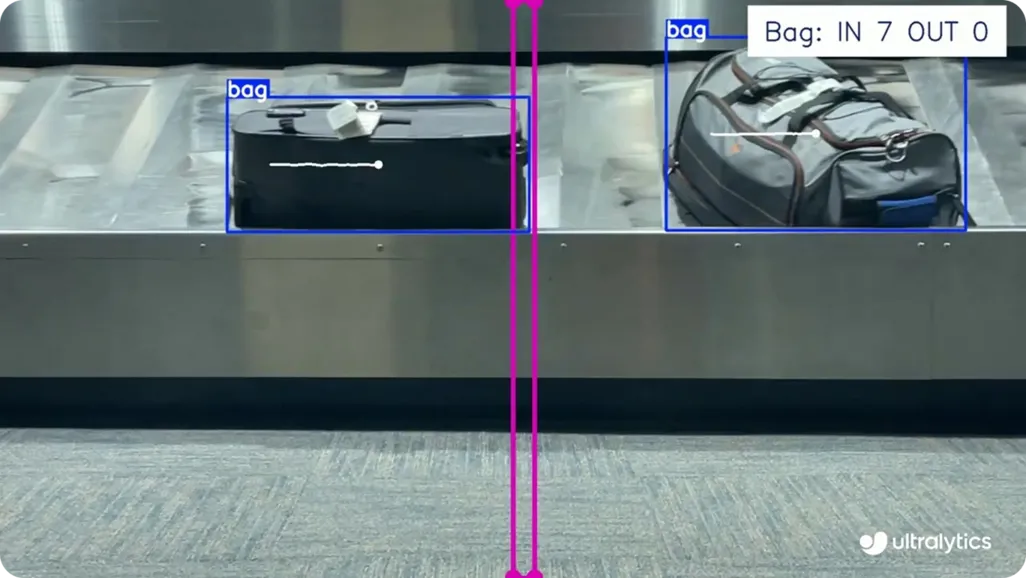

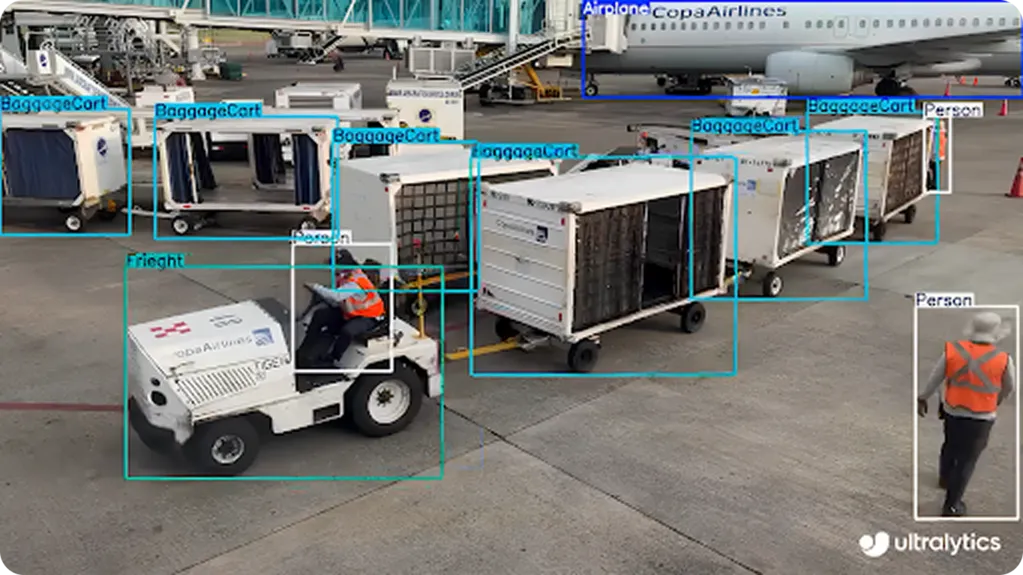

Ground support vehicles like baggage carts, freight loaders, catering trucks, and service vans are essential to every flight’s turnaround. These vehicles generally move through shared spaces and need to be in the right place at the right time. Without proper tracking, they can block access paths and delay loading operations.

YOLO11’s support for object detection can be used to identify and locate each vehicle as it moves across the apron. This gives teams a live view of equipment locations and highlights when something is out of place. It reduces confusion, and supervisors can use the information to improve vehicle flow and keep equipment from sitting idle or lingering in high-traffic zones.
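To make "highlights when something is out of place" concrete, here is a minimal sketch of a zone check that could run on top of detection output. It assumes the boxes have already been pulled out of a YOLO11 result (for example from `results[0].boxes.xyxy` plus class names) and that a custom-trained model emits labels like `baggage_cart`; those label names, the `Detection` class, and the zone polygons are all hypothetical. Each vehicle is reduced to the bottom-center of its box, roughly where it touches the ground, and tested against the polygons it is allowed to occupy.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Detection:
    """Hypothetical container: a class label and an (x1, y1, x2, y2) pixel box."""
    label: str
    box: tuple[float, float, float, float]


def point_in_polygon(x: float, y: float, polygon: list[tuple[float, float]]) -> bool:
    """Ray-casting test: True if (x, y) lies inside the polygon."""
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        # Count crossings of a horizontal ray cast to the right of (x, y).
        if (y1 > y) != (y2 > y):
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


def out_of_place(detections: list[Detection], allowed_zones: dict) -> list[Detection]:
    """Return detections whose ground point is outside every zone allowed for their label."""
    flagged = []
    for det in detections:
        x1, y1, x2, y2 = det.box
        # Bottom-center of the box approximates the vehicle's position on the apron.
        px, py = (x1 + x2) / 2, y2
        zones = allowed_zones.get(det.label, [])
        # Labels with no configured zone are flagged too, so nothing slips through silently.
        if not any(point_in_polygon(px, py, zone) for zone in zones):
            flagged.append(det)
    return flagged
```

Flagging labels that have no configured zone is a deliberate (and debatable) choice: it errs toward extra alerts rather than missed ones. Zones are drawn in image pixels here, so they would need to be redrawn if the camera moves.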

For example, if a cart remains in a loading zone past its scheduled time, a system with YOLO11 integrated can flag it for removal. Similarly, YOLO11’s object tracking can follow each vehicle from frame to frame, reducing the need for verbal check-ins or manual reporting.
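The "cart left in a loading zone too long" rule can be sketched as a small dwell-time monitor. In the Ultralytics package, running `model.track(source, persist=True)` assigns each box a persistent ID (available as `results[0].boxes.id`); this sketch assumes those IDs and a ground point per vehicle have already been extracted for each frame. The `DwellMonitor` class, the zone polygon, and the single fixed `max_seconds` limit are illustrative assumptions; a real deployment would likely look up the scheduled time per turnaround instead.

```python
def point_in_polygon(x, y, polygon):
    """Ray-casting test: True if (x, y) lies inside the polygon."""
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        if (y1 > y) != (y2 > y):
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


class DwellMonitor:
    """Hypothetical helper: tracks how long each track ID stays inside one zone."""

    def __init__(self, zone, max_seconds):
        self.zone = zone                # polygon [(x, y), ...] in pixel coordinates
        self.max_seconds = max_seconds  # assumed fixed limit for this zone
        self.entered_at = {}            # track_id -> timestamp first seen inside

    def update(self, timestamp, tracks):
        """tracks: iterable of (track_id, (x, y)) ground points for one frame.

        Returns the sorted IDs that have stayed in the zone longer than max_seconds.
        """
        seen_inside = set()
        for track_id, (x, y) in tracks:
            if point_in_polygon(x, y, self.zone):
                seen_inside.add(track_id)
                # Only the first sighting inside the zone starts the timer.
                self.entered_at.setdefault(track_id, timestamp)
        # Forget tracks that left the zone or were not reported this frame.
        for track_id in list(self.entered_at):
            if track_id not in seen_inside:
                del self.entered_at[track_id]
        return sorted(
            tid for tid, t0 in self.entered_at.items()
            if timestamp - t0 > self.max_seconds
        )
```

Because the timer resets as soon as a track is missing from a frame, a brief tracker dropout would also reset it; adding a short grace period before forgetting an ID is one way to make this more robust.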

Ground crew members, such as baggage handlers, technicians, and fuel operators, work close to aircraft and heavy equipment, often in areas with limited visibility. Their work requires moving quickly between different areas, so they need to stay focused on both timing and safety. When something doesn’t go as planned, it can lead to injuries or disrupt the flow of airport operations.

To make these tasks safer, YOLO11’s pose estimation capabilities can be used to analyze how people move within active areas. It can recognize body posture and flag movements that don’t follow safety guidelines. For instance, it can spot when someone bends too close to an engine.
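As a rough illustration of how "someone bends too close to an engine" could be turned into a rule, the sketch below works on the 17 COCO-style keypoints that YOLO11 pose models output (per person, `results[0].keypoints.xy` holds their pixel coordinates). It measures the angle at the hips between the shoulders and the knees: close to 180° when standing upright, smaller when bending forward. The 120° threshold, the 50-pixel margin, and the engine bounding box (which would have to come from a separately trained detector) are all assumptions for illustration, not values from any guideline.

```python
import math

# Indices into the 17-point COCO keypoint layout used by YOLO11 pose models.
L_SHOULDER, R_SHOULDER = 5, 6
L_HIP, R_HIP = 11, 12
L_KNEE, R_KNEE = 13, 14


def midpoint(a, b):
    """Midpoint of two (x, y) points."""
    return ((a[0] + b[0]) / 2, (a[1] + b[1]) / 2)


def angle_at(vertex, p, q):
    """Angle in degrees at `vertex` between the rays vertex->p and vertex->q."""
    v1 = (p[0] - vertex[0], p[1] - vertex[1])
    v2 = (q[0] - vertex[0], q[1] - vertex[1])
    norm = math.hypot(*v1) * math.hypot(*v2)
    if norm == 0:
        # Collapsed or missing keypoints: report "upright" rather than a false alarm.
        return 180.0
    cos_theta = (v1[0] * v2[0] + v1[1] * v2[1]) / norm
    # Clamp to guard against floating-point drift just outside [-1, 1].
    return math.degrees(math.acos(max(-1.0, min(1.0, cos_theta))))


def is_bending(keypoints, max_hip_angle=120.0):
    """True if the shoulder-hip-knee angle is below the assumed threshold."""
    shoulder = midpoint(keypoints[L_SHOULDER], keypoints[R_SHOULDER])
    hip = midpoint(keypoints[L_HIP], keypoints[R_HIP])
    knee = midpoint(keypoints[L_KNEE], keypoints[R_KNEE])
    return angle_at(hip, shoulder, knee) < max_hip_angle


def bending_near_engine(keypoints, engine_box, margin=50.0):
    """Flag a person who is bending while their hips are within `margin` px of an engine box."""
    hip_x, hip_y = midpoint(keypoints[L_HIP], keypoints[R_HIP])
    x1, y1, x2, y2 = engine_box
    near = (x1 - margin <= hip_x <= x2 + margin) and (y1 - margin <= hip_y <= y2 + margin)
    return near and is_bending(keypoints)
```

Angles measured in 2D image space depend on the camera viewpoint, so a threshold like this would need to be tuned per camera rather than reused everywhere.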

Pose estimation also supports training and safety reviews by providing detailed movement data that can be analyzed after a shift. This helps teams identify patterns, correct unsafe habits, and reinforce proper procedures during future operations.

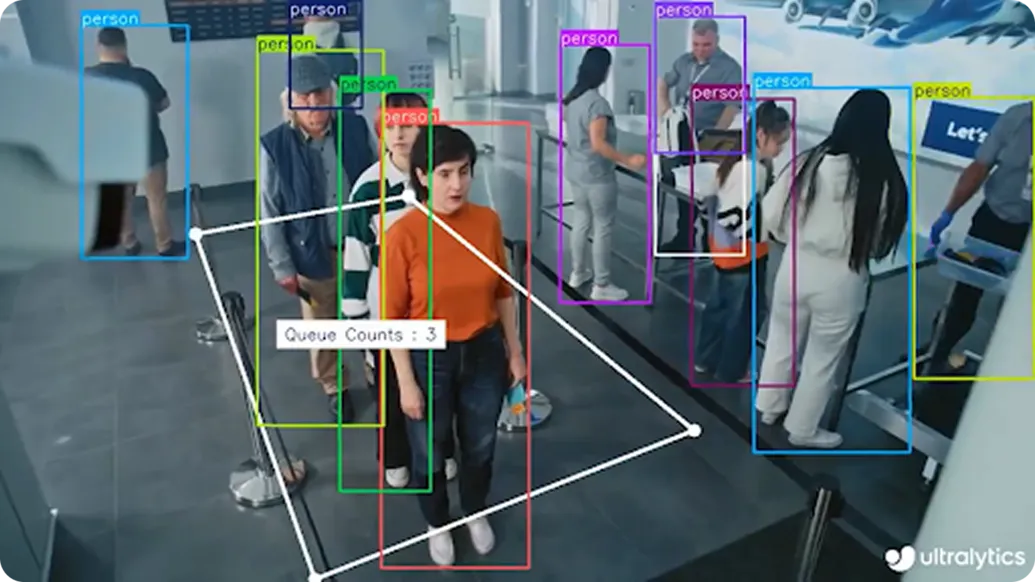

Keeping passengers moving smoothly through the airport is directly linked to ground operations. Consider a situation where baggage loading is delayed. This can slow down boarding, lead to crowding at the gate, and cause disruptions throughout the terminal.

Similarly, if a support vehicle or crew member arrives late, it can delay aircraft turnaround and affect the flow of passengers during both arrivals and departures.

Managing queues effectively is also a key part of keeping things on schedule. Long lines at check-in, security, or boarding gates can lead to missed flights and passenger frustration.

Using YOLO11 for object detection and tracking, smart airports can monitor queue lengths and passenger movement in real time. Vision-enabled systems can alert staff when lines become too long or when it's time to open additional lanes, helping to reduce wait times and prevent congestion.
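A queue-length alert like the one described above could be sketched as follows. It assumes person boxes are already available per frame (in pretrained COCO YOLO11 models, "person" is class 0) and that the queue area can be approximated by a rectangle in the image; the `QueueMonitor` name, the rectangle, and the thresholds are hypothetical. Counts are averaged over a sliding window of frames so that a single noisy frame doesn’t trigger an alert.

```python
from collections import deque


class QueueMonitor:
    """Hypothetical helper: counts people in a queue region and smooths over frames."""

    def __init__(self, region, max_people, window=30):
        self.region = region            # (x1, y1, x2, y2) queue area in pixels (assumed)
        self.max_people = max_people    # alert when the average count exceeds this
        self.counts = deque(maxlen=window)

    def update(self, person_boxes):
        """person_boxes: iterable of (x1, y1, x2, y2) boxes for one frame.

        Returns (count_this_frame, alert).
        """
        rx1, ry1, rx2, ry2 = self.region
        count = 0
        for x1, y1, x2, y2 in person_boxes:
            # Use the bottom-center of the box as an approximate foot position.
            fx, fy = (x1 + x2) / 2, y2
            if rx1 <= fx <= rx2 and ry1 <= fy <= ry2:
                count += 1
        self.counts.append(count)
        average = sum(self.counts) / len(self.counts)
        # Only alert once the window is full, so start-up frames can't trigger it alone.
        alert = len(self.counts) == self.counts.maxlen and average > self.max_people
        return count, alert
```

The window length trades responsiveness for stability: a longer window ignores brief crowding near the gate but takes longer to react when a real queue builds up.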

Runways and aprons are critical parts of airport infrastructure. Runways are paved paths used for aircraft takeoff and landing, while aprons are the areas where planes are parked, loaded, or serviced.

These areas need regular surface checks to keep taxiing, parking, and servicing safe. Problems like cracks, fluid spills, standing water, or debris can be easy to miss but might cause delays or damage if not dealt with quickly.

YOLO11’s instance segmentation capability can detect these defects and outline each one at the pixel level. The model can process images in real time and highlight surface areas that need attention, so maintenance crews can receive alerts and schedule cleanup or repairs without waiting for manual inspections.
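One way to turn segmentation masks into maintenance alerts is to estimate each defect’s physical size and compare it with a per-class threshold. The Ultralytics `Results` object exposes mask outlines as pixel-coordinate polygons via `results[0].masks.xy`; this sketch assumes those polygons have been paired with class labels from a custom model (labels such as `fluid_spill` and `crack` are hypothetical). It also assumes a fixed overhead camera where one pixel covers a known, constant ground distance (`meters_per_pixel`), which ignores perspective distortion. Area is computed with the shoelace formula.

```python
def polygon_area(points):
    """Shoelace formula: area of a simple polygon given as [(x, y), ...]."""
    n = len(points)
    twice_area = 0.0
    for i in range(n):
        x1, y1 = points[i]
        x2, y2 = points[(i + 1) % n]
        twice_area += x1 * y2 - x2 * y1
    return abs(twice_area) / 2.0


def defect_alerts(segments, meters_per_pixel, min_area_m2):
    """segments: list of (label, polygon) pairs from a segmentation model.

    Returns (label, area_m2) for each defect at or above its class threshold.
    Labels without a threshold in `min_area_m2` are ignored.
    """
    alerts = []
    for label, polygon in segments:
        # Pixel area -> square meters, assuming a constant ground scale per pixel.
        area_m2 = polygon_area(polygon) * meters_per_pixel ** 2
        threshold = min_area_m2.get(label)
        if threshold is not None and area_m2 >= threshold:
            alerts.append((label, round(area_m2, 3)))
    return alerts
```

Per-class thresholds reflect that, for example, a small fluid spill may warrant attention sooner than a hairline crack of the same area; the actual values would come from the airport’s own maintenance policy.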

Here’s a look at some of the key benefits of using computer vision to improve airport ground operations:

On the other hand, there are also some limitations to keep in mind when implementing a Vision AI solution. Here are a few factors to consider:

Computer vision models like Ultralytics YOLO11 are making it easier to monitor airport ground operations in real time. By detecting ground vehicles, tracking personnel, and identifying surface-level risks, YOLO11 can improve situational awareness and reduce the likelihood of errors during time-sensitive operations.

Looking ahead, models like YOLO11 can support semi-autonomous systems that manage vehicle routing, guide aircraft movements, and monitor personnel zones in real time. As Vision AI improves, it's becoming an important tool for making airport ground operations safer, more efficient, and better able to keep up with growing demand.

Join our growing community! Explore our GitHub repository to learn more about AI. Ready to start your own computer vision projects? Check out our licensing options. Discover AI in agriculture and Vision AI in healthcare by visiting our solutions pages!