Ultralytics YOLOv8 for speed estimation in computer vision projects

Discover how the Ultralytics YOLOv8 model can be used for speed estimation in your computer vision projects. Try it out yourself with a simple coding example.

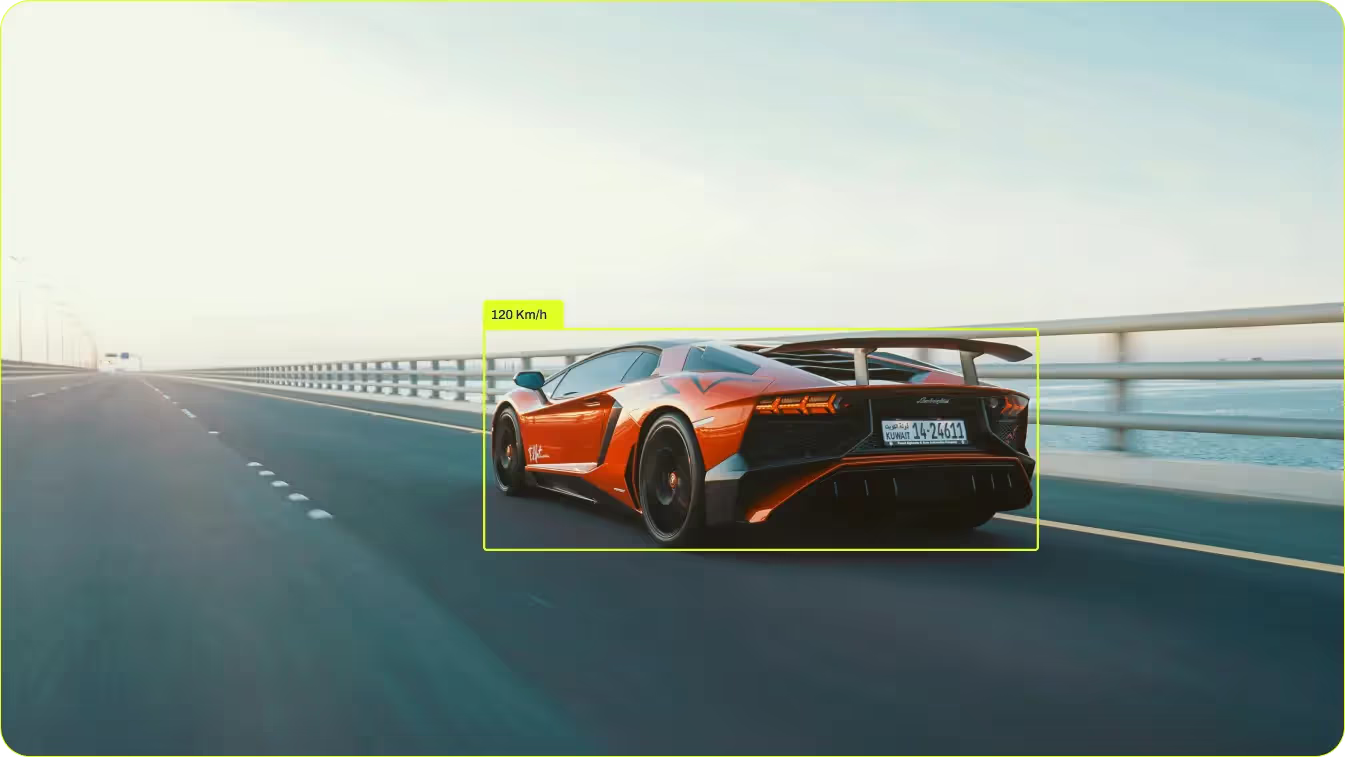

We’ve probably all seen speed limit road signs. Some of us may have even received an automated speed limit violation notification by post or email. Artificial intelligence (AI) traffic management systems can flag speeding violations automatically thanks to computer vision. Real-time footage captured by cameras at street lights and on highways is used for speed estimation and to reinforce road safety.

Speed estimation isn’t just limited to highway safety. It can be used in sports, autonomous vehicles, and various other applications. In this article, we’ll discuss how you can use the Ultralytics YOLOv8 model for speed estimation in your computer vision projects. We’ll also walk step-by-step through a coding example so you can try it out yourself. Let’s get started!

According to the World Health Organization (WHO), approximately 1.19 million people die each year as a result of road traffic crashes, and speeding is one of the key risk factors. Additionally, 20 to 50 million more people suffer non-fatal injuries, many of which result in disabilities. The importance of traffic safety cannot be overstated, and speed estimation can help prevent accidents, save lives, and keep our roads safe and efficient.

Speed estimation using computer vision involves detecting and tracking objects in video frames to calculate how fast they're moving. Algorithms like YOLOv8 can identify and track objects such as vehicles across consecutive frames. The system measures the distance these objects travel using calibrated cameras or reference points to gauge real-world distances. By timing how long it takes for objects to move between two points, the system calculates their speed using the distance-time ratio.
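As a rough illustration of the distance-time ratio described above, the sketch below turns the frame numbers at which an object passes two reference points into a speed. The function name `speed_kmh`, the 20 m reference distance, and the 30 fps frame rate are assumptions chosen for this example, not values taken from any particular system.

```python
def speed_kmh(distance_m: float, start_frame: int, end_frame: int, fps: float) -> float:
    """Speed from a known real-world distance and the frames at which it was covered."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    elapsed_frames = end_frame - start_frame
    if elapsed_frames <= 0:
        raise ValueError("end_frame must come after start_frame")
    elapsed_s = elapsed_frames / fps      # time = frames / frames per second
    return distance_m / elapsed_s * 3.6   # convert m/s to km/h


# Assumed example: a vehicle covers 20 m between two reference points
# in 24 frames of a 30 fps video (0.8 s).
print(f"{speed_kmh(20.0, 100, 124, 30.0):.1f} km/h")
```

The real-world distance between the reference points is the part that requires calibration or a measured landmark; the rest is simple arithmetic.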

Other than catching speeders, AI-integrated speed estimation systems can collect data to make predictions about traffic. These predictions can support traffic management tasks like optimizing signal timings and resource allocation. Insights into traffic patterns and congestion causes can be used to plan new roads to reduce traffic congestion.

Speed estimation applications go beyond monitoring roads. The technique can also be handy for monitoring athletes' performance, helping autonomous vehicles understand the speed of objects moving around them, and detecting suspicious behavior. Anywhere a camera can be used to measure the speed of an object, computer vision-based speed estimation can be applied.

Vision-based speed estimation systems are replacing traditional sensor-based methods because of their enhanced accuracy, cost-effectiveness, and flexibility. Unlike systems that rely on expensive sensors like LiDAR, computer vision uses standard cameras to monitor and analyze speed in real time. Computer vision solutions for speed estimation can be seamlessly integrated with existing traffic infrastructure. Also, these systems can be built to perform a number of complex tasks like vehicle type identification and traffic pattern analysis to improve overall traffic flow and safety.

Now that we have a clear understanding of speed estimation and its applications, let’s take a closer look at how you can integrate speed estimation into your computer vision projects through code. We'll detect moving vehicles and estimate their speed using the YOLOv8 model.

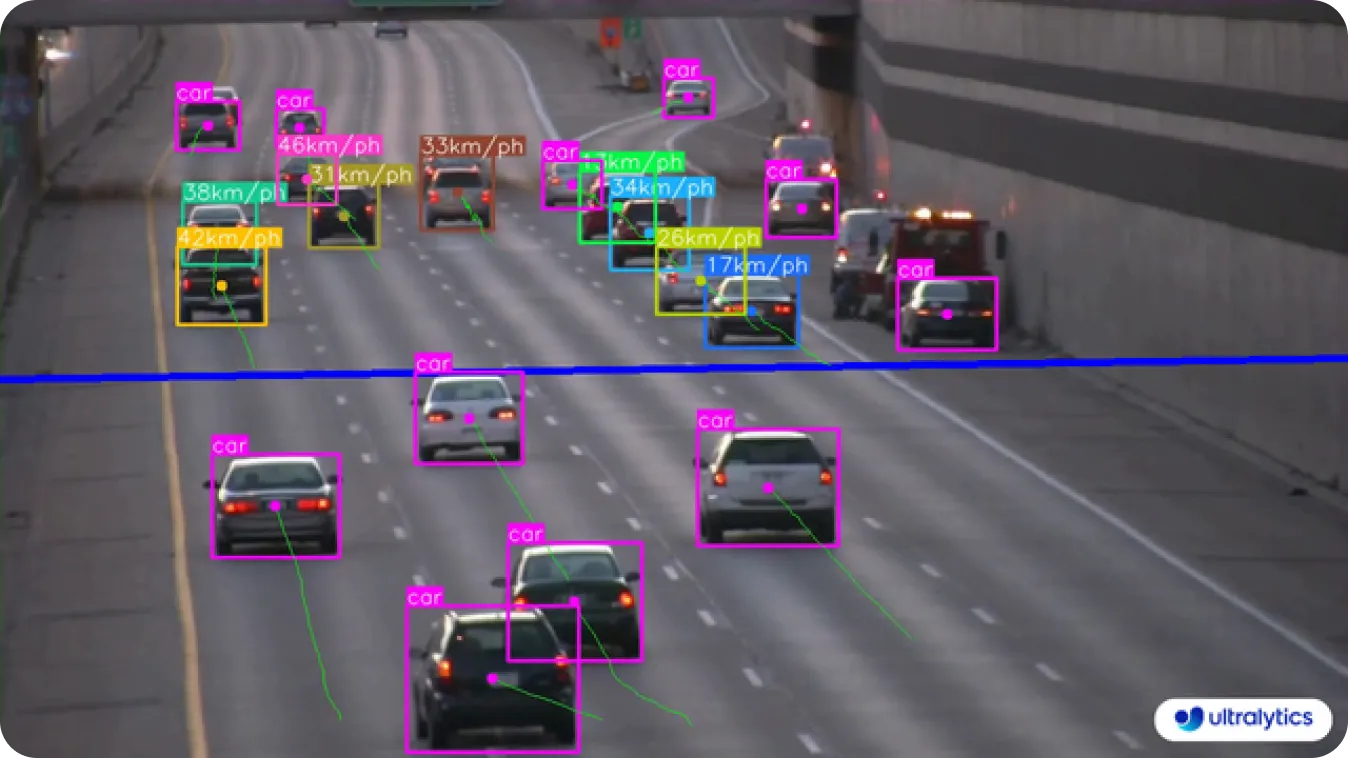

This example uses a video of cars on a road downloaded from the internet. You can use the same video or any relevant video. The YOLOv8 model identifies each vehicle's center and calculates its speed based on how quickly this center crosses a horizontal line in the video frame.
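To make the idea of a center crossing a horizontal line more concrete, here is a minimal sketch of how such a crossing could be detected from a sequence of center y-coordinates. The helper names `crossed_line` and `first_crossing` and the sample coordinates are hypothetical; this is not the library's internal implementation.

```python
from typing import Optional, Sequence


def crossed_line(prev_cy: float, curr_cy: float, line_y: float) -> bool:
    """True if the center moved from one side of the line y = line_y to the other."""
    moved_down = prev_cy < line_y <= curr_cy
    moved_up = curr_cy <= line_y < prev_cy
    return moved_down or moved_up


def first_crossing(center_ys: Sequence[float], line_y: float) -> Optional[int]:
    """Index of the first frame in which the tracked center has crossed the line."""
    for i in range(1, len(center_ys)):
        if crossed_line(center_ys[i - 1], center_ys[i], line_y):
            return i
    return None


# Assumed y-coordinates of one vehicle's center over four consecutive frames.
print(first_crossing([340, 352, 361, 370], line_y=360))  # 2
```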

Before we dive in, it’s important to note that, in this case, the distance calculation is approximate and based on Euclidean distance. Camera calibration is not factored in, so the estimated speed may not be accurate. The estimated speed can also vary depending on your GPU’s speed.
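The sketch below illustrates both caveats under stated assumptions. It computes the Euclidean distance between two center positions in pixels, so the result is in pixels per second rather than a real-world unit. It also shows one plausible reason hardware speed could matter: if the elapsed time were measured with the wall clock during processing rather than derived from the video's frame rate, slower hardware would report a lower speed for the same motion. The function `pixel_speed` and all numbers are hypothetical.

```python
import math


def pixel_speed(prev_center, curr_center, elapsed_s: float) -> float:
    """Uncalibrated speed in pixels per second between two center positions."""
    if elapsed_s <= 0:
        raise ValueError("elapsed_s must be positive")
    return math.dist(prev_center, curr_center) / elapsed_s


prev_c, curr_c = (640.0, 350.0), (643.0, 354.0)  # a 5-pixel move (3-4-5 triangle)

video_dt = 1 / 30  # time between frames according to an assumed 30 fps video
slow_dt = 0.1      # hypothetical wall-clock time per frame on slower hardware

print(pixel_speed(prev_c, curr_c, video_dt))  # same motion, timed by frame rate
print(pixel_speed(prev_c, curr_c, slow_dt))   # same motion, timed by processing speed
```

Converting pixels per second into km/h would need a meters-per-pixel factor, which is exactly what camera calibration provides.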

Step 1: We’ll start by installing the Ultralytics package. Open your command prompt or terminal and run the command shown below.

    pip install ultralytics

Take a look at our Ultralytics Installation guide for step-by-step instructions and best practices on the installation process. If you run into any issues while installing the required packages for YOLOv8, our Common Issues guide has solutions and helpful tips.

Step 2: Next, we’ll import the required libraries. The OpenCV library will help us handle video processing.

    import cv2

    from ultralytics import YOLO, solutions

Step 3: Then, we can load the YOLOv8 model and retrieve the names of the classes that the model can detect.

    model = YOLO("yolov8n.pt")
    names = model.model.names

Check out all the models we support to understand which model suits your project best.

Step 4: In this step, we’ll open the input video file using OpenCV’s VideoCapture class. We will also extract the video’s width, height, and frames per second (fps).

    cap = cv2.VideoCapture("path/to/video/file.mp4")
    assert cap.isOpened(), "Error reading video file"
    # Read the frame width, height, and frame rate as integers
    w, h, fps = (int(cap.get(x)) for x in (cv2.CAP_PROP_FRAME_WIDTH, cv2.CAP_PROP_FRAME_HEIGHT, cv2.CAP_PROP_FPS))

Step 5: Here, we will initialize the video writer to save our final results of speed estimation. The output video file will be saved as “speed_estimation.avi”.

    video_writer = cv2.VideoWriter("speed_estimation.avi", cv2.VideoWriter_fourcc(*"mp4v"), fps, (w, h))

Step 6: Next, we can define the line points for speed estimation. For our input video, this line will be placed horizontally in the middle of the frame. Feel free to play around with the values to place the line in the most suitable positions, depending on your input video.
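The line points used in this step, (0, 360) and (1280, 360), suggest a 1280x720 frame. If your video has a different resolution, a small helper along these lines could derive the midline from the width and height read in Step 4. The function name `horizontal_midline` is hypothetical and not part of the Ultralytics package.

```python
def horizontal_midline(width: int, height: int):
    """End points of a horizontal line across the vertical middle of the frame."""
    y = height // 2
    return [(0, y), (width, y)]


print(horizontal_midline(1280, 720))  # [(0, 360), (1280, 360)]
```

For a 1280x720 video, this reproduces the hardcoded values used in this example.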

    line_pts = [(0, 360), (1280, 360)]

Step 7: Now, we can initialize the speed estimation object using the defined line points and class names.

    speed_obj = solutions.SpeedEstimator(
        reg_pts=line_pts,
        names=names,
        view_img=True,
    )

Step 8: The core of the script processes the video frame by frame. We read each frame and detect and track objects. The speed of the tracked objects is estimated, and the annotated frame is written to the output video.

    while cap.isOpened():
        success, im0 = cap.read()
        if not success:
            print("Video frame is empty or video processing has been successfully completed.")
            break

        # Detect and track objects, keeping track IDs across frames
        tracks = model.track(im0, persist=True, show=False)

        # Estimate speeds, annotate the frame, and save it
        im0 = speed_obj.estimate_speed(im0, tracks)
        video_writer.write(im0)

Step 9: Finally, we release the video capture and writer objects and close any OpenCV windows.

    cap.release()
    video_writer.release()
    cv2.destroyAllWindows()

Step 10: Save your script. If you are working from your terminal or command prompt, run the script using the following command:

    python your_script_name.py

It’s also important to understand the challenges involved in implementing speed estimation using computer vision. Unfavorable weather conditions like rain, fog, or snow can cause problems for the system because they reduce the visibility of the road. Similarly, occlusions caused by other vehicles or objects can make it difficult for these systems to track a target vehicle and estimate its speed accurately. Poor lighting conditions that cause shadows or glare from the sun can further complicate speed estimation.

Another challenge concerns computational power. To estimate speed in real time, we have to process a lot of visual data from high-quality traffic cameras. Your solution may require expensive hardware to handle all this and ensure everything works quickly without delays.
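A back-of-the-envelope calculation gives a feel for the load. The sketch below computes how much time is available per frame and how many raw pixels arrive per second. The helper names and the 1920x1080 at 30 fps figures are assumptions for illustration only.

```python
def frame_budget_ms(fps: float) -> float:
    """Time available to process one frame, in milliseconds, to keep up with the stream."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return 1000.0 / fps


def raw_pixel_rate(width: int, height: int, fps: float) -> float:
    """Number of pixels per second delivered by the camera."""
    return width * height * fps


# Assumed camera: 1920x1080 at 30 fps.
print(f"{frame_budget_ms(30):.1f} ms per frame")
print(f"{raw_pixel_rate(1920, 1080, 30):,.0f} pixels per second")
```

If detection, tracking, and annotation together take longer than the per-frame budget, the system falls behind the live stream.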

Then, there is the issue of privacy. Data collected by these systems may include an individual's vehicle details like make, model, and license plate information, which are gathered without their consent. Some modern HD cameras can even capture images of the occupants inside the car. Such data collection can raise serious ethical and legal issues that need to be handled with the utmost care.

Using the Ultralytics YOLOv8 model for speed estimation provides a flexible and efficient solution for many uses. Although there are challenges, like maintaining accuracy in tough conditions and addressing privacy issues, the advantages are manifold. Computer vision-enabled speed estimation is more cost-effective, adaptable, and precise than older sensor-based methods. It’s useful in various sectors like transportation, sports, surveillance, and self-driving cars. With all these benefits and applications, it is likely to be a key part of future smart systems.

Interested in AI? Connect with our community! Explore our GitHub repository to learn more about how we are using AI to create innovative solutions in various industries like healthcare and agriculture. Collaborate, innovate, and learn with us! 🚀