Explore how Ultralytics YOLO is changing traffic incident management by enabling early detection, faster response, and safer road operations.

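Every day, minor road incidents subtly disrupt traffic flow, and those disruptions can quickly cascade. A disabled vehicle or debris on a highway, for example, can easily lead to long delays, unsafe traffic flow, and secondary crashes.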

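For first responders such as fire departments, this creates constant pressure. Every minute spent assessing an incident on scene increases exposure to moving traffic and puts road safety at risk.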

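Both public road safety and responder safety are critical in these situations. Transportation, public works, and emergency management systems that rely on manual monitoring can struggle to keep up during peak hours or in incidents involving hazardous materials.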

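Many traffic incident management (TIM) teams are now adopting computer vision to analyze road conditions and flag incidents early. Computer vision is a branch of artificial intelligence (AI) that enables machines to recognize and interpret visual data from cameras and video.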

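Vision systems can monitor road conditions, detect incidents, and provide real-time visual information. This early visibility helps emergency medical services (EMS), law enforcement, and traffic teams understand the scene and respond faster.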

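These capabilities are driven by trained vision models, such as Ultralytics YOLO models. By automatically extracting actionable insights from live video streams, these models reduce reliance on manual monitoring and support faster, better-informed decisions. The result is quicker incident awareness and more efficient coordination of the emergency response.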

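In this article, we'll explore how Vision AI is changing traffic incident management and how computer vision models such as Ultralytics YOLO can help responders detect and handle incidents faster. Let's get started!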

Here are some of the key challenges that traffic incident management teams face in the field:

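Most traffic incident management systems already consist of a network of devices deployed on highways and city roads. Traffic signal cameras, CCTV systems, and portable cameras mounted on poles, trailers, or emergency vehicles are increasingly common.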

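Computer vision integrates readily into these systems because it builds on existing camera infrastructure and processes video streams directly to extract actionable insights. Video from traffic cameras can be combined with road sensors, such as speed and volume detectors, to give a fuller picture of traffic conditions.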

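In particular, Ultralytics vision models can be used to process video streams. YOLO26 supports a range of core computer vision tasks that can help detect incidents, interpret road conditions, and provide actionable insights for traffic operations.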

Here is a brief overview of several vision tasks used to monitor and manage traffic incidents:

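Ultralytics YOLO models, such as YOLO26, are available out of the box as pretrained models. This means they have already been trained on large, widely used datasets such as COCO.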

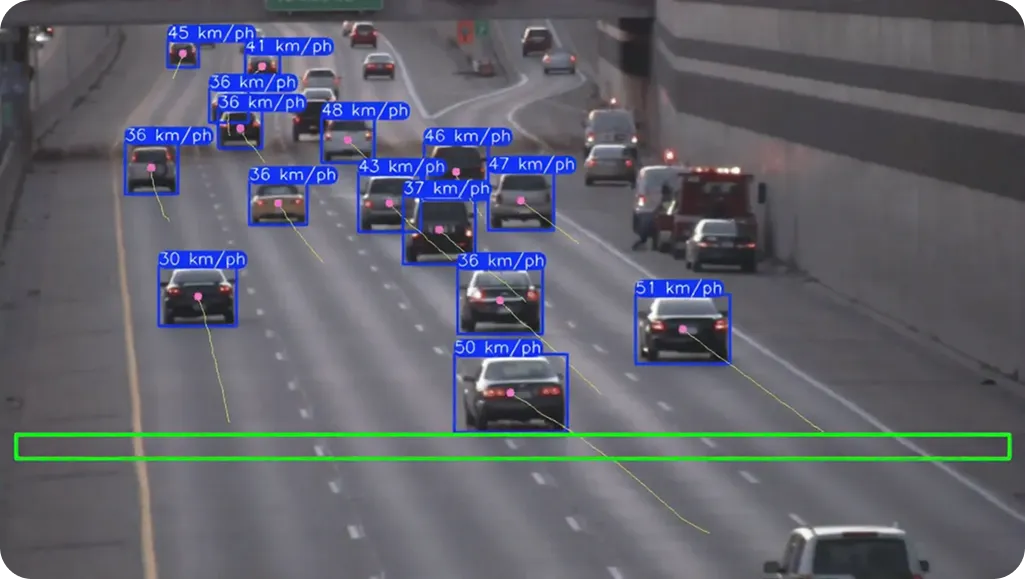

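Thanks to this pretraining, YOLO26 can be used right away to detect real-world objects such as cars, bicycles, pedestrians, motorcycles, and other everyday items. This provides a strong baseline for understanding road scenes, so teams can build applications on top of it, such as vehicle counting, traffic flow analysis, and speed estimation, without training a model from scratch.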
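As a rough illustration of vehicle counting on top of a COCO-pretrained detector, the sketch below tallies road users from a list of detections. The function names, the `(class_id, confidence)` input format, and the 0.5 confidence threshold are assumptions made for this example. `detections_from_result` assumes an Ultralytics detection result that exposes `boxes.cls` and `boxes.conf`. The class ids follow the standard 80-class COCO label order.

```python
from collections import Counter

# COCO class ids for common road users (standard 80-class COCO label order).
COCO_ROAD_CLASSES = {
    0: "person",
    1: "bicycle",
    2: "car",
    3: "motorcycle",
    5: "bus",
    7: "truck",
}


def detections_from_result(result):
    """Convert one detection result into (class_id, confidence) pairs.

    Assumption: `result` exposes `boxes.cls` and `boxes.conf` tensors,
    as Ultralytics detection results do.
    """
    class_ids = [int(c) for c in result.boxes.cls.tolist()]
    confidences = [float(c) for c in result.boxes.conf.tolist()]
    return list(zip(class_ids, confidences))


def count_road_users(detections, min_conf=0.5):
    """Count detections per road-user class, skipping low-confidence boxes."""
    counts = Counter()
    for class_id, conf in detections:
        name = COCO_ROAD_CLASSES.get(class_id)
        if name is not None and conf >= min_conf:
            counts[name] += 1
    return dict(counts)


if __name__ == "__main__":
    # Hypothetical detections for a single frame: (class_id, confidence).
    frame = [(2, 0.91), (2, 0.88), (7, 0.75), (0, 0.64), (2, 0.30), (9, 0.99)]
    print(count_road_users(frame))
```

Keeping the counting logic separate from the model call means the same function can be reused with any detector that produces class ids and confidences.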

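For more specific traffic incident management applications, these pretrained models can be custom-trained on labeled, domain-specific images and video to detect the particular objects a team cares about.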

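For example, a model can be trained to reliably recognize red fire trucks in road surveillance footage, helping traffic teams identify an active emergency response scene sooner. The resulting video insights can also be used for responder training, letting teams review real incident scenarios and be better prepared for similar events.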
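A custom-training run along these lines might look like the sketch below. It is only an outline under stated assumptions: the dataset folder, the `fire_truck` class name, the `images/train` and `images/val` layout, and the epoch and image-size values are all hypothetical. The checkpoint name is left to the caller rather than assumed. The training call uses the `ultralytics` Python package and is imported lazily, so the dataset helper can be used without it.

```python
def build_dataset_yaml(root, class_names):
    """Build the text of a YOLO-style dataset config.

    Assumption: images live under <root>/images/train and <root>/images/val,
    with matching label files, as in the usual Ultralytics dataset layout.
    """
    lines = [
        f"path: {root}",
        "train: images/train",
        "val: images/val",
        "names:",
    ]
    lines += [f"  {index}: {name}" for index, name in enumerate(class_names)]
    return "\n".join(lines) + "\n"


def fine_tune(data_yaml_path, weights, epochs=50, imgsz=640):
    """Fine-tune a pretrained checkpoint on a custom dataset.

    `weights` is the path or name of a pretrained checkpoint chosen by the
    caller. The epochs and imgsz defaults are illustrative, not recommended.
    """
    # Imported here so build_dataset_yaml works without ultralytics installed.
    from ultralytics import YOLO

    model = YOLO(weights)
    return model.train(data=data_yaml_path, epochs=epochs, imgsz=imgsz)


if __name__ == "__main__":
    # Hypothetical single-class dataset for fire truck detection.
    print(build_dataset_yaml("datasets/fire_trucks", ["fire_truck"]))
```

Writing the printed text to a `.yaml` file and passing its path to `fine_tune` would start training. How well the resulting model performs depends on how well the labeled footage covers different lighting, weather, and camera angles.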

Next, we'll look at examples of how computer vision is applied in real-world traffic incident management systems.

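One of the biggest challenges in traffic incident management is identifying incidents and road obstructions early enough for teams to handle them quickly and safely. In the past, detection relied mainly on driver reports, patrol vehicles, or staff manually watching camera feeds.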

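These methods are still in use today, but they can delay awareness or miss details, especially on busy highways or in low visibility. Vision AI improves on this by using models such as Ultralytics YOLO26 to monitor roads continuously in real time.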

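For example, YOLO26's object detection and tracking capabilities can be used to identify a vehicle stopped in a travel lane and to detect that traffic behind it is slowing or backing up.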

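When such abnormal activity is detected, the system can alert traffic management teams early, giving responders more time to plan traffic control, warn drivers, and coordinate an effective response. Earlier detection also supports quick clearance, eases congestion, and lowers the risk of secondary crashes.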
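One simple way to turn tracking output into a stopped-vehicle alert is to flag tracks that have barely moved over a recent window of frames. The sketch below assumes a tracker has already produced a mapping from track id to a list of per-frame box centers in pixels. The function name, the 30-frame window, and the 5-pixel threshold are hypothetical values for illustration. A real deployment would also need to check that the vehicle is inside a travel lane and to calibrate thresholds for each camera.

```python
from math import hypot


def find_stalled_tracks(track_history, window=30, max_move_px=5.0):
    """Return ids of tracks that stayed nearly still over the last `window` frames.

    track_history maps track_id -> list of (x, y) box centers, oldest first.
    A track counts as stalled when every center in the window lies within
    `max_move_px` pixels of the first center in that window.
    """
    stalled = []
    for track_id, centers in track_history.items():
        if len(centers) < window:
            continue  # Not enough history to decide yet.
        recent = centers[-window:]
        x0, y0 = recent[0]
        max_dist = max(hypot(x - x0, y - y0) for x, y in recent)
        if max_dist <= max_move_px:
            stalled.append(track_id)
    return sorted(stalled)


if __name__ == "__main__":
    # Hypothetical tracks: 1 is parked, 2 is moving, 3 was only just seen.
    history = {
        1: [(100.0, 200.0)] * 30,
        2: [(i * 10.0, 50.0) for i in range(30)],
        3: [(5.0, 5.0)] * 10,
    }
    print(find_stalled_tracks(history))
```

The same per-track history could also support a slowdown check, for example by comparing average displacement per frame across all tracks against a recent baseline.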

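Traffic incident management is not only about responding after an incident occurs. It is also about spotting road hazards early and addressing them before they turn into incidents.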

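With computer vision, government agencies such as the Federal Highway Administration (FHWA) and departments of transportation can continuously monitor road conditions and identify problems such as pavement damage, debris, and other hazards.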

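Using techniques such as instance segmentation, vision models like YOLO26 can precisely outline cracks, potholes, or damaged pavement areas in road imagery. This gives a clearer picture of the size and location of the damage, rather than just detecting that a problem exists.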
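To make "size and location" concrete, the sketch below computes the area and centroid of a damage outline using the shoelace formula. It assumes the segmentation model returns each outline as a list of `(x, y)` pixel coordinates (Ultralytics segmentation results expose polygons of this kind through `masks.xy`). The function name is hypothetical. The result is in pixels, so converting it to real-world units would require camera calibration, which is out of scope here.

```python
def polygon_area_and_centroid(points):
    """Return (area, (cx, cy)) for a simple polygon given as (x, y) vertices.

    Uses the shoelace formula. Units match the input coordinates, so pixel
    vertices give an area in square pixels.
    """
    n = len(points)
    if n < 3:
        raise ValueError("a polygon needs at least three vertices")

    twice_area = 0.0
    cx_sum = 0.0
    cy_sum = 0.0
    for i in range(n):
        x0, y0 = points[i]
        x1, y1 = points[(i + 1) % n]  # Wrap around to close the polygon.
        cross = x0 * y1 - x1 * y0
        twice_area += cross
        cx_sum += (x0 + x1) * cross
        cy_sum += (y0 + y1) * cross

    if twice_area == 0:
        raise ValueError("degenerate polygon with zero area")

    signed_area = twice_area / 2.0
    centroid = (cx_sum / (6.0 * signed_area), cy_sum / (6.0 * signed_area))
    return abs(signed_area), centroid


if __name__ == "__main__":
    # Hypothetical pothole outline: a 4 x 2 pixel rectangle.
    outline = [(0.0, 0.0), (4.0, 0.0), (4.0, 2.0), (0.0, 2.0)]
    print(polygon_area_and_centroid(outline))
```

Tracking these values for the same stretch of road over time would show whether a damaged area is growing, which can help prioritize maintenance.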

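Finding these problems early means acting earlier, whether by scheduling maintenance, adjusting traffic control, or warning drivers. This proactive approach improves road safety, lowers crash risk, and makes everyday driving better for everyone.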

Here are the main benefits of using Vision AI to support traffic incident management and road safety:

Despite these benefits, there are limitations to consider. Here are some factors to keep in mind:

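Traffic incident management works best when teams can spot problems early and keep track of road conditions in real time. Vision AI supports this by turning everyday traffic camera footage into useful insights that enable faster response and safer decisions. Used thoughtfully, it can make roads safer for drivers while reducing the day-to-day risk for road workers.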

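Want to bring Vision AI into your project? Join our active community and learn about Vision AI in manufacturing and computer vision in robotics. Explore our GitHub repository for more information, and check out our licensing options to get started!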
