Learn how cell segmentation works and how Vision AI improves microscopy analysis with deep learning, key metrics, datasets, and real-world uses.

Learn how cell segmentation works and how Vision AI improves microscopy analysis with deep learning, key metrics, datasets, and real-world uses.

Many breakthroughs in drug discovery, cancer research, or personalized medicine start with one key challenge: seeing cells clearly. Scientists depend on clear images to track cell behavior, evaluate drugs, and explore new therapies.

A single microscopy image can contain thousands of overlapping cells, making boundaries hard to see. Cell segmentation aims to solve this by clearly separating each cell for accurate analysis.

But cell segmentation isn’t always simple. A single study can produce thousands of detailed microscope images, far too many to review by hand. As datasets grow, scientists need faster and more reliable ways to separate and study cells.

In fact, many scientists are adopting computer vision, a branch of AI that enables machines to interpret and analyze visual information. For instance, models like Ultralytics YOLO11 that support instance segmentation can be trained to separate cells and even detect subcellular structures. This enables precise analysis in seconds, rather than hours, helping researchers scale their studies efficiently.

In this article, we’ll explore how cell segmentation works, how computer vision improves it, and where it’s applied in the real world. Let’s get started!

Traditionally, scientists segmented cells by hand, tracing them in microscopy images. This worked well for small projects but was slow, inconsistent, and error-prone. With thousands of overlapping cells in a single image, manual tracing quickly becomes overwhelming and a major bottleneck.

Computer vision provides a faster and more reliable option. It is a branch of AI powered by deep learning, where machines learn patterns from large sets of images. In cell research, this means they can recognize and separate individual cells with high accuracy.

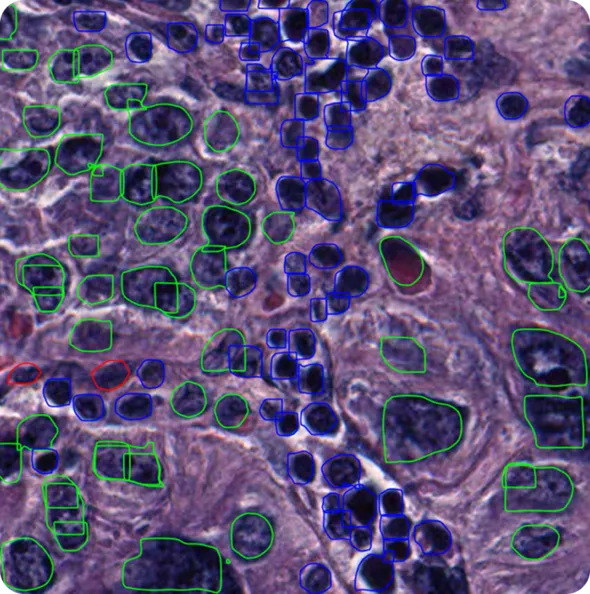

Specifically, Vision AI models like Ultralytics YOLO11 support tasks such as object detection and instance segmentation, and can be trained on custom datasets to analyze cells. Object detection makes it possible to find and label each cell in an image, even when many appear together.

Instance segmentation goes a step further by drawing precise boundaries around each cell, capturing their exact shapes. Integrating these Vision AI capabilities into cell segmentation pipelines allows researchers to automate complex workflows and process high-resolution microscopy images efficiently.

Cellular segmentation methods have changed a lot over the years. Early image segmentation techniques worked for simple images but struggled as datasets grew larger and cells became harder to distinguish.

To overcome these limits, more advanced approaches were developed, leading to today’s computer vision models that bring speed, accuracy, and scalability to microbiology and microscopy studies.

Next, let’s walk through how segmentation algorithms have evolved, from basic thresholding methods to cutting-edge deep learning models and hybrid pipelines.

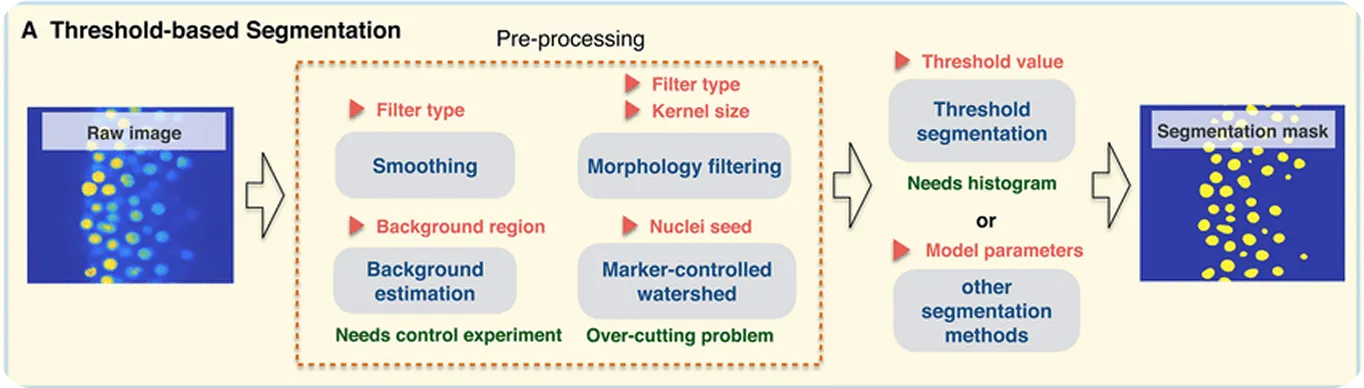

Before advances in computer vision, cell segmentation relied on traditional image processing techniques. These methods relied on manually defined rules and operations, such as detecting edges, separating the foreground from the background, or smoothing shapes. Unlike computer vision models, which can learn patterns directly from data, image processing depends on fixed algorithms applied the same way across all images.

One of the earliest approaches was thresholding, a method that separates cells from the background by comparing pixel brightness levels. This works well when there is a strong contrast between cells and their surroundings.

To refine the results, morphological operations such as dilation (expanding shapes) and erosion (shrinking shapes) are used to smooth edges, remove noise, or close small gaps. For cells that touch or overlap, a technique called watershed segmentation helps split them apart by drawing boundaries where the cells meet.

While these techniques struggle with complex cases like overlapping cells or noisy images, they are still useful for simpler applications and are an important part of the history of cell segmentation. Their limitations, however, pushed the field toward deep learning–based models, which deliver much higher accuracy for more challenging images.

As image processing techniques reached their limits, cell segmentation shifted toward learning-based approaches. Unlike rule-based methods, deep learning models identify patterns directly from data, making them more adaptable to overlapping cells, variable shapes, and different imaging modalities.

Convolutional neural networks (CNNs) are a class of deep learning architectures widely used in computer vision. They process images in layers: the early layers detect simple features like edges and textures, while deeper layers capture more complex shapes and structures. This layered approach makes CNNs effective for many visual tasks, from recognizing everyday objects through pattern recognition to analyzing biomedical images.

Models like YOLO11 are built on these deep learning principles. They extend CNN-based architectures with techniques for real-time object detection and instance segmentation, making it possible to quickly locate cells and outline their boundaries.

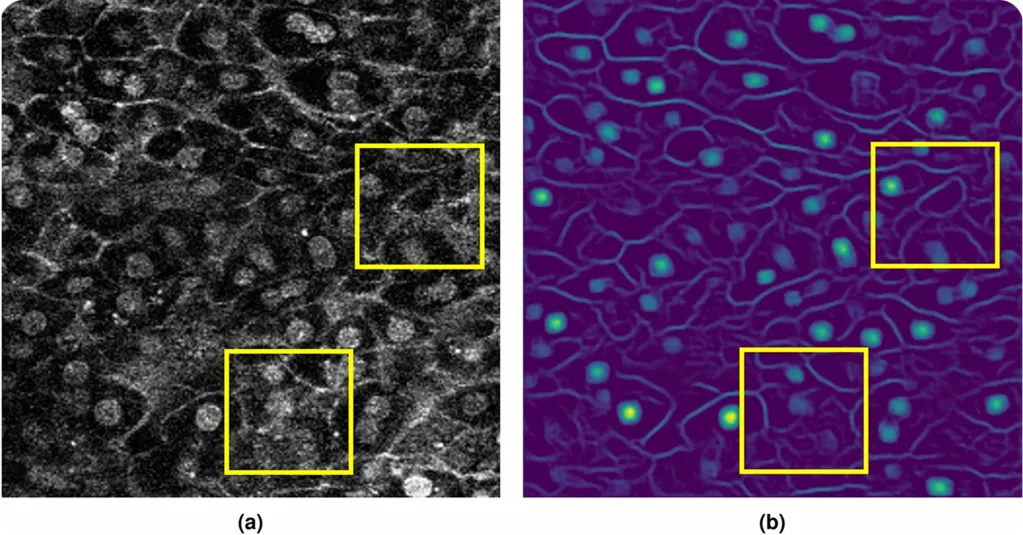

Hybrid pipelines improve cell segmentation by combining the strengths of multiple methods. These can include classical image processing and deep learning models, or even different deep learning-based models working together.

For example, one method might enhance or pre-process microscopy images to reduce noise and sharpen boundaries, while another model is used to detect and segment the cells. By splitting tasks this way, hybrid approaches improve accuracy, handle complex images more effectively, and make large-scale studies more reliable.

Another key factor to consider in computer vision-driven cell segmentation is image data. Computer vision models depend heavily on large, high-quality datasets to learn how to identify and separate cells accurately.

These datasets typically consist of microscopy images paired with annotations. Just as important as the raw images, data annotation, or labeling plays a crucial role in creating effective datasets, since it tells the model key information.

For example, if we want to train a model like YOLO11 to segment cancerous cells from microscopy images, we need labeled examples that show where each cell begins and ends. These labels act as a guide, teaching the model how to recognize cell morphology and boundaries. Annotations can be drawn by hand or created with semi-automated tools to save time.

The type of annotation also depends on the task. For object detection, bounding boxes are drawn around each cell. For instance segmentation, labels are more like detailed masks that trace the exact outline of each cell. Picking the right kind of annotation and training data helps the model learn what it needs for the job.

In general, building computer vision datasets can be difficult, especially when there are no existing image collections or when the field is very unique and specialized. But in the field of cellular research, there are technical difficulties that make data collection and annotation even more complex.

Microscopy images can look very different depending on the cell imaging method. For instance, fluorescence microscopy uses dyes that make parts of a cell glow. These fluorescence images highlight details that are otherwise hard to see.

Annotation is another major challenge. Labeling thousands of cells by hand is slow and requires domain expertise. Cells often overlap, change shape, or appear faint, making it easy for mistakes to creep in. Semi-automated tools can speed up the process, but human oversight is usually needed to ensure quality.

To ease the workload, researchers sometimes use simpler annotations such as location-of-interest markers that indicate where cells are, rather than drawing full outlines. While less precise, these markers still provide crucial guidance for training.

Beyond this, data sharing in biology adds further complications. Privacy concerns, patient consent, and differences in imaging equipment between labs can make it harder to build consistent, high-quality datasets.

Despite these obstacles, open-source datasets have made a big difference. Public collections shared through platforms like GitHub provide thousands of labeled images across many cell types and imaging methods, helping models generalize better to real-world scenarios.

Now that we have a better understanding of the data and methods used for segmenting cells with computer vision, let’s look at some of the real-world applications of cell segmentation and computer vision.

Single-cell analysis or studying individual cells instead of entire tissue samples helps scientists see details that are often missed at the broader level. This approach is widely used in cell biology, drug discovery, and diagnostics to understand how cells function and respond under different conditions.

For example, in cancer research, a tissue sample often contains a mix of cancerous cells, immune cells, and supporting (stromal) cells. Looking only at the tissue as a whole can hide important differences, such as how immune cells interact with tumors or how cancer cells near blood vessels behave.

Single-cell analysis allows researchers to separate these cell types and study them individually, which is crucial for understanding treatment responses and disease progression. Models like YOLO11, which support instance segmentation, can detect each cell and outline its exact shape, even in crowded or overlapping images. By turning complex microscopy images into structured data, YOLO11 enables researchers to analyze thousands of cells quickly and consistently.

Cells divide, move, and respond to their surroundings in different ways. Analyzing how living cells change over time helps scientists understand how they behave in health and disease.

With tools like phase contrast or high-resolution microscopy, researchers can follow these changes without adding dyes or labels. This keeps cells in their natural state and makes the results more reliable.

Tracking cells over time also helps capture details that might otherwise be missed. One cell might move faster than others, divide in an unusual way, or respond strongly to a stimulus. Recording these subtle differences provides a clearer picture of how cells behave in real conditions.

Computer vision models such as Ultralytics YOLOv8 make this process faster and more consistent. By detecting and tracking individual cells across image sequences, YOLOv8 can monitor cell movements, divisions, and interactions automatically, even when cells overlap or change shape.

Insights from computer vision models like YOLO11 used for whole-cell segmentation can make a greater impact when combined with bioinformatics (the use of computational methods to analyze biological data) and multi-omics (the integration of DNA, RNA, and protein information). Together, these methods move research beyond drawing cell boundaries and into understanding what those boundaries mean.

Instead of only identifying where cells are, scientists can study how they interact, how tissue structure changes in disease, and how small shifts in cell shape connect to molecular activity.

Consider a tumor sample: by linking the size, shape, or position of cancer cells with their molecular profiles, researchers can find correlations with gene mutations, gene expression, or abnormal protein activity. This turns static images into practical insights, helping track gene activity across tumors, map protein behavior in real time, and build reference atlases that connect structure with function.

Here are some of the key benefits of using computer vision for cell segmentation:

While computer vision brings many benefits to cell segmentation, it also has some limitations. Here are a few factors to keep in mind:

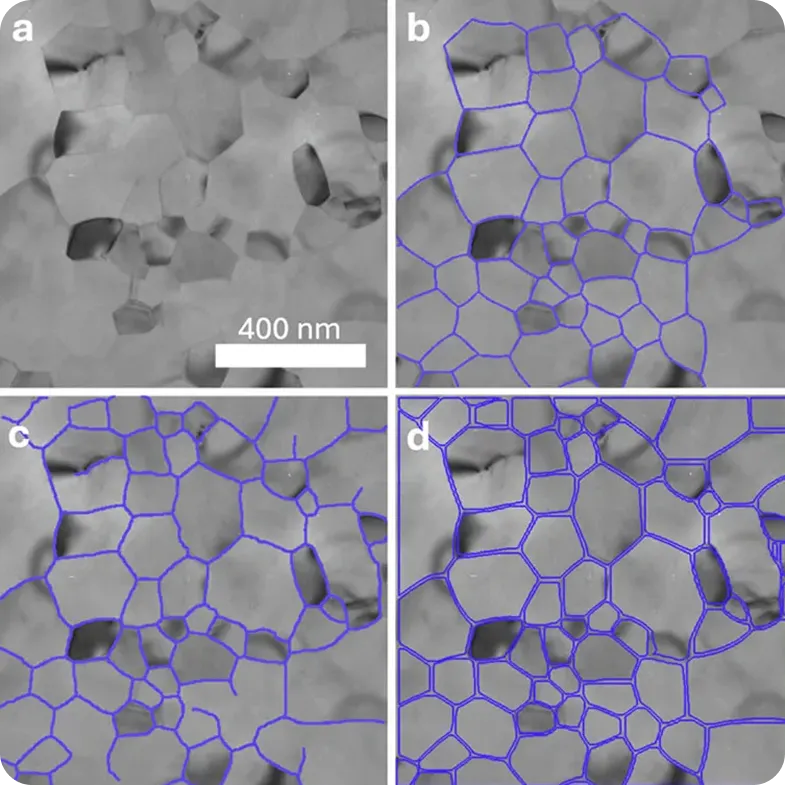

The next generation of cell segmentation will likely be defined by computer vision models that combine speed, accuracy, and scalability. Models such as U-Net have been highly influential, but they can be computationally demanding. With advances in computer vision, researchers are moving toward models that deliver both high accuracy and real-time performance.

For instance, state-of-the-art models like Ultralytics YOLOv8 can segment microscopy images much faster than traditional approaches while still producing sharp and accurate boundaries.

In a recent Transmission Electron Microscopy (TEM) study, performance metrics showed that YOLOv8 ran up to 43 times faster than U-Net. This kind of performance makes it possible to analyze large datasets in real time, which is increasingly important as imaging studies grow in size.

These improvements are already being put into practice. Platforms such as Theia Scientific’s Theiascope™ integrate Ultralytics YOLO models with Transmission Electron Microscopy (TEM), enabling nanoscale structures to be segmented consistently and at scale. The platform uses Ultralytics YOLO models for real-time detection and segmentation, automatically identifying structures in TEM images as they are captured and converting them into reliable, ready-to-analyze data.

Cell segmentation plays a key role in modern microscopy and biomedical research. It allows scientists to observe individual cells, track disease progression, and monitor how treatments affect cell behavior. Vision AI models like YOLO11 make this process faster and more precise. By handling large, complex images with ease, they ensure experiments are repeatable and scalable.

Join our community and visit our GitHub repository to learn more about AI. Explore our solutions pages to learn more about applications like AI in agriculture and computer vision in logistics. Check out our licensing options and start building with computer vision today!