Deploy Ultralytics YOLO models using the ExecuTorch integration

Explore how to export Ultralytics YOLO models like Ultralytics YOLO11 to ExecuTorch format for efficient, PyTorch-native deployment on edge and mobile devices.

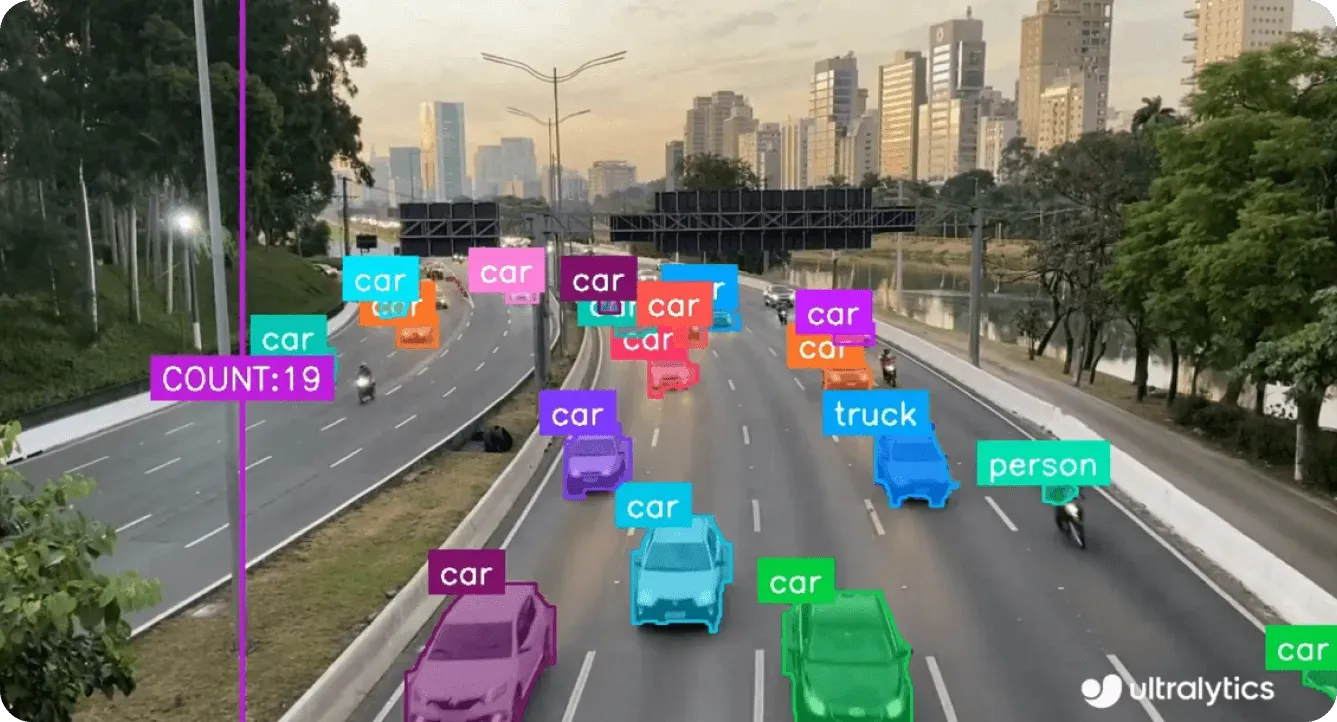

Certain computer vision applications, like automated quality inspection, autonomous drones, or smart security systems, perform best when Ultralytics YOLO models, like Ultralytics YOLO11, run close to the sensor capturing images. In other words, these models need to process data directly where it’s generated, on cameras, drones, or embedded systems, rather than sending it to the cloud.

This approach, known as edge AI, enables models to perform inference directly on the device where data is captured. By processing information locally instead of relying on remote servers, systems can achieve lower latency, enhanced data privacy, and greater reliability, even in environments with limited or no internet connectivity.

For example, a manufacturing camera that inspects thousands of products every minute, or a drone navigating complex environments, can’t afford the delays that come with cloud processing. Running YOLO11 directly on the device enables instant, on-device inference.

To make running Ultralytics YOLO models on the edge easier and more efficient, the new ExecuTorch integration supported by Ultralytics provides a streamlined way to export and deploy models directly to mobile and embedded devices. ExecuTorch is part of the PyTorch Edge ecosystem and provides an end-to-end solution for running AI models directly on mobile and edge hardware, including phones, wearables, embedded boards, and microcontrollers.

This integration makes it easy to take an Ultralytics YOLO model, such as YOLO11, from training to deployment on edge devices. By combining YOLO11’s vision capabilities with ExecuTorch’s lightweight runtime and PyTorch export pipeline, users can deploy models that run efficiently on edge hardware while preserving the accuracy and performance of PyTorch-based inference.

In this article, we’ll take a closer look at how the ExecuTorch integration works, why it’s a great fit for edge AI applications, and how you can start deploying Ultralytics YOLO models with ExecuTorch. Let’s get started!

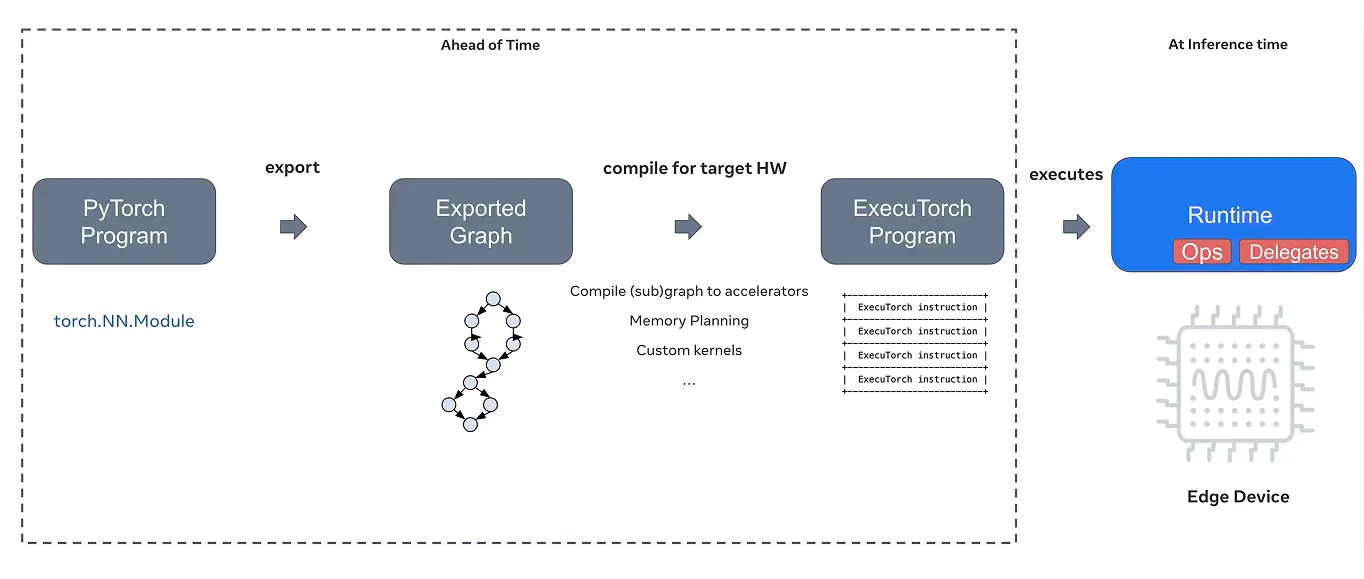

Typically, when you train a model in PyTorch, it runs on powerful servers or Graphics Processing Units (GPUs) in the cloud. However, deploying that same model to a mobile or embedded device, such as a smartphone, drone, or microcontroller, requires a specialized solution that can handle limited computing power, memory, and connectivity.

That’s exactly what ExecuTorch brings to the table. ExecuTorch is an end-to-end solution developed as part of the PyTorch Edge ecosystem that enables efficient on-device inference across mobile, embedded, and edge platforms. It extends PyTorch’s capabilities beyond the cloud, letting AI models run directly on local devices.

At its core, ExecuTorch provides a lightweight C++ runtime that allows PyTorch models to execute directly on the device. ExecuTorch uses the PyTorch ExecuTorch (.pte) model format, an optimized export designed for faster loading, a smaller memory footprint, and improved portability.

It supports XNNPACK as the default backend for efficient Central Processing Unit (CPU) inference and extends compatibility across a wide range of hardware backends, including Core ML, Metal, Vulkan, Qualcomm, MediaTek, Arm Ethos-U, OpenVINO, and others.

These backends enable optimized acceleration on mobile, embedded, and specialized edge devices. ExecuTorch also integrates with the PyTorch export pipeline, providing support for advanced features such as quantization and dynamic shape handling to improve performance and adaptability across different deployment environments.

Quantization reduces model size and boosts inference speed by converting high-precision values (such as 32-bit floats) into lower-precision ones (such as 8-bit integers), while dynamic shape handling lets models process variable input sizes efficiently. Both features are crucial for running AI models on resource-limited edge devices.
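To make the quantization idea concrete, here is a minimal, library-free sketch of affine 8-bit quantization. It only illustrates the general technique and is not ExecuTorch's actual quantizer; the function names “quantize” and “dequantize” and the sample weights are hypothetical.

```python
def quantize(values, num_bits=8):
    """Map floats to unsigned integers using an affine (scale + zero-point) scheme."""
    qmin, qmax = 0, 2**num_bits - 1
    padded = list(values) + [0.0]  # the representable range must include 0.0
    lo, hi = min(padded), max(padded)
    scale = (hi - lo) / (qmax - qmin) or 1.0  # fall back to 1.0 for all-zero input
    zero_point = round(qmin - lo / scale)  # integer that represents 0.0
    q = [min(qmax, max(qmin, round(v / scale) + zero_point)) for v in values]
    return q, scale, zero_point


def dequantize(q, scale, zero_point):
    """Recover approximate floats from the quantized integers."""
    return [(x - zero_point) * scale for x in q]


# Hypothetical weights, chosen only for illustration
weights = [-1.0, -0.25, 0.0, 0.5, 1.0]
q, scale, zero_point = quantize(weights)
restored = dequantize(q, scale, zero_point)
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print(f"quantized={q} scale={scale:.5f} max_error={max_error:.5f}")
```

Each value now fits in one byte instead of four, and the round-trip error stays within one quantization step, which is the trade-off quantized edge models rely on.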

Beyond its runtime, ExecuTorch also acts as a unified abstraction layer for multiple hardware backends. Simply put, it abstracts away hardware-specific details and manages how models interact with different processing units, including CPUs, GPUs, and Neural Processing Units (NPUs).

When a model is exported, ExecuTorch can be configured to target the most suitable backend for a given device. As a result, developers can deploy models efficiently across diverse hardware without writing custom device-specific code or maintaining separate conversion workflows.

Because of its modular, portable design and seamless PyTorch integration, ExecuTorch is a great option for deploying computer vision models like Ultralytics YOLO11 to mobile and embedded systems. It bridges the gap between model training and real-world deployment, making edge AI faster, more efficient, and easier to implement.

Before we look at how to export Ultralytics YOLO models to the ExecuTorch format, let’s explore what makes ExecuTorch a reliable option for deploying AI on edge.

Here’s a glimpse of some of its key features:

Now that we have a better understanding of what ExecuTorch offers, let’s walk through how to export Ultralytics YOLO models to the ExecuTorch format.

To get started, you’ll need to install the Ultralytics Python package using pip, Python’s package installer. You can do this by running “pip install ultralytics” in your terminal or command prompt.

If you’re working in a Jupyter Notebook or Google Colab environment, simply add an exclamation mark before the command, like “!pip install ultralytics”. Once installed, the Ultralytics package provides all the tools you need to train, test, and export computer vision models, including Ultralytics YOLO11.
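A quick way to confirm the installation worked is to ask Python for the installed package version. The sketch below uses only the standard library; the helper name “installed_version” is hypothetical and not part of the Ultralytics package.

```python
from importlib.metadata import PackageNotFoundError, version


def installed_version(package):
    """Return the installed version string of a package, or None if it is missing."""
    try:
        return version(package)
    except PackageNotFoundError:
        return None


ultralytics_version = installed_version("ultralytics")
if ultralytics_version is None:
    print("ultralytics is not installed; run: pip install ultralytics")
else:
    print(f"ultralytics {ultralytics_version} is installed")
```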

If you face any issues during installation or while exporting your model, the official Ultralytics documentation and Common Issues guide have detailed troubleshooting steps and best practices to help you get up and running smoothly.

After installing the Ultralytics package, you can load a variant of the YOLO11 model and export it to the ExecuTorch format. For example, you can use a pre-trained model such as “yolo11n.pt” and export it by calling the export function with the format set to “executorch”.

This creates a directory named “yolo11n_executorch_model”, which includes the optimized model file (.pte) and a separate metadata YAML file containing important details such as image size and class names.

Here’s the code to export your model:

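```python
from ultralytics import YOLO

# Load a pre-trained YOLO11 nano model
model = YOLO("yolo11n.pt")

# Export to ExecuTorch format (creates "yolo11n_executorch_model")
model.export(format="executorch")
```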
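After the export finishes, it can be useful to check what was written to the output directory. The following is a small sketch that assumes the directory name mentioned above; the helper “describe_export” is hypothetical, and because exact file names are not specified here, it simply searches by file extension.

```python
from pathlib import Path


def describe_export(export_dir):
    """List the .pte model files and YAML metadata files inside an export directory."""
    export_dir = Path(export_dir)
    if not export_dir.is_dir():
        return {"pte": [], "yaml": []}
    files = [p for p in export_dir.iterdir() if p.is_file()]
    return {
        "pte": sorted(p.name for p in files if p.suffix == ".pte"),
        "yaml": sorted(p.name for p in files if p.suffix in {".yaml", ".yml"}),
    }


# Assumes the export above was run from the current working directory
contents = describe_export("yolo11n_executorch_model")
print(contents)
```

If either list comes back empty, the export most likely did not complete or was written to a different location.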

Once exported, the model is ready to be deployed on edge and mobile devices using the ExecuTorch runtime. The exported .pte model file can be loaded into your application to run real-time, on-device inference without needing a cloud connection.

For example, the code snippet below shows how to load the exported model and run inference. Inference simply means using a trained model to make predictions on new data. Here, the model is tested on an image of a bus taken from a public URL.

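```python
from ultralytics import YOLO

# Load the exported model directory and run inference on a sample image
executorch_model = YOLO("yolo11n_executorch_model")
results = executorch_model.predict("https://ultralytics.com/images/bus.jpg", save=True)
```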

After running the code, you’ll find the output image with the detected objects saved in the “runs/detect/predict” folder.
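If you run prediction more than once, Ultralytics typically writes each run to a new numbered folder (for example, “runs/detect/predict2”) instead of overwriting earlier results. The sketch below is a hypothetical, standard-library-only helper, “latest_run_dir”, that picks the most recently modified prediction folder; the default paths are assumptions based on the folder named above.

```python
from pathlib import Path


def latest_run_dir(root="runs/detect", prefix="predict"):
    """Return the most recently modified '<prefix>*' folder under root, or None."""
    root = Path(root)
    if not root.is_dir():
        return None
    candidates = [p for p in root.iterdir() if p.is_dir() and p.name.startswith(prefix)]
    return max(candidates, key=lambda p: p.stat().st_mtime, default=None)


run_dir = latest_run_dir()
if run_dir is None:
    print("No prediction folder found yet")
else:
    image_suffixes = {".jpg", ".jpeg", ".png"}
    images = sorted(p.name for p in run_dir.iterdir() if p.suffix.lower() in image_suffixes)
    print(f"{run_dir}: {images}")
```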

While exploring the different export options supported by Ultralytics, you might wonder what makes the ExecuTorch integration unique. The key difference is how well it combines performance, simplicity, and flexibility, making it easy to deploy powerful AI models directly on mobile and edge devices.

Here’s a look at some of the key advantages of using the ExecuTorch integration:

Recently, Ultralytics was recognized as a PyTorch ExecuTorch success story, highlighting our early support for on-device inference and ongoing contributions to the PyTorch ecosystem. This recognition reflects a shared goal of making high-performance AI more accessible on mobile and edge platforms.

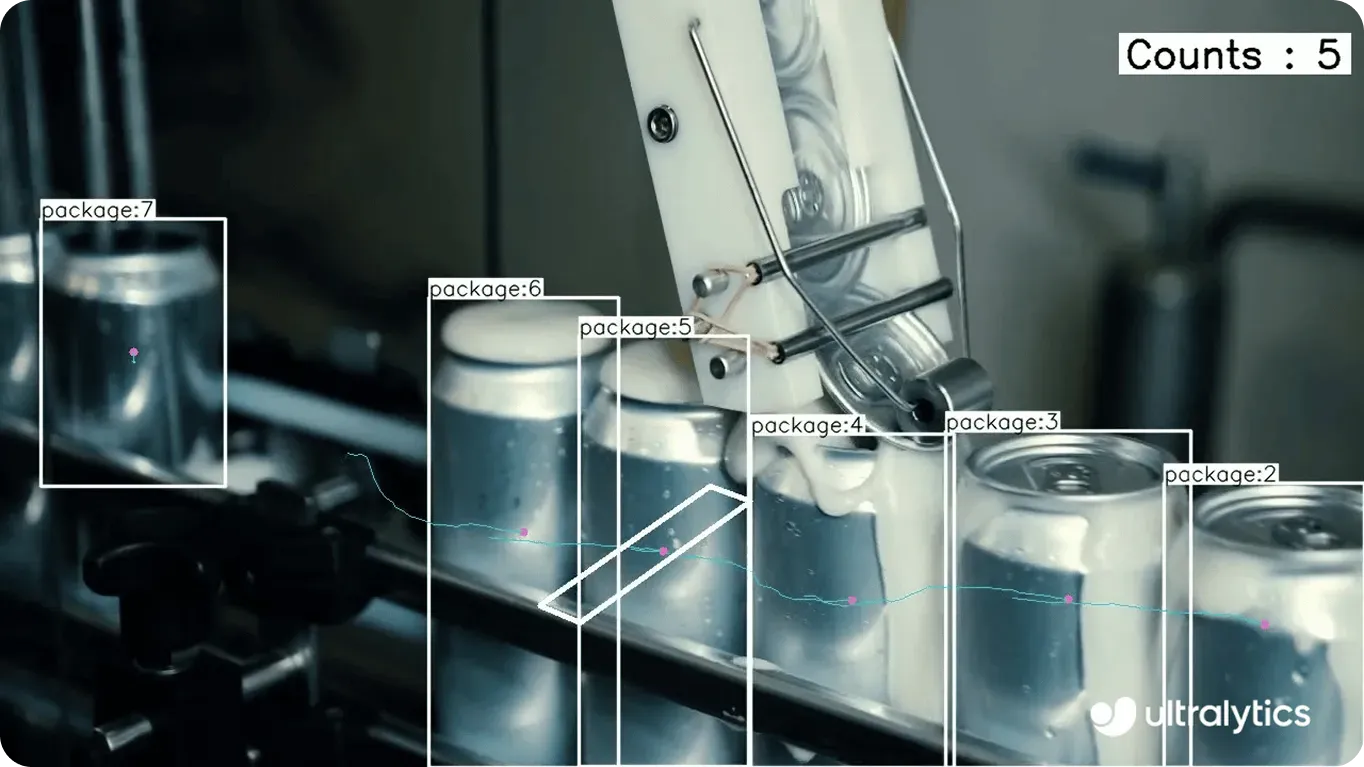

In action, this looks like real-world Vision AI solutions running efficiently on everything from smartphones to embedded systems. For instance, in manufacturing, edge devices play a crucial role in monitoring production lines and detecting defects in real time.

Instead of sending images or sensor data to the cloud for processing, which can introduce delays and depend on internet connectivity, the ExecuTorch integration enables YOLO11 models to run directly on local hardware. This means factories can detect quality issues instantly, reduce downtime, and maintain data privacy, all while operating with limited compute resources.

Here are a few other examples of how the ExecuTorch integration and Ultralytics YOLO models can be applied:

Exporting Ultralytics YOLO models to ExecuTorch format makes it easy to deploy computer vision models across many devices, including smartphones, tablets, and embedded systems such as the Raspberry Pi. This makes it possible to run optimized, on-device inference without relying on cloud connectivity, improving speed, privacy, and reliability.

Along with ExecuTorch, Ultralytics supports a wide range of integrations, including TensorRT, OpenVINO, CoreML, and more, giving developers the flexibility to run their models across platforms. As Vision AI adoption grows, these integrations simplify the deployment of intelligent systems built to perform efficiently in real-world conditions.

Curious about AI? Check out our GitHub repository, join our community, and explore our licensing options to kickstart your Vision AI project. Learn more about innovations like AI in retail and computer vision in logistics by visiting our solutions pages.