Exploring small object detection with Ultralytics YOLO11

Discover how Ultralytics YOLO11 delivers fast and accurate small object detection across real-world applications like surveillance and robotics.

Discover how Ultralytics YOLO11 delivers fast and accurate small object detection across real-world applications like surveillance and robotics.

Drones integrated with Vision AI can fly hundreds of meters above the ground, and still be expected to detect a person who appears as just a few pixels in their video feed. In fact, it’s a common challenge in applications like robotics, surveillance, and remote sensing, where systems must identify objects that are very small within an image.

But traditional object detection models can struggle to do so. Small objects in images and videos represent very limited visual information. Simply put, when a model looks at them, there isn’t much detail to learn from or recognize.

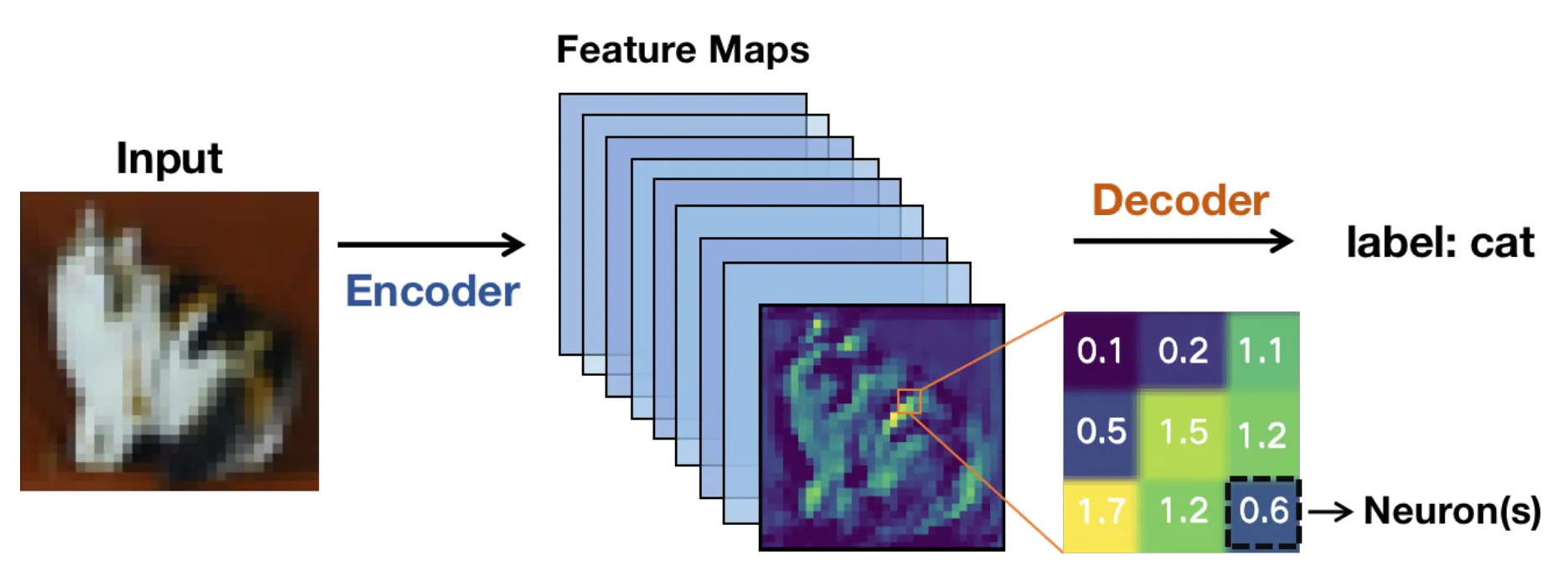

Under the hood, these models typically rely on a convolutional neural network (CNN)–based architecture. Images are passed through layers of the network and transformed into feature maps or simplified representations that highlight relevant patterns instead of raw pixels.

As the image moves deeper through the network, these feature maps become smaller. That makes computation faster, but it also means fine details can disappear.

For tiny objects, those details are crucial. Once those details disappear, a computer vision model may have difficulty detecting the object, which can lead to less accurate or inconsistent bounding boxes.

Real-time end-to-end computer vision systems make this even trickier. High-resolution images help preserve detail, but they slow down inference and require more GPU power. Lower resolutions run faster, but small objects become even harder to detect.

It becomes a constant balancing act between speed, accuracy, and hardware limits. Thanks to recent tech advancements, computer vision models like Ultralytics YOLO11 and the upcoming Ultralytics YOLO26 are designed to manage this trade-off more effectively.

In this article, we'll explore why small object detection is difficult and how YOLO11 can make it easier. Let’s get started!

Small object detection is a task in computer vision, a branch of AI, that focuses on identifying and locating objects that occupy a very small portion of an image. These objects are often represented within the image by a limited number of pixels, which are the smallest units of a digital image. This makes them harder to detect than larger and clearer targets (which often contain more pixels).

For instance, vehicles in aerial imagery, tools on a factory floor, or people captured by wide-angle surveillance cameras, can all appear as small objects within the image. Detecting them is important because they often carry critical information, and many real-world applications, such as surveillance, depend on these detections to function correctly.

When small objects are missed, system performance and decision-making can be affected. Unmanned aerial vehicle (UAV) monitoring is a good example, where missing a small moving object on the ground may impact navigation or tracking accuracy.

Earlier systems used handcrafted features and traditional computer vision methods, which had trouble in busy or varied scenes. Even today, with deep learning models performing far better, detecting small targets is still hard when they take up only a tiny part of the image.

Next, let’s look at some of the common challenges that appear across different real-world scenarios when detecting small objects.

Small objects contain very few pixels, which limits the amount of visual detail a model can learn during stages like feature extraction. As a result, patterns like edges, shapes, and textures are harder to detect, making small objects more likely to blend into the background.

As images move through convolutional layers of a neural network, visual information in the pixels is gradually compressed into feature maps. This helps the model stay efficient, but it also means fine details fade away.

For small targets, important cues can disappear before the detection network has a chance to act. When that happens, localization becomes less reliable, and bounding boxes may shift, overlap, or miss the target objects entirely.

Size-related challenges are also often brought up by occlusion. Occlusion occurs when objects, especially smaller ones, are partially hidden by other objects in the scene.

This reduces the visible area of a target, which limits the information available to the object detector. Even a small occlusion can confuse detection networks, especially when combined with low-resolution input. An interesting example of this can be seen in UAV datasets such as VisDrone, where pedestrians, bicycles, or vehicles may be partially blocked by buildings, trees, or other moving objects.

Similarly, scale variance introduces another layer of difficulty when the same object appears very small or relatively large depending on distance and camera position. Despite these hurdles, detection algorithms must recognize these small objects across different scales without losing accuracy.

Context also plays an important role in detection. For instance, large objects usually appear with clear surroundings that provide helpful visual cues. On the other hand, small targets often lack this contextual information, which makes pattern recognition harder.

Common evaluation metrics, such as Intersection over Union (IoU), measure how well a predicted bounding box overlaps with the ground-truth box. While IoU works well for larger objects, its behavior is quite different for small ones.

Small objects occupy only a few pixels, so even a minor shift in the predicted box can create a large proportional error and sharply lower the IoU score. This means small objects often fail to meet the standard IoU threshold used to count a prediction as correct, even when the object is visible in the image.

As a result, localization errors are more likely to be classified as false positives or false negatives. These limitations have prompted researchers to rethink how object detection systems evaluate and handle small, hard-to-detect targets.

As researchers worked to improve small object detection, it became clear that preserving and representing visual information across multiple scales is essential. This insight is echoed in recent arXiv research and in papers presented at venues such as IEEE International Conferences and the European Computer Vision Association (ECCV).

As images move deeper through a neural network, small objects can lose detail or disappear entirely, which is why modern computer vision models like YOLO11 place a strong focus on better feature extraction. Next, let’s walk through the core concepts behind feature maps and feature pyramid networks to understand them better.

When an input image, like a remote sensing image, enters a neural network, it is gradually transformed into feature maps. These are simplified representations of the image that highlight visual patterns like edges, shapes, and textures.

As the network goes deeper, these feature maps become smaller in spatial size. This reduction helps the model run efficiently and focus on high-level information. However, shrinking and deep feature maps also reduce spatial detail.

While large objects retain enough visual information for accurate detection, small targets may lose critical details after just a few network layers. When this happens, a model can struggle to recognize that a small object even exists. This is one of the main reasons small objects are missed in deep object detection models.

Feature pyramid networks, often called FPN, were introduced to address the loss of spatial detail, and they work as a supporting module that combines information from multiple layers so models can detect small objects more effectively. This process is also known as feature aggregation and feature fusion.

Shallow layers provide fine spatial details, while deeper layers add semantic context, allowing effective multi-scale feature learning. Unlike naive upsampling, which simply enlarges feature maps, FPN preserves meaningful information and improves small object detection.

Modern approaches build on this idea using adaptive feature fusion and context-aware designs to further enhance the detection of small targets. In other words, FPN helps models see both the big picture and the tiny details at the same time. This optimization is essential when objects are small.

Here is a glimpse of how object detection models have evolved and advanced over time to detect objects of different sizes better, including very small ones:

Now that we have a better understanding of how small object detection works, let’s look at a couple of real-world applications where YOLO11 can be applied.

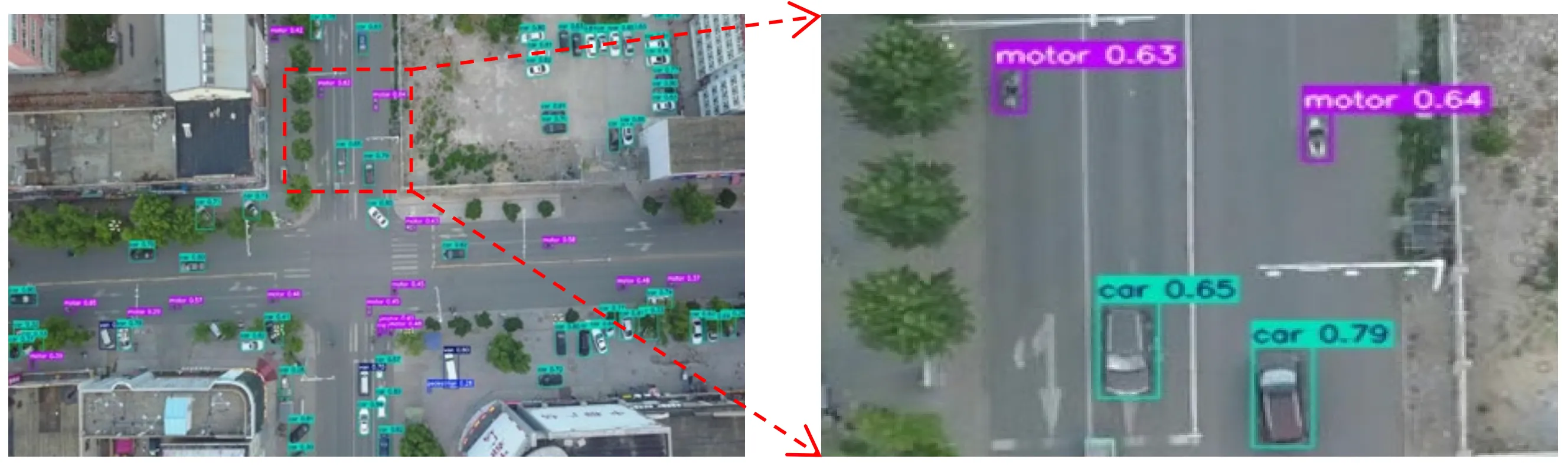

Picture a drone flying high above a busy city street. From that height, cars, bicycles, and even people shrink into only a few pixels on a screen.

UAV and aerial imaging modules often capture scenes like this, where objects of interest are tiny and surrounded by cluttered backgrounds, which makes them challenging for computer vision models to detect.

In these types of scenarios, YOLO11 can be an ideal model choice. For instance, a drone equipped with a model like YOLO11 could monitor traffic in real time, detecting vehicles, cyclists, and pedestrians as they move through the scene, even when each object only takes up a small portion of the image. This enables faster decision-making and more accurate insights in applications such as traffic management, public safety, or urban planning.

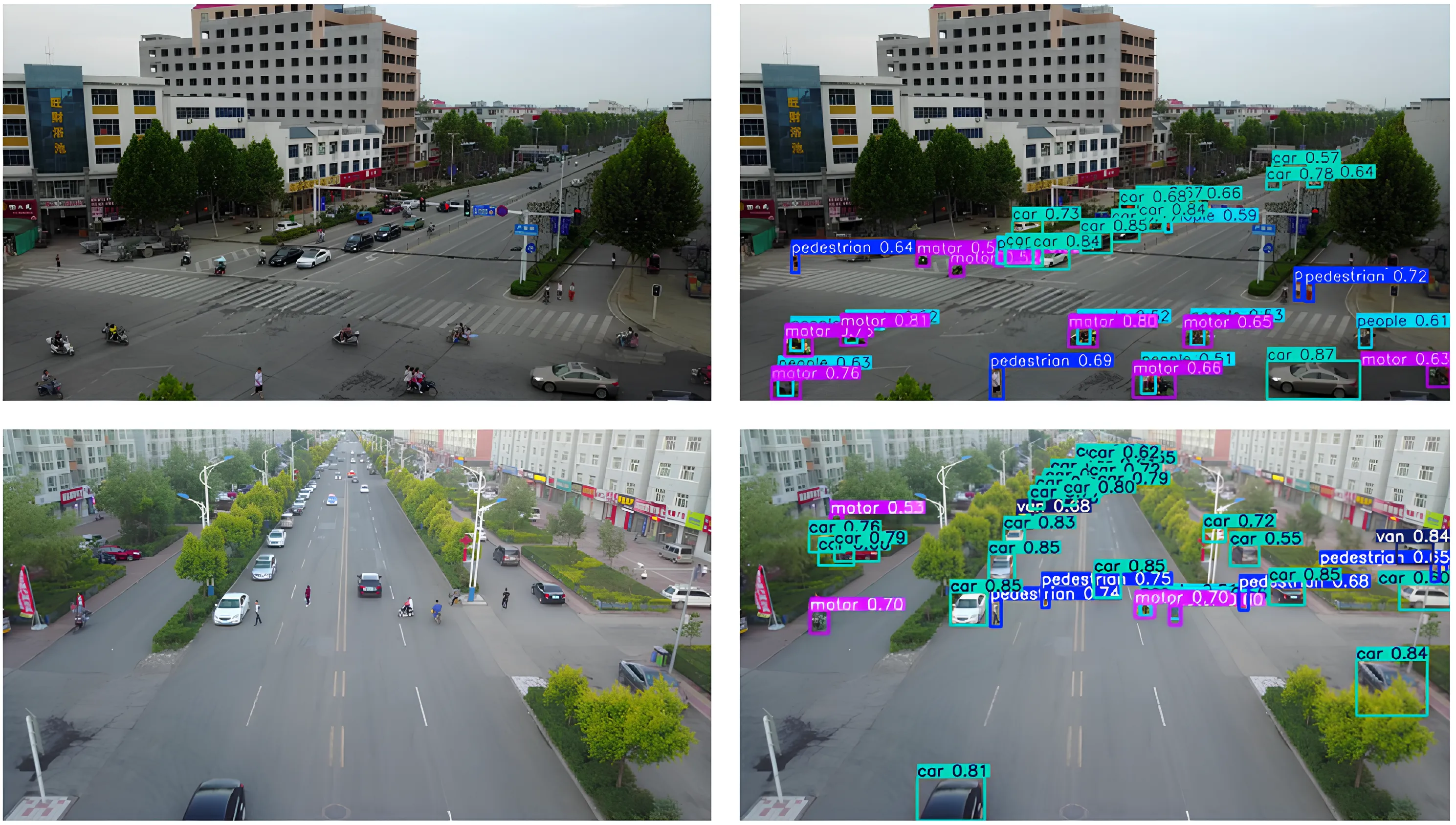

Robots are often used in environments where accuracy and timing are critical. In settings like warehouses, factories, and farms, a robot may need to recognize very small objects, such as a part on an assembly line, a label on a package, or a small plant bud in a field, and respond quickly.

Detecting objects of this size can be complicated, especially when they appear as only a few pixels in the camera feed or are partially occluded by other objects. Missing these small details can slow down automation or affect the robot’s ability to complete a task.

YOLO11 can make a difference in these situations. Its improved feature extraction and fast inference enable robots to detect small objects in real time and take action immediately.

YOLO11 also supports instance segmentation, which can help robots understand object boundaries and grasp points more precisely, rather than only locating general bounding boxes. For example, a robotic arm integrated with YOLO11 could spot small components on a conveyor belt, segment their exact shape, and pick them up before they move out of reach, helping the system stay efficient and reliable.

With so many computer vision models available today, you might be wondering what makes Ultralytics YOLO11 stand out.

Here are a few reasons why Ultralytics YOLO11 is a great option for applications where small objects need to be detected:

In addition to using a model such as YOLO11, the way you prepare your annotations, the overall dataset, and the model training procedure can make a significant difference in detection performance.

Here’s a quick overview of what to focus on:

Small object detection is difficult because small targets lose detail as images move through a computer vision model. YOLO11 improves how these details are preserved, making small object detection more reliable without sacrificing real-time performance. This balance allows YOLO11 to support accurate and efficient detection in real-world applications.

Join our growing community! Explore our GitHub repository to learn more about AI. Discover innovations like computer vision in retail and AI in the automotive industry by visiting our solution pages. To start building with computer vision today, check out our licensing options.