Exploring why Ultralytics YOLO26 is easier to ship to production!

See how Ultralytics YOLO26 bridges research and production with an edge-first design that simplifies deployment and integration.


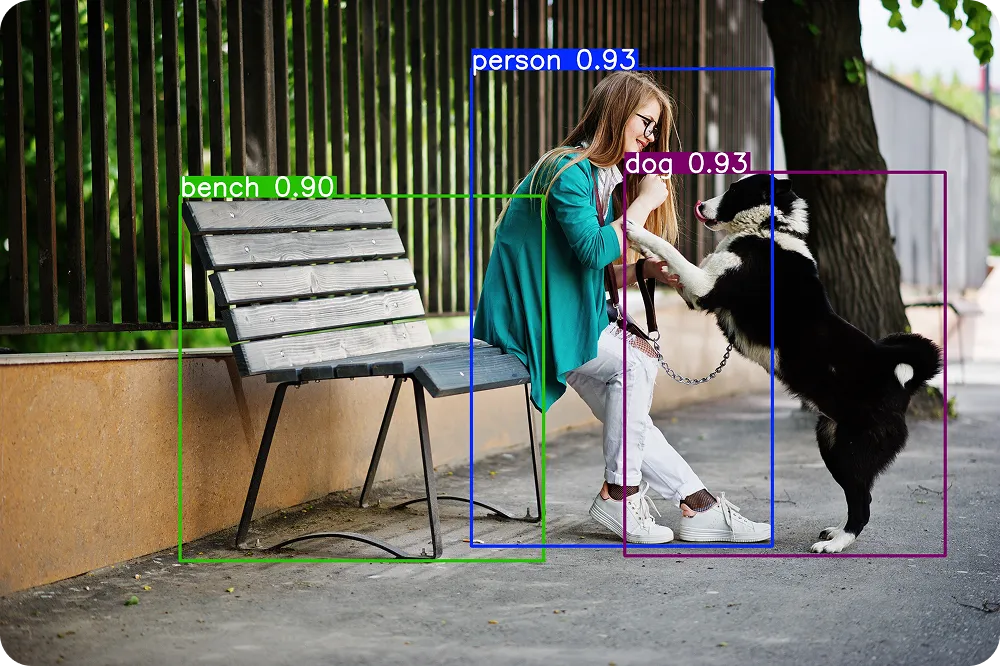

Ultralytics YOLO26, our latest computer vision model, marks a step forward in making real-time computer vision solutions easier to deploy. In other words, it is designed to move more smoothly from experimentation into systems that run continuously on real hardware.

Computer vision is now used in many real-world applications, including manufacturing, robotics, retail, and infrastructure. As these systems move from testing into everyday use, focus is shifting from individual model performance to how well the model fits into a larger software system. Factors like reliability, efficiency, and ease of integration are just as important as accuracy.

This shift has important implications for how computer vision models are designed and evaluated. Success in production depends not only on what a model can detect, but also on how easily it can be integrated, deployed, and maintained over time.

YOLO26 was built with these practical needs in mind. By focusing on end-to-end inference, edge-first performance, and simpler integration, it reduces complexity across the deployment process.

In this article, we’ll explore how Ultralytics YOLO26 helps bridge the gap between research and production, and why its features make it more straightforward to ship real-time computer vision systems into real-world applications. Let's get started!

As computer vision becomes more widely used, many teams are moving beyond research and starting to deploy models in real applications. This next step toward production often highlights challenges that weren't visible during experimentation.

In research settings, models are usually tested in controlled environments using fixed datasets. These tests are useful for measuring accuracy, but they don't fully reflect how a model will behave once it is deployed. In production, computer vision systems have to process live data, run continuously, and operate on real hardware alongside other software.

Once a model is part of a production system, factors beyond accuracy become more important. Inference pipelines may include additional steps, performance can vary across devices, and systems need to behave consistently over time. These practical considerations affect how easily a model can be integrated and maintained as applications scale.
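One way to make "performance can vary across devices" concrete is to time the same inference call on each target device and compare latency percentiles rather than a single average. The sketch below is a minimal, generic harness and not part of the Ultralytics package: `measure_latency` and `fake_infer` are hypothetical names, and the placeholder workload stands in for a real model call.

```python
import math
import statistics
import time


def measure_latency(infer, inputs, warmup=3):
    """Time `infer` on each input and return latency statistics in milliseconds.

    `infer` is any callable that takes one input (hypothetical stand-in for a
    model's predict call). The first `warmup` inputs are run untimed so that
    one-off setup costs do not skew the numbers.
    """
    if not inputs:
        raise ValueError("inputs must not be empty")

    for x in inputs[:warmup]:
        infer(x)

    timings_ms = []
    for x in inputs:
        start = time.perf_counter()
        infer(x)
        timings_ms.append((time.perf_counter() - start) * 1000.0)

    timings_ms.sort()
    # Nearest-rank 95th percentile: tail latency matters for continuous operation.
    p95_index = max(0, math.ceil(0.95 * len(timings_ms)) - 1)
    return {
        "mean_ms": statistics.fmean(timings_ms),
        "p50_ms": statistics.median(timings_ms),
        "p95_ms": timings_ms[p95_index],
        "max_ms": timings_ms[-1],
    }


def fake_infer(frame):
    """Placeholder workload; replace with a real inference call on the device."""
    return sum(range(1000))


stats = measure_latency(fake_infer, list(range(20)))
print(stats)
```

Running the same harness on a development machine and on the deployment hardware gives a like-for-like comparison, and a large gap between the median and the 95th percentile is an early sign that behavior will not be consistent over time.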

Due to these factors, moving from research to production is often less about improving model results and more about simplifying deployment and operation. Models that are easier to integrate, run efficiently on target hardware, and behave predictably tend to move into production more smoothly.

Ultralytics YOLO26 was built with this transition in mind. By reducing complexity across the deployment process, it helps teams move computer vision models from experimentation into real-world production more efficiently.

One of the key reasons Ultralytics YOLO26 is more practical to deploy is its end-to-end inference design. Simply put, this means the model is designed to produce final predictions directly, without relying on additional post-processing steps outside the model itself.

In many traditional computer vision systems, inference doesn't stop when the model finishes running. Instead, the model outputs a large number of intermediate predictions that have to be filtered and refined before they can be used.

These extra steps are often handled by a separate post-processing stage called Non-Maximum Suppression (NMS), which adds complexity to the overall system. In production environments, this complexity can be an issue.

Post-processing steps can increase latency, behave differently across hardware platforms, and require additional integration work. They also introduce more components that need to be tested, maintained, and kept consistent as systems scale.
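To show what this external step involves, here is a minimal sketch of greedy NMS in plain Python. It is a generic textbook version, not code taken from any Ultralytics model; the `(x1, y1, x2, y2)` box format, the 0.5 IoU threshold, and the sample boxes are assumptions chosen for illustration.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def nms(boxes, scores, iou_threshold=0.5):
    """Greedy NMS: keep the highest-scoring box, drop boxes that overlap it."""
    # Visit candidates from most to least confident.
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    for i in order:
        # Keep a box only if it does not overlap any already-kept box too much.
        if all(iou(boxes[i], boxes[k]) <= iou_threshold for k in keep):
            keep.append(i)
    return keep


# Two near-duplicate detections of one object, plus one separate object.
boxes = [(10, 10, 110, 110), (12, 12, 112, 112), (200, 200, 300, 300)]
scores = [0.9, 0.8, 0.7]
kept = nms(boxes, scores)
print(kept)  # indices of the boxes that survive
```

Even in this small form, the step brings its own threshold to tune and its own logic to port and test on every runtime the model is deployed to.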

YOLO26 takes a different approach. By resolving duplicate predictions and producing final outputs within the model, it reduces the number of steps required in the inference pipeline. This makes deployment simpler, since there is less external logic to manage and fewer opportunities for inconsistencies between environments.

For teams deploying vision systems, this end-to-end, NMS-free design helps streamline integration. The model behaves more predictably once deployed, and exported models, meaning versions prepared to run outside the training environment on target hardware, are more self-contained.

As a result, what is tested during development more closely matches what runs in production. This makes Ultralytics YOLO26 easier to integrate into real software systems and simpler to ship at scale.

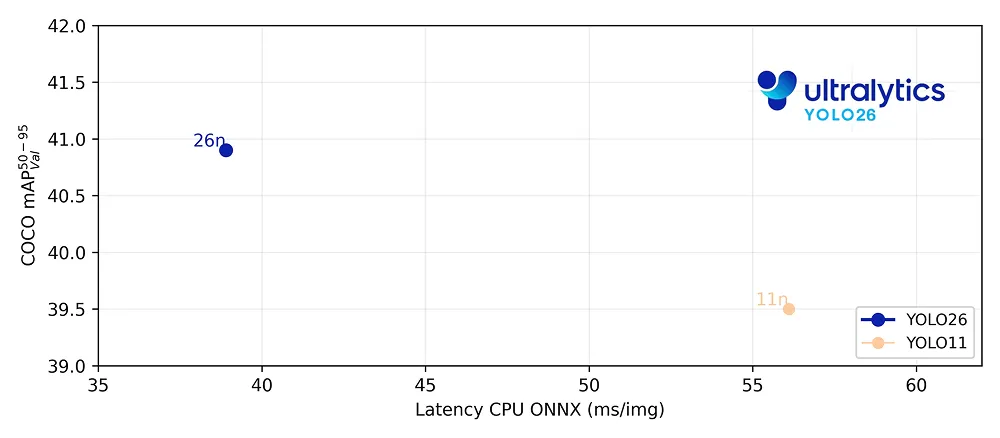

Beyond end-to-end inference, Ultralytics YOLO26 includes a set of performance and training choices designed to make production deployment more predictable.

Here are some of the key features that make Ultralytics YOLO26 simpler to ship and operate in production:

Overall, these innovations help reduce the risk and complexity of deploying computer vision systems in production. By combining edge-first performance with more stable training and predictable model behavior, Ultralytics YOLO26 makes it easier for teams to move from development to real-world deployment with confidence.

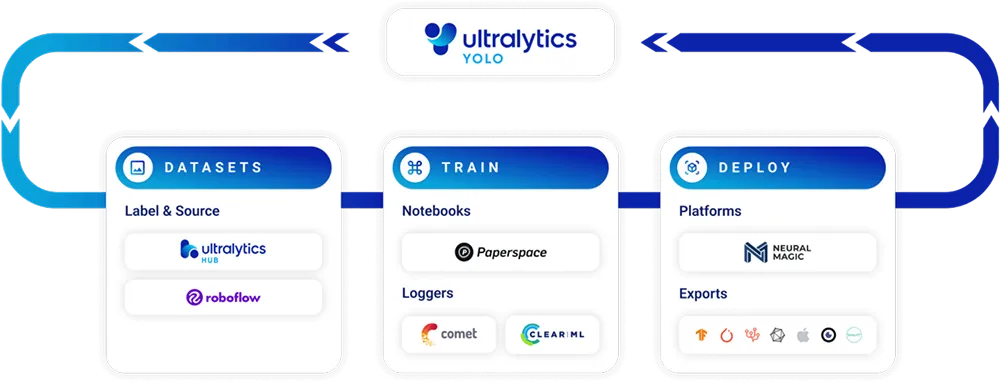

Deploying a computer vision model is rarely just about the model itself. In production, teams need to train models, run inference, monitor performance, and export models into formats that work across different platforms and hardware. Each additional tool or custom script in this pipeline increases complexity and the risk of failure.

The Ultralytics package is designed to reduce that complexity by bringing these steps into a single, consistent workflow. With one library, teams can train models like YOLO26, run predictions, validate results, and export models for deployment without switching tools or rewriting integration code.

It also supports a wide range of integrations across the full lifecycle, from training and evaluation to export and deployment on different hardware targets. This unified approach makes a difference in production environments.

The same commands and interfaces used during experimentation carry through to deployment, which reduces handoff friction between research, engineering, and operations teams. Exporting models becomes more predictable as well, since YOLO26 models can be converted directly into formats such as ONNX, TensorRT, CoreML, OpenVINO, and others that are commonly used in production systems.
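As a rough sketch of what that single workflow can look like with the Ultralytics Python API, the function below trains, validates, predicts, and exports with one model object. The weight file name `yolo26n.pt`, the `coco8.yaml` sample dataset, the sample image URL, and the short three-epoch run are assumptions for illustration, and `run_lifecycle` is a hypothetical helper name rather than part of the package.

```python
# Format strings passed to model.export(format=...) for the targets named above.
EXPORT_FORMATS = {
    "ONNX": "onnx",
    "TensorRT": "engine",
    "CoreML": "coreml",
    "OpenVINO": "openvino",
}


def run_lifecycle(
    weights="yolo26n.pt",  # assumed weight file name
    data="coco8.yaml",  # small sample dataset config
    source="https://ultralytics.com/images/bus.jpg",  # sample image
    export_to="ONNX",
):
    """Train, validate, predict, and export with one model object."""
    # Imported lazily so this module loads even where ultralytics is not installed.
    from ultralytics import YOLO

    model = YOLO(weights)
    model.train(data=data, epochs=3, imgsz=640)  # short run, for illustration only
    metrics = model.val()  # evaluate on the dataset's validation split
    results = model.predict(source)  # run inference on an image
    exported_path = model.export(format=EXPORT_FORMATS[export_to])
    return metrics, results, exported_path


if __name__ == "__main__":
    _, _, path = run_lifecycle()
    print(f"Exported model written to: {path}")
```

Some targets have their own requirements. TensorRT export, for example, needs an NVIDIA GPU environment, so the export format is usually chosen to match the deployment hardware.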

By minimizing glue code and custom integration work, the Ultralytics package helps teams focus on building reliable applications rather than maintaining complex pipelines. This makes it easier to scale deployments, update models over time, and keep behavior consistent across development and production environments.

Next, let’s take a look at how Ultralytics YOLO26 can be used across real-world applications that require reliable, production-ready computer vision capabilities.

Robotic systems depend on fast, dependable perception to operate safely and effectively. Whether it’s an autonomous mobile robot navigating a warehouse or a robotic arm handling objects on a line, vision models have to deliver consistent results with minimal latency.

Ultralytics YOLO26 can detect obstacles, recognize objects, and monitor human presence directly on robotic hardware. Its end-to-end inference design simplifies integration into robotic control software, making it easier to deploy vision capabilities that run continuously in real-world environments.

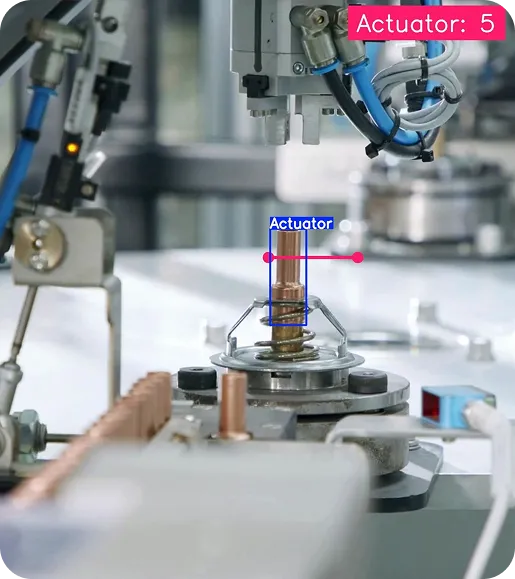

On factory floors, computer vision is commonly used to monitor equipment, inspect products, and ensure processes stay within safe operating limits. YOLO26 can be deployed on local industrial hardware to detect defects, verify assembly steps, or track the movement of mechanical components in real time.

Its ability to run efficiently on edge devices makes it well-suited for production lines where systems must operate continuously, with low latency and minimal infrastructure overhead.

Drones and remote systems often operate with limited power and unreliable connectivity. YOLO26 can process visual data directly on the device, enabling tasks like inspection, surveying, or monitoring while in flight. By analyzing images locally, systems can respond in real time and reduce the need to transmit large amounts of data back to a central location.

Consider a city rolling out cameras across intersections, public parks, and transit hubs. Each location may use different hardware and operate under different conditions, but the vision system still needs to behave consistently.

Ultralytics YOLO26 can help analyze these video streams for tasks such as traffic monitoring, pedestrian detection, or public space analysis. Its predictable deployment behavior and support for multiple hardware platforms make it easier to roll out, update, and maintain vision systems across large, distributed urban environments.

For many organizations, the biggest challenge with Vision AI isn't building a model that works in a demo. It is turning that work into a system that runs reliably in production.

Deployment often requires significant engineering effort, ongoing maintenance, and coordination across teams, which can slow projects down or limit their impact. When models are straightforward to ship, this changes the business equation.

Faster deployment reduces time to value. Simpler integration lowers engineering and operational costs. More predictable behavior across environments reduces risk and makes long-term planning practical.

Ultralytics YOLO26 is designed with these factors in mind. By simplifying deployment and supporting consistent behavior in production, it helps organizations move Vision AI from experimentation into everyday use. For business leaders, this makes computer vision a more practical and reliable investment, rather than a high-risk research effort.

Ultralytics YOLO26 is built to close the gap between research and production by making real-time computer vision easier to deploy and maintain. Its end-to-end design and edge-first performance reduce the complexity that often slows Vision AI projects down. This allows organizations to move faster and see value sooner.

Join our community and explore our GitHub repository to learn more about AI. Check out our solution pages to read about AI in retail and computer vision in agriculture. Discover our licensing options and start building with computer vision today!