How Ultralytics YOLO26 trains smarter with ProgLoss, STAL, and MuSGD

Learn how Ultralytics YOLO26 trains more reliably using Progressive Loss Balancing, Small-Target-Aware Label Assignment, and the MuSGD optimizer.

.webp)

Learn how Ultralytics YOLO26 trains more reliably using Progressive Loss Balancing, Small-Target-Aware Label Assignment, and the MuSGD optimizer.

.webp)

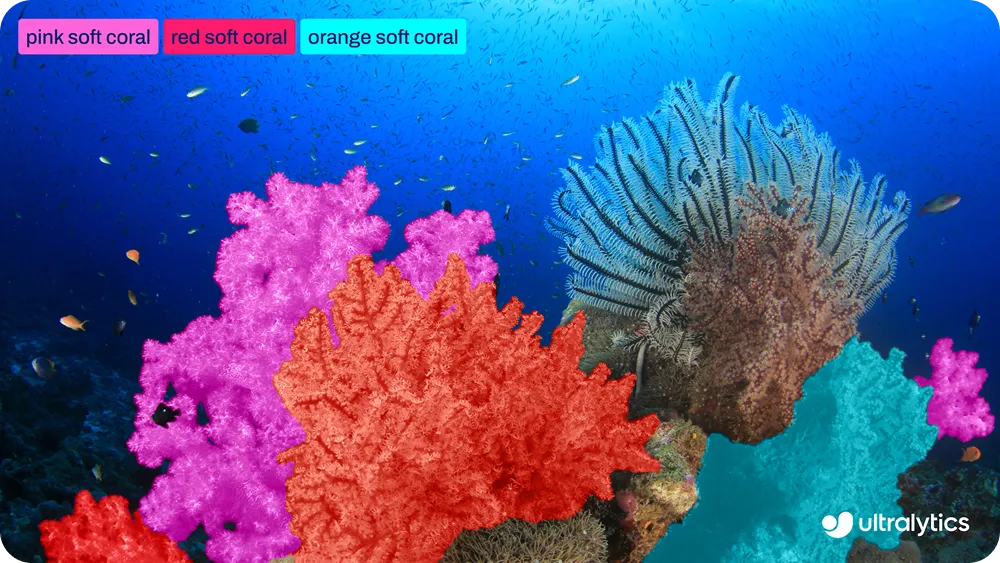

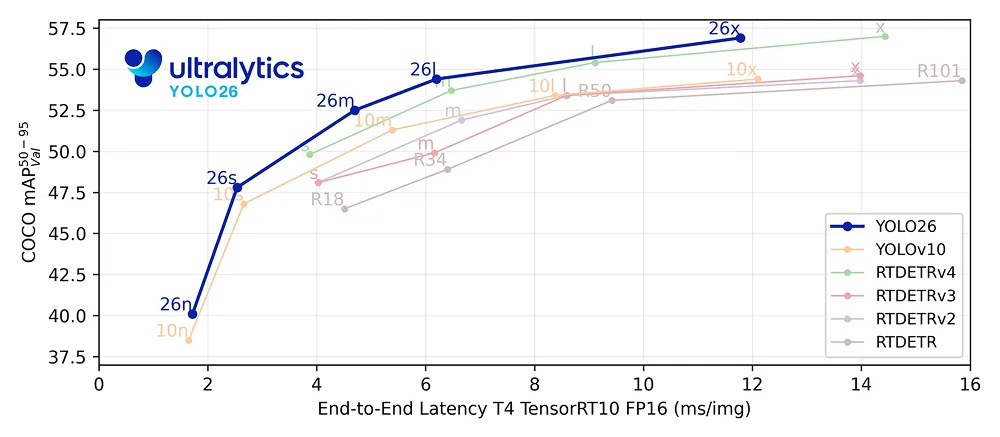

Last week, we released Ultralytics YOLO26, setting a new standard for edge-first, real-time computer vision models. Similar to previous Ultralytics YOLO models, such as Ultralytics YOLO11, YOLO26 supports the core computer vision tasks users are familiar with, including object detection, instance segmentation, and pose estimation.

However, YOLO26 isn’t just an incremental update. While the supported tasks may look familiar, this new model represents an innovative step forward in how computer vision models are trained. With YOLO26, the focus extends beyond inference efficiency to making training more stable.

YOLO26 was designed with the full training lifecycle in mind. This means faster convergence, more reliable training runs, and consistent model behavior. These improvements are especially important in real-world workflows, where training reliability directly affects how quickly models can be iterated on and deployed.

To enable this, YOLO26 introduces several targeted training innovations such as Progressive Loss Balancing (ProgLoss), Small-Target-Aware Label Assignment (STAL), and the MuSGD optimizer. Together, these changes improve how learning loss is balanced, how labels are assigned, and how optimization behaves over time.

In this article, we’ll explore how each of these mechanisms works and why they make Ultralytics YOLO26 easier to train and more reliable at scale. Let’s get started!

Ultralytics YOLO26 natively streamlines the entire inference pipeline by removing reliance on post-processing steps such as Non-Maximum Suppression. Instead of generating many overlapping predictions and filtering them afterward, YOLO26 produces final detections directly from the network.

It makes YOLO26 an end-to-end model, where prediction, duplicate resolution, and final outputs are all learned within the network itself. This simplifies deployment and improves inference efficiency, while also shaping how the model learns during training.

In an end-to-end system like this, training and inference are tightly connected. Since there is no external post-processing stage to correct predictions later, the model has to learn to make clear and confident decisions during training itself.

This makes alignment between training objectives and inference behavior especially important. Any mismatch between how the model is trained and how it is used at inference time can lead to unstable learning or slower convergence.

YOLO26 handles this by designing its training process around real-world usage from the start. Rather than focusing only on inference speed, the training system is built to support stable learning over long runs, consistent convergence across model sizes from Nano to Extra Large, and robust performance on diverse datasets.

One of the key training innovations in Ultralytics YOLO26 builds on a two-head training approach used in previous YOLO models. In object detection models, a head refers to the part of the network responsible for making predictions.

In other words, detection heads learn to predict where objects are located in an image and what those objects are. They do this by regressing bounding box coordinates, meaning they learn to estimate the position and size of each object in the input image.

During training, the model learns by minimizing a loss, which is a numerical measure of how far its predictions are from the correct answers or ground truth. A lower loss means the model’s predictions are closer to the ground truth, while a higher loss indicates larger errors. The loss calculation guides how the model updates its parameters during training.

YOLO26 uses two detection heads during training that share the same underlying model but serve different purposes. The one-to-one head is the head used at inference time. It learns to associate each object with a single, confident prediction, which is essential for YOLO26’s end-to-end, NMS-free design.

Meanwhile, the one-to-many head is used only during training. It allows multiple predictions to be associated with the same object, providing denser supervision. This richer learning signal helps stabilize training and improve accuracy, especially in the early stages.

In YOLO26, both heads use the same loss calculation for box regression and classification. Earlier implementations applied a fixed balance between these two loss signals throughout training.

In practice, however, the importance of each head changes over time. Dense supervision is most useful early on, while alignment with inference behavior becomes more important later in training. YOLO26 is designed around this insight, which leads directly to how it rebalances learning signals as training progresses.

So, how does Ultralytics YOLO26 handle these changing learning needs during training? It uses Progressive Loss Balancing to adjust how learning signals are weighted over time.

ProgLoss works by dynamically shifting how much each head contributes to the total loss as training progresses. Early on, more weight is placed on the one-to-many head to stabilize learning and improve recall. As training continues, the balance gradually shifts toward the one-to-one head, aligning training more closely with inference behavior.

This gradual transition allows YOLO26 to learn in the right order. Instead of forcing the model to optimize competing objectives all at once, Progressive Loss Balancing prioritizes the most useful learning signal at each stage of training. The result is smoother convergence, fewer unstable training runs, and more consistent final performance.

Another interesting training improvement in Ultralytics YOLO26 comes from how the model assigns training targets to predictions, a process known as label assignment. It is responsible for matching ground truth objects to candidate predictions, often called anchors.

These matches determine which predictions receive supervision and contribute to the loss. YOLO26 builds on an existing label assignment method called Task Alignment Learning (TAL), which was designed to better align classification and localization during training.

While TAL works well for most objects, training revealed an important limitation. During the matching process, very small objects could be dropped entirely. In practice, objects smaller than about 8 pixels in a 640-pixel input image often failed to receive any anchor assignments. When this happens, the model receives little or no supervision for those objects, making it difficult to learn to detect them reliably.

To address this issue, YOLO26 introduces Small-Target-Aware Label Assignment (STAL). STAL modifies the assignment process to ensure that small objects aren't ignored during training. Specifically, it enforces a minimum of four anchor assignments for objects smaller than 8 pixels. This guarantees that even tiny objects consistently contribute to the training loss.

By strengthening supervision for small targets, STAL improves learning stability and detection performance in scenarios where small or distant objects are common. This improvement is especially important for edge-first YOLO26 applications such as aerial imagery, robotics, and Internet of Things (IoT) systems, where objects are often small, distant, or partially visible and reliable detection is critical.

To support more stable and predictable training, Ultralytics YOLO26 also introduces a new optimizer called MuSGD. This optimizer is designed to improve convergence and training reliability in end-to-end detection models, especially as model size and training complexity increase.

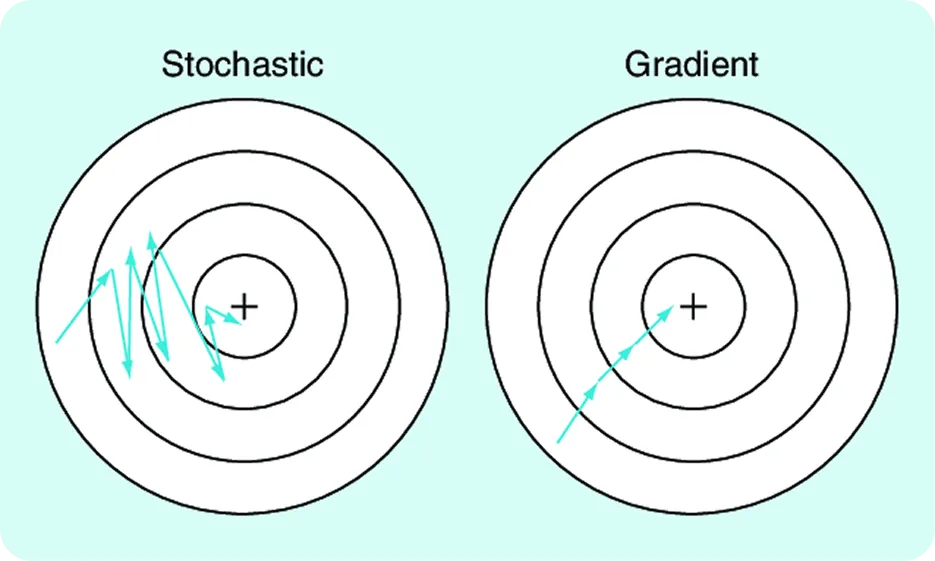

In order for a neural network to learn, and consequently change the weights accordingly, during training, we compute an error (also called ¨loss¨). The model, therefore, measures how wrong its predictions are using a loss value, computes gradients that indicate how its parameters should change, and then updates those parameters to reduce error. The Stochastic Gradient Descent (SGD) is a widely used optimizer that performs these updates, making training efficient and scalable.

MuSGD builds on this familiar foundation by incorporating optimization ideas inspired by Muon, a method used in large language model training. These ideas were influenced by recent advances such as Moonshot AI’s Kimi K2, which demonstrated improved training behavior through more structured parameter updates.

YOLO26 uses a hybrid update strategy. Some parameters are updated using a combination of Muon-inspired updates and SGD, while others use SGD alone. This makes it possible for YOLO26 to introduce additional structure into the optimization process while maintaining the robustness and generalization properties that have made SGD effective.

The result is smoother optimization, faster convergence, and more predictable training behavior across model sizes, making MuSGD a key part of why YOLO26 is easier to train and more reliable at scale.

Ultralytics YOLO26’s training innovations, combined with key features such as its end-to-end, NMS-free, and edge-first design, make the model easier to train and more reliable at scale. You might be wondering what that really means for computer vision applications.

In action, it makes bringing computer vision to where it actually runs much easier. Models train more predictably, scale more consistently across sizes, and are simpler to adapt to new datasets. This reduces the friction between experimentation and deployment, especially in environments where reliability and efficiency matter as much as raw performance.

For instance, in robotics and industrial vision applications, models often need to be retrained frequently as environments, sensors, or tasks change. With YOLO26, teams can iterate faster without worrying about unstable training runs or inconsistent behavior across model sizes.

Reliable computer vision systems depend as much on how models are trained as on how they perform at inference time. By improving how learning signals are balanced, how small objects are handled, and how optimization progresses, YOLO26 makes training more stable and easier to scale. This focus on reliable training helps teams move more smoothly from experimentation to real-world deployment, especially in edge-first applications.

Want to learn about AI? Visit our GitHub repository to discover more. Join our active community and find out about innovations in sectors like AI in logistics and Vision AI in the automotive industry. To get started with computer vision today, check out our licensing options.