Apple unveils FastVLM at CVPR 2025. This open‑source vision‑language model features the FastViTHD encoder, delivering up to 85 × faster time‑to‑first‑token.

Apple unveils FastVLM at CVPR 2025. This open‑source vision‑language model features the FastViTHD encoder, delivering up to 85 × faster time‑to‑first‑token.

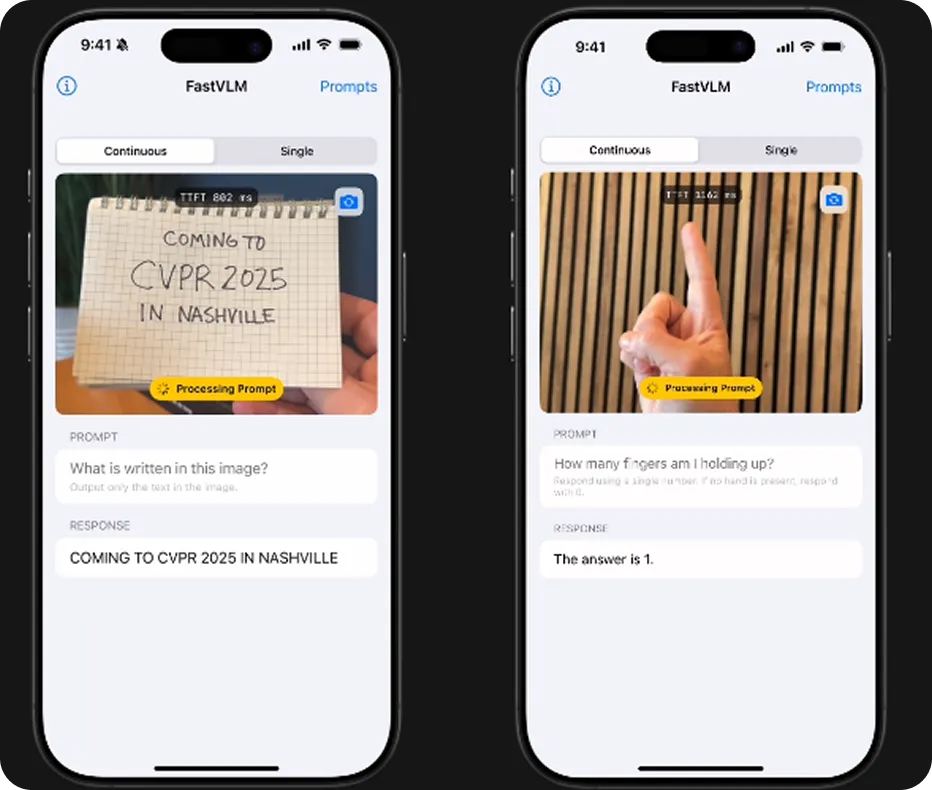

At the CVPR 2025 conference, Apple introduced a new open-source AI model called FastVLM. It is built to understand both images and language, and it runs on Apple devices like iPhones, iPads, and Macs. This means it can deliver smart results quickly, without sending your data to the cloud.

What makes FastVLM particularly interesting is how fast and efficient it is. Apple developed a new vision encoder called FastViTHD, which helps the model interpret high-quality images while using less memory and power. All processing takes place locally on the device, resulting in faster response times while preserving user privacy.

In this article, we’ll explore how FastVLM works, what sets it apart, and why this Apple release could be a significant step forward for everyday AI applications on your devices.

Before we dive into what makes FastVLM special, let’s walk through what the “VLM” in its name stands for. It refers to a vision-language model, which is designed to understand and connect visual content with language.

VLMs bring together visual understanding and language, enabling them to perform tasks like describing a photo, answering questions about a screenshot, or extracting text from a document. Vision-language models typically work in two parts: one processes the image and converts it into data, while the other interprets that data to generate a response you can read or hear.

You may have already used this kind of AI innovation without even realizing it. Apps that scan receipts, read ID cards, generate image captions, or help people with low vision interact with their screens often rely on vision-language models running quietly in the background.

Apple built FastVLM to perform the same tasks as other vision-language models, but with greater speed, stronger privacy, and optimized performance on its own devices. It can understand the contents of an image and respond with text, but unlike many models that rely on cloud servers, FastVLM can run entirely on your iPhone, iPad, or Mac.

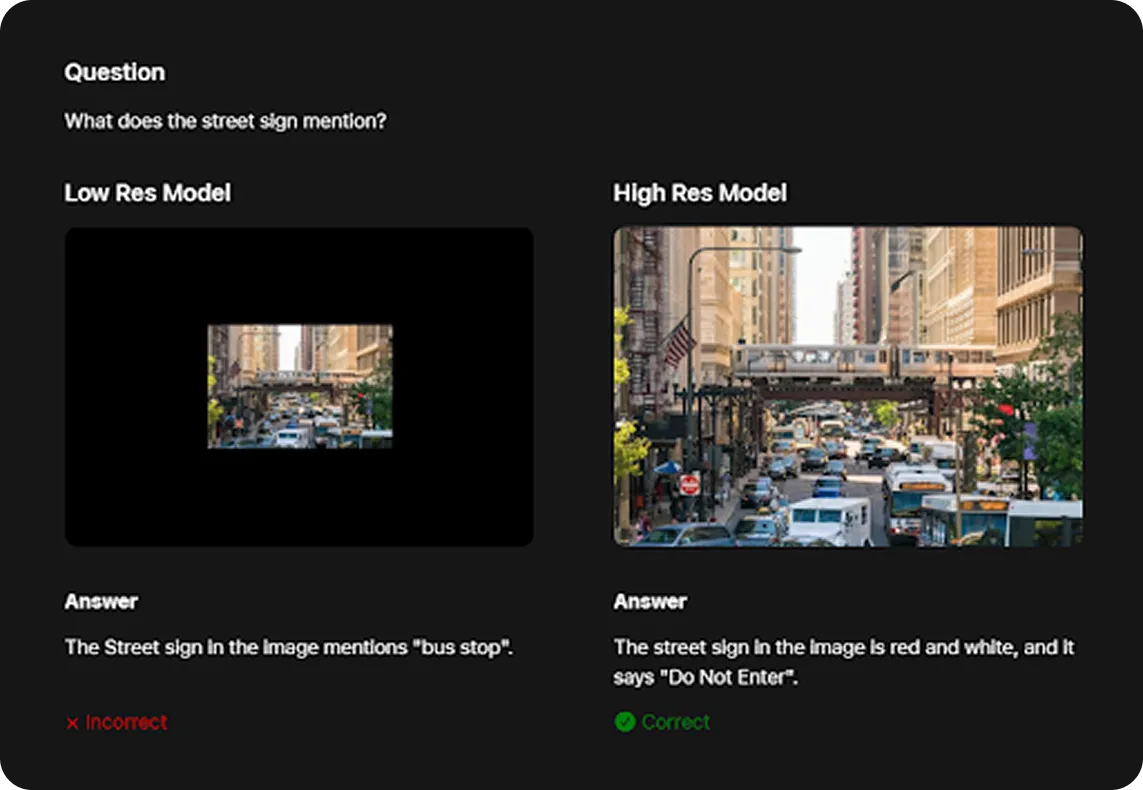

VLMs generally perform better with high-resolution images. For example, as shown below, FastVLM could only correctly identify a street sign as “Do Not Enter” when given a high-resolution version of the image. However, high-res inputs usually slow models down. This is where FastViTHD makes a difference.

Apple’s new vision encoder, FastViTHD, helps FastVLM process high-quality images more efficiently, using less memory and power. Specifically, FastViTHD is lightweight enough to run smoothly even on smaller devices.

Also, FastVLM is publicly available on the FastVLM GitHub repository, where developers can access the source code, make changes, and use it in their own apps in accordance with Apple’s license terms.

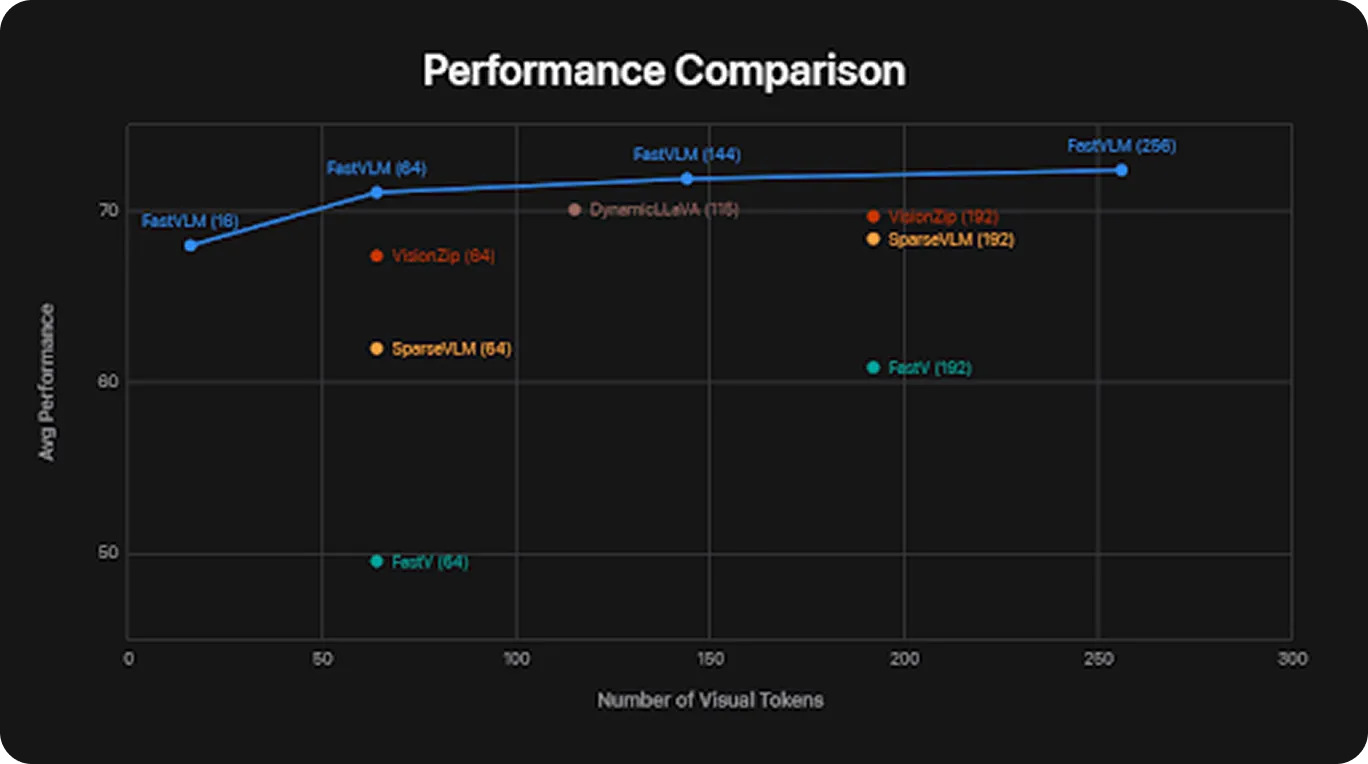

In comparison to other vision-language models, FastVLM is optimized to run on everyday devices such as smartphones and laptops. In performance tests, FastVLM generated its first word or output up to 85 times faster than models like LLaVA-OneVision-0.5B.

Here’s a glimpse of some of the standard benchmarks FastVLM has been evaluated on:

Across these benchmarks, FastVLM achieved competitive results while using fewer resources. It brings practical visual AI to everyday devices like phones, tablets, and laptops.

Next, let’s take a closer look at FastViTHD, the vision encoder that plays a crucial role in FastVLM’s image processing performance.

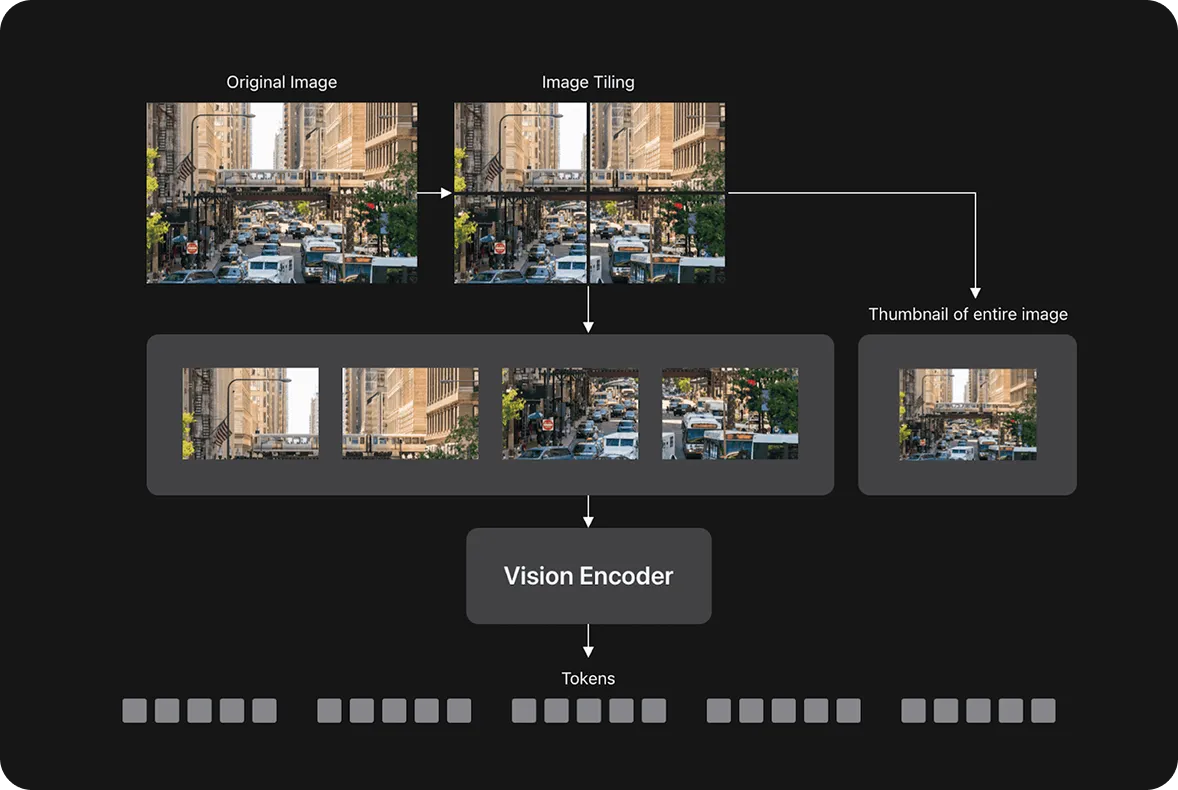

Most vision language models split an image into thousands of small patches called tokens. The more tokens, the more time and power the model needs to understand the image. This can make things slow, especially on phones or laptops.

FastViTHD avoids the slowdown that comes with processing too many tokens by using fewer of them, while still understanding the full image. It combines two approaches: transformers, which are good at modeling patterns and relationships, and convolutional layers, which are efficient at processing visual data. The result is a system that works faster and uses less memory.

According to Apple, FastViTHD is up to 3.4 times smaller than some traditional vision encoders, while still maintaining high accuracy. Instead of relying on model optimization techniques like token pruning (removing less important image patches to speed up processing), it achieves efficiency through a simpler, more streamlined architecture.

Apple has released FastVLM in three different sizes: 0.5B, 1.5B, and 7B parameters (where "B" stands for billion, referring to the number of trainable weights in the model). Each version is designed to fit different types of devices. The smaller models can run on phones and tablets, while the larger 7B model is better suited for desktops or more demanding tasks.

This gives developers the flexibility to choose what works best for their apps. They can build something fast and lightweight for mobile or something more complex for larger systems, all while using the same underlying model architecture.

Apple trained FastVLM model variants using the LLaVA‑1.5 pipeline, a framework for aligning vision and language models. For the language component, they evaluated FastVLM using existing open-source models like Qwen and Vicuna, which are known for generating natural and coherent text. This setup allows FastVLM to process both simple and complex images and produce readable, relevant responses.

You might be wondering, why does FastVLM’s efficient image processing matter? It comes down to how smoothly apps can work in real time without relying on the cloud. FastVLM can handle high-resolution images, up to 1152 by 1152 pixels, while staying fast and light enough to run directly on your device.

This means apps can describe what the camera sees, scan receipts as they are captured, or respond to changes on the screen, all while keeping everything local. It is especially helpful for areas like education, accessibility, productivity, and photography.

Since FastViTHD is efficient even when it comes to large images, it helps keep devices responsive and cool. It works with all model sizes, including the smallest one, which runs on entry-level iPhones. That means the same AI features can work across phones, tablets, and Macs.

FastVLM can power a wide range of applications, thanks to its key benefits like speed, efficiency, and on-device privacy. Here are a few ways it can be used:

On-device AI assistants: FastVLM can work well with AI assistants that need to quickly understand what is on the screen. Since it runs directly on the device and keeps data private, it can help with tasks like reading text, identifying buttons or icons, and guiding users in real time without needing to send information to the cloud.

FastVLM brings on-device vision-language AI to Apple devices, combining speed, privacy, and efficiency. With its lightweight design and open-source release, it enables real-time image understanding across mobile and desktop apps.

This helps make AI more practical and accessible for everyday use, and gives developers a solid foundation for building useful, privacy-focused applications. Looking ahead, it is likely that vision-language models will play an important role in how we interact with technology, making AI more responsive, context-aware, and helpful in everyday situations.

Explore our GitHub repository to learn more about AI. Join our active community and discover innovations in sectors like AI in the automotive industry and Vision AI in manufacturing. To get started with computer vision today, check out our licensing options.