Optimizing Ultralytics YOLO models with the TensorRT integration

Learn how to export Ultralytics YOLO models using the TensorRT integration for faster, more efficient AI performance on NVIDIA GPUs for real-time applications.

Learn how to export Ultralytics YOLO models using the TensorRT integration for faster, more efficient AI performance on NVIDIA GPUs for real-time applications.

Consider a self-driving car moving through a busy street with only milliseconds to detect a pedestrian stepping off the curb. At the same time, it might need to recognize a stop sign partially hidden by a tree or react quickly to a nearby vehicle swerving into its lane. In such situations, speed and real-time responses are critical.

This is where artificial intelligence (AI), specifically computer vision, a branch of AI that helps machines interpret visual data, plays a key role. For computer vision solutions to work reliably in real-world environments, oftentimes, they often need to process information quickly, handle multiple tasks at once, and use memory efficiently.

One way to achieve this is through hardware acceleration, using specialized devices like graphics processing units (GPUs) to run models faster. NVIDIA GPUs are especially well known for such tasks, thanks to their ability to deliver low latency and high throughput.

However, running a model on a GPU as-is doesn’t always guarantee optimal performance. Vision AI models typically require optimization to fully leverage the capabilities of hardware devices. In order to achieve full performance with specific hardware, we need to compile the model to use the specific set of instructions for the hardware.

For example, TensorRT is an export format and optimization library developed by NVIDIA to enhance performance on high-end machines. It uses advanced techniques to significantly reduce inference time while maintaining accuracy.

In this article, we’ll explore the TensorRT integration supported by Ultralytics and walk through how you can export your YOLO11 model for faster, more efficient deployment on NVIDIA hardware. Let’s get started!

TensorRT is a toolkit developed by NVIDIA to help AI models run faster and more efficiently on NVIDIA GPUs. It’s designed for real-world applications where speed and performance really matter, like self-driving cars and quality control in manufacturing and pharmaceuticals.

TensorRT includes tools like compilers and model optimizers that can work behind the scenes to make sure your models run with low latency and can handle a higher throughput.

The TensorRT integration supported by Ultralytics works by optimizing your YOLO model to run more efficiently on GPUs using methods like reducing precision. This refers to using lower-bit formats, such as 16-bit floating-point (FP16) or 8-bit integer (INT8), to represent model data, which reduces memory usage and speeds up computation with minimal impact on accuracy.

Also, compatible neural network layers are fused in optimized TensorRT models to reduce memory usage, resulting in faster and more efficient inference.

Before we discuss how you can export YOLO11 using the TensorRT integration, let’s take a look at some key features of the TensorRT model format:

Exporting Ultralytics YOLO models like Ultralytics YOLO11 to the TensorRT model format is easy. Let’s walk through the steps involved.

To get started, you can install the Ultralytics Python package using a package manager like ‘pip.’ This can be done by running the command “pip install ultralytics” in your command prompt or terminal.

After successfully installing the Ultralytics Python Package, you can train, test, fine-tune, export, and deploy models for various computer vision tasks, such as object detection, classification, and instance segmentation. While installing the package, if you encounter any difficulties, you can refer to the Common Issues guide for solutions and tips.

For the next step, you’ll need an NVIDIA device. Use the code snippet below to load and export YOLOv11 to the TensorRT model format. It loads a pre-trained nano variant of the YOLO11 model (yolo11n.pt) and exports it as a TensorRT engine file (yolo11n.engine), making it ready for deployment across NVIDIA devices.

from ultralytics import YOLO

model = YOLO("yolo11n.pt")

model.export(format="engine") After converting your model to TensorRT format, you can deploy it for various applications.

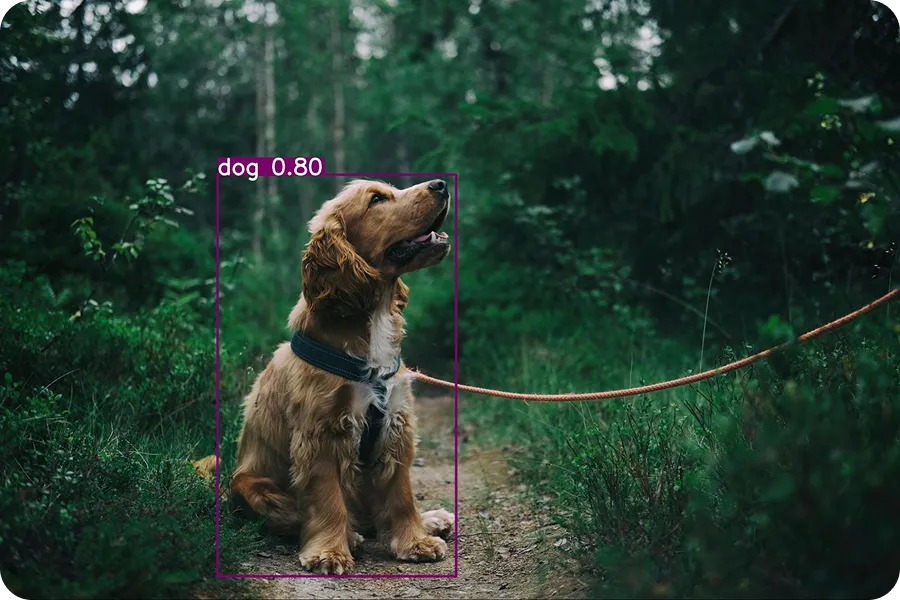

The example below shows how to load the exported YOLO11 model (yolo11n.engine) and run an inference using it. Inferencing involves using the trained model to make predictions on new data. In this case, we’ll use an input image of a dog to test the model.

tensorrt_model = YOLO("yolo11n.engine")

results = tensorrt_model("https://images.pexels.com/photos/1254140/pexels-photo-1254140.jpeg?auto=compress&cs=tinysrgb&w=1260&h=750&dpr=2.jpg", save=True)When you run this code, the following output image will be saved in the runs/detect/predict folder.

The Ultralytics Python package supports various integrations that allow exporting YOLO models to different formats like TorchScript, CoreML, ONNX, and TensorRT. So, when should you choose to use the TensorRT integration?

Here are a few factors that set the TensorRT model format apart from other export integration options:

Ultralytics YOLO models exported to the TensorRT format can be deployed across a wide range of real-world scenarios. These optimized models are especially useful where fast, efficient AI performance is key. Let’s explore some interesting examples of how they can be used.

A wide range of tasks in retail stores, such as scanning barcodes, weighing products, or packaging items, are still handled manually by staff. However, relying solely on employees can slow down operations and lead to customer frustration, especially at the checkout. Long lines are inconvenient for both shoppers and store owners. Smart self-checkout counters are a great solution to this problem.

These counters use computer vision and GPUs to speed up the process, helping reduce wait times. Computer vision enables these systems to see and understand their environment through tasks like object detection. Advanced models like YOLO11, when optimized with tools like TensorRT, can run much faster on GPU devices.

These exported models are well-suited for smart retail setups using compact but powerful hardware devices like the NVIDIA Jetson Nano, designed specifically for edge AI applications.

A computer vision model like YOLO11 can be custom-trained to detect defective products in the manufacturing industry. Once trained, the model can be exported to the TensorRT format for deployment in facilities equipped with high-performance AI systems.

As products move along conveyor belts, cameras capture images, and the YOLO11 model, running in TensorRT format, analyzes them in real time to spot defects. This setup allows companies to catch issues quickly and accurately, reducing errors and improving efficiency.

Similarly, industries such as pharmaceuticals are using these types of systems to identify defects in medical packaging. In fact, the global market for smart defect detection systems is set to grow to $5 billion by 2026.

While the TensorRT integration brings to the table many advantages, like faster inference speeds and reduced latency, here are a few limitations to keep in mind:

Exporting Ultralytics YOLO models to the TensorRT format makes them run significantly faster and more efficiently, making them ideal for real-time tasks like detecting defects in factories, powering smart checkout systems, or monitoring busy urban areas.

This optimization helps the models perform better on NVIDIA GPUs by speeding up predictions and reducing memory and power usage. While there are a few limitations, the performance boost makes TensorRT integration a great choice for anyone building high-speed computer vision systems on NVIDIA hardware.

Want to learn more about AI? Explore our GitHub repository, connect with our community, and check out our licensing options to jumpstart your computer vision project. Find out more about innovations like AI in manufacturing and computer vision in the logistics industry on our solutions pages.