Enhancing vehicle re-identification with Ultralytics YOLO models

Learn how Ultralytics YOLO models can play a role in vehicle re-identification solutions by providing precise and accurate detections.


When you watch a Formula One race, it is easy to spot your favorite team’s car. The bright red of Ferrari or the silver of Mercedes stands out lap after lap.

Asking a machine to do the same, not on a clean racetrack but on crowded city streets filled with traffic, is far more challenging. That’s why vehicle re-identification (vehicle re-ID) has been gaining attention in the AI space recently.

Vehicle re-identification gives machines the ability to recognize the same vehicle across multi-view or non-overlapping cameras. It also aims to identify vehicles after temporary occlusion (when a vehicle is partially hidden) or shifts in lighting and viewpoint.

A core technology powering vehicle re-ID is computer vision. Computer vision is a subfield of artificial intelligence that focuses on teaching machines to understand and interpret visual information, such as images and video. Using this technology, AI systems can analyze vehicle features and track them reliably across large camera networks for applications like urban surveillance and traffic monitoring.

In particular, Vision AI models such as Ultralytics YOLO11 and the upcoming Ultralytics YOLO26 support tasks like object detection and tracking. They can quickly locate vehicles in each frame and follow their movement through a scene. When these models are paired with dedicated vehicle re-identification networks, the resulting system can recognize the same vehicle across different camera feeds, even when the views or lighting conditions change.

In this article, we look at how vehicle re-identification works, the technology that makes it possible, and where it is being used in intelligent transportation systems. Let’s get started!

Vehicle re-identification is an important application in computer vision. It focuses on recognizing the same vehicle as it appears across different, non-overlapping cameras, keeping its identity consistent as it moves through a city. This is challenging because each camera may capture the vehicle from a different angle, under different lighting, or with partial occlusion.

Consider a scenario where a blue sedan passes through an intersection and later appears on a different street, watched by another camera. The angle, lighting, and background have all changed, and other cars may briefly block the view. Despite this, the vehicle re-ID system still needs to determine that it is the same vehicle.

Recent advances in deep learning, especially convolutional neural networks (CNNs) and transformer-based models, have made this process far more accurate. These models extract meaningful visual patterns that let the system tell look-alike vehicles apart and pick out the correct one.

In intelligent transportation systems, this capability supports continuous monitoring, route reconstruction, and citywide traffic analysis, giving smart-city systems a clearer picture of how vehicles move and helping improve road safety and traffic efficiency.

Typically, video footage from intersections, parking areas, and highways is analyzed using vehicle re-identification techniques to determine whether the same vehicle appears across different cameras. This concept is similar to person re-identification, where systems track individuals across multiple views, but here the focus is on analyzing vehicle-specific features instead of human appearance.

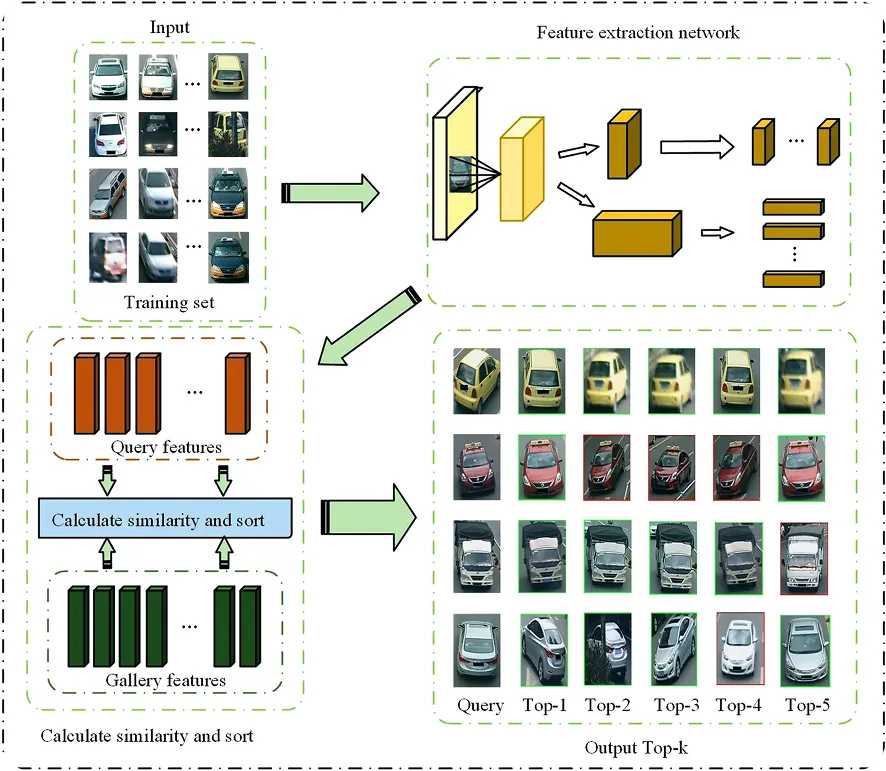

This process involves several key steps, each designed to help the system detect vehicles, extract their visual features, and match them reliably across different viewpoints.

At a high level, the system first detects vehicles in each frame and then extracts features such as color, shape, and texture to create a unique digital representation, or embedding, for each one. These embeddings are compared across time and across cameras, often supported by object tracking and spatio-temporal checks, to decide whether two sightings belong to the same vehicle.
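To make the final decision step concrete, here is a minimal sketch in plain Python, assuming each sighting has already been reduced to an embedding vector and a timestamp in seconds. The function names, the 0.7 similarity threshold, and the 5 to 300 second travel window are hypothetical values chosen for illustration; real systems tune them for each camera pair.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity between two embedding vectors (plain Python lists)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def same_vehicle(emb_a, t_a, emb_b, t_b, sim_thresh=0.7, min_travel_s=5.0, max_travel_s=300.0):
    """Decide whether two sightings (embedding, timestamp in seconds) show the same vehicle.

    Both cues must agree: the appearance embeddings must be similar enough, and the
    time gap between the two cameras must be physically plausible (spatio-temporal check).
    """
    gap = abs(t_b - t_a)
    plausible = min_travel_s <= gap <= max_travel_s
    return plausible and cosine_similarity(emb_a, emb_b) >= sim_thresh
```

The time gate is cheap, so it also works as a pre-filter: pairs that fail it never need an embedding comparison.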

Here is a closer look at this process:

Ultralytics YOLO models play an important supporting role in vehicle re-identification pipelines. While they don’t perform Re-ID on their own, they provide capabilities that Re-ID networks depend on for accurate cross-camera matching, such as fast detection and stable tracking.

Next, let’s take a closer look at how Ultralytics YOLO models like YOLO11 can enhance vehicle re-identification systems.

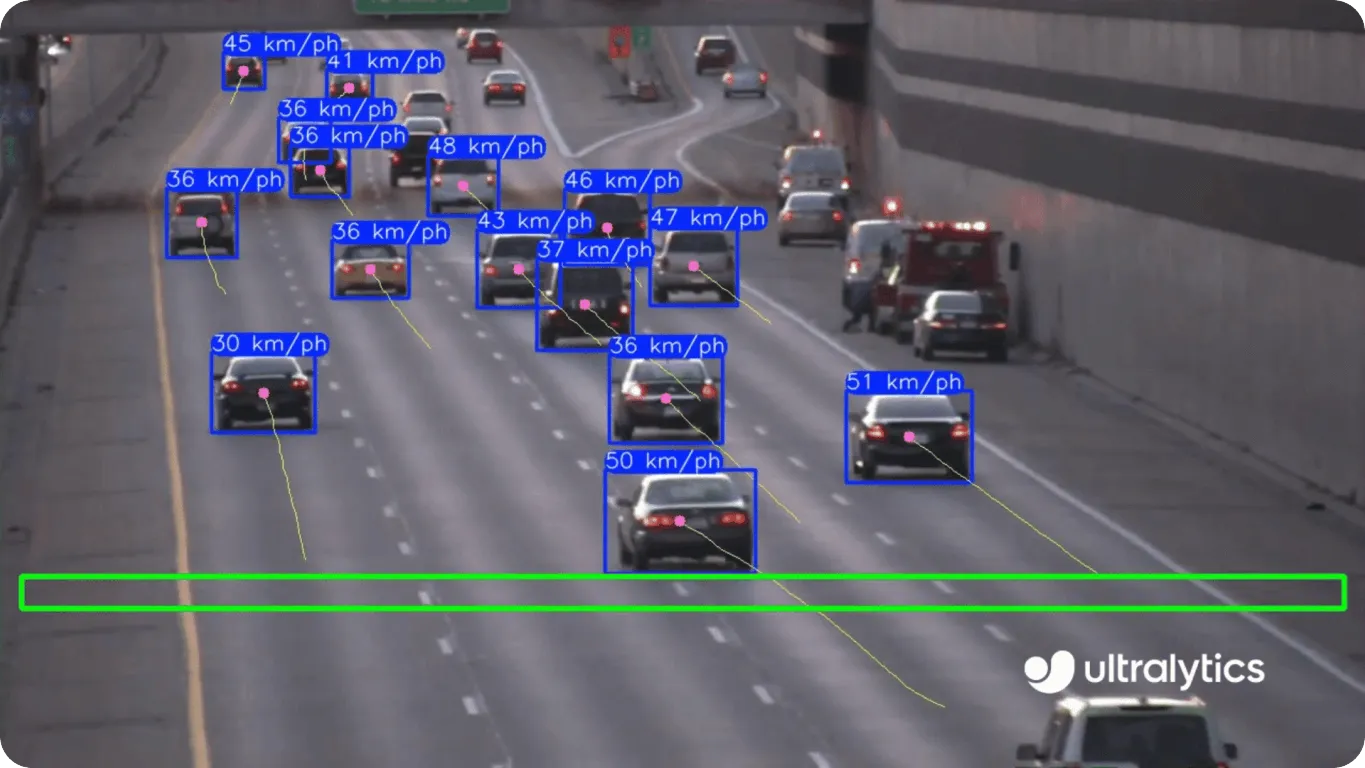

The foundation of any vehicle re-identification system is accurate object detection. Ultralytics YOLO models like YOLO11 are a great option for this, since they can quickly detect vehicles in each frame, even in busy scenes with partial occlusions, heavy traffic, or changing lighting conditions.

They can also be custom-trained, meaning you can fine-tune the model on your own dataset so it learns to recognize specific vehicle types, such as taxis, delivery vans, or fleet vehicles. This is especially useful when a solution requires more specialized detection. By providing clean, precise bounding boxes, Ultralytics YOLO models give Re-ID networks high-quality inputs to work with, which leads to more reliable matching across cameras.
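As a minimal sketch of the detection step, the snippet below runs a YOLO model through the Ultralytics Python API and keeps only vehicle classes. It assumes the COCO-pretrained `yolo11n.pt` weights, where cars, motorcycles, buses, and trucks are class IDs 2, 3, 5, and 7; the helper names `keep_vehicles` and `detect_vehicles` are hypothetical. For a custom-trained model, pass a `class_map` built from that model's `model.names` instead.

```python
# COCO class IDs for vehicles; these apply to the COCO-pretrained YOLO11 detection weights.
VEHICLE_CLASSES = {2: "car", 3: "motorcycle", 5: "bus", 7: "truck"}


def keep_vehicles(boxes, class_ids, scores, min_conf=0.25, class_map=VEHICLE_CLASSES):
    """Return (box, label, score) tuples for vehicle detections at or above min_conf.

    boxes are [x1, y1, x2, y2] pixel coordinates, aligned with class_ids and scores.
    """
    kept = []
    for box, cls, score in zip(boxes, class_ids, scores):
        cls = int(cls)  # Ultralytics reports class IDs as floats
        if cls in class_map and score >= min_conf:
            kept.append((box, class_map[cls], score))
    return kept


def detect_vehicles(model, source, min_conf=0.25, class_map=VEHICLE_CLASSES):
    """Run an Ultralytics YOLO model on an image or frame and return vehicle detections."""
    results = model.predict(source, classes=list(class_map), conf=min_conf, verbose=False)
    detections = []
    for r in results:
        detections.extend(
            keep_vehicles(r.boxes.xyxy.tolist(), r.boxes.cls.tolist(), r.boxes.conf.tolist(), min_conf, class_map)
        )
    return detections
```

With Ultralytics installed, usage would look like `detect_vehicles(YOLO("yolo11n.pt"), "intersection.jpg")` after `from ultralytics import YOLO`, where the image path is a placeholder. Loading the model once and passing it in avoids reloading weights for every frame.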

Once vehicles are detected, models like YOLO11 can also support stable object tracking within a single camera view. Object tracking is the process of following a detected vehicle across consecutive frames and assigning it a consistent ID as it moves.

With built-in support for tracking algorithms such as ByteTrack and BoT-SORT in the Ultralytics Python package, YOLO11 can maintain consistent IDs as vehicles move through a scene. This stable tracking reduces identity switches before the Re-ID system takes over, which ultimately improves the accuracy of cross-camera matching.
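In the Ultralytics package, the tracker is chosen with the `tracker` argument of `model.track`, which accepts the bundled `bytetrack.yaml` and `botsort.yaml` configurations. The sketch below assumes a YOLO model object and a video path, and collects each track ID's boxes into a per-camera tracklet; `update_tracklets` and `track_video` are hypothetical helper names.

```python
from collections import defaultdict


def update_tracklets(tracklets, frame_idx, track_ids, boxes):
    """Append this frame's boxes to each track ID's history (its tracklet)."""
    for tid, box in zip(track_ids, boxes):
        tracklets[int(tid)].append((frame_idx, box))
    return tracklets


def track_video(model, source, tracker="bytetrack.yaml", classes=(2, 3, 5, 7)):
    """Track vehicles through a video with a built-in Ultralytics tracker.

    tracker can be "bytetrack.yaml" or "botsort.yaml"; classes defaults to the
    COCO vehicle IDs (car, motorcycle, bus, truck).
    """
    tracklets = defaultdict(list)
    # stream=True yields one Results object per frame instead of holding the whole video in memory
    results = model.track(source, tracker=tracker, classes=list(classes), stream=True, verbose=False)
    for frame_idx, r in enumerate(results):
        if r.boxes.id is None:  # no confirmed tracks in this frame
            continue
        update_tracklets(tracklets, frame_idx, r.boxes.id.tolist(), r.boxes.xyxy.tolist())
    return tracklets
```

The resulting tracklets, one list per local track ID, are the units that a cross-camera Re-ID stage later compares.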

In addition to standard motion-based tracking, the Ultralytics Python package includes optional appearance-based Re-ID capabilities within its BoT-SORT tracker. This means the tracker can use visual appearance features, not just motion patterns or bounding-box overlap, to determine whether two detections belong to the same vehicle.

When enabled, BoT-SORT extracts lightweight appearance embeddings from the detector or from a YOLO11 classification model and uses them to verify identity between frames. This additional appearance cue helps the tracker maintain more stable IDs in challenging situations, such as brief occlusions, vehicles passing close together, or small shifts caused by camera motion.
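The idea of using appearance to confirm a motion-based match can be sketched as a fused matching cost. The version below is a simplified, illustrative take on the gating used in BoT-SORT, not the Ultralytics implementation: appearance is trusted only when the boxes overlap by at least `proximity_thresh` (IoU) and the embeddings reach at least `appearance_thresh` cosine similarity, and the lower of the two costs wins. The threshold names mirror BoT-SORT's configuration keys, but the exact arithmetic here is an assumption.

```python
import math


def iou(a, b):
    """Intersection-over-union of two [x1, y1, x2, y2] boxes."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def cosine_similarity(u, v):
    """Cosine similarity of two appearance embeddings."""
    dot = sum(x * y for x, y in zip(u, v))
    norm = math.sqrt(sum(x * x for x in u)) * math.sqrt(sum(y * y for y in v))
    return dot / norm if norm else 0.0


def association_cost(track_box, track_emb, det_box, det_emb, proximity_thresh=0.5, appearance_thresh=0.8):
    """Fused track-to-detection cost in [0, 1]; lower means a more likely match.

    The appearance cost is only used when the boxes are close enough (IoU >= proximity_thresh)
    and the embeddings are similar enough (cosine >= appearance_thresh); otherwise it is set
    to the maximum cost, so the decision falls back to motion (IoU) alone.
    """
    iou_cost = 1.0 - iou(track_box, det_box)
    app_cost = 1.0 - cosine_similarity(track_emb, det_emb)
    if iou_cost > 1.0 - proximity_thresh or app_cost > 1.0 - appearance_thresh:
        app_cost = 1.0
    return min(iou_cost, app_cost)
```

A tracker would compute this cost for every track and detection pair in a frame and then solve the resulting assignment problem.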

While this built-in Re-ID is not intended to replace full cross-camera vehicle re-identification, it does improve identity consistency within a single camera view and produces cleaner tracklets that downstream Re-ID modules can rely on. To use these appearance-based tracking features, you simply enable Re-ID in a BoT-SORT tracker configuration file by setting “with_reid” to “True” and selecting which model will provide the appearance features.

For more details, you can check out the Ultralytics documentation page on object tracking, which explains the available Re-ID options and how to configure them.
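As a sketch of that configuration step, the snippet below writes a custom tracker file with Re-ID switched on and returns its path for use with `model.track(..., tracker=...)`. The keys mirror the `botsort.yaml` bundled with recent Ultralytics releases as we understand it, and the numeric values are assumed defaults; compare them with the copy shipped in your installed version before relying on them. In recent versions, `model: auto` is described as reusing the detector's own features when the detector is a YOLO model. The file name `botsort_reid.yaml` and the helper `write_tracker_config` are hypothetical.

```python
from pathlib import Path

# Tracker settings mirroring Ultralytics' bundled botsort.yaml, with appearance Re-ID enabled.
# Values are assumed defaults; check them against the botsort.yaml in your installed version.
BOTSORT_REID_CONFIG = """\
tracker_type: botsort
track_high_thresh: 0.25
track_low_thresh: 0.1
new_track_thresh: 0.25
track_buffer: 30
match_thresh: 0.8
fuse_score: True
gmc_method: sparseOptFlow
proximity_thresh: 0.5
appearance_thresh: 0.8
with_reid: True
model: auto
"""


def write_tracker_config(path="botsort_reid.yaml"):
    """Write the Re-ID-enabled BoT-SORT config to `path` and return it as a Path."""
    config_path = Path(path)
    config_path.write_text(BOTSORT_REID_CONFIG)
    return config_path
```

With Ultralytics installed, the file would then be passed as `model.track("traffic.mp4", tracker=str(write_tracker_config()))`, where the video path is a placeholder.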

Beyond improving identity stability during tracking, YOLO models also play an important role in preparing clean visual inputs for the Re-ID network itself.

After a vehicle is detected, its bounding box is typically cropped and sent to a re-identification network, which extracts the visual features needed for matching. Because Re-ID models rely heavily on these cropped images, poor inputs, such as blurry, misaligned, or incomplete crops, can lead to weaker embeddings and less reliable cross-camera matching.

Ultralytics YOLO models help reduce these issues by consistently producing clean, well-aligned bounding boxes that fully capture the vehicle of interest. With clearer and more accurate crops, the Re-ID network can focus on meaningful details like color, shape, texture, and other distinguishing features, which leads to more dependable Re-ID performance across camera views.
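A minimal sketch of turning a detector box into a Re-ID crop, assuming boxes in `[x1, y1, x2, y2]` pixel coordinates: the box is padded slightly so the whole vehicle is included, clamped to the frame, and rejected if it is too small to be useful. The 10% padding and 16-pixel minimum are illustrative defaults, and `padded_crop_box` is a hypothetical helper.

```python
import math


def padded_crop_box(box, img_w, img_h, pad=0.1, min_side=16):
    """Expand an [x1, y1, x2, y2] box by `pad` of its width/height on each side, clamp it
    to the image, and return integer pixel bounds, or None if the result is smaller than
    min_side pixels in either dimension."""
    x1, y1, x2, y2 = box
    dx, dy = (x2 - x1) * pad, (y2 - y1) * pad
    left, top = max(0, math.floor(x1 - dx)), max(0, math.floor(y1 - dy))
    right, bottom = min(img_w, math.ceil(x2 + dx)), min(img_h, math.ceil(y2 + dy))
    if right - left < min_side or bottom - top < min_side:
        return None  # too small to give the Re-ID network useful detail
    return left, top, right, bottom
```

For a NumPy image such as an OpenCV frame, the crop is then `frame[top:bottom, left:right]`, which would typically be resized to the Re-ID network's input size before feature extraction.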

Although Ultralytics YOLO models don’t perform re-identification on their own, they provide the critical information that a re-ID network needs to compare vehicles across different camera views. Models like YOLO11 can take care of locating and tracking vehicles within each camera, while the Re-ID model determines whether two vehicle crops from different locations belong to the same identity.

When these components work together (YOLO for detection and tracking, and a dedicated embedding model for feature extraction), they form a complete multi-camera vehicle matching pipeline. This makes it possible to associate the same vehicle as it moves through a larger camera network.
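The hand-off between the two components can be sketched as follows, assuming each camera's tracker has produced tracklets that map a local track ID to per-frame embeddings from some Re-ID network. Each tracklet is summarized by its mean embedding, and tracklets are paired greedily by cosine similarity above a hypothetical 0.7 threshold. Greedy pairing is a simplification; the same assignment can also be solved optimally with the Hungarian algorithm (for example, SciPy's `scipy.optimize.linear_sum_assignment`).

```python
import math


def mean_embedding(embeddings):
    """Average a tracklet's per-frame embeddings and L2-normalize the result."""
    dim = len(embeddings[0])
    mean = [sum(e[i] for e in embeddings) / len(embeddings) for i in range(dim)]
    norm = math.sqrt(sum(x * x for x in mean)) or 1.0  # avoid dividing by zero
    return [x / norm for x in mean]


def match_across_cameras(tracklets_a, tracklets_b, threshold=0.7):
    """Greedily pair tracklets from camera A with tracklets from camera B.

    Each argument maps a local track ID to a list of embeddings. Candidate pairs are
    taken in order of decreasing cosine similarity, and each track is used at most once.
    """
    protos_a = {tid: mean_embedding(embs) for tid, embs in tracklets_a.items()}
    protos_b = {tid: mean_embedding(embs) for tid, embs in tracklets_b.items()}
    # Both prototypes have unit length, so their dot product is the cosine similarity.
    candidates = sorted(
        (
            (sum(x * y for x, y in zip(pa, pb)), ta, tb)
            for ta, pa in protos_a.items()
            for tb, pb in protos_b.items()
        ),
        reverse=True,
    )
    matches, used_a, used_b = {}, set(), set()
    for sim, ta, tb in candidates:
        if sim < threshold:
            break  # all remaining candidates are even less similar
        if ta not in used_a and tb not in used_b:
            matches[ta] = tb
            used_a.add(ta)
            used_b.add(tb)
    return matches
```

Averaging over a tracklet smooths out frames where the vehicle was blurred or partly occluded, which is one reason stable within-camera tracking helps cross-camera matching.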

For instance, in a recent study, researchers used a lightweight YOLO11 model as the vehicle detector in an online multi-camera tracking system. The study found that using YOLO11 helped reduce detection time without sacrificing accuracy, which improved the overall performance of downstream tracking and cross-camera matching.

Now that we have a better understanding of how Ultralytics YOLO models can support vehicle re-identification, let’s take a closer look at the deep learning models that handle the feature extraction and matching steps. These models are responsible for learning how vehicles look, creating robust embeddings, and distinguishing between visually similar vehicles across different camera views.

Here are some examples of the core deep learning components used in object re-identification systems:

In addition to these architectural components, metric learning plays a key role in training vehicle Re-ID models. Loss functions such as triplet loss and contrastive loss teach the system to produce discriminative embeddings by pulling together images of the same vehicle while pushing apart images of different ones. They are often combined with a cross-entropy loss that treats each vehicle identity in the training set as a separate class.
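Triplet loss is the simplest of these to illustrate. For an anchor image a, a positive p showing the same vehicle, and a negative n showing a different vehicle, the loss is max(0, d(a, p) - d(a, n) + margin). The sketch below computes it for single embeddings with Euclidean distance; the 0.3 margin is an illustrative value, and real training code would average the loss over mini-batches in a deep learning framework.

```python
import math


def euclidean(a, b):
    """Euclidean distance between two embeddings."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def triplet_loss(anchor, positive, negative, margin=0.3):
    """Hinge loss that reaches zero once the negative is at least `margin` farther
    from the anchor than the positive is."""
    return max(0.0, euclidean(anchor, positive) - euclidean(anchor, negative) + margin)
```

When the negative is already far enough away, the loss is zero and contributes no gradient, which is why practical setups often mine "hard" negatives that look similar to the anchor.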

In computer vision research, the quality of a dataset has a major impact on how well a model performs once deployed. A dataset provides the labeled images or videos a model learns from.

For vehicle re-identification, datasets need to capture diverse conditions, such as changes in lighting, viewpoint, and weather. This diversity helps models handle the complexity of real-world transportation environments.

Here’s a glimpse of popular datasets that support the training, optimization, and evaluation of vehicle re-identification models:

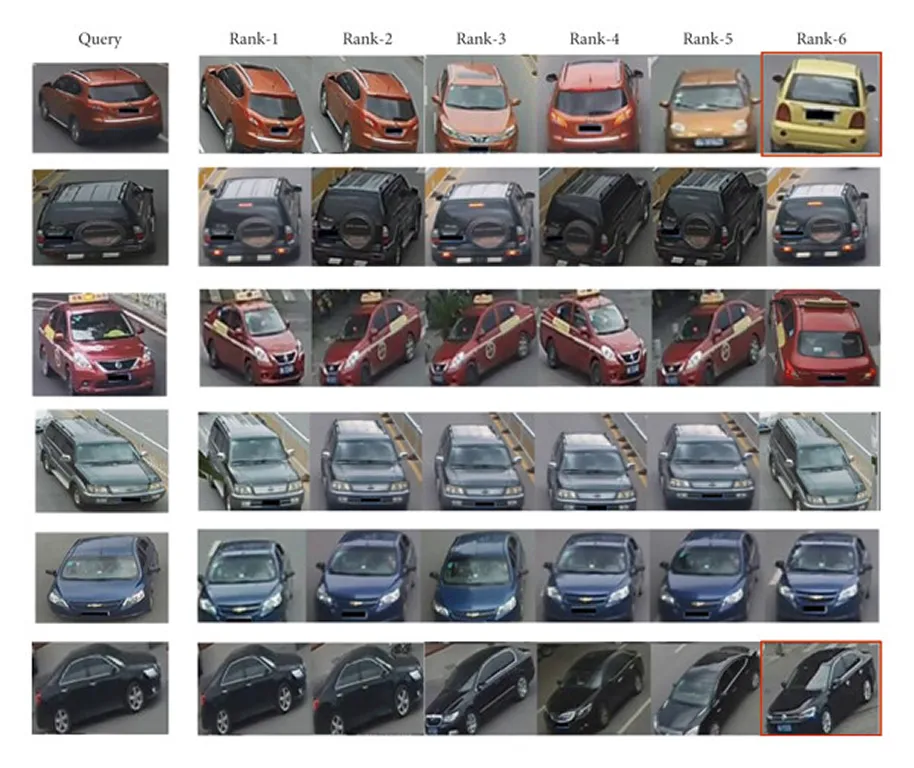

Model performance on these datasets is usually evaluated using metrics like mean average precision (mAP) and Rank-1 or Rank-5 accuracy. For each query vehicle, average precision measures how highly all of its true matches are ranked in the results, and mAP averages this score over every query. Rank-1 and Rank-5 accuracy report the fraction of queries whose correct match appears at the top of the results list or within the first five predictions.
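To make these metrics concrete, here is a simplified sketch, assuming each query comes with its true vehicle ID and the list of gallery IDs ranked by similarity. Standard benchmark protocols add details omitted here, such as excluding gallery images of the same vehicle taken by the same camera as the query. The function names are hypothetical.

```python
def average_precision(ranked_ids, query_id):
    """AP for one query: mean of precision@k over the ranks k where a true match appears."""
    hits, precisions = 0, []
    for k, gid in enumerate(ranked_ids, start=1):
        if gid == query_id:
            hits += 1
            precisions.append(hits / k)
    return sum(precisions) / len(precisions) if precisions else 0.0


def rank_k(ranked_ids, query_id, k):
    """1.0 if a true match appears among the top-k results, else 0.0."""
    return 1.0 if query_id in ranked_ids[:k] else 0.0


def evaluate(queries):
    """queries: list of (query_id, ranked gallery IDs). Returns (mAP, Rank-1, Rank-5)."""
    n = len(queries)
    m_ap = sum(average_precision(ranked, q) for q, ranked in queries) / n
    r1 = sum(rank_k(ranked, q, 1) for q, ranked in queries) / n
    r5 = sum(rank_k(ranked, q, 5) for q, ranked in queries) / n
    return m_ap, r1, r5
```

For example, a query whose true matches sit at ranks 2 and 3 scores 0 on Rank-1 but 1 on Rank-5, and its AP is (1/2 + 2/3) / 2, which shows how mAP rewards ranking every true match near the top.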

Together, these benchmarks give researchers a consistent way to compare different approaches and play an important role in guiding the development of more accurate and reliable vehicle re-identification systems for real-world use.

Now that we’ve covered the fundamentals, let’s walk through some real-world use cases where vehicle re-identification supports practical transportation, mobility, and surveillance workflows.

Busy city roads are constantly filled with movement, and traffic cameras often struggle to keep track of the same vehicle as it moves between different areas. Changes in lighting, crowded scenes, and vehicles that look nearly identical can cause identities to be lost across cameras.

Vehicle re-identification addresses this by detecting vehicles clearly, extracting distinctive features, and maintaining consistent IDs even in low-resolution or busy footage. The result is smoother, continuous tracking across the network, giving traffic teams a clearer picture of how vehicles move through the city and enabling faster, more informed responses to congestion and incidents.

Smart parking facilities rely on consistent vehicle identification to manage entry, exit, access control, and space allocation. However, cameras in these environments often capture vehicles from unusual angles and under challenging lighting, such as in underground garages, shaded areas, or outdoor lots at dusk.

These conditions make it harder to confirm whether the same vehicle is being seen across different zones. When identities are inconsistent, parking records can break, access control becomes less reliable, and drivers may experience delays. That’s why many smart-parking systems incorporate vehicle re-identification models to maintain a stable identity for each vehicle as it moves through the facility.

Beyond traffic monitoring, vehicle re-identification also plays an important role in law enforcement and forensic investigations. In many cases, officers need to follow a vehicle across several cameras, but license plates may be unreadable, missing, or deliberately obscured.

Crowded scenes, low visibility, and partial occlusion can make different vehicles look deceptively similar, making manual identification slow and unreliable. Vehicle re-identification can be used to trace a vehicle’s movement across non-overlapping camera networks by analyzing its visual features rather than depending solely on license plates.

This means investigators can more easily follow a vehicle’s movements, establish when it appeared at different locations, and confirm its path before and after an incident. AI-powered vehicle re-ID also supports tasks such as tracking suspect vehicles and reviewing incident footage.

Fleet and logistics operations often rely on GPS, RFID tags, and manual logs to track vehicle movement, but these tools leave gaps in areas covered by security or yard cameras, such as loading bays, warehouse yards, and internal road networks.

Vehicles frequently move between cameras that don’t overlap, disappear behind structures, or look nearly identical to others in the fleet, making it difficult to confirm whether the same vehicle has been seen in different locations. Vehicle re-identification systems can help close these gaps by analyzing visual details and timing information to maintain a consistent identity for each vehicle as it moves through the facility.

This gives fleet managers a more complete view of activity inside their hubs, supporting tasks such as verifying delivery paths, identifying unusual movement, and ensuring that vehicles follow expected routes.

Here are some of the key benefits of using AI-enabled vehicle re-identification:

While vehicle re-identification offers many advantages, there are also some limitations to consider. Here are a few factors that affect its reliability in real-world environments:

Vehicle re-identification continues to advance as the technology evolves. Recent papers in IEEE journals, at conferences such as CVPR, and on arXiv point to a clear shift toward richer models that combine multiple data sources and more advanced feature reasoning. Future work in this area will likely focus on building systems that are more robust, efficient, and capable of handling real-world variability at scale.

For example, one promising direction is the use of transformer-based models and graph aggregation networks. Transformers can analyze an entire image and understand how all the visual details fit together, which helps the system recognize the same vehicle even when the angle or lighting changes.

Graph-based models take this a step further by treating different vehicle parts or camera views as connected nodes in a network. This lets the system model the relationships between those parts or views and focus on the most discriminative features when deciding whether two sightings show the same vehicle.

Another key advancement is multi-modal data fusion and feature fusion. Instead of relying only on images, newer systems combine visual information with other signals, such as GPS data or motion patterns from sensors. This extra context makes it easier for the system to stay accurate when vehicles are partially blocked, when lighting is poor, or when camera angles change suddenly.

Vehicle re-identification is becoming a key component of intelligent transportation systems, helping cities track vehicles more reliably across different cameras. Thanks to advances in deep learning and better validation using richer, more diverse datasets, these systems are becoming more accurate and practical in real-world conditions.

As the technology evolves, it’s important to balance innovation with responsible practices around privacy, security, and ethics. Overall, these advancements are paving the way for smarter, safer, and more efficient transportation networks.

Explore more about AI by visiting our GitHub repository and joining our community. Check out our solution pages to learn about AI in robotics and computer vision in manufacturing. Discover our licensing options to get started with Vision AI today!