Find out how adding realistic variations to training data through data augmentation helps improve AI model robustness and real-world performance.

Testing is a crucial part of building any technological solution. It shows teams how a system really works before it goes live and lets them fix problems early. This is true across many fields, including AI, where models are expected to handle unpredictable real-world conditions once they are deployed.

For instance, computer vision is a branch of AI that teaches machines to understand images and videos. Computer vision models such as Ultralytics YOLO26 support tasks like object detection, instance segmentation, and image classification.

They can be used across many industries for applications like patient monitoring, traffic analysis, automated checkout, and quality inspection in manufacturing. However, even with advanced models and high-quality training data, Vision AI solutions can still struggle once they face real-world variations such as changing lighting, motion, or partially obstructed objects.

This happens because models learn from the examples they are given during training. If they haven't seen conditions like glare, motion blur, or partial visibility before, they are less likely to recognize objects correctly in those scenarios.

One way to improve model robustness is through data augmentation. Instead of collecting large amounts of new data, engineers can make small and meaningful changes to existing images, such as adjusting lighting, cropping, or mixing images. This helps the model learn to recognize the same objects across a wider range of situations.

In this article, we'll explore how data augmentation enhances model robustness and the reliability of Vision AI systems when deployed outside controlled settings. Let's get started!

Before we dive into data augmentation, let’s discuss how to tell if a computer vision model is truly ready for real-world use.

A robust model continues to perform well even when conditions change, rather than only working on clean, perfectly labeled images. Here are some practical factors to consider when assessing AI model robustness:

Good results on clean, perfectly captured images don’t always translate to strong performance in the real world. Regular testing across varied conditions helps show how well a model holds up once deployed.
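One way to put this kind of testing into practice is to score a model on clean test images and on perturbed copies of the same images, then compare the results. Here's a minimal, dependency-free sketch. The helpers (`darken`, `add_noise`, `robustness_report`) and the toy brightness-based "classifier" are hypothetical stand-ins, and images are plain lists of pixel rows rather than real photos.

```python
import random
from typing import Callable, Dict, List, Sequence, Tuple

# Illustrative sketch: these helpers are hypothetical, not part of any Ultralytics API.
Image = List[List[int]]  # grayscale image as rows of pixel values in [0, 255]


def darken(img: Image, factor: float = 0.5) -> Image:
    """Simulate low light by scaling every pixel toward black."""
    return [[int(p * factor) for p in row] for row in img]


def add_noise(img: Image, sigma: float = 25.0, seed: int = 0) -> Image:
    """Simulate sensor noise with Gaussian noise, clipped to [0, 255] (seeded for repeatability)."""
    rng = random.Random(seed)
    return [[min(255, max(0, round(p + rng.gauss(0.0, sigma)))) for p in row] for row in img]


def robustness_report(
    predict: Callable[[Image], str],
    samples: Sequence[Tuple[Image, str]],
    conditions: Dict[str, Callable[[Image], Image]],
) -> Dict[str, float]:
    """Return accuracy on the clean samples and on a perturbed copy for each condition."""
    all_conditions: Dict[str, Callable[[Image], Image]] = {"clean": lambda img: img, **conditions}
    report: Dict[str, float] = {}
    for name, perturb in all_conditions.items():
        correct = sum(predict(perturb(img)) == label for img, label in samples)
        report[name] = correct / len(samples)
    return report


# Usage with a toy "classifier" that only looks at average brightness.
def toy_predict(img: Image) -> str:
    mean = sum(map(sum, img)) / (len(img) * len(img[0]))
    return "bright" if mean > 128 else "dark"


samples = [
    ([[200] * 4 for _ in range(4)], "bright"),
    ([[40] * 4 for _ in range(4)], "dark"),
]
report = robustness_report(toy_predict, samples, {"low_light": darken, "noise": add_noise})
print(report["clean"], report["low_light"])  # 1.0 0.5
```

In this toy run, the "model" is perfect on clean images but fails half of the low-light cases, which is exactly the kind of gap that testing only on clean data would hide.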

The way an object appears in a photo can change depending on lighting, angle, distance, or background. When a computer vision model is trained, the dataset it learns from needs to include this kind of variation so it can perform well in unpredictable environments.

Data augmentation expands a training dataset by creating additional examples from the images you already have. This is done by applying intentional changes such as rotating or flipping an image, adjusting brightness, or cropping part of it.

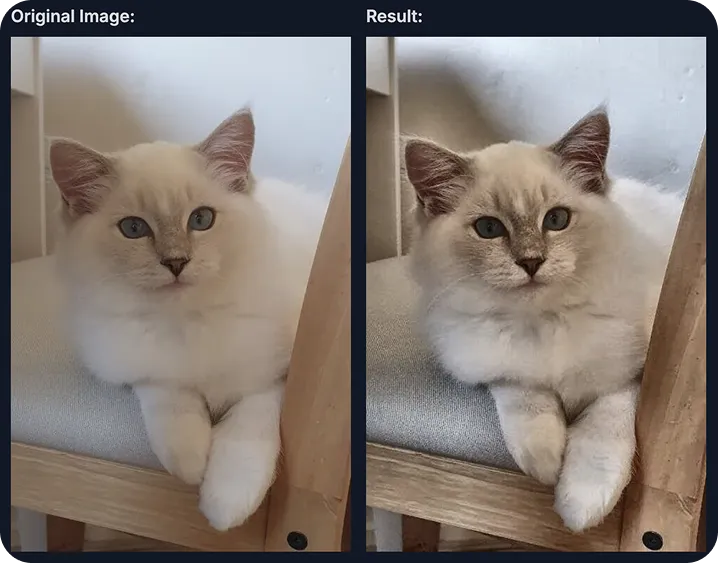

For example, imagine you only have one photo of a cat. If you rotate the image or change its brightness, you can create several new versions from that single picture. Each version looks slightly different, but it is still a photo of the same cat. These variations help teach the model that an object can look different while still being the same thing.
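Here's what the single-photo idea looks like in code: a minimal, dependency-free sketch that turns one image into five variants using a flip, a rotation, two brightness changes, and a crop. The helper names are hypothetical, and a tiny 2x3 grid of pixel values stands in for the cat photo; real pipelines work on NumPy arrays or tensors.

```python
from typing import List

# Illustrative sketch: helper names are hypothetical; real pipelines use NumPy arrays or tensors.
Image = List[List[int]]  # grayscale image as rows of pixel values in [0, 255]


def hflip(img: Image) -> Image:
    """Mirror the image left to right."""
    return [row[::-1] for row in img]


def rotate90(img: Image) -> Image:
    """Rotate the image 90 degrees clockwise."""
    return [list(row) for row in zip(*img[::-1])]


def adjust_brightness(img: Image, delta: int) -> Image:
    """Add a constant to every pixel, clipping to [0, 255]."""
    return [[min(255, max(0, p + delta)) for p in row] for row in img]


def crop(img: Image, top: int, left: int, height: int, width: int) -> Image:
    """Keep only the rectangle starting at (top, left) with the given size."""
    return [row[left:left + width] for row in img[top:top + height]]


def make_variants(img: Image) -> List[Image]:
    """Turn one image into several variants that all keep the original label."""
    return [
        hflip(img),
        rotate90(img),
        adjust_brightness(img, 40),
        adjust_brightness(img, -40),
        crop(img, 0, 0, len(img) - 1, len(img[0]) - 1),
    ]


# A tiny 2x3 stand-in for the single cat photo.
cat = [[10, 20, 30], [40, 50, 60]]
variants = make_variants(cat)
print(len(variants))  # 5
print(variants[0])    # [[30, 20, 10], [60, 50, 40]]
print(variants[1])    # [[40, 10], [50, 20], [60, 30]]
print(variants[4])    # [[10, 20]]
```

Every variant would still carry the label "cat", which is what teaches the model that appearance can change while the object stays the same.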

During model training, data augmentation can be built directly into the training pipeline. Instead of manually creating and storing new copies of images, random transformations can be applied as each image is loaded.
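A minimal sketch of on-the-fly augmentation, assuming a simple dataset object that follows the common `__len__`/`__getitem__` pattern. `AugmentedDataset`, `random_hflip`, and `random_brightness` are hypothetical names, not an Ultralytics or PyTorch API. Only the original images are stored, and a new random variant is built each time an item is read.

```python
import random
from typing import Callable, List, Optional, Sequence, Tuple

# Illustrative sketch: AugmentedDataset, random_hflip, and random_brightness are
# hypothetical names, not an Ultralytics or PyTorch API.
Image = List[List[int]]  # grayscale image as rows of pixel values in [0, 255]
Transform = Callable[[Image, random.Random], Image]


def random_hflip(img: Image, rng: random.Random) -> Image:
    """Mirror the image left to right with probability 0.5."""
    return [row[::-1] for row in img] if rng.random() < 0.5 else img


def random_brightness(img: Image, rng: random.Random) -> Image:
    """Shift every pixel by one random amount in [-30, 30], clipping to [0, 255]."""
    delta = rng.randint(-30, 30)
    return [[min(255, max(0, p + delta)) for p in row] for row in img]


class AugmentedDataset:
    """Stores only the original images and builds a new random variant on every access."""

    def __init__(self, samples: Sequence[Tuple[Image, str]],
                 transforms: Sequence[Transform], seed: Optional[int] = None) -> None:
        self.samples = list(samples)
        self.transforms = list(transforms)
        self.rng = random.Random(seed)

    def __len__(self) -> int:
        return len(self.samples)

    def __getitem__(self, index: int) -> Tuple[Image, str]:
        img, label = self.samples[index]
        for transform in self.transforms:
            img = transform(img, self.rng)  # transforms never modify their input, so the stored original is untouched
        return img, label  # for classification the label is unchanged


data = AugmentedDataset(
    [([[100, 150], [200, 250]], "cat")],
    [random_hflip, random_brightness],
    seed=0,
)
for epoch in range(3):
    img, label = data[0]
    print(epoch, label, img)  # pixels usually differ between epochs; the label stays "cat"
```

Because nothing extra is written to disk, the model effectively sees a fresh version of each image every epoch. This sketch only changes pixels; for detection or segmentation, flips and other geometric transforms would also have to move the boxes or masks.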

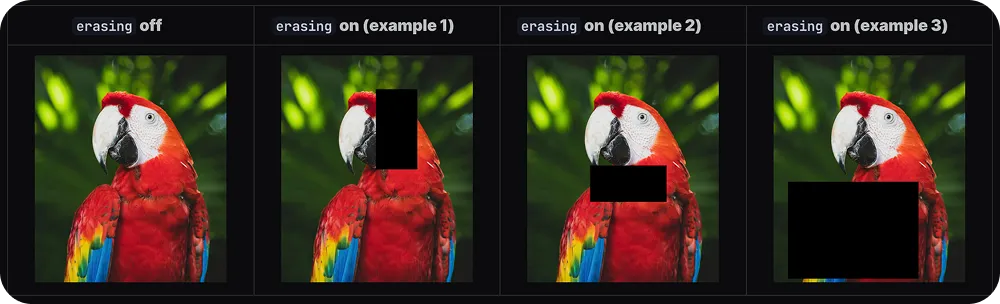

This means the model sees a slightly different version of the image every time, whether it appears brighter, flipped, or partly hidden. Techniques like random erasing can even remove small regions of the image to simulate real-world situations where an object is blocked or only partially visible.
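Random erasing can be sketched in a few lines: with some probability, pick a rectangle and overwrite it with a fill value, so the model has to recognize the object from what remains visible. This is a simplified, dependency-free illustration with a hypothetical `random_erase` helper; the published technique also samples the rectangle's area and aspect ratio and may fill it with random values instead of a constant.

```python
import random
from typing import List, Optional

# Illustrative sketch; random_erase is a hypothetical helper, not a library API.
Image = List[List[int]]  # grayscale image as rows of pixel values in [0, 255]


def random_erase(img: Image, p: float = 0.5, max_frac: float = 0.3, fill: int = 0,
                 rng: Optional[random.Random] = None) -> Image:
    """With probability p, overwrite one random rectangle with `fill` to mimic occlusion.

    The rectangle's height and width are each at most max_frac of the image's
    height and width (but at least 1 pixel); max_frac should be in (0, 1].
    The input image is not modified.
    """
    rng = rng if rng is not None else random.Random()
    out = [row[:] for row in img]
    if rng.random() >= p:
        return out  # no erasing this time
    h, w = len(img), len(img[0])
    eh = rng.randint(1, max(1, int(h * max_frac)))
    ew = rng.randint(1, max(1, int(w * max_frac)))
    top = rng.randint(0, h - eh)
    left = rng.randint(0, w - ew)
    for r in range(top, top + eh):
        for c in range(left, left + ew):
            out[r][c] = fill
    return out


img = [[255] * 10 for _ in range(10)]
erased = random_erase(img, p=1.0, rng=random.Random(0))
print(sum(px == 0 for row in erased for px in row))  # blanked pixels: between 1 and 9 for a 10x10 image
```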

Seeing many different versions of the same image helps the model learn which features actually matter, rather than depending on one perfect example. This variety makes the model more robust, so it performs more reliably in real-world conditions.

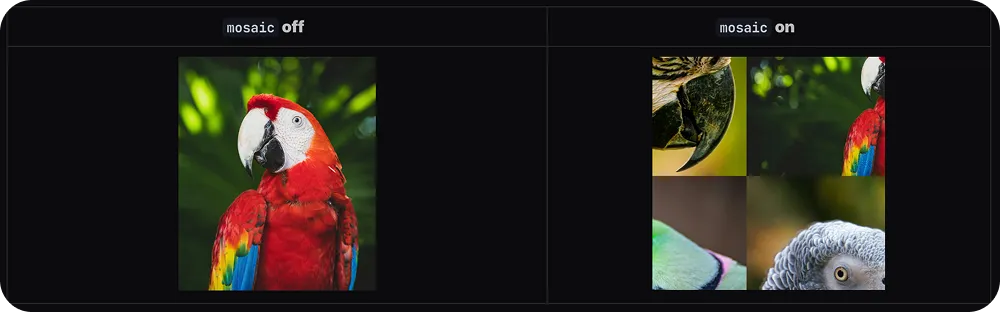

Here are some data augmentation techniques used to introduce variation into training images:

Managing datasets, creating image variations, and writing transformation code can add extra steps to building a computer vision application. The Ultralytics Python package helps simplify this by providing a single interface for training, running, and deploying Ultralytics YOLO models like YOLO26. As part of this effort to streamline training workflows, the package includes built-in, Ultralytics-tested data augmentation optimized for YOLO models.

It also supports integrations that reduce the need for separate tools. For data augmentation specifically, the package integrates with Albumentations, a widely used image augmentation library, so its transforms can be applied automatically during training without extra scripts or custom code.
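As an illustration of the built-in augmentation, here's a hedged sketch that passes augmentation settings to a YOLO26 training run through the Ultralytics Python API. The keys (`hsv_h`, `hsv_s`, `hsv_v`, `degrees`, `translate`, `scale`, `fliplr`, `mosaic`, `mixup`) are Ultralytics training arguments; the specific values, the `train_with_augmentation` helper, and the use of the small `coco8.yaml` sample dataset are assumptions made for this example, and the `yolo26n.pt` weights assume a recent package version.

```python
# Illustrative sketch, assuming a recent `ultralytics` release that ships YOLO26 weights.
# The values below are example settings, not tuned recommendations.
AUGMENT_ARGS = {
    "hsv_h": 0.015,    # hue jitter, as a fraction of the hue range
    "hsv_s": 0.7,      # saturation jitter
    "hsv_v": 0.4,      # brightness (value) jitter, useful for lighting changes
    "degrees": 10.0,   # random rotation range in degrees
    "translate": 0.1,  # random shift as a fraction of image size
    "scale": 0.5,      # random zoom gain
    "fliplr": 0.5,     # probability of a horizontal flip
    "mosaic": 1.0,     # probability of combining four images into one mosaic
    "mixup": 0.1,      # probability of blending two images together
}


def train_with_augmentation(data: str = "coco8.yaml", epochs: int = 10):
    """Train a small YOLO26 model with the augmentation settings above.

    The import lives inside the function so this file can be loaded even
    where `ultralytics` is not installed (pip install ultralytics).
    """
    from ultralytics import YOLO

    model = YOLO("yolo26n.pt")
    return model.train(data=data, epochs=epochs, imgsz=640, **AUGMENT_ARGS)
```

Calling `train_with_augmentation()` should run a short training job, downloading the weights and sample data on first use. The transforms are applied on the fly as batches are loaded, and the Albumentations integration kicks in automatically when that package is installed, so it does not need to appear in this call.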

Another factor that impacts model robustness is annotation quality. Clean, accurate labels, created and managed with annotation tools such as Roboflow, help the model understand where objects are and what they look like.

During training, data augmentations such as flips, crops, and rotations are applied dynamically, and annotations are automatically adjusted to match these changes. When labels are precise, this process works smoothly and provides the model with many realistic examples of the same scene.
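To show how annotations follow the image, here's a minimal sketch for YOLO-style boxes (a class ID plus center, width, and height, all normalized to the image size). The helper names are hypothetical. A horizontal flip mirrors the box's x center, and a 90-degree clockwise rotation moves the box and swaps its width and height.

```python
from typing import List, Tuple

# Illustrative sketch; helper names are hypothetical, not an Ultralytics API.
Image = List[List[int]]  # grayscale image as rows of pixel values
# YOLO-style label: (class_id, x_center, y_center, width, height), normalized to [0, 1]
Box = Tuple[int, float, float, float, float]


def hflip_with_boxes(img: Image, boxes: List[Box]) -> Tuple[Image, List[Box]]:
    """Mirror the image left to right and mirror each box's x center to match."""
    flipped = [row[::-1] for row in img]
    new_boxes = [(c, 1.0 - x, y, w, h) for c, x, y, w, h in boxes]
    return flipped, new_boxes


def rotate90_with_boxes(img: Image, boxes: List[Box]) -> Tuple[Image, List[Box]]:
    """Rotate the image 90 degrees clockwise; each box moves and its width/height swap."""
    rotated = [list(row) for row in zip(*img[::-1])]
    # Clockwise rotation maps a normalized point (x, y) to (1 - y, x).
    new_boxes = [(c, 1.0 - y, x, h, w) for c, x, y, w, h in boxes]
    return rotated, new_boxes


# A 2-row by 4-column image with a bright object filling the left half, plus its box.
img = [[255, 255, 0, 0],
       [255, 255, 0, 0]]
boxes = [(0, 0.25, 0.5, 0.5, 1.0)]

flipped, flipped_boxes = hflip_with_boxes(img, boxes)
print(flipped_boxes)  # [(0, 0.75, 0.5, 0.5, 1.0)]

rotated, rotated_boxes = rotate90_with_boxes(img, boxes)
print(rotated)        # [[255, 255], [255, 255], [0, 0], [0, 0]]
print(rotated_boxes)  # [(0, 0.5, 0.25, 1.0, 0.5)]
```

Since every adjusted box is computed from the original annotation, a box drawn in the wrong place would be reproduced in every flipped or rotated copy as well.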

If annotations are inaccurate or inconsistent, those errors can end up being repeated across augmented images, which can make training less effective. Starting with accurate annotations prevents these errors from spreading and contributes to better model robustness.

Next, let’s walk through examples of how data augmentation contributes to AI model robustness in real-world applications.

Synthetic images are often used to train object detection systems when real data is limited, sensitive, or difficult to collect. They let teams quickly generate examples of products, environments, and camera angles without needing to capture every scenario in real life.

However, synthetic datasets can sometimes look too clean compared to real-world footage, where lighting shifts, objects overlap, and scenes include background clutter. Data augmentation helps bridge this gap by introducing realistic variations, such as different lighting, noise, or object placement, so the model learns to handle the types of conditions it will see when deployed.
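Varying object placement can be sketched as a simplified form of copy-paste augmentation: drop an object patch onto a background at a random position and compute its box label from where it landed. This is a dependency-free illustration with hypothetical names; real copy-paste pipelines typically also rescale objects, blend edges, and handle overlaps, none of which is shown here.

```python
import random
from typing import List, Optional, Tuple

# Illustrative sketch of a simplified copy-paste augmentation; names are hypothetical.
Image = List[List[int]]  # grayscale image as rows of pixel values in [0, 255]
Box = Tuple[int, float, float, float, float]  # (class_id, x_center, y_center, width, height), normalized


def paste_object(background: Image, patch: Image, class_id: int,
                 rng: Optional[random.Random] = None) -> Tuple[Image, Box]:
    """Paste an object patch at a random position and return the new image and its box label."""
    rng = rng if rng is not None else random.Random()
    bh, bw = len(background), len(background[0])
    ph, pw = len(patch), len(patch[0])
    if ph > bh or pw > bw:
        raise ValueError("patch must fit inside the background")
    top = rng.randint(0, bh - ph)
    left = rng.randint(0, bw - pw)
    out = [row[:] for row in background]  # copy so the background can be reused
    for r in range(ph):
        out[top + r][left:left + pw] = patch[r]
    # The label is computed from where the patch landed, so no manual annotation is needed.
    box = (class_id, (left + pw / 2) / bw, (top + ph / 2) / bh, pw / bw, ph / bh)
    return out, box


background = [[30] * 8 for _ in range(8)]  # plain synthetic background
product = [[220] * 3 for _ in range(2)]    # 2x3 "product" patch
img, box = paste_object(background, product, class_id=1, rng=random.Random(0))
print(box)  # normalized box for wherever the patch landed
```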

For example, in a recent study, a YOLO11 model was trained entirely on synthetic images, and data augmentation was added to introduce extra variation. This helped the model learn to recognize objects more broadly. It performed well when tested on real images, even though it had never seen real-world data during training.

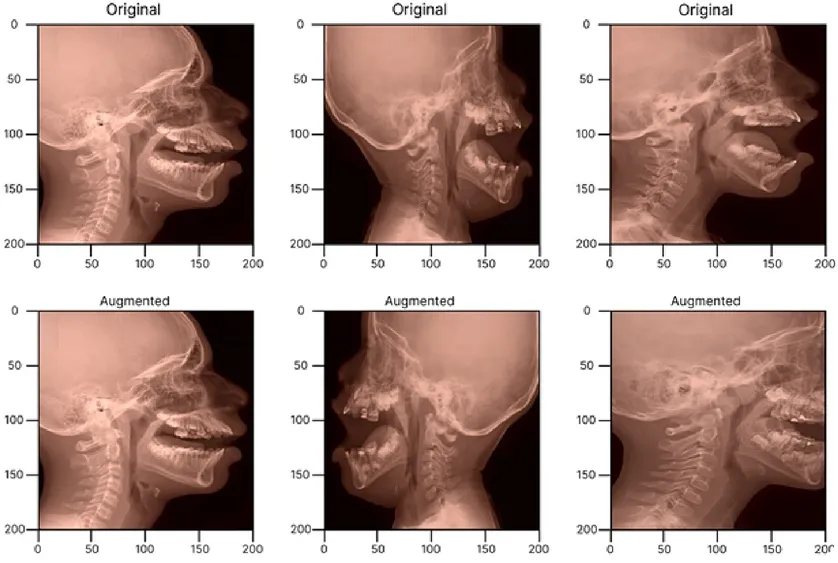

Medical imaging datasets are often limited, and the scans themselves can vary based on equipment type, imaging settings, or clinical environment. Differences in patient anatomy, angles, lighting, or visual noise can make it difficult for computer vision models to learn patterns that generalize well across patients and hospitals.

Data augmentation helps address this by creating multiple variations of the same scan during training, such as adding noise, slightly shifting the image, or applying small distortions. These changes make the training data more representative of real clinical conditions.
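Here's a minimal sketch of two of those variations, a small random shift followed by mild Gaussian noise, applied to a toy single-channel "scan". The function names and default values (`max_shift=2`, `sigma=5.0`) are hypothetical examples, not settings taken from any particular study, and small distortions such as elastic deformations are left out.

```python
import random
from typing import List, Optional

# Illustrative sketch; helper names are hypothetical and the parameter values are examples only.
Image = List[List[int]]  # single-channel scan as rows of intensities in [0, 255]


def shift(img: Image, dy: int, dx: int, fill: int = 0) -> Image:
    """Translate the image by dy rows and dx columns, filling uncovered pixels with `fill`."""
    h, w = len(img), len(img[0])
    out = [[fill] * w for _ in range(h)]
    for r in range(h):
        for c in range(w):
            sr, sc = r - dy, c - dx  # source pixel that lands at (r, c)
            if 0 <= sr < h and 0 <= sc < w:
                out[r][c] = img[sr][sc]
    return out


def add_gaussian_noise(img: Image, sigma: float, rng: random.Random) -> Image:
    """Add zero-mean Gaussian noise to every pixel, rounding and clipping to [0, 255]."""
    return [[min(255, max(0, round(p + rng.gauss(0.0, sigma)))) for p in row] for row in img]


def augment_scan(img: Image, rng: Optional[random.Random] = None,
                 max_shift: int = 2, sigma: float = 5.0) -> Image:
    """Apply a small random shift, then mild noise, returning a new scan."""
    rng = rng if rng is not None else random.Random()
    dy = rng.randint(-max_shift, max_shift)
    dx = rng.randint(-max_shift, max_shift)
    return add_gaussian_noise(shift(img, dy, dx), sigma, rng)


print(shift([[1, 2], [3, 4]], 0, 1))  # [[0, 1], [0, 3]]
scan = [[0, 0, 0, 0, 0],
        [0, 80, 120, 80, 0],
        [0, 120, 200, 120, 0],
        [0, 80, 120, 80, 0],
        [0, 0, 0, 0, 0]]
augmented = augment_scan(scan, random.Random(42))
```

For segmentation, the label mask would get the same shift (with a background fill value) but no noise, so the scan and its mask stay aligned.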

For instance, in a pediatric imaging study, researchers used YOLO11 for anatomical segmentation and trained it on augmented medical data. They introduced variations like added noise, slight position shifts, and small distortions to make the images more realistic.

By learning from these variations, the model focused on meaningful anatomical features rather than surface-level differences. This made its segmentation results more stable across different scans and patient cases.

Collecting diverse data is difficult, but data augmentation lets models learn from a broader range of visual conditions. This makes them more robust to occlusions, lighting changes, and crowded scenes, so they perform more reliably outside controlled training environments.

Join our community and explore the latest in Vision AI on our GitHub repository. Visit our solution pages to learn how applications like AI in manufacturing and computer vision in healthcare are driving progress, and check out our licensing options to power your next AI solution.