Understand EfficientNet architecture & its compound scaling magic! Explore EfficientNet B0-B7 for top-tier image classification and segmentation efficiency.

Understand EfficientNet architecture & its compound scaling magic! Explore EfficientNet B0-B7 for top-tier image classification and segmentation efficiency.

In 2019, researchers at Google AI introduced EfficientNet, a state-of-the-art computer vision model built to recognize objects and patterns in images. It was primarily designed for image classification, which involves assigning an image to one of several predefined categories. However, today, EfficientNet also serves as a backbone for more complex tasks such as object detection, segmentation, and transfer learning.

Before EfficientNet, such machine learning and Vision AI models tried to improve accuracy by adding more layers or increasing the size of those layers. Layers are the steps in a neural network model (a type of deep learning model inspired by the human brain) that process data to learn patterns and improve accuracy.

These changes created a trade-off, making traditional AI models larger and slower, while the extra accuracy was often minimal compared to the significant increase in computing power required.

EfficientNet took a different approach. It increased the depth (number of layers), width (number of units in each layer), and image resolution (the detail level of input images) together in a balanced way. This method, called compound scaling, reliably uses all available processing power. The end result is a smaller and faster model that can perform better than older models like ResNet or DenseNet.

Today, newer computer vision models like Ultralytics YOLO11 offer greater accuracy, speed, and efficiency. Even so, EfficientNet remains an important milestone that influenced the design of many advanced architectures.

In this article, we’ll break down EfficientNet in five minutes, covering how it works, what makes it unique, and why it still matters in computer vision. Let’s get started!

Before EfficientNet was designed, most image recognition models improved accuracy by adjusting their layers or increasing the input image size to capture more detail. While these strategies improved results, they also made models heavier and more demanding. This meant they needed more memory and better hardware.

Instead of changing individual layers, EfficientNet scales depth, width, and image resolution together using a method called compound scaling. This approach allows the model to grow efficiently without overloading any single aspect.

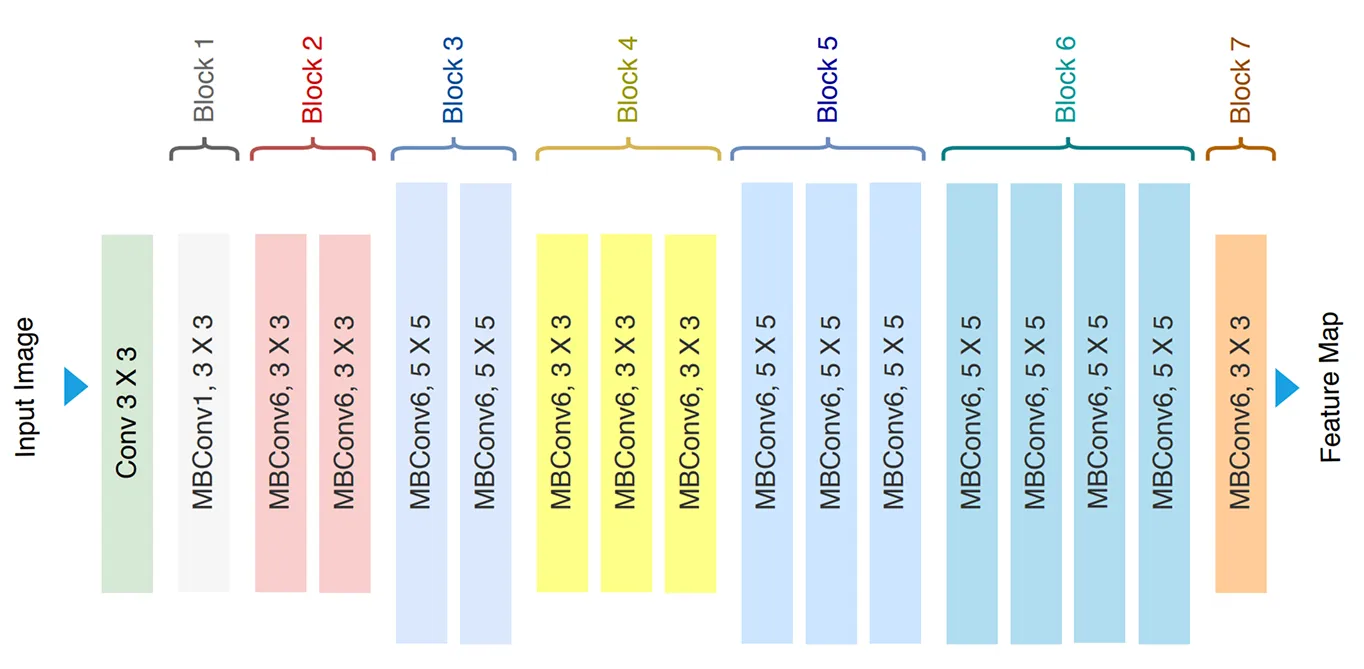

The EfficientNet architecture processes images through a series of blocks, each built from smaller modules. The number of modules in each block depends on the model size.

Smaller versions use fewer modules, while larger versions repeat modules more often. This flexible design enables EfficientNet to deliver high accuracy and efficiency across a wide range of applications, from mobile devices to large-scale systems.

The compound scaling method expands a model’s depth, width, and image resolution but keeps them in balance. This makes it possible to use computing power efficiently. The series begins with a smaller baseline model called EfficientNet-B0, which serves as the foundation for all other versions.

From B0, the models scale up into larger variants named EfficientNet-B1 through EfficientNet-B7. With each step, the network gains additional layers, increases the number of channels (units used for processing), and handles higher-resolution input images. The amount of growth at each step is determined by a parameter called the compound coefficient, which ensures that depth, width, and resolution increase in fixed proportions rather than independently.

Next, let’s take a look at the architecture of EfficientNet.

It builds on MobileNetV2, a lightweight computer vision model optimized for mobile and embedded devices. At its core is the Mobile Inverted Bottleneck Convolution (MBConv) block, a special layer that processes image data like a standard convolution but with fewer calculations. This block makes the model both fast and more memory-efficient.

Inside each of the MBConv blocks is a squeeze-and-excitation (SE) module. This module adjusts the strength of different channels in the network. It boosts the strength of essential channels and reduces the strength of others. The module helps the network focus on the most important features in an image, while disregarding the rest. The EfficientNet model also uses a Swish activation function (a mathematical function that helps the network learn patterns), which helps it spot patterns in images better than older methods.

Beyond this, it uses DropConnect, where some connections inside the network are switched off at random during training. This stochastic regularization method (a randomization technique to prevent the model from memorizing training data instead of generalizing) reduces overfitting by forcing the network to learn more robust feature representations (stronger, more general patterns in the data) that transfer better to unseen data.

Now that we have a better understanding of how EfficientNet models work, let’s discuss the different model variants.

EfficientNet models scale from B0 to B7, starting with B0 as the baseline that balances speed and accuracy. Each version increases depth, width, and image resolution, improving accuracy. However, they also demand more computational power, from the B1 and B2 to the high-performing B6 and B7.

While EfficientNet-B3 and EfficientNet-B4 models strike a balance for larger images, B5 is often chosen for complex datasets that require precision. Beyond these models, the latest model, EfficientNet V2, can improve training speed, handle small datasets better, and is optimized for modern hardware.

EfficientNet can produce accurate results while using less memory and processing power than many other models. This makes it useful in many fields, from scientific research to products that people use daily.

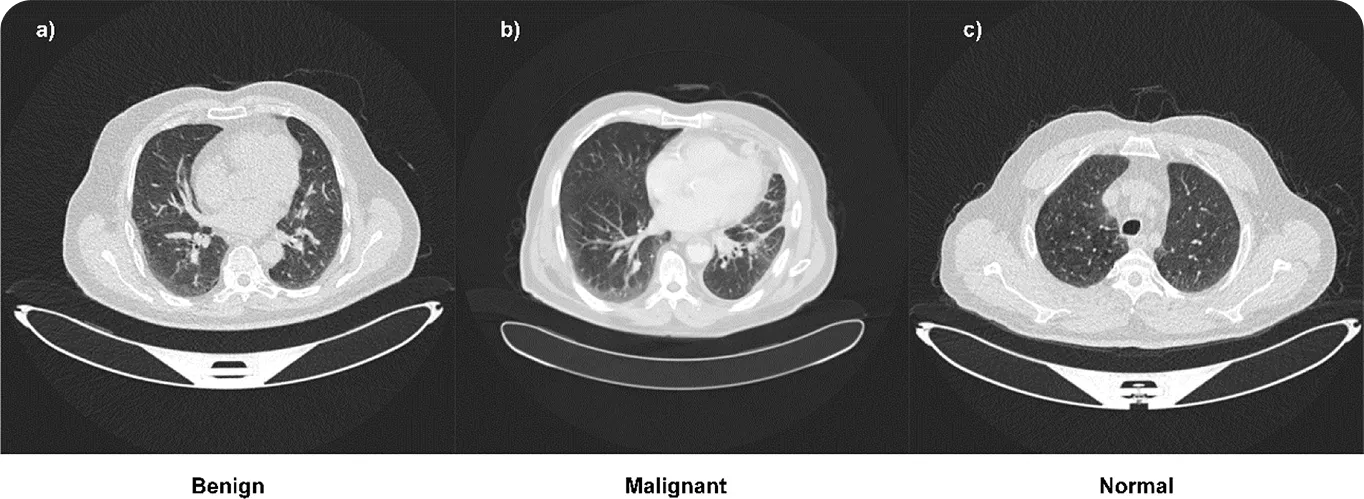

Medical images, such as CT scans of the lungs, often contain subtle details that are critical for accurate diagnosis. AI models can assist in analyzing these images to uncover patterns that might be difficult for humans to detect. One adaptation of EfficientNet for this purpose is MONAI (Medical Open Network for AI) EfficientNet, which is specifically designed for medical image analysis.

Building on EfficientNet’s architecture, researchers have also developed Lung-EffNet, a model that classifies lung CT scans to detect tumors. It can categorize tumors as benign, malignant, or normal, achieving a reported accuracy of over 99% in experimental settings.

Object detection is the process of finding objects in an image and determining their locations. It is a key part of applications like security systems, self-driving cars, and drones.

EfficientNet became important in this area because it offered a very efficient way to extract features from images. Its method of scaling depth, width, and resolution showed how models could be accurate without being too heavy or slow. This is why many detection systems, like EfficientDet, use EfficientNet as their backbone.

Newer models, such as Ultralytics YOLO11, share the same goal of combining speed with accuracy. This trend toward efficient models was strongly influenced by ideas from architectures like EfficientNet.

Here are some benefits of using EfficientNet in computer vision projects:

While there are many benefits related to using EfficientNet, here are some of the limitations of EfficientNet to keep in mind:

EfficientNet changed how computer vision models grow by keeping depth, width, and image resolution in balance. It’s still an important model and has influenced newer architectures as well. In particular, it holds a meaningful place in the history of computer vision.

Join our community and GitHub repository to explore more about AI. Check out our solution pages to read about AI in healthcare and computer vision in automotive. Discover our licensing options and start building with computer vision today!