Explore the best object detection models in 2025, with a look at popular architectures, performance trade-offs, and practical deployment factors.

Explore the best object detection models in 2025, with a look at popular architectures, performance trade-offs, and practical deployment factors.

Earlier this year, Andrew Ng, a pioneer in AI and machine learning, introduced the concept of agentic object detection. This approach uses a reasoning agent to detect objects based on a text prompt without requiring vast amounts of training data.

Being able to identify objects in images and videos without needing huge labeled datasets is a step toward smarter and more flexible computer vision systems. However, agentic Vision AI is still in its early stages.

While it can handle general tasks, such as detecting people or street signs in an image, more precise computer vision applications still rely on traditional object detection models. These models are trained on large, carefully labeled datasets to learn exactly what to look for and where objects are located.

Traditional object detection is essential because it provides both recognition, identifying what the object is, and localization, determining exactly where it is in the image. This combination enables machines to perform complex real-world tasks reliably, from autonomous vehicles to industrial automation and healthcare diagnostics.

Thanks to tech advancements, object detection models are continuing to improve, becoming faster, more accurate, and better suited for real-world environments. In this article, we'll walk through some of the best object detection models available today. Let’s get started!

Computer vision tasks like image classification can be used to tell whether an image contains a car, a person, or another object. However, they can't determine where the object is located within the image.

This is where object detection can be insightful. Object detection models can identify what objects are present and also pinpoint their exact locations. This process, known as localization, allows machines to understand scenes more accurately and respond appropriately, whether it is stopping a self-driving car, guiding a robot arm, or highlighting an area in medical imaging.

The rise of deep learning has transformed object detection. Instead of relying on hand-coded rules, modern models learn patterns directly from annotations and visual data. These datasets teach models what objects look like, where they usually appear, and how to handle challenges such as small objects, cluttered scenes, or varying lighting conditions.

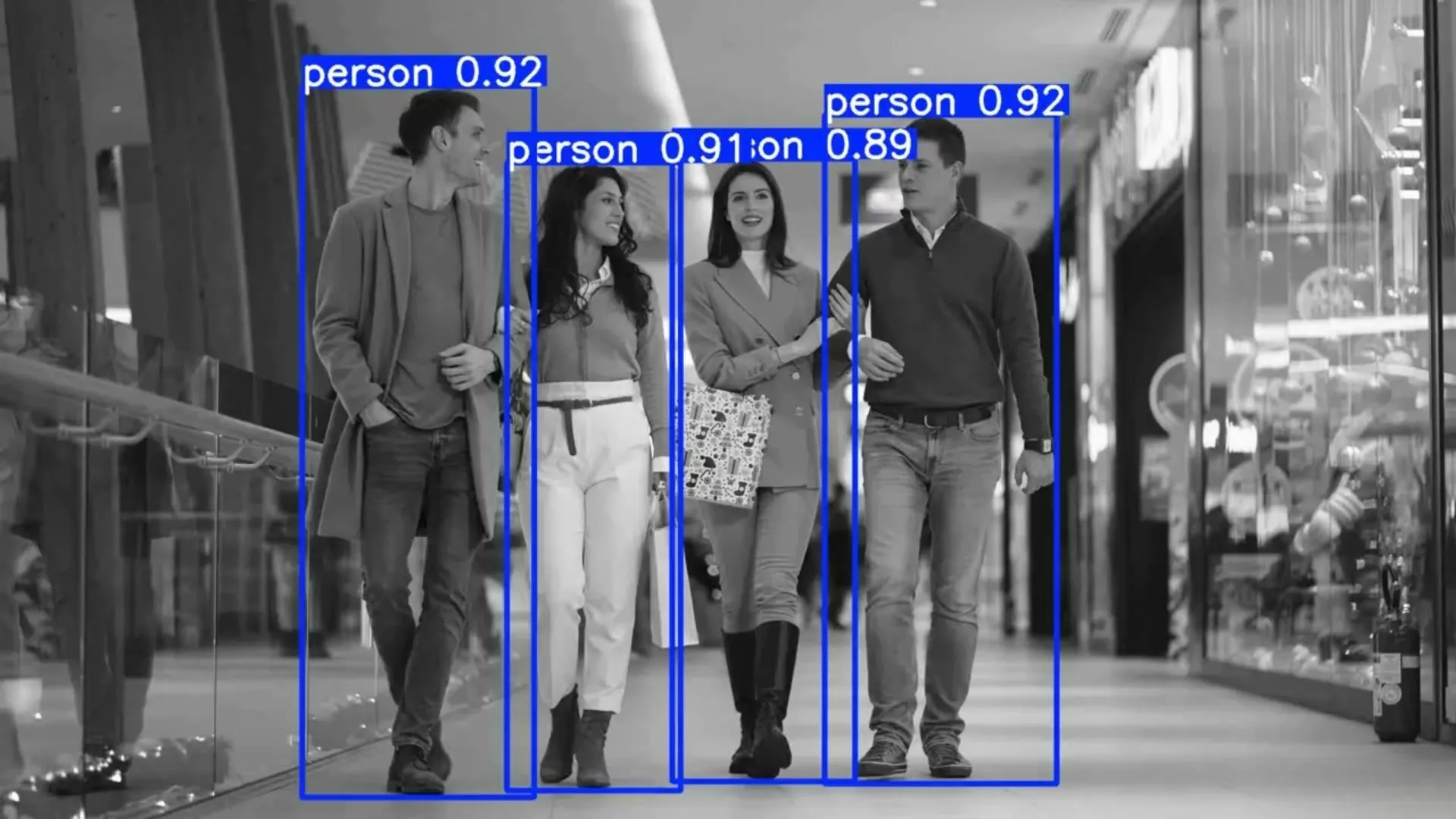

In fact, state-of-the-art object detection systems can accurately detect multiple objects at once. This makes object detection a critical technology in applications like autonomous driving, robotics, healthcare, and industrial automation.

The input to an object detection model is an image, which could come from a camera, a video frame, or even a medical scan. The input image is processed through a neural network, typically a convolutional neural network (CNN), which is trained to recognize patterns in visual data.

Inside the network, the image is analyzed in stages. Based on the features it detects, the model predicts which objects are present and where they appear.

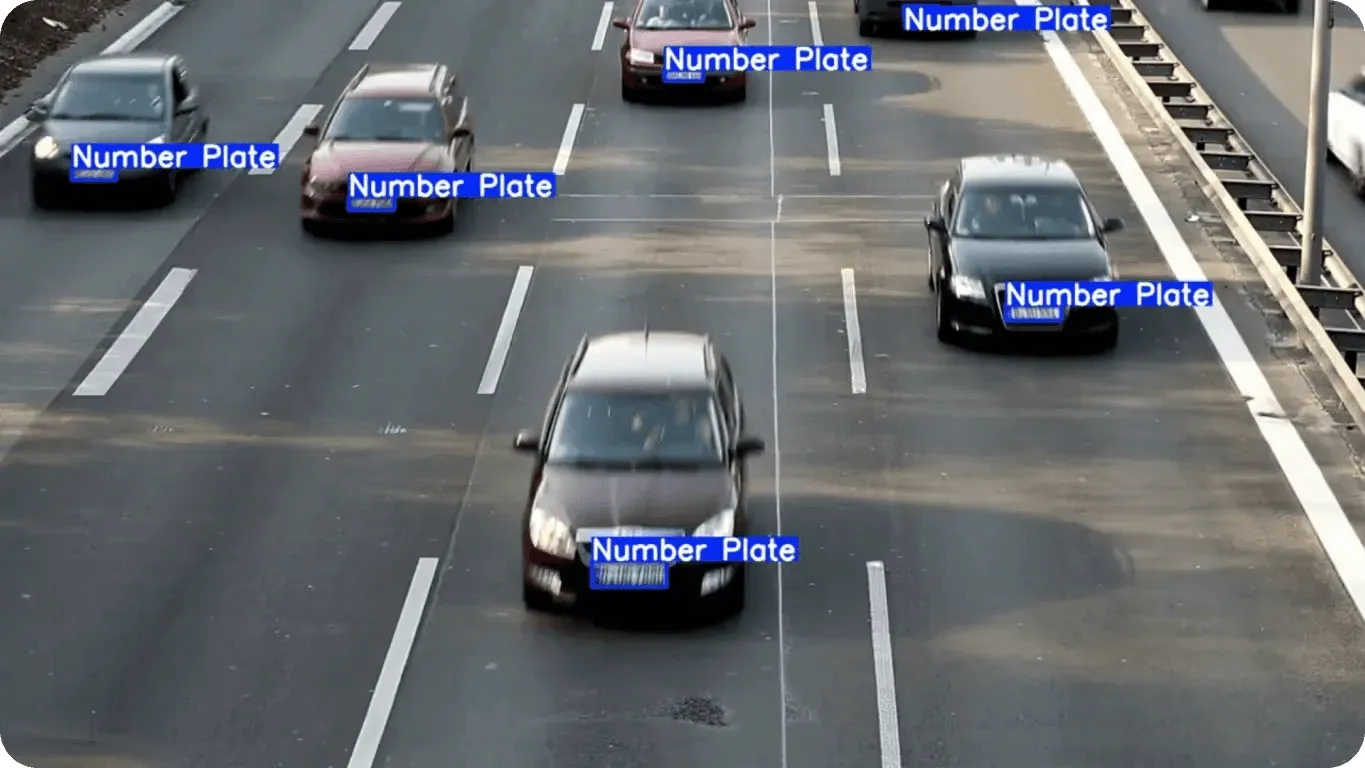

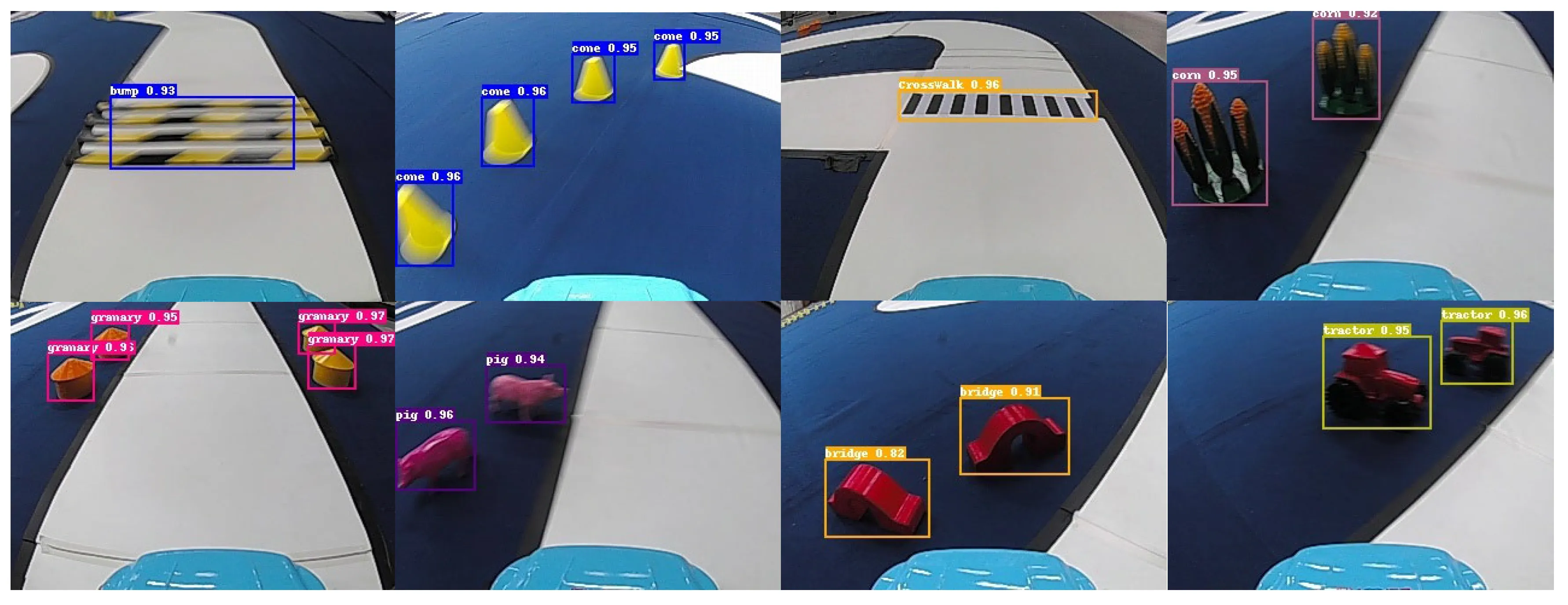

These predictions are represented using bounding boxes, which are rectangles drawn around each detected object. For every bounding box, the model assigns a class label (for example, car, person, or dog) and a confidence score indicating how certain it is about the prediction (this can also be thought of as a probability).

The overall process relies heavily on feature extraction. The model learns to identify useful visual patterns, such as edges, shapes, textures, and other distinguishing characteristics. These patterns are encoded in feature maps, which help the network understand the image at multiple levels of detail.

Depending on the model architecture, object detectors use different strategies to locate objects, balancing speed, accuracy, and complexity.

Many object detection models, particularly two-stage detectors like Faster R-CNN, focus on specific parts of the image called regions of interest (ROIs). By concentrating on these areas, the model prioritizes regions more likely to contain objects instead of analyzing every pixel equally.

On the other hand, single-stage models like early YOLO models don’t select specific ROIs like two-stage models do. Instead, they divide the image into a grid and use predefined boxes, called anchor boxes, along with feature maps to predict objects across the entire image in one pass.

Nowadays, cutting-edge object detection models are exploring anchor-free approaches. Unlike traditional single-stage models that rely on predefined anchor boxes, anchor-free models predict object locations and sizes directly from feature maps. This can simplify the architecture, reduce computational overhead, and improve performance, especially for detecting objects of varying shapes and sizes.

Today, there are many object detection models, each designed with specific goals in mind. Some are optimized for real-time performance, while others focus on achieving the highest accuracy. Choosing the right model for a computer vision solution often depends on your particular use case and performance requirements.

Next, let’s explore some of the best object detection models of 2025.

One of the most widely used families of object detection models today is the Ultralytics YOLO model family. YOLO, which stands for You Only Look Once, is popular across industries because it delivers strong detection performance while being fast, reliable, and easy to work with.

The Ultralytics YOLO family includes Ultralytics YOLOv5, Ultralytics YOLOv8, Ultralytics YOLO11, and the upcoming Ultralytics YOLO26, offering a range of options for different performance and use-case requirements. Thanks to their lightweight design and speed optimization, Ultralytics YOLO models are ideal for real-time detection and can be deployed on edge devices with limited computing power and memory.

Beyond basic object detection, these models are highly versatile. They also support tasks such as instance segmentation, which outlines objects at the pixel level, and pose estimation, which identifies key points on people or objects. This flexibility makes Ultralytics YOLO models a go-to option for a wide range of applications, from agriculture and logistics to retail and manufacturing.

Another key reason for the popularity of Ultralytics YOLO models is the Ultralytics Python package, which provides a simple and user-friendly interface for training, fine-tuning, and deploying models. Developers can start with pre-trained weights, customize the models for their own datasets, and deploy them with just a few lines of code.

RT‑DETR (Real-Time Detection Transformer) and the newer RT‑DETRv2 are object detection models built for real-time use. Unlike many traditional models, they can take an image and give the final detections directly without using non-maximum suppression (NMS).

NMS is a step that removes extra overlapping boxes when a model predicts the same object more than once. Skipping NMS makes the detection process simpler and faster.

These models combine CNNs with transformers. The CNN finds visual details like edges and shapes, while the transformer is a type of neural network that can look at the whole image at once and understand how different parts relate to each other. This comprehensive understanding lets the model detect objects that are close together or overlapping.

RT‑DETRv2 improves on the original model with features like multi-scale detection, which helps find both small and large objects, and better handling of complex scenes. These changes keep the model fast while improving accuracy.

RF‑DETR is a real-time, transformer-based model designed to combine the accuracy of transformer architectures with the speed needed for real-world applications. Like RT‑DETR and RT‑DETRv2, it uses a transformer to analyze the whole image and a CNN to extract fine visual features such as edges, shapes, and textures.

The model predicts objects directly from the input image, skipping anchor boxes and non-maximum suppression, which simplifies the detection process and keeps inference fast. RF‑DETR also supports instance segmentation, allowing it to outline objects at the pixel level in addition to predicting bounding boxes.

Released in late 2019, EfficientDet is an object detection model designed for efficient scaling and high performance. What sets EfficientDet apart is compound scaling, a method that scales the input resolution, network depth, and network width simultaneously rather than adjusting just one factor. This approach helps the model maintain stable accuracy whether it is scaled up for high-performance tasks or scaled down for lightweight deployments.

Another key component of EfficientDet is its efficient feature pyramid network (FPN), which allows the model to analyze images at multiple scales. This multi-scale analysis is crucial for detecting objects of different sizes, enabling EfficientDet to reliably identify both small and large objects within the same image.

Released in 2022, PP-YOLOE+ is a YOLO-style object detection model, meaning it detects and classifies objects in a single pass over the image. This approach makes it fast and suitable for real-time applications, while still maintaining high accuracy.

One of the key improvements in PP-YOLOE+ is task-aligned learning, which helps the model’s confidence scores reflect how accurately objects are located. This is especially useful for detecting small or overlapping objects.

The model also uses a decoupled head architecture, which separates the tasks of predicting object locations and class labels. This allows it to draw bounding boxes more precisely while classifying objects correctly.

GroundingDINO is a transformer-based object detection model that combines vision and language. Instead of relying on a fixed set of categories, it lets users detect objects using natural language text prompts.

By aligning visual features from an image with text descriptions, the model can locate objects even if those exact labels were not in its training data. This means you can prompt the model with descriptions like “a person wearing a helmet” or “a red car near a building,” and it will generate accurate bounding boxes around matching objects.

Also, by supporting zero-shot detection, GroundingDINO reduces the need to retrain or fine-tune the model for each new use case, making it highly flexible across a wide range of applications. This combination of language understanding and visual recognition opens up new possibilities for interactive and adaptive AI systems.

As you compare various object detection models, you might be wondering how to tell which one actually performs best. It’s a good question, because beyond model architecture and the quality of your data, many factors can affect performance.

Researchers often rely on shared benchmarks and standard performance metrics to evaluate models consistently, compare results, and understand trade-offs between speed and accuracy. Standard benchmarks are especially important because many object detection models are evaluated on the same datasets, such as the COCO dataset.

Here’s a closer look at some common metrics used to evaluate object detection models:

Here are some of the key advantages of using object detection models in real-world applications:

Despite these pros, there are practical limitations that can affect how object detection models perform. Here are some vital factors to consider:

The best object detection model for your computer vision project depends on your use case, data setup, performance requirements, and hardware constraints. Some models are optimized for speed, while others focus on accuracy, and most real-world applications need a balance of both. Thanks to open-source frameworks and active communities on GitHub, these models are becoming easier to evaluate, adapt, and deploy for practical use.

To learn more, explore our GitHub repository. Join our community and check out our solutions pages to read about applications like AI in healthcare and computer vision in the automotive industry. Discover our licensing options to get started with Vision AI today.