Explore using vision AI for smarter product experiences and discover how real-time visual data, automation, and AI models create more engaging products.

Product experiences are changing fast. Nowadays, people expect products to be smarter, more responsive, and easier to use, whether they’re shopping, working, or managing everyday tasks.

As AI becomes more accessible and embedded in everyday products, expectations have shifted even further. Users now assume products will adapt to their needs, reduce effort, and provide meaningful guidance in the moment, not after the fact.

That shift is pushing teams to use AI in more practical, grounded ways. Take vision AI, or computer vision: it builds on artificial intelligence (AI) and machine learning to analyze images and video, allowing products to understand visual context and respond while an interaction is happening.

This enables AI-powered functionality that can optimize workflows, streamline common tasks, and improve the customer experience without adding unnecessary complexity. As vision AI continues to mature, it is becoming a natural fit for real-world product use cases.

By using AI-driven computer vision models and algorithms, products can interpret what users see and act on that information in real time. This makes it possible to support smoother checkout experiences, improve quality control, and highlight relevant information exactly when it’s needed.

For product managers, this opens up new ways to think about product development across the entire lifecycle. Vision AI can feed data-driven dashboards with valuable insights about customer behavior, helping teams validate ideas, refine functionality, and make smarter decisions. When combined with scalable AI tools and integrated end-to-end, vision AI supports operational efficiency and enables meaningful digital transformation without overcomplicating the user experience.

In this article, we’ll explore how vision AI is being used to create smarter product experiences across different industries, the key use cases shaping modern products, and what it takes to build and scale these capabilities in real-world applications. Let's get started!

Vision AI is redefining product experiences because it enables products to understand what’s happening visually and respond in real time. Instead of relying only on buttons, forms, or predefined rules, products can now react to what users are actually seeing and doing.

This makes interactions feel more natural, faster, and better aligned with real-world behavior. It is made possible by computer vision models like Ultralytics YOLO26, which can process images and video quickly and accurately enough to be used directly in products.

In particular, models like YOLO26 support a range of core computer vision tasks that are essential for real product experiences. These include object detection to locate and identify items in a scene, image classification to understand what an image represents, instance segmentation to separate objects from their surroundings, and pose estimation to understand body positions and movement. Together, these capabilities let products move past simple inputs and respond to visual context in real time.
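
To make this more concrete, here is a minimal Python sketch of how a product might pick the right model for each task. It assumes the `ultralytics` package is installed and that the YOLO26 checkpoints follow the usual Ultralytics naming pattern (`yolo26n.pt`, `yolo26n-cls.pt`, `yolo26n-seg.pt`, `yolo26n-pose.pt`). Those file names are an assumption, so check the official model docs before relying on them.

```python
"""Map core vision tasks to YOLO26 checkpoints (checkpoint names are assumed)."""

# Assumed names, following the usual Ultralytics pattern: a base name for
# detection and -cls / -seg / -pose suffixes for the other tasks.
TASK_WEIGHTS = {
    "detect": "yolo26n.pt",
    "classify": "yolo26n-cls.pt",
    "segment": "yolo26n-seg.pt",
    "pose": "yolo26n-pose.pt",
}


def weights_for(task: str) -> str:
    """Return the checkpoint name for a task, or raise for unsupported tasks."""
    try:
        return TASK_WEIGHTS[task]
    except KeyError:
        raise ValueError(f"unsupported task: {task!r}") from None


def run_task(task: str, source: str):
    """Run one task on an image or video source and return Ultralytics Results."""
    # Imported lazily so the mapping above works without the package installed.
    from ultralytics import YOLO

    model = YOLO(weights_for(task))
    return model.predict(source=source, conf=0.25)
```

For example, `run_task("segment", "shelf.jpg")` would return a list of Results objects whose `masks` attribute holds the instance masks; `shelf.jpg` is just a placeholder path.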

Since models like YOLO26 are fast and flexible, product teams can use them across many scenarios, from recognizing products on a retail shelf to detecting tools in a healthcare setting or understanding activity in a smart home. This versatility is why vision AI is becoming a foundational layer for building smarter, more responsive product experiences.

Before we dive further into how vision AI can be used to create smarter product experiences, let’s take a closer look at how it connects to product design. When visual understanding becomes part of a product, design decisions have to account for that.

This means that the product design extends beyond screens and static interfaces to include real-world context. Designers have to think about how and when users will capture visual input, what conditions the product needs to work under, and how feedback is delivered in a clear and timely way.

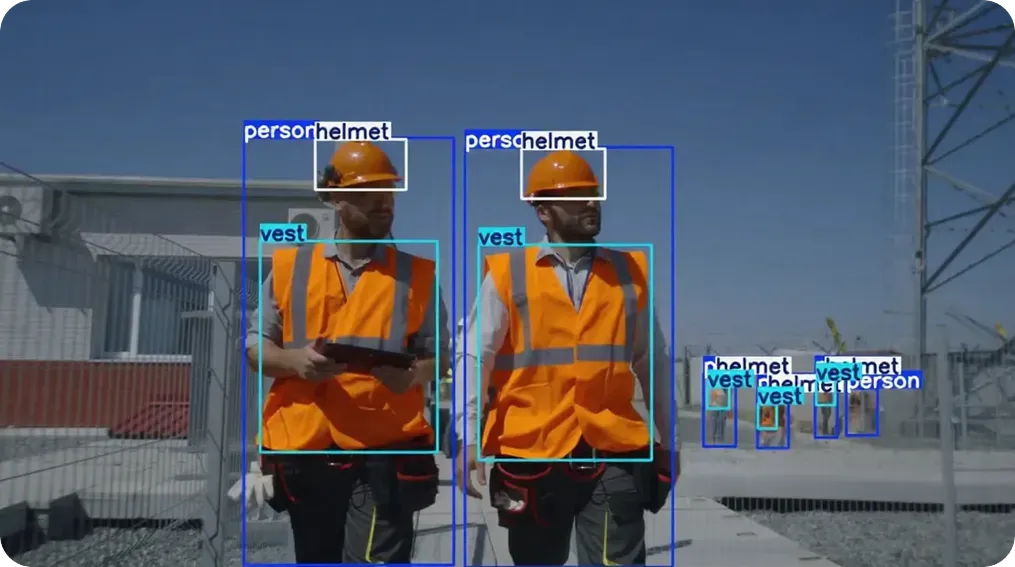

Let's say we are building an industrial safety application that uses vision AI to monitor equipment or work areas. The design needs to account for how cameras are positioned, how workers know when the system is actively analyzing a scene, and how alerts are delivered without causing distractions.

Specifically, in an industrial safety setting, users need to understand what the system is seeing and why it is responding. The design should make it clear when the vision AI solution is confident, when it is uncertain, and when human judgment is still required. Simple confirmations, clear alert reasoning, and predictable behavior all help build trust in the system.
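
One way to express these three states in code is a small triage step that sits between the model and the interface. The sketch below is hypothetical: the `Detection` type, the class name `no_helmet`, and the 0.80 and 0.40 thresholds are illustrative values, not recommendations. Real thresholds would be tuned on validation data from the actual site.

```python
from dataclasses import dataclass

# Hypothetical thresholds; real values would be tuned per site and per class.
ALERT_THRESHOLD = 0.80
REVIEW_THRESHOLD = 0.40


@dataclass
class Detection:
    label: str         # hypothetical class name, e.g. "no_helmet"
    confidence: float  # model score in [0, 1]


def triage(det: Detection) -> str:
    """Decide how the interface should present a single detection."""
    if det.confidence >= ALERT_THRESHOLD:
        return "alert"         # confident: raise an alert with the reason attached
    if det.confidence >= REVIEW_THRESHOLD:
        return "human_review"  # uncertain: ask a supervisor to confirm
    return "ignore"            # too weak to act on


def alert_message(det: Detection) -> str:
    """Explain what the system saw and why it is responding."""
    return f"{triage(det)}: detected '{det.label}' with {det.confidence:.0%} confidence"
```

Keeping the reason and the confidence in the message is one simple way to give workers the alert reasoning described above.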

Here are some of the key benefits of using vision AI in products:

Next, let’s walk through some examples that show how vision AI applications are being used to create smarter, more intuitive product experiences.

Healthcare products aren't always easy to understand. Labels can be small, instructions can be confusing, and important details are often hidden behind medical language that is hard to process without domain expertise.

Vision AI helps reduce that friction by letting patients and clinicians point a camera at a medical product and get clear, helpful information instantly. For example, a mobile app integrated with a computer vision model can be used to recognize a prescription pill in real time and explain what it is, how to take it, and what to be aware of.

Similarly, vision AI systems can go beyond identifying pills by detecting medical objects and reading printed information. Using vision tasks like object detection, such solutions can recognize devices, packaging, or tools, and then apply optical character recognition (OCR) technology to extract labels, dosage instructions, or warnings.
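
The detect-then-read flow can be sketched in a library-agnostic way. Here, `detect` and `ocr` are placeholder callables that you might back with a detector such as YOLO26 and an OCR engine of your choice. The class names in `TEXT_BEARING` and the dosage regex are hypothetical and would need to match your own label set and packaging formats.

```python
import re
from typing import Callable, Iterable

Box = tuple[int, int, int, int]  # (x1, y1, x2, y2) in pixels

# Hypothetical classes whose regions are worth sending to OCR.
TEXT_BEARING = {"pill_bottle", "blister_pack", "label"}

# Hypothetical pattern for doses such as "500 mg" or "2.5 ml".
DOSE_PATTERN = re.compile(r"(\d+(?:\.\d+)?)\s*(mg|mcg|ml)\b", re.IGNORECASE)


def read_labels(
    image,
    detect: Callable[[object], Iterable[tuple[str, Box]]],
    ocr: Callable[[object, Box], str],
) -> list[dict]:
    """Detect objects, run OCR only on text-bearing ones, and extract dosages."""
    results = []
    for label, box in detect(image):
        if label not in TEXT_BEARING:
            continue  # skip regions unlikely to carry printed instructions
        text = ocr(image, box)  # OCR restricted to the detected region
        doses = [f"{value} {unit.lower()}" for value, unit in DOSE_PATTERN.findall(text)]
        results.append({"object": label, "box": box, "text": text, "doses": doses})
    return results
```

Running OCR only inside detected regions keeps it focused on the packaging rather than the whole frame, which tends to reduce noise from unrelated background text.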

We’ve all been there: standing in a store aisle trying to compare products, prices, or features while juggling labels and tiny print. Vision AI can simplify that moment by enabling shoppers to use their phone cameras to interact with products directly, making discovery faster and more intuitive.

Instead of scanning shelves or digging through menus, customers can point their phone at an item and instantly see useful information overlaid on the screen. This can include product details, ratings, pricing, or side-by-side comparisons with similar items nearby.

By combining real-time object detection with augmented reality (AR), vision AI keeps shoppers in the moment while letting them make more confident decisions. Research prototypes in this space are a good example of this.

Using vision AI to identify products in physical stores and display relevant details in real time, these systems reduce decision time. They also create in-store experiences that feel more interactive, helpful, and enjoyable.
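
Under the hood, an AR shopping overlay is largely a join between what the detector sees and what a product database knows. The following sketch assumes detections arrive as `(class_name, confidence, (x1, y1, x2, y2))` tuples; the `CATALOG` contents, the `Overlay` type, and the 0.5 confidence cutoff are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Overlay:
    text: str
    price: float
    x: int  # screen anchor: top-left corner of the detected box
    y: int


# Hypothetical catalog keyed by detector class name; a real app would query a product API.
CATALOG = {
    "oat_milk_1l": {"name": "Oat Milk 1L", "price": 2.49, "rating": 4.6},
    "almond_milk_1l": {"name": "Almond Milk 1L", "price": 2.99, "rating": 4.2},
}


def build_overlays(detections, min_conf=0.5):
    """Turn (class_name, confidence, (x1, y1, x2, y2)) detections into AR labels."""
    overlays = []
    for cls_name, conf, (x1, y1, _x2, _y2) in detections:
        info = CATALOG.get(cls_name)
        if info is None or conf < min_conf:
            continue  # unknown product or weak match: show nothing rather than guess
        text = f"{info['name']} | ${info['price']:.2f} | {info['rating']}/5"
        overlays.append(Overlay(text=text, price=info["price"], x=x1, y=y1))
    # Cheapest first, so nearby items can be compared at a glance.
    return sorted(overlays, key=lambda o: o.price)
```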

Everyday appliances have a lot of potential to be more useful, but they often lack awareness of what is happening around them. Vision AI changes that by giving appliances the ability to see and understand user activity in real time, allowing them to respond in more timely and relevant ways.

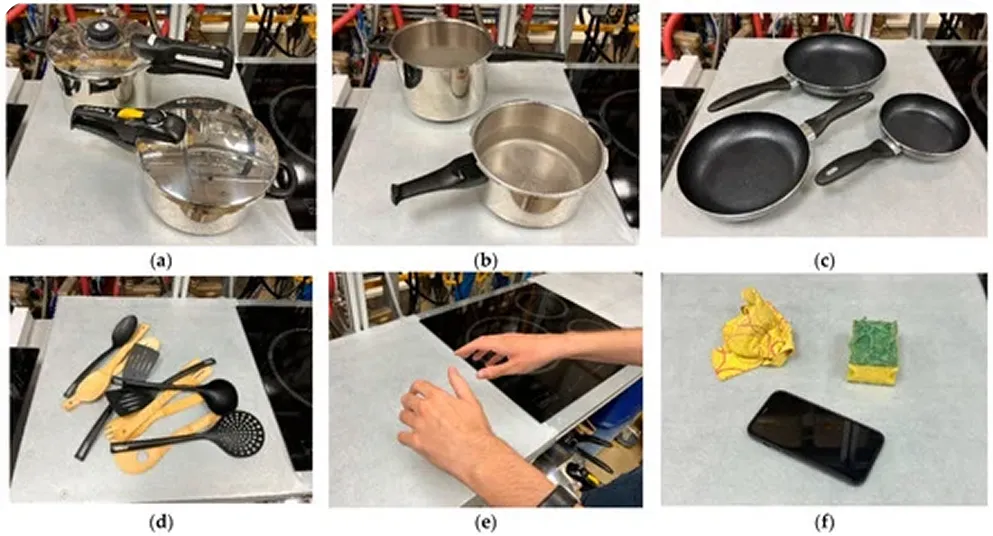

So, what does that look like in practice? In a smart kitchen, it might mean an appliance that can recognize objects, food items, or cooking conditions using a built-in camera and computer vision models trained on custom data.

For instance, some smart refrigerators already use internal cameras to identify food items and track inventory, letting users check what they have while shopping or get reminders when items are running low.
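
Inventory tracking of this kind can be reduced to counting detected labels and comparing them against the quantities a user wants to keep on hand. This is a minimal sketch; the label names and the `MIN_STOCK` targets are hypothetical, and a production system would also need to handle items hidden behind others.

```python
from collections import Counter

# Hypothetical minimum quantities the user wants to keep on hand.
MIN_STOCK = {"egg": 4, "milk": 1, "apple": 3}


def count_items(detected_labels):
    """Count how many of each food item the internal camera detected."""
    return Counter(detected_labels)


def low_stock_reminders(detected_labels, min_stock=MIN_STOCK):
    """Return a reminder for every item below its minimum quantity."""
    counts = count_items(detected_labels)
    reminders = []
    for item, minimum in sorted(min_stock.items()):
        have = counts.get(item, 0)  # items never detected count as zero
        if have < minimum:
            reminders.append(f"Running low on {item}: {have} left (keep {minimum})")
    return reminders
```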

Vision AI can also be applied to cooking appliances that detect pots on a stove, monitor boiling or overheating, or recognize unsafe conditions like smoke. By responding to real-world visual signals instead of relying only on timers or manual input, these products behave in ways that better align with what users are actually doing in the kitchen.
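
Reacting to visual signals safely usually means not trusting a single frame. A common approach, sketched below under stated assumptions, is to keep a short sliding window of recent frames and only fire an alert when a hazard appears in most of them. The class names `smoke` and `boil_over`, the five-frame window, and the four-hit threshold are hypothetical.

```python
from collections import deque


class HazardMonitor:
    """Raise an alert only when a hazard is seen in most of the recent frames.

    Requiring several positive frames filters out one-frame false positives,
    such as steam briefly mistaken for smoke.
    """

    def __init__(self, hazard_labels=("smoke", "boil_over"), window=5, min_hits=4):
        self.hazard_labels = set(hazard_labels)  # hypothetical class names
        self.recent = deque(maxlen=window)       # one bool per processed frame
        self.min_hits = min_hits

    def update(self, frame_labels):
        """Feed the labels detected in one frame; return True if an alert should fire."""
        hit = any(label in self.hazard_labels for label in frame_labels)
        self.recent.append(hit)
        return sum(self.recent) >= self.min_hits
```

The window size trades responsiveness for stability: a longer window suppresses more false alarms but reacts more slowly.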

As you explore vision AI, you might wonder how product teams actually bring these experiences to life. It usually starts by identifying where visual input can meaningfully improve a product, such as recognizing objects or understanding real-world environments to reduce friction for users.

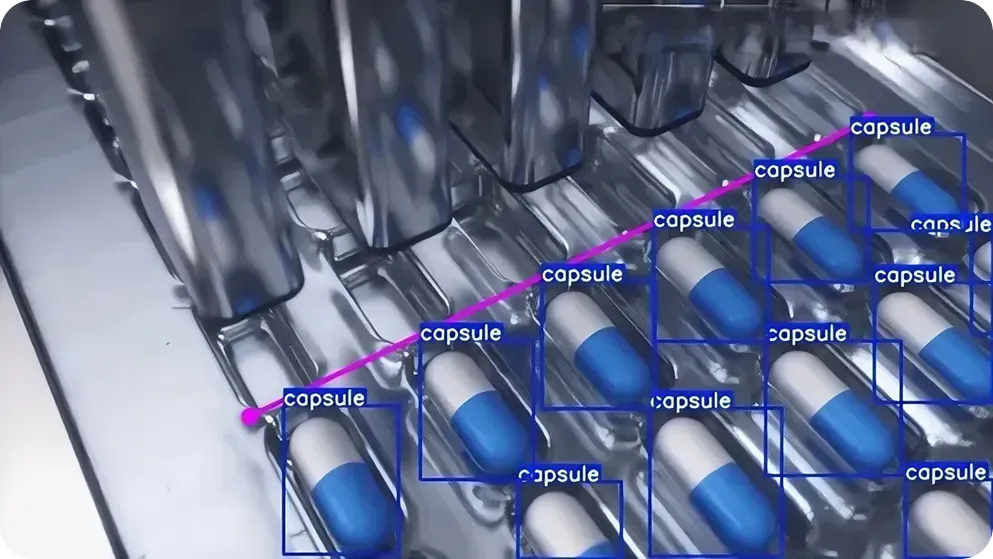

From there, teams collect visual data that reflects real usage and prepare it for training. This includes labeling images or videos and training computer vision models like Ultralytics YOLO26 for tasks like object detection or instance segmentation. The models are tested and refined to ensure they perform reliably in real-world settings.
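
As an illustration of the training step, the sketch below builds a minimal dataset YAML in the format Ultralytics uses (a dataset root, `images/train` and `images/val` folders with matching YOLO-format label files, and an index-to-name `names` map) and then fine-tunes a pretrained detector. The dataset paths, class names, and epoch count are placeholders, and the `yolo26n.pt` checkpoint name is an assumption.

```python
from pathlib import Path


def dataset_yaml(root, class_names):
    """Build a minimal Ultralytics-style dataset YAML as a string."""
    lines = [
        f"path: {root}",        # dataset root directory (placeholder)
        "train: images/train",  # relative to root; labels live in labels/train
        "val: images/val",
        "names:",
    ]
    lines += [f"  {i}: {name}" for i, name in enumerate(class_names)]
    return "\n".join(lines) + "\n"


def train(root, class_names, yaml_path="data.yaml", epochs=50, imgsz=640):
    """Write the dataset YAML, fine-tune a pretrained detector, and validate it."""
    from ultralytics import YOLO  # imported lazily; requires `pip install ultralytics`

    Path(yaml_path).write_text(dataset_yaml(root, class_names))
    model = YOLO("yolo26n.pt")  # assumed YOLO26 checkpoint name
    model.train(data=yaml_path, epochs=epochs, imgsz=imgsz)
    return model.val()  # metrics on the validation split
```

Validating on a held-out split, as `train()` does with `model.val()`, gives a first read on reliability before the model is tested in real-world conditions.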

Once ready, the models are deployed into products through APIs, edge devices, or cloud services, depending on latency and performance requirements. Teams then monitor accuracy, gather feedback, and continuously update the models so the vision AI experience stays reliable and aligned with how users interact with the product over time.
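
Monitoring can start simply: record whether users confirm or correct each prediction, and flag the model for retraining when rolling accuracy drops. The sketch below is hypothetical, including the `AccuracyMonitor` name, window size, and thresholds. The optional `export_for_edge` helper uses the Ultralytics `export` method to produce an ONNX file for on-device runtimes, with the checkpoint name again assumed.

```python
from collections import deque
from typing import Optional


class AccuracyMonitor:
    """Track user feedback on predictions and flag when retraining may be needed.

    Each feedback item is True when a user confirmed a prediction and False
    when they corrected it. Window size and thresholds are hypothetical.
    """

    def __init__(self, window=200, min_accuracy=0.9, min_samples=50):
        self.feedback = deque(maxlen=window)  # only the most recent feedback counts
        self.min_accuracy = min_accuracy
        self.min_samples = min_samples

    def record(self, correct: bool) -> None:
        self.feedback.append(bool(correct))

    def accuracy(self) -> Optional[float]:
        if not self.feedback:
            return None
        return sum(self.feedback) / len(self.feedback)

    def needs_retraining(self) -> bool:
        # Wait for enough samples so a few corrections don't trigger retraining.
        if len(self.feedback) < self.min_samples:
            return False
        return self.accuracy() < self.min_accuracy


def export_for_edge(weights="yolo26n.pt"):
    """Export a model to ONNX for edge deployment (checkpoint name assumed)."""
    from ultralytics import YOLO  # imported lazily

    return YOLO(weights).export(format="onnx")
```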

As vision AI becomes more capable and AI adoption grows, computer vision models are increasingly being integrated into larger, more complete systems. Instead of operating on their own, they are becoming part of agentic AI systems that combine visual perception with reasoning and decision-making.

Consider a smart retail environment as an example. Computer vision models identify products on shelves, detect when items are picked up, and monitor inventory changes in real time.

That visual information is passed to an AI agent, which reasons about what’s happening and determines the next step, such as updating inventory, triggering a restock request, or deciding when to engage a shopper. Generative AI then plays a key role by turning those decisions into natural, user-facing interactions, like generating personalized product explanations, answering questions, or recommending alternatives in plain language.

Together, vision AI, AI agents, and generative AI can create a closed loop between seeing, thinking, and acting. Vision AI provides awareness of the real world, AI agents coordinate decisions and workflows, and generative AI shapes how those decisions are communicated.
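
A stripped-down version of this loop might look like the following. To keep it self-contained and runnable, the AI agent is replaced with simple rules and the generative model with string templates; in a real system, those two functions would likely call an agent framework and a language model. The `ShelfEvent` type, event names, and restock threshold are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class ShelfEvent:
    product: str
    kind: str         # hypothetical event types: "picked_up" or "put_back"
    shelf_count: int  # items the vision model still sees on the shelf


RESTOCK_AT = 2  # hypothetical restock threshold


def agent_decide(event: ShelfEvent) -> list[str]:
    """Rule-based stand-in for an AI agent: reason about an event and pick actions."""
    actions = ["update_inventory"]
    if event.kind == "picked_up" and event.shelf_count <= RESTOCK_AT:
        actions.append("request_restock")
    if event.kind == "put_back":
        actions.append("offer_help")  # the shopper hesitated; suggest alternatives
    return actions


def describe(event: ShelfEvent, actions: list[str]) -> str:
    """Template stand-in for a generative model that phrases the outcome."""
    parts = []
    if "request_restock" in actions:
        parts.append(f"Only {event.shelf_count} {event.product} left, so a restock was requested.")
    if "offer_help" in actions:
        parts.append(f"Looks like you put the {event.product} back. Want to see similar options?")
    return " ".join(parts) or "Inventory updated."
```

Separating perception (the event), decision (`agent_decide`), and communication (`describe`) mirrors the seeing, thinking, and acting split, so any one stage can be swapped for a more capable model without rewriting the others.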

Vision AI is quickly becoming more than a nice-to-have feature. As products move beyond screens and into physical spaces, the ability to understand visual context is turning into a core capability.

Products that can see and interpret the world around them are better positioned to reduce friction, respond in real time, and deliver experiences that feel more natural to users. From a business strategy perspective, vision AI creates leverage across multiple parts of a product.

The same visual capabilities can power user-facing features, automation, safety checks, and operational insights. Over time, the visual data generated by these systems also gives product teams a clearer picture of how products are used in real-world environments, informing better design decisions and prioritization.

Most importantly, vision AI supports long-term differentiation. As competitors adopt similar interfaces and workflows, products that can adapt to real-world conditions stand out.

By investing in vision AI early and building it into the roadmap, product teams create a foundation for smarter automation, more adaptive experiences, and sustained competitive advantage as AI capabilities continue to evolve.

Vision AI makes it possible for products to understand visual information in real time, which leads to smoother interactions and more intuitive user experiences. When combined with generative AI and AI agents, products can turn what they see into meaningful actions and guidance for users. For product teams, adopting vision AI is a practical way to build smarter products that stay relevant and competitive over time.

Join our community and check out our GitHub repository to learn more about AI. Explore our solutions pages to read more about computer vision in healthcare and AI in agriculture. Discover our licensing options and start building your own computer vision solutions.