Explore 60 real-world computer vision applications, from healthcare to retail, and see how Vision AI is making an impact across industries.

Images and videos play an essential role in decision-making today. We rely on visual information while navigating busy roads, shopping online, scrolling through social media, visiting hospitals, and even while managing businesses.

Visual data has become a natural part of everyday life, influencing many of the choices we make. For machines to understand this information in a similar way, they also need the ability to see and interpret visual content.

This is where computer vision makes a difference. As a branch of artificial intelligence (AI), computer vision enables machines to interpret and make sense of visual information.

Instead of simply recording what’s happening, computer vision technology can analyze images to extract useful insights. Computer vision solutions can detect objects, track movement, and classify items by shape, size, or color.

Consider a simple example. Let’s say there’s a store manager who wants to identify which shelves run out of stock the fastest. Computer vision systems can be used to analyze shelf images to spot missing items and highlight products that sell quickly. This makes it possible for store managers to restock on time.

Such systems are driven by computer vision models, which are trained on datasets to recognize objects and identify patterns from visual data. For instance, Ultralytics YOLO26 is a fast, reliable vision model designed for real-time computer vision capabilities.

In this article, we’ll explore 60 impactful computer vision applications and see how they are used across different industries. Let’s get started!

Before we dive into various computer vision applications, let’s quickly take a look at the significance of computer vision today.

For years, monitoring and analyzing images or videos was a manual process. This manual approach was time-consuming, error-prone, and inconsistent. In fact, studies show that human error accounts for nearly a quarter of inspection-related issues in factory settings, slowing down decision-making across many industries.

Things shifted with the rise of machine learning and major advancements in computer vision. At the core of vision AI is image analysis, which enables models to understand what they see.

This has led to the rapid adoption of applications such as inspection, tracking, and automation, with the global computer vision market projected to reach about $58 billion by 2032.

That growth comes from the value computer vision brings to real-world applications. By automating image and video analysis, it delivers faster, more accurate, and reliable results. For example, roads can be monitored for accidents. Similarly, farms can monitor crop health in real time, while stores can track which shelves run out first.

These use cases help teams act faster and make better decisions using reliable data. To achieve this, computer vision relies on a core set of tasks that enable a wide range of applications.

Computer vision tasks are supported by trained computer vision models that learn from large datasets and apply that knowledge to live footage. For example, Ultralytics YOLO models, such as YOLO26, support several tasks in real-time environments.

The core computer vision tasks used across these applications include object detection, object tracking, image classification, instance segmentation, and pose estimation.

Next, let’s explore how computer vision is applied across a wide range of real-world use cases, spanning industries such as retail, manufacturing, healthcare, automotive, and agriculture.

Factories consist of a huge number of machines operating simultaneously, and it can be tricky to keep an eye on all of them. Computer vision-based predictive maintenance systems use cameras to continuously monitor equipment and analyze visual signs such as corrosion, leaks, misalignment, and surface wear. By detecting early indicators of failure, these vision-driven systems help teams schedule maintenance proactively, reduce unplanned downtime, extend machine lifespan, and maintain safer, more efficient industrial operations.

Computer vision systems can detect license plates on vehicles. They are often paired with optical character recognition (OCR) technology to read the plate and extract its letters and numbers.

This makes it easier to identify vehicles as they move through roads or checkpoints. Such technology is commonly used in traffic monitoring, toll booths, and parking systems. It is also applied at the entry and exit points of residential or commercial buildings to automate vehicle tracking and reduce manual checks.

Computer vision can also be used to monitor for suspicious behavior. Instead of security staff watching every camera feed, vision-integrated cameras and sensors rely on detection and tracking to follow people through a scene.

They can detect activity and flag anomalies, such as loitering, sudden running, or restricted-area access. These systems are primarily used in public spaces, retail stores, transport stations, and high-security areas, where they alert security teams so they can respond quickly when something appears suspicious.
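To make the loitering case concrete, here is a minimal sketch of how dwell time could be measured. It assumes an upstream detector and tracker already supply timestamped positions per track ID. The function name, the rectangular zone, and the time threshold are all illustrative choices, not part of any specific product.

```python
def find_loiterers(observations, zone, min_seconds):
    """Return track IDs that stayed inside `zone` for at least `min_seconds`.

    observations: iterable of (timestamp_seconds, track_id, x, y) tuples,
                  assumed to come from an upstream detector and tracker.
    zone: (x_min, y_min, x_max, y_max) rectangle in image coordinates.
    """
    x_min, y_min, x_max, y_max = zone
    entered_at = {}   # track_id -> time the track entered the zone
    flagged = set()
    for t, track_id, x, y in sorted(observations):
        inside = x_min <= x <= x_max and y_min <= y <= y_max
        if not inside:
            entered_at.pop(track_id, None)  # leaving the zone resets the timer
            continue
        start = entered_at.setdefault(track_id, t)
        if t - start >= min_seconds:
            flagged.add(track_id)
    return flagged
```

A real deployment would likely use polygon zones and tolerate brief tracking dropouts, but the core idea of timing how long a track stays in an area is the same.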

Fire and smoke detection can provide early warnings before a major incident. This is made possible by computer vision models.

These models can be used to continuously observe visual changes, such as drifting smoke, flickering flames, or unusual haze in the air. Fire and smoke detection is typically used in warehouses, factories, forests, and large buildings, where early fire detection can make all the difference.

Autonomous vehicles typically rely on computer vision to interpret constant motion. Tesla, for example, uses cameras and computer vision systems in its self-driving cars to process visual data and detect lanes, traffic signs, nearby vehicles, and people. Vision-based models support tasks such as detection, tracking, and segmentation, helping the car understand its surroundings and prioritize critical information.

Graffiti detection can be done using computer vision to identify painted markings on walls, bridges, and other public property. Intelligent systems can scan images or video to recognize shapes, colors, and patterns that match graffiti, even in busy urban scenes.

Computer vision models such as YOLO26 support object detection and image classification that can be used to detect graffiti, enabling real-time flagging of new markings. Smart cities can use YOLO26-driven graffiti detection solutions to schedule cleanups more quickly, monitor areas, and maintain public spaces.

Keeping a city running smoothly involves multiple maintenance checks every day, many of them manual. Computer vision solutions can automate part of this work by monitoring streets and public spaces.

For example, Singapore is well-known for its initiatives that use vision AI to maintain urban spaces. Vision-based systems monitor streets, public areas, and infrastructure, detecting issues like potholes, broken signs, or overflowing trash bins.

Crowd monitoring involves analyzing how people move and gather in busy spaces. Cameras and sensors, integrated with a vision algorithm, can process live video feeds to estimate crowd size, track movement patterns, and detect sudden changes.

This helps identify bottlenecks, overcrowding, or unusual activity before they become issues. Crowd monitoring is valuable in places such as railway stations, stadiums, public events, and city centers.

In theft detection, computer vision technology is used to identify suspicious activity. Vision AI can help analyze camera footage using deep learning and object detection algorithms to track people, objects, and movement patterns in real time.

Instead of relying only on alarms or after-the-fact reviews, these computer vision applications flag unusual behavior. This automation helps retail stores, warehouses, and smart cities reduce losses and streamline security workflows.

To drive safely, self-driving vehicles need a clear understanding of the road. Lane detection is a core computer vision application used to understand road structure in real time.

Vision-based systems can identify lane markings, road edges, and curves. By applying vision tasks like segmentation and object detection, computer vision models can track lanes even when lighting changes or traffic is heavy.

Accident and collision detection uses computer vision technology to detect crashes and near-misses in real time. Computer vision models in combination with collision-detection algorithms can help analyze real-time video feeds from traffic cameras, dashcams, or drones.

By tracking sudden vehicle stops, abnormal motion, or unexpected interactions with objects, these AI-powered systems can identify accidents within seconds. As a result, this enables faster emergency response and better traffic management for smart cities.

Long drives and heavy traffic can affect driver alertness. Driver attention monitoring and drowsiness detection enabled by computer vision systems can understand a driver’s physical state in real time.

For instance, cameras inside the vehicle can observe cues such as eye closure, blink rate, head movement, and gaze direction. Machine learning and deep learning models then interpret these signals. When signs of fatigue or distraction appear, the system can issue alerts or warnings.
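As an illustration of the alerting step, the sketch below flags frames where the driver's eyes were closed for a large share of a recent window. It assumes a separate model already outputs one open/closed flag per frame. The window size and threshold are placeholder values, not calibrated settings.

```python
from collections import deque

def drowsiness_alerts(eye_closed_flags, window=30, max_closed_fraction=0.4):
    """Return frame indices where an alert would fire.

    eye_closed_flags: per-frame booleans (True = eyes closed), assumed to be
                      produced by an upstream eye-state model.
    An alert fires once the window is full and the fraction of closed-eye
    frames in it reaches `max_closed_fraction`.
    """
    recent = deque(maxlen=window)  # sliding window of the latest flags
    alerts = []
    for i, closed in enumerate(eye_closed_flags):
        recent.append(bool(closed))
        if len(recent) == window and sum(recent) / window >= max_closed_fraction:
            alerts.append(i)
    return alerts
```

Measuring closure over a window rather than per frame helps keep normal blinks from triggering warnings.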

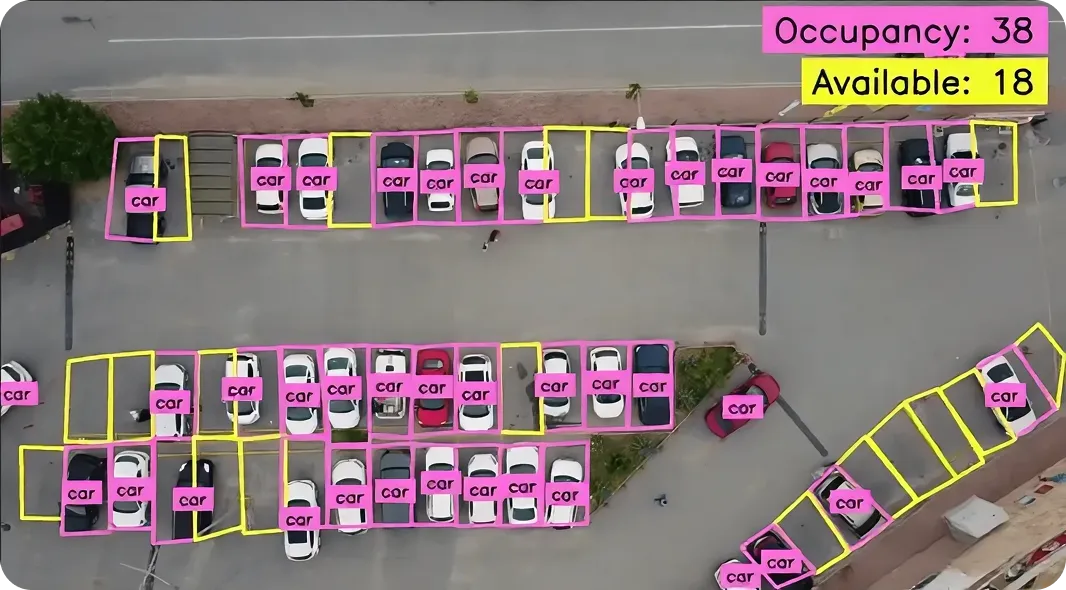

Finding a parking spot in a busy city can be challenging, but computer vision technology is making it easier nowadays. Smart parking systems use cameras and AI-powered computer vision models to monitor parking lots in real time.

Vision models can detect free and occupied spaces, helping drivers quickly and efficiently find parking spots. They are commonly used in malls, airports, office complexes, and city centers to improve parking efficiency.
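One simple way to decide whether a space is free is to compare each marked slot with the detected vehicle boxes. The sketch below assumes slot rectangles were annotated once by hand and that a detector returns vehicle boxes in the same image coordinates. The slot names and the 0.5 overlap threshold are illustrative.

```python
def iou(a, b):
    """Intersection over union of two (x1, y1, x2, y2) boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0, ix2 - ix1) * max(0, iy2 - iy1)
    union = ((a[2] - a[0]) * (a[3] - a[1])
             + (b[2] - b[0]) * (b[3] - b[1]) - inter)
    return inter / union if union else 0.0

def free_slots(slots, vehicle_boxes, threshold=0.5):
    """Return names of slots that no vehicle box overlaps by `threshold` IoU.

    slots: dict of slot name -> (x1, y1, x2, y2), assumed hand-annotated.
    vehicle_boxes: list of (x1, y1, x2, y2) boxes from a vehicle detector.
    """
    return [name for name, box in slots.items()
            if all(iou(box, v) < threshold for v in vehicle_boxes)]

# Example: one car parked in A1, so only A2 is reported as free.
slots = {"A1": (0, 0, 10, 20), "A2": (10, 0, 20, 20)}
print(free_slots(slots, [(1, 1, 10, 19)]))
```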

Retailers can use customer heat map analysis to understand how shoppers move through a store. Vision-enabled cameras track where customers walk, pause, or gather, then turn this data into color-coded heatmaps.

Busy areas appear in warmer colors, while quieter zones show up in cooler shades. These are particularly useful when improving layouts, better placing products, reducing crowding near checkout, and analyzing customer behavior.
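The heatmap itself can be as simple as a grid of visit counts. Here is a minimal sketch, assuming tracked customer positions are already available as pixel coordinates. The cell size is an arbitrary choice, and rendering the counts as colors is left to a plotting tool.

```python
def build_heatmap(positions, width, height, cell=50):
    """Count how many tracked positions fall into each grid cell.

    positions: iterable of (x, y) pixel coordinates of customers over time,
               assumed to come from an upstream tracker.
    Returns a list of rows; higher counts correspond to "warmer" areas.
    """
    cols = -(-width // cell)    # ceiling division
    rows = -(-height // cell)
    grid = [[0] * cols for _ in range(rows)]
    for x, y in positions:
        if 0 <= x < width and 0 <= y < height:  # ignore out-of-frame points
            grid[int(y // cell)][int(x // cell)] += 1
    return grid
```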

Many media companies are now using computer vision to detect logos in images and videos across platforms, including advertisements, events, and social media posts. By detecting and classifying logos, companies can measure campaign reach, monitor brand exposure, and detect unauthorized or fraudulent use of logos early. This means marketing and legal teams can monitor brand presence at scale without manually reviewing large volumes of visual content.

Empty shelves often go unnoticed until a customer points them out. Shelf stock monitoring can prevent this by using cameras to scan shelves regularly. Vision AI systems can scan shelf images, detect products, count items, and track changes over time using object detection and tracking. This solves a common retail issue of missed restocking opportunities.

Computer vision technology can be used to identify leaks in building slabs by analyzing thermal camera images. These systems perform tasks such as object detection and segmentation to spot subtle signs of moisture, cracks, or structural issues. Using thermal cameras, maintenance teams can detect issues early, reducing reliance on manual inspections. Slab leak detection is widely used in homes, commercial buildings, and large facilities to reduce repair costs.

Quality control focuses on whether a finished product meets the required standard before it reaches customers. Computer vision models can be used to compare products against predefined benchmarks, checking for visible issues that affect usability, safety, or appearance. This lets manufacturers maintain consistent quality at scale and reduce returns without slowing down production.

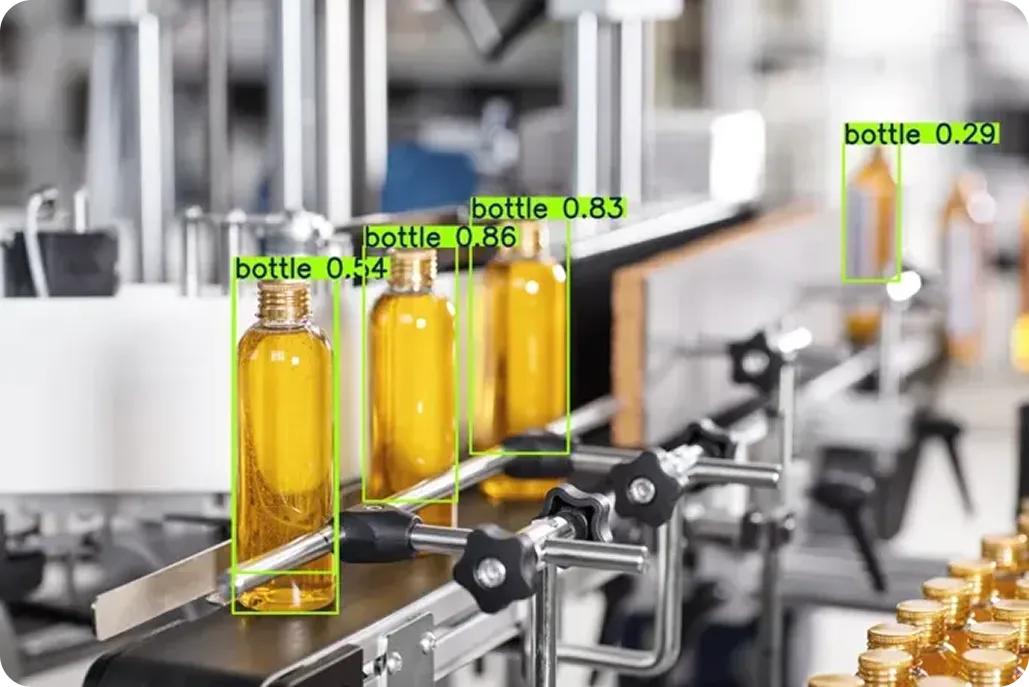

Defect detection checks products for issues such as cracks, scratches, or incorrect labels. It uses computer vision tasks like object detection to spot defects, even when items are moving quickly on a production line.

When a defect is found, the product can be automatically flagged or removed. This ensures that only high-quality items move forward without slowing down manufacturing processes.

Computer vision is also used to inspect the outer finish of products and ensure consistent quality. Vision-based models analyze texture, color consistency, coatings, and polish to detect uneven finishes or surface damage. This application is common in industries where appearance is as important as performance, such as electronics, automotive manufacturing, and consumer goods.

Before products are sealed or shipped, AI-powered cameras can check that all required items are present. Using machine learning and computer vision, these systems can quickly detect missing bottles, parts, or packaged components, reducing errors and rework. By combining object detection with real-time monitoring, manufacturers can maintain consistent quality and avoid costly mistakes.
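Once a detector has labeled what is in the package, the completeness check reduces to comparing counts. The following sketch assumes the detector returns one class label per detected item. The label names and function name are hypothetical.

```python
from collections import Counter

def missing_items(expected, detected_labels):
    """Return {label: shortfall} for every item type that is under-counted.

    expected: dict of label -> required quantity for one package.
    detected_labels: list of class labels, assumed to come from a detector
                     run on an image of the open package.
    """
    found = Counter(detected_labels)
    return {label: need - found[label]
            for label, need in expected.items()
            if found[label] < need}

# Example: a six-pack where one cap and the leaflet were not detected.
expected = {"bottle": 6, "cap": 6, "leaflet": 1}
print(missing_items(expected, ["bottle"] * 6 + ["cap"] * 5))
```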

Production lines can be monitored in real time using computer vision technology to identify misaligned parts, jams, or skipped steps. Vision systems can track objects and check their positions as items move along the line.

When an issue is detected, teams can be alerted immediately, reducing downtime, improving workflows, and maintaining product quality. This automation makes sure operations run efficiently while supporting timely decision-making.

Computer vision systems can play a crucial role in modern warehouse automation. For example, at Amazon warehouses, vision-guided robots identify packages, track their movement, and determine where to store or pick them. By combining visual data with AI-powered robotics, warehouses can streamline workflows, reduce human error, and ensure packages reach their destination faster.

Thanks to advancements in computer vision technology, businesses can monitor stock levels in real-time, detect missing or misplaced items, and update records automatically. This leads to more accurate inventory management, helps prevent overstocking or shortages, and supports faster decision-making across warehouses, retail stores, and manufacturing environments.

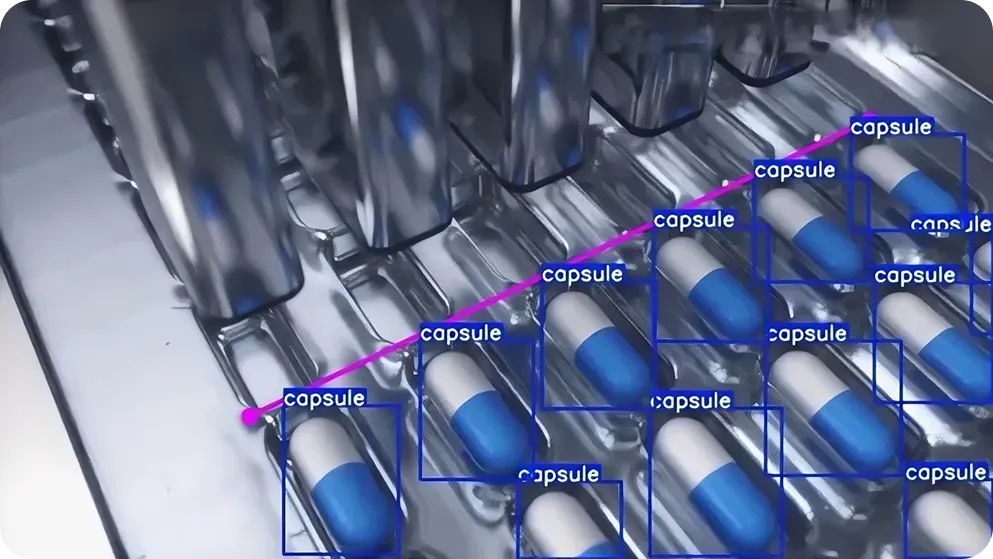

In healthcare, accurately counting and identifying pills is crucial to prevent errors. Computer vision systems can use object detection and image classification to identify pill types and automatically count them. Vision-integrated cameras capture high-resolution images of the medicine, and AI-powered algorithms analyze them in real-time, helping pharmacies, hospitals, and clinics maintain workflows.

In large-scale laundry operations, manual sorting is slow and often error-prone. Computer vision systems can use cameras and AI models to automatically sort clothes by color, size, or fabric type.

By detecting each item and directing it to the correct bin or washing cycle, these systems improve speed and consistency. This makes them especially useful in hotels, hospitals, and industrial laundries where efficiency and accuracy are critical.

Computer vision helps spot cracks that are easy to miss with the human eye. Using cameras and image processing, AI models scan surfaces such as roads, walls, bridges, and machines to detect early signs of damage.

With tasks like object detection and segmentation, even tiny fractures can be identified early. This helps teams plan repairs on time and reduce safety risks.

Lab experiments often rely on knowing the exact number of cells in a sample. This has led researchers to use computer vision models that support image segmentation and object counting. These models detect individual cells, separate overlapping ones, and count them automatically, saving time and improving accuracy.

Computer vision can help doctors spot buckle fractures in X-ray images. These fractures are common in children and easy to miss. Deep learning models can be fine-tuned to analyze medical imaging data, learning bone shapes and textures to detect subtle bends or cracks. In particular, object detection can highlight areas of concern, helping radiologists make faster and more accurate diagnoses.

A crucial issue in hospitals and care homes is keeping patients safe around the clock. Staff can’t always be present at every moment. However, technologies like computer vision can help by monitoring patient movement and detecting potential risks in real time.

For instance, by tracking body posture and motion patterns, vision-based systems can detect sudden falls in real time. When a fall is detected, the system can instantly alert caregivers, enabling a rapid response. This is especially impactful for elderly or recovering patients, where fast assistance can reduce the risk of serious injury and improve overall care.
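As a deliberately simplified illustration, a fall can be approximated as a person's bounding box switching quickly from tall and narrow to short and wide. The sketch below assumes per-frame boxes for a single tracked person. Production systems typically use pose estimation and richer motion cues, and the ratios and frame limit here are placeholder values.

```python
def detect_fall(boxes, max_frames=15, upright_ratio=0.8, lying_ratio=1.2):
    """Return the frame index where a fall is flagged, or None.

    boxes: list of (x1, y1, x2, y2) boxes for one tracked person, one per
           frame, assumed to come from an upstream detector and tracker.
    A fall is flagged when the width/height ratio reaches `lying_ratio`
    within `max_frames` of the last frame where the person looked upright.
    """
    last_upright = None
    for i, (x1, y1, x2, y2) in enumerate(boxes):
        height = y2 - y1
        if height <= 0:
            continue  # skip degenerate boxes
        ratio = (x2 - x1) / height
        if ratio <= upright_ratio:
            last_upright = i
        elif (ratio >= lying_ratio and last_upright is not None
              and i - last_upright <= max_frames):
            return i
    return None
```

The time limit is what separates a sudden fall from someone slowly lying down, which would change the box shape over many more frames.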

Inside an ICU, patients need to be closely monitored at all times. This can be tedious and demanding for medical staff, especially during long shifts. Computer vision systems can be employed to help by continuously tracking patient movement and posture, allowing care teams to focus on critical tasks while still responding quickly when issues arise.

During surgery, tracking every medical instrument is critical. Overhead cameras can be integrated with computer vision to detect and track surgical tools throughout the procedure. This improves operating room safety, reduces delays, and enables surgeons and nurses to remain fully focused on the procedure.

Medical image diagnostics can be powered by computer vision. It allows doctors to analyze scans more clearly and quickly.

Using deep learning and convolutional neural networks, vision systems analyze X-rays, MRIs, and CT scans to find visual patterns. For example, in tumor detection, vision capabilities such as image processing, segmentation, and object detection highlight suspicious regions and support accurate diagnostics.

In busy industrial environments, it’s difficult to monitor every worker at all times. Vision-enabled cameras can address this by continuously observing work areas and checking for required safety gear such as helmets, gloves, and reflective vests. By detecting missing personal protective equipment (PPE) in real time, these systems help prevent accidents and improve overall workplace safety.
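A basic version of the PPE check can be sketched by matching helmet detections to person detections. This example assumes a detector returns separate boxes for people and helmets. The rule that a helmet's center must fall in the top third of a person's box is an illustrative heuristic, not a standard.

```python
def workers_missing_helmet(person_boxes, helmet_boxes):
    """Return indices of people with no helmet detected near their head.

    person_boxes, helmet_boxes: lists of (x1, y1, x2, y2) boxes, assumed to
    come from a detector trained on "person" and "helmet" classes.
    Image coordinates are assumed to have y increasing downward.
    """
    missing = []
    for i, (px1, py1, px2, py2) in enumerate(person_boxes):
        head_bottom = py1 + (py2 - py1) / 3  # top third of the person box
        has_helmet = any(
            px1 <= (hx1 + hx2) / 2 <= px2 and py1 <= (hy1 + hy2) / 2 <= head_bottom
            for hx1, hy1, hx2, hy2 in helmet_boxes
        )
        if not has_helmet:
            missing.append(i)
    return missing
```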

Plant and crop monitoring lets farmers monitor crop health throughout the growing season. Cameras placed on drones, tractors, or fixed poles can capture regular images of plants in the field.

This use of computer vision enables systems to analyze visual cues, such as leaf color, plant size, and growth patterns, to detect early signs of stress, nutrient deficiencies, or water shortages. By identifying issues early, farmers can respond more quickly, improve crop yields, and avoid large-scale crop losses.

Livestock monitoring leverages computer vision to observe animal behavior without constant human supervision. Cameras track movement, posture, and activity levels to identify signs of injury, illness, or stress.

For example, reduced movement or unusual walking patterns can signal health issues. These systems rely on detection and tracking to continuously monitor herds, helping farmers manage large farms more efficiently.

Forest fires often start in remote areas where human monitoring is limited. Computer vision systems analyze visual data from watchtowers, drones, and aerial imagery to detect early signs such as thin smoke trails, changes in vegetation color, or subtle heat-related movement. By reducing false alarms caused by fog or clouds, these real-time systems allow authorities to respond faster and prevent fires from spreading.

Knowing the right time to harvest dragon fruit is a great example of a highly specific computer vision use case where timing directly affects quality and shelf life. Vision-based models use detection and image classification to assess ripeness and predict the optimal harvest time. Farms are already starting to use AI-powered cameras to streamline ripeness checks, making harvesting faster, more accurate, and more consistent.

Birdwatching has become more accurate thanks to computer vision. Smart cameras and AI-powered binoculars use computer vision algorithms, including models like YOLO26, to support tasks such as object detection and pose estimation. This enables researchers and enthusiasts to track populations, observe behaviors, and study migration patterns.

In snowy regions, animal tracks can reveal valuable clues about wildlife movement. Computer vision models such as YOLO26 can be used to detect footprints and trails left in the snow.

By analyzing visual patterns, these models make it easier to identify species, estimate movement, and study migration. This allows researchers and conservationists to monitor populations in real time, observe behaviors, and protect wildlife.

Railway networks operate under constant movement, tight schedules, and safety risks, making manual monitoring complicated. Computer vision technology can automate these checks by analyzing visual data from trackside cameras, stations, and onboard systems.

Using object detection and instance segmentation, vision models can detect and track cracks, signal issues, trackside obstacles, or people entering restricted areas in real time. This reduces human error, streamlines workflows, and supports safer, more reliable railway operations at scale.

Document processing has become much easier with computer vision–powered optical character recognition systems. These systems first detect text regions within images such as invoices, forms, and receipts, then extract the content so it can be searched and used.

Once captured, the text can be automatically processed, analyzed, or summarized. This helps businesses improve accuracy and streamline document-heavy workflows in finance, healthcare, and operations.

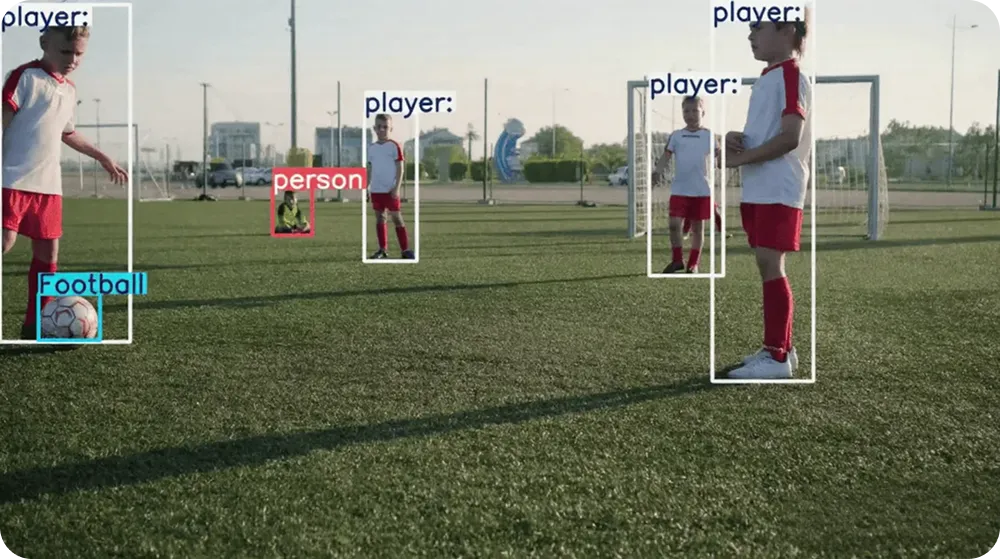

Major sports events have started using computer vision technology to track players' movements on the field. Vision models closely analyze live match footage using object detection, object tracking, and pose estimation.

Coaches and analysts use this data to study performance, positioning, and teamwork. In fact, player tracking is now common in football, basketball, and cricket, letting teams make data-backed decisions during training and matches.

Another good example of how computer vision can support sports analysts is ball tracking. In fast-paced sports, following the ball can be challenging.

Computer vision systems can detect the ball and track its movement frame by frame, recording its position, speed, and direction in real time. This data supports performance analysis and fair decision-making across sports such as football, cricket, and golf.
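Given the ball's position in each frame, speed and direction follow from basic geometry. Here is a small sketch, assuming ball centers are already detected in consecutive frames and the frame rate is known. Results are in pixels per second, since converting to real-world units would need camera calibration.

```python
import math

def ball_motion(track, fps):
    """Return (speed_px_per_s, direction_degrees) for each frame-to-frame step.

    track: list of (x, y) ball centers in consecutive frames, assumed to
           come from an upstream detector.
    Direction is measured from the positive x axis. Because image y grows
    downward, positive angles point toward the bottom of the frame.
    """
    steps = []
    for (x0, y0), (x1, y1) in zip(track, track[1:]):
        dx, dy = x1 - x0, y1 - y0
        speed = math.hypot(dx, dy) * fps          # pixels per second
        direction = math.degrees(math.atan2(dy, dx))
        steps.append((speed, direction))
    return steps
```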

Regulated gaming environments like casinos use computer vision to monitor card games such as blackjack by identifying and tracking playing cards on the table in real time. This helps ensure fair gameplay, prevent cheating, and maintain transparency. Vision models like YOLO26 can be used to recognize cards based on their shapes, numbers, and symbols.

Athlete injuries often develop gradually due to poor posture or repetitive strain. Vision AI systems can help catch these issues early by analyzing how players move during training and games.

AI cameras can track body position, balance, and motion patterns to identify unsafe movements. This allows teams to correct form, improve training routines, and reduce the risk of serious injury.

Gesture control in gaming is closely related to computer vision. Vision-based systems detect and interpret hand and body movements, letting players control games without physical controllers.

This approach is widely used in augmented and virtual reality experiences, where actions like waving, jumping, or pointing are translated into real-time in-game responses, creating a more immersive experience.

Reading nutrition labels can be time-consuming, especially when formats differ across brands. With computer vision solutions, this can be simplified.

By processing images of food labels, computer vision systems can extract key details such as calories, ingredients, and nutrient information. Using image processing, optical character recognition, and machine learning, nutrition labels can be scanned with smartphones or simple scanners, making the information easier to access and compare.
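After OCR has turned the label into text, the remaining work is parsing. The sketch below assumes the OCR step returns one label row per line of text. The regular expression covers only simple "name value unit" rows and is meant as a starting point rather than a complete parser.

```python
import re

# Matches rows such as "Total Fat 12g" or "Sodium: 430 mg".
ROW = re.compile(
    r"^([A-Za-z ]+?)\s*:?\s*(\d+(?:\.\d+)?)\s*(kcal|mg|g)?$",
    re.IGNORECASE,
)

def parse_nutrition(ocr_text):
    """Return {nutrient_name: (value, unit_or_None)} from OCR output.

    ocr_text: multi-line string, assumed to be produced by an OCR engine.
    Lines that do not look like "name value [unit]" are skipped.
    """
    facts = {}
    for line in ocr_text.splitlines():
        match = ROW.match(line.strip())
        if match:
            name, value, unit = match.groups()
            facts[name.lower()] = (float(value), unit.lower() if unit else None)
    return facts
```

Real labels vary widely in layout, so a production system would likely need per-format rules or a learned extraction model on top of this.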

Knowing how many people are in a space helps businesses and cities plan better. Computer vision-based systems can count people entering or leaving an area using video feeds from public places.

Such solutions rely on object detection and tracking to follow movement in real time. They are used in retail stores, transport hubs, and smart cities to manage crowd flow and improve safety.
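A common way to count entries and exits is to check when each tracked person crosses a virtual line. The following sketch assumes a tracker supplies the center point of each person over time. The horizontal line and the convention that moving down the image means entering are illustrative choices.

```python
def count_crossings(tracks, line_y):
    """Return (entered, exited) counts for a horizontal counting line.

    tracks: dict of track_id -> list of (x, y) centers over time, assumed
            to come from an upstream detector and tracker.
    A step from above the line to on or below it counts as an entry;
    the reverse counts as an exit.
    """
    entered = exited = 0
    for points in tracks.values():
        for (_, y0), (_, y1) in zip(points, points[1:]):
            if y0 < line_y <= y1:
                entered += 1
            elif y1 < line_y <= y0:
                exited += 1
    return entered, exited
```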

Monitoring traffic is essential for keeping roads safe and reducing congestion. Cameras and sensors combined with computer vision can track vehicles in real time and analyze traffic flow. This helps city planners better understand traffic patterns and optimize signal timings to improve overall traffic management.

Computer vision technology can inspect long pipelines without putting people at risk. Drones equipped with high-resolution cameras and vision-based algorithms can inspect pipelines for corrosion, leaks, or cracks. This automation reduces human risk, speeds up maintenance checks, and enables continuous monitoring over long distances, making pipeline operations safer.

Bottle caps can sometimes go missing or fail to seal properly, leading to spoilage or safety issues. This is a key concern in the beverage industry. Computer vision systems can help address this by monitoring production lines and using cameras to detect missing, loose, or misaligned caps.

Managing large storage yards with containers and vehicles moving constantly isn’t as easy as it looks. Vision-based systems manage this complexity by identifying container IDs, tracking their positions, and recording movements in real time.

Cameras monitor yard activity and automatically update systems. This AI-powered vision solution focuses on improving logistics and overall workflow.

Rare species are often difficult to study because they are uncommon and usually live in protected or remote areas. However, vision-based systems can collect visual data using camera traps, drones, or satellite imagery.

These systems use image classification to recognize animals based on features such as shape, color, and markings. This allows Vision AI to automatically detect species, record sightings over time, and track populations without disturbing wildlife.

Computer vision has made self-checkout faster and easier. Shoppers can scan and pay for items without waiting in long lines.

This is enabled by in-store cameras, smart scanners, and vision-enabled kiosks that monitor how products are picked up and placed, helping systems accurately recognize items. As a result, errors are reduced, checkout is faster, and the overall shopping experience is smoother in busy retail stores.

Over time, tires lose grip, but the changes are often subtle and hard to notice. Vision-based systems installed in garages or service centers inspect tire surfaces to detect signs of wear or damage, such as shallow tread depth or uneven patterns. By identifying issues early, these systems help prevent unsafe driving conditions and make tire maintenance more predictable.

With computer vision, item counting can be automated by detecting and tracking products in images or video. For example, vision systems can count packaged cartons on a conveyor belt, monitor inventory levels in supermarkets, or track items moving along an assembly line during washing or processing stages. This approach is widely used in warehouses, factories, and retail environments to reduce stock mismatches, identify missing items early, and maintain accurate inventory data.

Exploring life below the ocean surface isn't easy, but computer vision has made it simpler to track underwater species. Researchers can use visual data from underwater drones and submersible cameras to identify fish, corals, and other marine species in real time. This information helps track populations, study habitats, and monitor ocean ecosystems without disturbing marine life.

Large commercial kitchens produce significant food waste every day. Today, vision-based systems are being used to automatically measure and track that waste so it can be reduced.

These computer vision systems use cameras placed near preparation areas or smart waste bins to identify food, measure portion sizes, and track waste patterns. Multiple hotel chains and food service companies use this data to adjust menus, reduce waste, and cut costs.

Food quality grading is increasingly being automated using computer vision systems in food processing plants. As fruits, vegetables, and packaged items move along production lines, vision models can sort them based on size, color, ripeness, and surface defects using detection and classification. This reduces manual inspections, minimizes human error, and ensures that only high-quality food reaches customers, even when large volumes are processed daily.

Computer vision is quickly becoming a core part of cutting-edge production and operational systems. Core vision tasks, such as detection, tracking, segmentation, and classification, are now supporting applications across many industries, including healthcare, retail, agriculture, and autonomous vehicles. What is changing most is how scalable and practical these systems have become.

Want to dive deeper into AI? Join our growing community and learn more about computer vision on our GitHub repository. Check out solution pages and learn about AI in manufacturing and Vision AI in healthcare. Discover our licensing options to get started with Vision AI today!