Physical AI

Explore how Physical AI bridges digital intelligence and hardware. Learn how Ultralytics YOLO26 powers perception in robotics, drones, and autonomous systems.

Physical AI is the branch of artificial intelligence that bridges the gap between digital models and the
physical world, enabling machines to perceive their environment, reason about it, and execute tangible actions. Unlike
purely software-based AI, which processes data to generate text, images, or recommendations, Physical AI is embodied
in hardware systems (such as robots, drones, and autonomous vehicles) that interact directly with reality. This field
integrates computer vision, sensor fusion, and control theory to create systems capable of navigating
complex, unstructured environments safely and efficiently. By combining brain-like cognitive processing with body-like
physical capabilities, Physical AI is driving the next wave of automation in industries ranging from manufacturing to
healthcare.

The Convergence of Robotics and AI

The core of Physical AI lies in the integration of software intelligence with mechanical hardware.
Traditional robotics relied on rigid, pre-programmed instructions suited to repetitive tasks in controlled
settings. In contrast, modern Physical AI systems use machine learning and deep neural networks to
adapt to dynamic situations.

Key components enabling this convergence include:

-

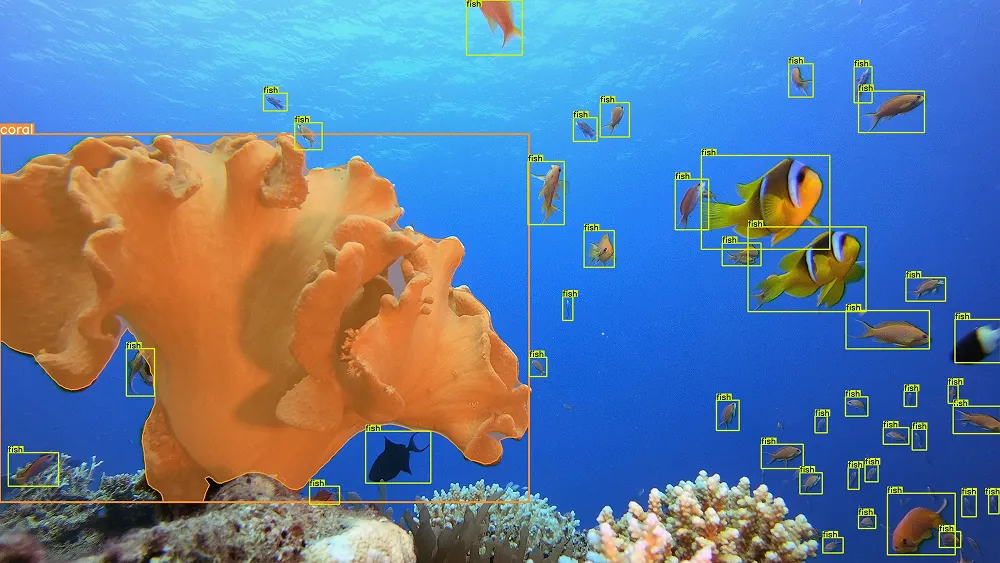

Perception: Systems use cameras and LiDAR to gather visual data, often processing it with

high-speed models like Ultralytics YOLO26 to identify

objects, obstacles, and humans in real-time.

-

Reasoning: The AI analyzes sensory input to make decisions, such as planning a path around a moving

obstacle or determining the best way to grasp a fragile object. This often involves

reinforcement learning where the agent

learns optimal behaviors through trial and error.

-

Actuation: The system translates decisions into physical movement, controlling motors and actuators

with precision. This closes the loop between

sensing and acting, allowing for responsive and dexterous manipulation.
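The three components above can be read as one control loop. The following is a minimal sketch of that loop, not a production controller: the `Detection` class, the distance thresholds, and the velocity values are hypothetical and chosen only for illustration, and the "actuation" step simply integrates a velocity instead of driving real motors.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    """Hypothetical perception output: an object label and its distance in meters."""
    label: str
    distance_m: float


def reason(detections, stop_distance_m=1.0):
    """Choose a forward velocity (m/s) based on the nearest detected object."""
    nearest = min((d.distance_m for d in detections), default=float("inf"))
    if nearest <= stop_distance_m:
        return 0.0  # obstacle too close: stop
    if nearest <= 3 * stop_distance_m:
        return 0.3  # obstacle nearby: slow down
    return 1.0  # path clear: cruise


def actuate(position_m, velocity_mps, dt_s=0.1):
    """Stand-in for a motor command: integrate velocity over one time step."""
    return position_m + velocity_mps * dt_s


def control_step(position_m, detections):
    """One pass through the perceive -> reason -> act loop."""
    velocity = reason(detections)
    return actuate(position_m, velocity), velocity
```

In a real system the detections would come from a vision model and the velocity would be sent to a motor controller, with the loop repeated many times per second.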

Real-World Applications

Physical AI is transforming sectors by enabling machines to perform tasks that were previously too complex or
dangerous for automation.

Autonomous Mobile Robots (AMRs) in Logistics

In modern warehousing, AI in logistics powers fleets of Autonomous Mobile Robots. Unlike traditional
automated guided vehicles (AGVs) that follow magnetic tape, AMRs use Physical AI to navigate freely. They use
Simultaneous Localization and Mapping (SLAM) to build maps of their environment and rely on
object detection to avoid forklifts and workers.
These robots can dynamically reroute based on congestion, optimizing the flow of goods without human intervention.
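To make the rerouting idea concrete, here is a small sketch that plans a path on an occupancy grid and replans when a cell becomes blocked. It assumes a simplified world: a 4-connected grid where `1` marks a blocked cell, and breadth-first search as the planner. The function name `shortest_path` is hypothetical, and real AMR fleets typically use richer maps and planners than this.

```python
from collections import deque


def shortest_path(grid, start, goal):
    """Breadth-first search on a 4-connected grid; 1 = blocked, 0 = free.

    Returns the list of (row, col) cells from start to goal, or None if
    the goal cannot be reached.
    """
    rows, cols = len(grid), len(grid[0])
    parents = {start: None}
    queue = deque([start])
    while queue:
        cell = queue.popleft()
        if cell == goal:
            # Walk the parent links back to the start, then reverse.
            path = []
            while cell is not None:
                path.append(cell)
                cell = parents[cell]
            return path[::-1]
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            inside = 0 <= nr < rows and 0 <= nc < cols
            if inside and grid[nr][nc] == 0 and (nr, nc) not in parents:
                parents[(nr, nc)] = cell
                queue.append((nr, nc))
    return None  # no route available


# Usage: plan, mark a congested cell as blocked, then plan again.
warehouse = [
    [0, 0, 0],
    [0, 0, 0],
    [0, 0, 0],
]
direct_route = shortest_path(warehouse, (0, 0), (0, 2))
warehouse[0][1] = 1  # e.g. a forklift now occupies this aisle cell
detour_route = shortest_path(warehouse, (0, 0), (0, 2))
```

Here the first plan runs straight along the top row, and the second plan detours through the middle row once the aisle cell is marked as occupied.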

Surgical Robotics in Healthcare

Physical AI is reshaping AI in healthcare through intelligent surgical
assistants. These systems provide surgeons with enhanced precision and control. By employing
computer vision to track surgical tools and vital organs, the AI can stabilize the surgeon's hand movements
or even automate specific suturing tasks. This collaboration between human expertise and machine precision
can reduce patient recovery times and help minimize surgical errors.
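As a toy illustration of what "stabilizing" a hand movement can mean, the sketch below applies an exponential moving average to a stream of 1-D position samples so that small, rapid jitter is damped. This is an assumption made for illustration only: the function name `smooth_motion` and the `alpha` value are hypothetical, and the source does not say which filtering method actual surgical systems use.

```python
def smooth_motion(samples, alpha=0.2):
    """Exponential moving average over 1-D position samples.

    A smaller alpha gives heavier smoothing (and more lag); alpha = 1
    returns the input unchanged.
    """
    if not 0 < alpha <= 1:
        raise ValueError("alpha must be in (0, 1]")
    smoothed = []
    estimate = None
    for sample in samples:
        if estimate is None:
            estimate = sample  # initialize with the first measurement
        else:
            estimate = alpha * sample + (1 - alpha) * estimate
        smoothed.append(estimate)
    return smoothed
```

The trade-off is responsiveness: heavier smoothing suppresses more jitter but also delays the response to intentional motion.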

Physical AI vs. Generative AI

It is important to distinguish Physical AI from Generative AI. While Generative AI focuses on
creating new digital content, such as text, code, or images, Physical AI focuses on interaction and
manipulation within the real world.

- Generative AI: Outputs digital artifacts (e.g., ChatGPT writing an email or Stable Diffusion
  creating art).
- Physical AI: Outputs physical actions (e.g., a robot arm sorting recycling or a drone inspecting a
  bridge).

However, these fields are increasingly intersecting. Recent developments in
multimodal AI allow robots to understand natural language commands (a generative capability) and
translate them into physical tasks, creating more intuitive human-machine interfaces.

Implementing Perception for Physical AI

A critical first step in building a Physical AI system is giving it the ability to "see." Developers often
use robust vision models to detect objects before passing that information to a control system. The
Ultralytics Platform simplifies the process of training these models for specific hardware deployment.

Here is a concise example of how a robot might use Python to perceive an object's position using a pre-trained model:

    from ultralytics import YOLO

    # Load the YOLO26 model (optimized for speed and accuracy)
    model = YOLO("yolo26n.pt")

    # Perform inference on a camera feed or image
    results = model("robot_view.jpg")

    # Extract bounding box coordinates for robot control
    for result in results:
        for box in result.boxes:
            # Get coordinates (x1, y1, x2, y2) to guide the robotic arm
            coords = box.xyxy[0].tolist()
            print(f"Object detected at: {coords}")
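A control system usually needs more than raw corner coordinates. As one possible next step, the sketch below converts an `(x1, y1, x2, y2)` box into the offset of its center from the image center, normalized to the range -1 to 1, which a controller could use as a steering or aiming error. The helper name `box_center_offset` and the image size in the usage lines are hypothetical and not part of the Ultralytics API.

```python
def box_center_offset(xyxy, image_width, image_height):
    """Return (dx, dy): the box center's offset from the image center.

    Each component is normalized to [-1, 1] for boxes inside the image;
    0 means the object is centered, positive dx means it lies to the
    right, and positive dy means it lies lower in the frame.
    """
    x1, y1, x2, y2 = xyxy
    center_x = (x1 + x2) / 2
    center_y = (y1 + y2) / 2
    dx = (center_x - image_width / 2) / (image_width / 2)
    dy = (center_y - image_height / 2) / (image_height / 2)
    return dx, dy


# Usage with a hypothetical 640x480 frame and one detected box.
dx, dy = box_center_offset([480.0, 0.0, 640.0, 480.0], 640, 480)
```

In this usage the box sits on the right edge of the frame at mid height, so `dx` is positive and `dy` is zero.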

Challenges and Future Outlook

Deploying Physical AI involves unique challenges compared to purely digital software.
AI safety is paramount; a software bug in a chatbot might produce text errors, but a bug in a
self-driving car or industrial robot could cause physical harm. Therefore,
rigorous model testing and simulation are essential.

Researchers are actively working on sim-to-real transfer, enabling
robots to learn in physics simulations before being deployed in the real world to reduce training risks. As
edge computing power increases, we can expect Physical AI devices to become more autonomous,
processing complex data locally without incurring the latency of a round trip to the cloud.
Innovations in neuromorphic engineering are also paving
the way for more energy-efficient sensors that mimic the biological eye, further enhancing the responsiveness of
physical agents.
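One technique commonly associated with sim-to-real transfer is domain randomization: varying simulator parameters between training episodes so a learned policy does not overfit to one idealized world. The source does not name this technique, so treat the following as an illustrative sketch. The parameter names and ranges are hypothetical and not tied to any particular simulator.

```python
import random


def sample_sim_params(rng):
    """Draw one randomized set of (hypothetical) simulator parameters."""
    return {
        "friction": rng.uniform(0.4, 1.2),
        "payload_kg": rng.uniform(0.0, 2.0),
        "camera_noise_std": rng.uniform(0.0, 0.05),
        "light_intensity": rng.uniform(0.5, 1.5),
    }


def make_episodes(num_episodes, seed=0):
    """Build a reproducible list of randomized episode configurations."""
    rng = random.Random(seed)  # seeded so experiments can be repeated
    return [sample_sim_params(rng) for _ in range(num_episodes)]


# Usage: each training episode would run with a different configuration.
episodes = make_episodes(5, seed=42)
```

Each dictionary would be passed to the simulator before an episode starts, so the policy sees a different combination of physics and sensor conditions each time.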