Learn how to improve model mAP on small objects with practical tips on data quality, augmentation, training strategies, evaluation, and deployment.

Learn how to improve model mAP on small objects with practical tips on data quality, augmentation, training strategies, evaluation, and deployment.

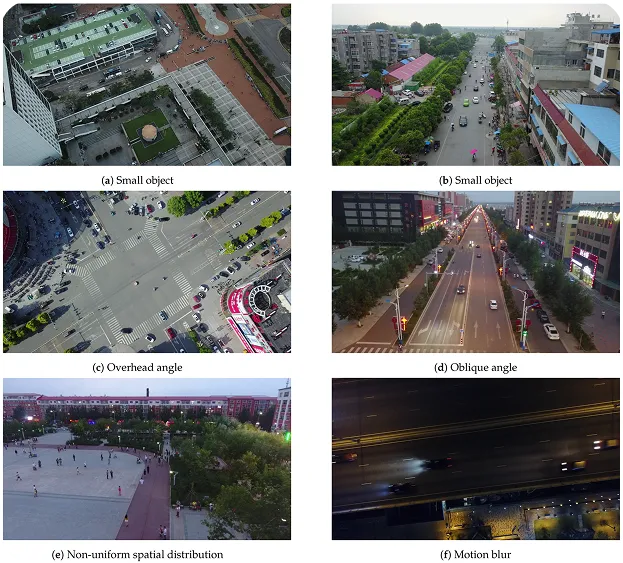

As artificial intelligence (AI), machine learning, and computer vision adoption continue to grow, object detection systems are being used everywhere, from smart traffic cameras to drones and retail analytics tools. Often, these systems are expected to detect objects of all sizes, whether it is a large truck close to the camera or a tiny pedestrian far in the distance.

Typically, spotting large and clearly visible objects is more straightforward. In contrast, detecting small objects is more challenging.

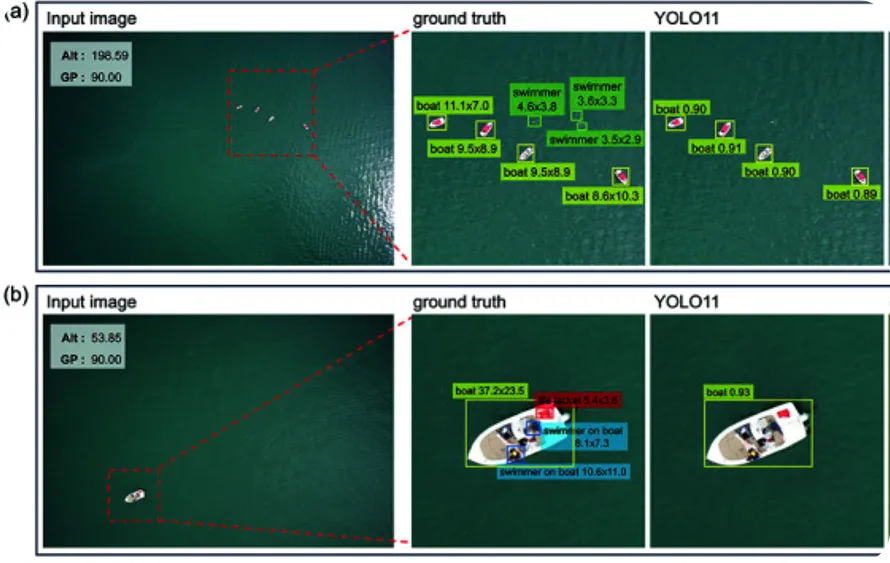

When an object occupies only a tiny portion of the image, there is very little visual information to work with. A distant pedestrian in a traffic feed or a small vehicle captured from an aerial view might contain only a few pixels, yet those pixels can carry critical information.

Computer vision models like Ultralytics YOLO models rely on visual patterns to recognize objects, and when those patterns are limited or unclear, performance suffers. Important details can be lost during processing, making predictions more sensitive to localization errors. Even a slight shift in a bounding box can turn a correct detection into a missed one.

This gap becomes clear when we look at model performance. Most detection and segmentation models handle medium and large objects well, but small objects often reduce overall accuracy.

Deep learning performance is typically measured using mean average precision, or mAP. This metric reflects both how accurate detections are and how well predicted boxes align with real objects.

It combines precision, which shows how many predicted objects are correct, and recall, which shows how many actual objects are successfully detected, across different confidence levels and Intersection over Union, or IoU (a metric that measures how much the predicted bounding box overlaps with the ground truth box) thresholds.

Previously, we’ve explored small object detection and why it’s such a tough problem for computer vision models. In this article, we'll build on that foundation and focus on how to improve mAP when small objects are involved. Let's get started!

When it comes to applications involving object detectors, a small object is defined by how much space it takes up in an image, not necessarily by how small it looks to the human eye. If it occupies only a tiny portion of the image, it contains very little visual information, which makes it harder for a computer vision algorithm to detect accurately.

With fewer pixels to work with, important details such as edges, shapes, and textures can be unclear or easily lost. As the image is processed by the model, it is resized and simplified to highlight useful patterns.

While this helps the model understand the overall scene, it can also reduce fine details even further. For small objects, those details are often essential for correct detection.

These challenges become even more apparent when looking at evaluation metrics. Small objects are especially sensitive to localization errors. Even a slightly misaligned bounding box can fall below the required Intersection over Union, or IoU, threshold.

When that happens, a prediction that looks reasonable may be counted as incorrect. This lowers both precision and recall, which ultimately reduces mean average precision, or mAP.

Because these factors are closely connected, improving performance often requires thinking about the entire system. That means carefully balancing image resolution, feature extraction, model design, and evaluation settings so that small visual details are better preserved and interpreted.

When it comes to small object detection, the quality of a dataset often makes the biggest difference in performance. Small objects occupy only a tiny portion of an image, which means there is very little visual information available for the model to learn from. Because of this, the training data becomes especially important. If the dataset doesn’t include enough clear and representative examples, the object detection model will struggle to recognize consistent patterns.

Datasets that work well for small object detection usually contain high-resolution images, frequent appearances of small targets, and consistent visual conditions. While generic datasets such as the COCO dataset are useful starting points, they often don’t match the scale, density, or context of specific real-world use cases. In such cases, collecting domain-specific training data becomes necessary to improve model performance.

Annotation quality also plays a critical role. Annotations establish the ground truth by specifying the correct object labels and bounding box locations that the model learns to predict.

For small objects, bounding boxes must be drawn carefully and consistently. Even slight differences in box placement can noticeably affect localization accuracy because small objects are highly sensitive to pixel-level shifts.

Poor or inconsistent annotations can significantly reduce mAP. If objects are mislabeled, the model learns incorrect patterns, which can increase false positives.

If objects appear in the image but are missing from the ground truth, correct detections may be counted as false positives during evaluation. Both situations lower overall performance.

Interestingly, recent research indicates that average precision for small objects often remains between 20% and 40% on standard benchmarks, which is significantly lower than for larger objects. This gap highlights the importance of dataset design and annotation consistency in overall detection accuracy.

With a better understanding of the importance of dataset quality and annotation consistency, let’s walk through how an object detection model can learn more effectively from existing data. Even when collecting additional images is difficult or expensive, there are still ways to improve performance by making better use of the data already available.

One of the most practical approaches is data augmentation. It has an especially important role in small object detection because small objects provide fewer visual cues for the model to learn from. By introducing controlled variations during training, augmentation helps the model generalize better without requiring new data collection.

Effective data augmentation focuses on keeping small objects clearly visible. Techniques like controlled resizing, light cropping, and image tiling can make small objects stand out more while preserving their shape and appearance. The goal is to help the model see small objects more often and under slightly different conditions, without changing what they look like in real situations.

However, augmentation needs to be applied carefully. Some transformations can reduce the visibility of small objects or change their appearance in ways that are unlikely to occur in real data. When this happens, the model may struggle to learn accurate object boundaries.

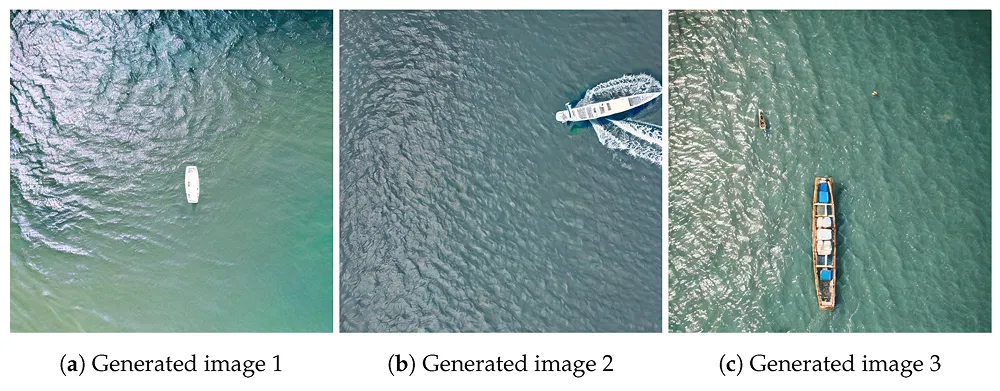

Another interesting type of data augmentation becoming more popular is the use of generative AI to create synthetic training data. Instead of relying on manually collected and labeled images, teams can now generate realistic scenes that simulate specific environments, object sizes, lighting conditions, and background variations.

This approach is particularly useful for small object detection, where real-world examples may be difficult to capture consistently. By controlling how small objects appear in synthetic images, such as adjusting scale, density, and placement, it is possible to expose models to a broader range of training scenarios.

When combined carefully with real data, synthetic augmentation can improve model robustness, reduce data collection costs, and support more targeted performance improvements.

Other than dataset quality and annotation consistency, model training choices also have a strong impact on small object detection performance.

Here are some of the key training strategies to consider:

While you can use a general object detection model for small object tasks, there are also model architectures designed specifically to improve small object detection. For instance, there are P2 model variants of the Ultralytics YOLOv8 model that are optimized for preserving fine spatial detail.

YOLOv8 processes images at multiple scales by gradually shrinking them as they move deeper through the network. This helps the model understand the overall scene, but it also reduces fine details.

When an object is already very small, important visual information can disappear during this process. The P2 variant of Ultralytics YOLOv8 addresses this by using a stride of 2 in its feature pyramid.

A feature pyramid is the part of the model that analyzes the image at multiple internal resolutions so it can detect objects of different sizes. With a stride of 2, the image is reduced more gradually at this stage, allowing more of the original pixel-level detail to be preserved.

Because more spatial detail is preserved, small objects retain more visible structure inside the network. This makes it easier for the model to localize and detect objects that occupy only a few pixels, which can help improve small object mAP.

While mean average precision summarizes overall model performance, it doesn’t always show how well a model handles objects of different sizes. For small objects, performance is often constrained by localization accuracy rather than classification alone, meaning slight bounding box shifts can significantly affect results.

In other words, the model may correctly identify the object’s class, but if the predicted bounding box is slightly misaligned, the detection may still be considered incorrect. Because small objects cover only a small number of pixels, even a minor shift in box placement can significantly reduce the overlap between the predicted box and the ground truth. As a result, evaluation scores can drop even when the object was identified correctly.

A more informative approach is to evaluate performance by object size. Most widely used benchmarks report average precision separately for small, medium, and large objects.

This size-specific breakdown provides a clearer view of where the model performs well and where it struggles. In practice, small-object AP often lags behind overall mAP, highlighting localization challenges that may not be obvious in aggregated metrics.

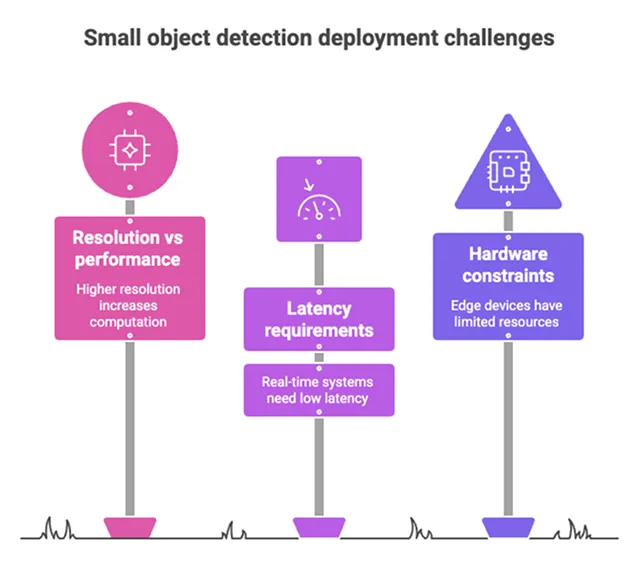

Model performance often changes when moving from controlled testing environments to real-world deployment. Factors such as image resolution, processing speed, and available hardware introduce trade-offs that directly affect small object detection.

For example, increasing input resolution can improve small object mAP because small targets occupy more pixels and retain more detail. However, higher resolution also increases memory usage and processing time. This can slow down inference and raise operational costs.

Hardware choices play a key role in managing these trade-offs. More powerful GPUs allow larger models and faster processing, but deployment environments, especially edge devices, often have limited compute and memory resources.

Real-time applications add another constraint: maintaining low latency may require reducing model size or input resolution, which can negatively impact small object recall. Ultimately, deployment decisions require balancing detection performance with hardware limitations, speed requirements, and overall cost.

Improving small object detection takes a practical and structured approach, especially when working in real-world environments. Here’s an overview of the main steps to keep in mind:

Improving mAP for small objects takes a structured, data-driven approach instead of random tweaks. Real improvements come from combining good data, consistent annotations, careful training, and the right evaluation methods. In real-world projects, steady testing and small, measurable changes are what lead to better and more reliable small object detection over time.

Join our growing community and explore our GitHub repository for hands-on AI resources. To build with vision AI today, explore our licensing options. Learn how AI in agriculture is transforming farming and how vision AI in robotics is shaping the future by visiting our solutions pages.