Speculative Decoding

Speculative decoding is an optimization technique used primarily in Large Language Models (LLMs) and other sequential generation tasks to significantly accelerate inference without compromising output quality. In traditional autoregressive generation, a model produces one token at a time, and each step must wait for the previous one to complete. This process can be slow, especially on powerful hardware where memory bandwidth, rather than computation speed, often becomes the bottleneck: every step reads the full set of model weights to produce just one token. Speculative decoding addresses this by using a smaller, faster "draft" model to quickly propose a short sequence of future tokens, which the larger, more accurate "target" model then verifies in a single parallel forward pass. When the drafted tokens are accepted, the system commits multiple tokens at once, effectively leaping forward in the generation process.

How Speculative Decoding Works

The core mechanism relies on the observation that many tokens in a sequence, such as function words like "the" and "and" or obvious completions, are easy to predict and do not require the full computational power of a massive model. By offloading these easy predictions to a lightweight draft model, the system reduces the number of sequential forward passes the heavy target model must run, because one verification pass can commit several tokens instead of one.
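How much work this saves can be estimated with a simplified model often used in analyses of speculative decoding: assume each drafted token is accepted independently with probability `alpha`, and the drafter proposes `gamma` tokens per round. The target model then commits, on average, 1 + alpha + ... + alpha^gamma = (1 - alpha^(gamma+1)) / (1 - alpha) tokens per forward pass, which is the accepted prefix plus the one token it always contributes itself. The independence assumption, the function name, and the parameter values below are illustrative, not measurements.

```python
def expected_tokens_per_target_pass(alpha: float, gamma: int) -> float:
    """Expected tokens committed per target-model forward pass.

    Illustrative assumption: each drafted token is accepted independently
    with probability `alpha`, and `gamma` tokens are drafted per round.
    """
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must be in [0, 1]")
    if gamma < 0:
        raise ValueError("gamma must be non-negative")
    if alpha == 1.0:
        # Every draft is accepted: gamma drafted tokens plus one from the target
        return float(gamma + 1)
    # Geometric series 1 + alpha + alpha**2 + ... + alpha**gamma
    return (1.0 - alpha ** (gamma + 1)) / (1.0 - alpha)


for alpha in (0.6, 0.8, 0.9):
    print(alpha, round(expected_tokens_per_target_pass(alpha, gamma=4), 2))
```

For example, with `gamma=4` and `alpha=0.8`, each target pass yields about 3.36 tokens. This counts target passes only; real wall-clock speedups are lower because running the draft model is not free.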

When the target model reviews the drafted sequence, it scores all drafted positions in a single parallel verification pass. Because GPUs are highly optimized for batched processing, and decoding is usually limited by memory bandwidth rather than compute, checking five drafted tokens at once takes roughly the same time as generating a single token. If the target model agrees with the draft, those tokens are finalized. At the first position where it disagrees, the rest of the draft is discarded, the target model's own token is inserted instead, and the process repeats from there. With greedy decoding, the final output is identical to what the target model would have produced on its own; with sampling, a probabilistic acceptance rule guarantees that the output follows exactly the target model's distribution. Speculative decoding therefore preserves accuracy while boosting speed by 2x to 3x in many scenarios.
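The draft, verify, and correct loop can be sketched in plain Python. This is a minimal illustration, not a serving-framework implementation: the "models" are hypothetical toy functions (`toy_target`, `toy_draft`) that return a greedy next token for a given context, and verification calls the target once per drafted position for readability, whereas a real system scores all positions in one batched forward pass.

```python
from typing import Callable, List

# A "model" here is any function mapping a token context to its greedy next token.
NextTokenFn = Callable[[List[int]], int]


def speculative_greedy_decode(target: NextTokenFn, draft: NextTokenFn,
                              prompt: List[int], max_new_tokens: int,
                              gamma: int = 4) -> List[int]:
    """Greedy speculative decoding: draft `gamma` tokens, verify, correct, repeat."""
    tokens = list(prompt)
    goal = len(prompt) + max_new_tokens
    while len(tokens) < goal:
        # 1. Draft: the cheap model proposes gamma tokens autoregressively.
        drafted: List[int] = []
        for _ in range(gamma):
            drafted.append(draft(tokens + drafted))

        # 2. Verify: compare each draft with the target's own greedy choice.
        #    (A real system computes all of these in ONE batched target pass.)
        committed: List[int] = []
        for tok in drafted:
            choice = target(tokens + committed)
            committed.append(choice)  # equals tok when the draft is accepted
            if choice != tok:
                break  # first mismatch: keep the correction, drop the rest
        else:
            # Every draft was accepted; the same pass yields one bonus token.
            committed.append(target(tokens + committed))

        tokens.extend(committed)
    return tokens[:goal]  # the last round may overshoot the requested length


def greedy_decode(model: NextTokenFn, prompt: List[int],
                  max_new_tokens: int) -> List[int]:
    """Reference: plain one-token-at-a-time greedy decoding."""
    tokens = list(prompt)
    for _ in range(max_new_tokens):
        tokens.append(model(tokens))
    return tokens


def toy_target(ctx: List[int]) -> int:
    # Hypothetical deterministic "target model" over a 10-token vocabulary.
    return (3 * ctx[-1] + len(ctx)) % 10


def toy_draft(ctx: List[int]) -> int:
    # Hypothetical "draft model": agrees with the target except when the
    # context length is a multiple of 5, where it guesses wrong.
    guess = toy_target(ctx)
    return (guess + 1) % 10 if len(ctx) % 5 == 0 else guess


prompt = [1, 2]
print(speculative_greedy_decode(toy_target, toy_draft, prompt, max_new_tokens=12))
print(greedy_decode(toy_target, prompt, max_new_tokens=12))
```

Because every committed token is either a draft the target agreed with or the target's own correction, the two printed sequences match regardless of draft quality; a weaker drafter only means fewer tokens accepted per round.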

Real-World Applications

This technique is changing how generative AI is deployed, particularly where latency is critical.

- Real-Time Code Completion: In integrated development environments (IDEs), AI coding assistants must provide suggestions almost instantly as a developer types. Speculative decoding lets these assistants draft entire lines of code with a small model while a large foundation model verifies the drafted tokens in a single pass, keeping only what it would have generated itself. The result is a snappy, seamless experience in which suggestions keep pace with typing instead of lagging behind a server response.

- Interactive Chatbots on Edge Devices: Running powerful LLMs on smartphones or laptops is challenging due to limited hardware resources. With speculative decoding, a device can run a tiny, quantized model locally to draft responses and send each drafted block to a larger model (either cloud-based or a heavier local model) for verification. This hybrid approach can deliver high-quality virtual assistant interactions with less lag, making edge AI more viable for complex tasks.

Relationship to Other Concepts

It is important to distinguish speculative decoding from similar optimization strategies.

- Model Quantization: Quantization reduces the precision of model weights (e.g., from FP16 to INT8) to save memory and speed up computation, but it permanently alters the model and may slightly degrade accuracy. Speculative decoding, by contrast, does not change the target model's weights and guarantees the same output distribution as the target model alone.

- Knowledge Distillation: Distillation trains a smaller student model to mimic a larger teacher model, and the student then replaces the teacher at deployment time. In speculative decoding, the small model (drafter) and large model (verifier) work in tandem during inference, rather than one replacing the other.

Implementation Example

Speculative decoding is usually built into inference and serving frameworks rather than written by hand, but its verification step is easy to illustrate. Below is a conceptual example using PyTorch that shows how a larger target model might score a sequence of drafted candidate tokens in a single forward pass, as in the verification step of speculative decoding.

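import torch

def verify_candidate_sequence(model, input_ids, candidate_ids):
    """Simulates the verification step where a target model checks candidate tokens.

    Assumes `model` maps a (batch, seq_len) tensor of token IDs to
    (batch, seq_len, vocab_size) logits, and uses greedy decoding for simplicity.
    For Hugging Face transformers models, use model(full_sequence).logits instead.
    """
    # Concatenate input with candidates for parallel processing
    full_sequence = torch.cat([input_ids, candidate_ids], dim=1)

    with torch.no_grad():
        logits = model(full_sequence)  # Single forward pass for all tokens

    # Logits at position i predict token i + 1, so the predictions for the
    # candidate positions start at the last input token
    start = input_ids.shape[1] - 1
    predictions = torch.argmax(logits[:, start:-1, :], dim=-1)

    # Accept candidates up to the first mismatch (batch size 1 assumed)
    matches = (predictions == candidate_ids).long()
    num_accepted = int(torch.cumprod(matches, dim=1).sum())
    return predictions, num_accepted

# Example tensor setup (conceptual; my_model is a placeholder for any causal
# language model that returns logits of shape (batch, seq_len, vocab_size))
# input_ids = torch.tensor([[101, 2054, 2003]])
# candidate_ids = torch.tensor([[1037, 3024]])
# predictions, num_accepted = verify_candidate_sequence(my_model, input_ids, candidate_ids)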
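The example above covers greedy verification. When tokens are sampled, the distribution guarantee comes from the acceptance rule described in the speculative sampling literature: a drafted token x, proposed from the draft distribution q, is accepted with probability min(1, p(x)/q(x)), where p is the target distribution; on rejection, a replacement is drawn from the normalized residual max(0, p(x) - q(x)). The sketch below is plain Python with hypothetical function names and toy distributions: `speculative_sample_step` performs one step of the rule, and `speculative_output_distribution` computes its exact output probabilities so you can check that they equal p.

```python
import random
from fractions import Fraction


def speculative_sample_step(p, q, rng):
    """One speculative sampling step: draft from q, then accept or correct toward p."""
    vocab = range(len(p))
    x = rng.choices(vocab, weights=q)[0]            # draft model proposes token x
    if rng.random() < min(1, p[x] / q[x]):          # accept with prob min(1, p/q)
        return x
    residual = [max(px - qx, 0) for px, qx in zip(p, q)]
    return rng.choices(vocab, weights=residual)[0]  # resample from max(0, p - q)


def speculative_output_distribution(p, q):
    """Exact probability that speculative_sample_step returns each token."""
    accepted = [min(px, qx) for px, qx in zip(p, q)]  # q(x) * min(1, p(x)/q(x))
    reject_prob = 1 - sum(accepted)
    residual = [max(px - qx, 0) for px, qx in zip(p, q)]
    residual_total = sum(residual)
    return [
        a + (reject_prob * r / residual_total if residual_total else 0)
        for a, r in zip(accepted, residual)
    ]


# Toy target (p) and draft (q) distributions over a 3-token vocabulary.
p = [Fraction(1, 2), Fraction(3, 10), Fraction(1, 5)]
q = [Fraction(1, 5), Fraction(3, 5), Fraction(1, 5)]
print(speculative_output_distribution(p, q) == p)

rng = random.Random(0)
samples = [speculative_sample_step(p, q, rng) for _ in range(20000)]
print([samples.count(i) / len(samples) for i in range(3)])
```

Exact fractions are used so the equality check is not affected by floating-point rounding; the sampled frequencies should land close to p = (0.5, 0.3, 0.2) even though the draft distribution q is quite different.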

Impact on Future AI Development

As models continue to grow, inference is increasingly limited by the gap between compute capability and memory bandwidth, often called the "memory wall." Speculative decoding helps bridge this gap by raising arithmetic intensity: each time the target model's weights are read from memory, they are used to score several tokens instead of one. This efficiency matters for the sustainable deployment of generative AI at scale, where it can reduce both energy consumption and operational costs.
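A back-of-envelope calculation shows why reusing each weight read matters. The figures below are hypothetical: a 7-billion-parameter target model stored in FP16 (2 bytes per parameter) on hardware with 1,000 GB/s of memory bandwidth, and an illustrative 3.36 tokens committed per verification pass. In the memory-bound regime, each forward pass must stream all weights at least once, which puts a floor under the time per pass; committing several tokens per pass divides that floor among them. The sketch ignores KV-cache traffic, activations, compute limits, and the draft model's own cost.

```python
def memory_bound_ms_per_token(params: float, bytes_per_param: float,
                              bandwidth_gb_per_s: float,
                              tokens_per_pass: float = 1.0) -> float:
    """Lower bound on target-model time per generated token, in milliseconds,
    assuming every forward pass must read all weights from memory once."""
    bytes_per_pass = params * bytes_per_param
    seconds_per_pass = bytes_per_pass / (bandwidth_gb_per_s * 1e9)
    return 1000.0 * seconds_per_pass / tokens_per_pass


# Hypothetical figures: 7B parameters, FP16 weights, 1,000 GB/s bandwidth.
plain = memory_bound_ms_per_token(7e9, 2, 1000)
speculative = memory_bound_ms_per_token(7e9, 2, 1000, tokens_per_pass=3.36)
print(f"{plain:.1f} ms/token vs {speculative:.1f} ms/token")
```

Under these assumptions, plain decoding needs at least 14 ms per token for weight reads alone, versus roughly 4.2 ms per token when each pass commits 3.36 tokens.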

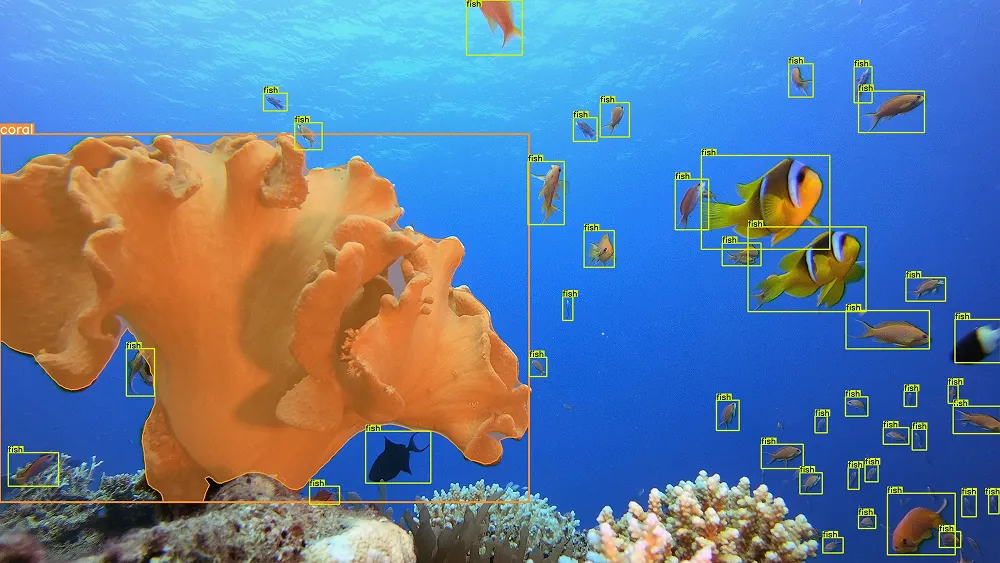

Researchers are currently exploring ways to apply similar speculative principles to computer vision tasks. For instance, in video generation, a lightweight model could draft future frames that are subsequently refined by a high-fidelity diffusion model. As frameworks like PyTorch and TensorFlow add native support for these optimizations, developers can expect lower inference latency across a wider range of modalities, from text to complex visual data processed by advanced architectures like Ultralytics YOLO26.

For those managing the lifecycle of such models, tools like the Ultralytics Platform help keep the underlying datasets and training pipelines robust, providing a solid foundation for advanced inference techniques. Whether you are working with large language models or state-of-the-art object detection, optimizing the inference pipeline remains a key step in moving from prototype to production.