Video Generation

Explore the world of AI video generation. Learn how diffusion models create synthetic footage and how to analyze clips using Ultralytics YOLO26 for computer vision.

Video Generation refers to the process by which artificial intelligence models create synthetic video sequences from various input modalities, such as text prompts, images, or existing video footage. Unlike image segmentation or object detection, which analyze visual data, video generation focuses on synthesizing new pixels across a temporal dimension. This technology leverages advanced deep learning (DL) architectures to predict and construct frames that maintain visual coherence and logical motion continuity over time. Recent advancements in 2025 have pushed these capabilities further, allowing for the creation of high-definition, photorealistic videos that are increasingly difficult to distinguish from real-world footage.

How Video Generation Works

The core mechanism behind modern video generation typically involves diffusion models or sophisticated transformer-based architectures. These models learn the statistical distribution of video data from massive datasets containing millions of video-text pairs. During the generation phase, the model starts with random noise and iteratively refines it into a structured video sequence, guided by the user's input.
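
The iterative refinement described above can be illustrated with a toy sampler. The sketch below is not a real video model. It assumes a hypothetical `oracle_noise_predictor` that already knows the clean clip (in practice a trained, prompt-conditioned network would play this role), a made-up linear noise schedule, and a tiny "clip" of four frames with six pixels each. It applies a deterministic DDIM-style update purely to show random noise being refined step by step into a structured sequence.

```python
import math
import random


def make_clean_clip(num_frames=4, width=6):
    """Hypothetical target clip: one bright pixel that moves right each frame."""
    return [[1.0 if x == t else 0.0 for x in range(width)] for t in range(num_frames)]


def oracle_noise_predictor(noisy, clean, alpha_bar):
    """Stand-in for a trained denoising network (assumption: it knows the clean clip)."""
    signal, noise = math.sqrt(alpha_bar), math.sqrt(1.0 - alpha_bar)
    return [[(n - signal * c) / noise for n, c in zip(nf, cf)] for nf, cf in zip(noisy, clean)]


def mean_abs_error(a, b):
    """Average per-pixel distance between two clips."""
    diffs = [abs(x - y) for fa, fb in zip(a, b) for x, y in zip(fa, fb)]
    return sum(diffs) / len(diffs)


def generate(clean, steps=10, seed=0):
    """Refine random noise into a clip, recording the error after every step."""
    rng = random.Random(seed)
    # Assumed schedule: alpha_bar rises from 0.01 (almost pure noise) to 1.0 (clean).
    alpha_bars = [0.01 + 0.99 * i / steps for i in range(steps)] + [1.0]
    # Start from Gaussian noise with the same shape as the clip.
    clip = [[rng.gauss(0.0, 1.0) for _ in frame] for frame in clean]
    errors = [mean_abs_error(clip, clean)]
    for ab_now, ab_next in zip(alpha_bars[:-1], alpha_bars[1:]):
        eps = oracle_noise_predictor(clip, clean, ab_now)
        new_clip = []
        for frame, eps_frame in zip(clip, eps):
            new_frame = []
            for x, e in zip(frame, eps_frame):
                # Estimate the clean pixel, then re-noise it to the next (lower) noise level.
                x0_pred = (x - math.sqrt(1.0 - ab_now) * e) / math.sqrt(ab_now)
                new_frame.append(math.sqrt(ab_next) * x0_pred + math.sqrt(1.0 - ab_next) * e)
            new_clip.append(new_frame)
        clip = new_clip
        errors.append(mean_abs_error(clip, clean))
    return clip, errors


final_clip, errors = generate(make_clean_clip())
print(f"error at start: {errors[0]:.3f}, error at end: {errors[-1]:.6f}")
```

Because the predictor here is an oracle, the final clip matches the target up to floating-point error. A learned network only approximates the noise, which is why real samplers need many steps and careful conditioning.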

Key components of this workflow include:

- Temporal Attention: To ensure smooth motion, models utilize attention mechanisms that reference previous and future frames. This prevents the "flickering" effect often seen in early generative AI attempts.

- Space-Time Modules: Architectures often employ 3D convolutions or specialized transformers that process spatial data (what is in the frame) and temporal data (how it moves) simultaneously.

- Conditioning: The generation is conditioned on inputs like text prompts (e.g., "a cat running in a meadow") or initial images, similar to how text-to-image models function but with an added time axis.
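
To make the temporal attention idea concrete, the following sketch applies plain scaled dot-product self-attention along the time axis for a single pixel location. It is a simplified illustration under stated assumptions: the per-frame features serve directly as queries, keys, and values (real models use learned projections, which are omitted here), and the function name `temporal_self_attention` is hypothetical. Each output frame is a weighted mix of all frames, both earlier and later, which in this toy case damps frame-to-frame flicker.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def temporal_self_attention(frames):
    """Attend across time for one spatial location.

    frames: list of T feature vectors, one per frame.
    Assumption: features act as queries, keys, and values (no learned projections).
    """
    dim = len(frames[0])
    outputs = []
    for query in frames:
        # Similarity of this frame to every frame in the clip, past and future.
        scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(dim) for key in frames]
        weights = softmax(scores)
        # Weighted average of all frames' features.
        outputs.append([sum(w * v[d] for w, v in zip(weights, frames)) for d in range(dim)])
    return outputs


flickering = [[1.0], [0.2], [1.0], [0.2]]  # brightness alternating between frames
smoothed = temporal_self_attention(flickering)
print([round(v[0], 3) for v in smoothed])
```

The brightness swing between neighboring frames is smaller in `smoothed` than in `flickering`, because every output is an average over the whole clip.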

Real-World Applications

Video generation is rapidly transforming industries by automating content creation and enhancing digital experiences.

- Entertainment and Filmmaking: Studios use generative AI to create storyboards, visualize scenes before filming, or generate background assets. This can significantly reduce production costs and allows for rapid iteration of visual concepts.

- Autonomous Vehicle Simulation: Training self-driving cars requires diverse driving scenarios. Video generation can create synthetic data representing rare or dangerous edge cases, such as pedestrians suddenly crossing a dark road, which are difficult to capture safely in the real world. This synthetic footage can then be used to train robust object detection models like Ultralytics YOLO.

Distinguishing Video Generation from Text-to-Video

Although the two terms are often used interchangeably, Video Generation is the broader category. Its main variants differ in the input they start from:

- Text-to-Video: A specific subset where the input is exclusively a natural language prompt.

- Video-to-Video: A process where an existing video is restyled or altered (e.g., turning a video of a person into a claymation animation).

- Image-to-Video: Generating a moving clip from a single static image, such as a photograph.

Video Analysis vs. Video Generation

It is crucial to differentiate between generating pixels and analyzing them. While generation

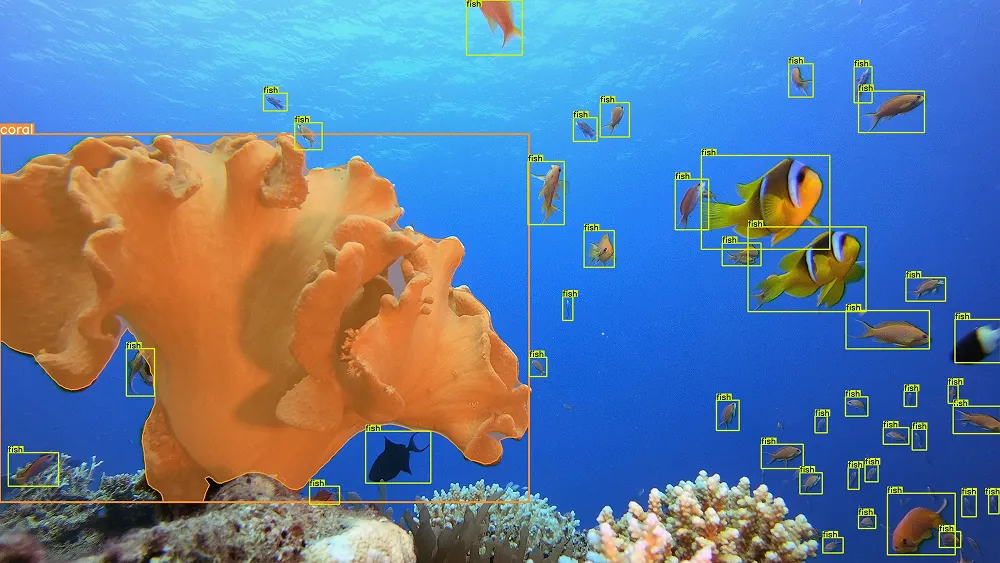

creates content, analysis extracts insights. For instance, after generating a synthetic training video, a developer

might use Ultralytics YOLO26 to verify that objects are

correctly identifiable.

The following example demonstrates how to use the ultralytics package to track objects within a generated video file, ensuring the synthesized content contains recognizable entities.

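```python
from ultralytics import YOLO

# Load the YOLO26n model for efficient analysis
model = YOLO("yolo26n.pt")

# Track objects in a video file (e.g., a synthetic video)
# 'stream=True' returns a generator, which is memory-efficient for long video sequences
results = model.track(source="generated_clip.mp4", stream=True)

for result in results:
    # Process results (e.g., inspect the boxes and track IDs found in each frame)
    boxes = result.boxes
    # boxes.id is None when no tracks are active in the frame
    track_ids = boxes.id.int().tolist() if boxes.id is not None else []
    print(f"{len(boxes)} objects detected, track IDs: {track_ids}")
```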
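
As one possible follow-up to the tracking loop, the per-frame track IDs can be summarized to sanity-check a generated clip. The sketch below assumes the IDs have already been collected into plain Python lists (for example from `result.boxes.id`, which can be `None` on frames where nothing is tracked). The helper names `track_lifetimes` and `short_lived_tracks` and the `min_frames` threshold are illustrative, and treating short-lived tracks as a sign of unstable synthesis is a heuristic assumption rather than an established metric.

```python
from collections import Counter


def track_lifetimes(ids_per_frame):
    """Count the number of frames in which each track ID appears."""
    counts = Counter()
    for frame_ids in ids_per_frame:
        counts.update(set(frame_ids))  # count each ID at most once per frame
    return dict(counts)


def short_lived_tracks(ids_per_frame, min_frames=3):
    """Return the sorted IDs seen in fewer than `min_frames` frames."""
    lifetimes = track_lifetimes(ids_per_frame)
    return sorted(i for i, n in lifetimes.items() if n < min_frames)


# Hypothetical IDs from a 5-frame clip: object 1 persists, object 2 appears only once.
ids_per_frame = [[1], [1, 2], [1], [1], [1]]
print(track_lifetimes(ids_per_frame))     # {1: 5, 2: 1}
print(short_lived_tracks(ids_per_frame))  # [2]
```

A clip in which most tracks are short-lived may deserve a manual look before it is added to a training set.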

Challenges and Future Outlook

Despite impressive progress, video generation faces hurdles regarding computational costs and AI ethics. Generating high-resolution video requires significant GPU resources, often necessitating optimization techniques like model quantization to be feasible for broader use. Additionally, the potential for creating deepfakes raises concerns about misinformation, prompting researchers to develop watermarking and detection tools.
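
To give a sense of what model quantization does, here is a minimal sketch of symmetric 8-bit quantization applied to a small list of weights. It illustrates the general idea only. The function names are hypothetical, and production toolchains use more elaborate schemes (such as per-channel scales and calibration data) that are not shown.

```python
def quantize_int8(weights):
    """Map floats to integers in [-127, 127] using one shared scale."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    return [round(w / scale) for w in weights], scale


def dequantize(q_weights, scale):
    """Recover approximate float values from the stored integers."""
    return [q * scale for q in q_weights]


weights = [0.5, -1.27, 0.003, 1.0]  # made-up example values
q_weights, scale = quantize_int8(weights)
restored = dequantize(q_weights, scale)
worst_error = max(abs(w - r) for w, r in zip(weights, restored))
print(q_weights, f"scale={scale:.4f}", f"worst error={worst_error:.4f}")
```

Each weight can then be stored in one byte instead of the four bytes of a 32-bit float, at the cost of a rounding error of at most half the scale per weight.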

As the field evolves, we expect tighter integration between generation and analysis tools. For example, using the Ultralytics Platform to manage datasets of generated videos could streamline the training of next-generation computer vision models, creating a virtuous cycle where AI helps train AI. Researchers at organizations like Google DeepMind and OpenAI continue to push the boundaries of temporal consistency and physics simulation in generated content.