Vision Language Model (VLM)

Explore Vision Language Models (VLM) with Ultralytics. Learn how they bridge computer vision and LLMs for VQA and open-vocabulary detection using Ultralytics YOLO26.

A Vision Language Model (VLM) is a type of artificial intelligence that can process and interpret both visual information (images or video) and textual information simultaneously. Unlike traditional computer vision models that focus solely on pixel data, or Large Language Models (LLMs) that only understand text, VLMs bridge the gap between these two modalities. By training on massive datasets containing image-text pairs, these models learn to associate visual features with linguistic concepts, allowing them to describe images, answer questions about visual scenes, and even execute commands based on what they "see."

How Vision Language Models Work

At their core, VLMs typically consist of two main components: a vision encoder and a text encoder. The vision encoder processes images to extract feature maps and visual representations, while the text encoder handles the linguistic input. These distinct streams of data are then fused using mechanisms like cross-attention to align the visual and textual information in a shared embedding space.
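The fusion step described above can be sketched with plain NumPy. This is a minimal, single-head illustration of cross-attention, not the implementation of any particular VLM: the encoder outputs are random stand-ins, and the names `cross_attention`, `w_q`, `w_k`, and `w_v` are hypothetical. Each text token forms a query, scores every image patch, and receives a weighted mix of patch features.

```python
import numpy as np


def softmax(x, axis=-1):
    """Numerically stable softmax along the given axis."""
    shifted = x - x.max(axis=axis, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=axis, keepdims=True)


def cross_attention(text_tokens, image_patches, w_q, w_k, w_v):
    """Let each text token attend over all image patches.

    text_tokens:   (num_tokens, dim)  stand-in for text encoder output
    image_patches: (num_patches, dim) stand-in for vision encoder output
    """
    queries = text_tokens @ w_q           # (num_tokens, dim)
    keys = image_patches @ w_k            # (num_patches, dim)
    values = image_patches @ w_v          # (num_patches, dim)
    scores = queries @ keys.T / np.sqrt(keys.shape[-1])
    weights = softmax(scores, axis=-1)    # each row sums to 1
    fused = weights @ values              # (num_tokens, dim)
    return fused, weights


# Random placeholders instead of real encoder outputs (assumption for illustration).
rng = np.random.default_rng(0)
dim, num_tokens, num_patches = 8, 4, 16
text_tokens = rng.normal(size=(num_tokens, dim))
image_patches = rng.normal(size=(num_patches, dim))
w_q, w_k, w_v = (rng.normal(size=(dim, dim)) for _ in range(3))

fused, weights = cross_attention(text_tokens, image_patches, w_q, w_k, w_v)
print(fused.shape, weights.shape)
```

In a trained model the projection matrices are learned, so the attention weights end up highlighting the patches most relevant to each word.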

Recent advancements in 2024 and 2025 have moved towards more unified architectures where a single transformer backbone handles both modalities. For instance, models like Google PaliGemma 2 demonstrate how tightly integrating these streams can improve performance on complex reasoning tasks. This alignment allows the model to understand context, such as recognizing that the word "apple" refers to a fruit in a grocery store image but to a tech company in a logo.

Real-World Applications

The ability to understand the world through both sight and language opens up applications across many industries:

- Visual Question Answering (VQA): VLMs are used in healthcare diagnostics to assist radiologists. A doctor might ask a system, "Is there a fracture in this X-ray?" and the model analyzes the medical image to provide a preliminary assessment, which can help reduce diagnostic errors.

- Smart E-Commerce Search: In retail environments, VLMs enable users to search for products using natural language descriptions combined with images. A shopper could upload a photo of a celebrity's outfit and ask, "Find me a dress with this pattern but in blue," and the system uses semantic search to retrieve accurate matches.

- Automated Captioning and Accessibility: VLMs automatically generate descriptive alt text for images on the web, making digital content more accessible to visually impaired users who rely on screen readers.
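The e-commerce search scenario above can be illustrated with a toy ranking by cosine similarity. This is only a sketch under strong assumptions: the 3-D embeddings are hand-written rather than produced by a real VLM, and adding the photo and text embeddings is one simple fusion choice, not how any specific product search system works. The names `normalize` and `search` are hypothetical.

```python
import numpy as np


def normalize(v):
    """Scale vectors to unit length so dot products equal cosine similarity."""
    v = np.asarray(v, dtype=float)
    return v / np.linalg.norm(v, axis=-1, keepdims=True)


def search(query_embedding, catalog_embeddings, top_k=2):
    """Return indices of the top_k catalog items by cosine similarity."""
    scores = normalize(catalog_embeddings) @ normalize(query_embedding)
    order = np.argsort(-scores)[:top_k]
    return order.tolist(), scores


# Hand-made embeddings: axes loosely stand for (floral pattern, blue, red).
catalog = {
    "blue floral dress": [0.9, 0.8, 0.0],
    "red floral dress": [0.9, 0.0, 0.8],
    "plain blue shirt": [0.0, 0.9, 0.0],
}
names = list(catalog)
catalog_embeddings = np.array([catalog[n] for n in names])

photo_embedding = np.array([1.0, 0.0, 0.7])   # uploaded photo: red floral outfit
text_embedding = np.array([0.0, 1.0, -0.7])   # "this pattern but in blue"

# Fusion assumption: sum the two embeddings, then rank by cosine similarity.
query = photo_embedding + text_embedding
ranked, scores = search(query, catalog_embeddings)
print([names[i] for i in ranked])  # ['blue floral dress', 'plain blue shirt']
```

The text prompt shifts the query away from "red" and towards "blue" while the photo keeps the floral pattern, so the blue floral dress ranks first.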

Differentiating VLMs from Related Concepts

It is helpful to distinguish VLMs from other AI categories to understand their specific role:

- VLM vs. LLM: A Large Language Model (such as the text-only versions of GPT-4) processes only text data. While it can generate creative stories or code, it cannot "see" an image. A VLM effectively gives eyes to an LLM.

-

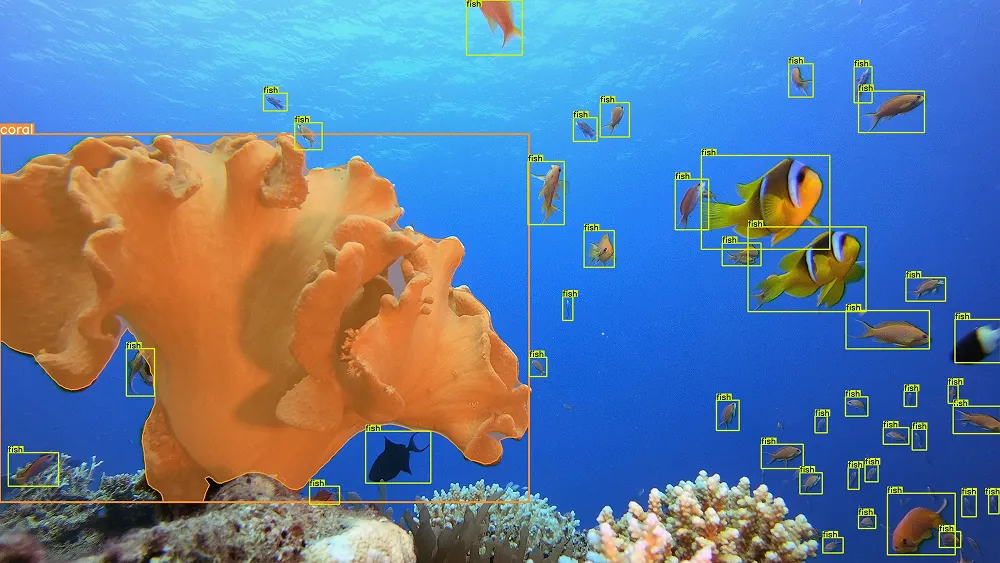

VLM vs. Object Detection: Traditional

object detection models, such as early YOLO

versions, identify where objects are and what class they belong to (e.g., "Car: 99%"). A

VLM goes further by understanding the relationships and attributes, such as "a red sports car parked next to a

fire hydrant."

- VLM vs. Multimodal AI: Multimodal AI is a broader umbrella term. While all VLMs are multimodal (combining vision and language), not all multimodal models are VLMs; some combine audio and text (as in speech-to-text), while others combine video and sensor data with no language component.

Open-Vocabulary Detection with YOLO

Modern VLMs enable "open-vocabulary" detection, where you can detect objects using free-form text prompts rather than pre-defined classes. This is a key feature of models like Ultralytics YOLO-World, which allows for dynamic class definitions without retraining.

The following example demonstrates how to use the ultralytics package to detect specific objects described by text:

```python
from ultralytics import YOLOWorld

# Load a model capable of vision-language understanding
model = YOLOWorld("yolov8s-world.pt")

# Define custom classes using natural language text prompts
model.set_classes(["person wearing sunglasses", "red backpack"])

# Run inference to find these text-defined objects in an image
results = model.predict("https://ultralytics.com/images/bus.jpg")

# Display the detection results
results[0].show()
```

Challenges and Future Directions

While powerful, Vision Language Models face significant challenges. One major issue is hallucination, where the model confidently describes objects or text in an image that simply aren't there. Researchers are actively working on techniques like Reinforcement Learning from Human Feedback (RLHF) to improve grounding and accuracy.
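To make the notion of hallucination concrete, the sketch below flags objects that a caption mentions but a separate detector did not find. This is not RLHF and not a standard metric implementation; it is a simplified check in the spirit of object-level hallucination measures. The function name, the object vocabulary, and the sample caption are all hypothetical.

```python
def find_hallucinated_objects(caption, detected_labels, vocabulary):
    """Return vocabulary objects named in the caption but absent from detections."""
    words = {w.strip(".,!?").lower() for w in caption.split()}
    mentioned = {obj for obj in vocabulary if obj in words}
    grounded = {label.lower() for label in detected_labels}
    return sorted(mentioned - grounded)


# Hypothetical inputs: a generated caption and labels from an object detector.
vocabulary = {"dog", "frisbee", "person", "car"}
caption = "A person throws a frisbee to a dog."
detected_labels = ["person", "dog"]

hallucinated = find_hallucinated_objects(caption, detected_labels, vocabulary)
print(hallucinated)  # ['frisbee']
```

A check like this only covers single-word object names and trusts the detector as ground truth, so it is a rough signal rather than a full evaluation.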

Another challenge is the computational cost. Training these massive models requires substantial GPU resources. However, the release of efficient architectures like Ultralytics YOLO26 is helping to bring advanced vision capabilities to edge devices. As we move forward, we expect to see VLMs playing a crucial role in robotic agents, allowing robots to navigate and manipulate objects based on complex verbal instructions.

For those interested in the theoretical foundations, the original CLIP paper by OpenAI provides excellent insight into contrastive language-image pre-training. Additionally, keeping up with CVPR conference papers is essential for tracking the rapid evolution of these architectures. To experiment with training your own vision models, you can use the Ultralytics Platform for streamlined dataset management and model deployment.
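The contrastive objective behind language-image pre-training can be sketched in a few lines of NumPy. This is a simplified illustration, not the CLIP training code: the embeddings are random placeholders, the temperature is fixed at an assumed value rather than learned, and the function names are hypothetical. Matching image-text pairs sit on the diagonal of the similarity matrix, and the loss rewards the model when each diagonal entry dominates its row and column.

```python
import numpy as np


def log_softmax(logits, axis):
    """Numerically stable log-softmax along the given axis."""
    shifted = logits - logits.max(axis=axis, keepdims=True)
    return shifted - np.log(np.exp(shifted).sum(axis=axis, keepdims=True))


def contrastive_loss(image_emb, text_emb, temperature=0.07):
    """Symmetric cross-entropy over an image-text similarity matrix.

    Row i of image_emb and row i of text_emb are assumed to be a matching pair.
    """
    image_emb = image_emb / np.linalg.norm(image_emb, axis=1, keepdims=True)
    text_emb = text_emb / np.linalg.norm(text_emb, axis=1, keepdims=True)
    logits = image_emb @ text_emb.T / temperature   # (batch, batch)
    diag = np.arange(len(logits))
    loss_i2t = -log_softmax(logits, axis=1)[diag, diag].mean()  # image -> text
    loss_t2i = -log_softmax(logits, axis=0)[diag, diag].mean()  # text -> image
    return (loss_i2t + loss_t2i) / 2


# Random placeholders instead of real encoder outputs (assumption for illustration).
rng = np.random.default_rng(0)
text_emb = rng.normal(size=(4, 16))
aligned_images = text_emb + 0.01 * rng.normal(size=(4, 16))  # near-matching pairs
shuffled_images = aligned_images[[1, 2, 3, 0]]                # mismatched pairs

aligned_loss = contrastive_loss(aligned_images, text_emb)
shuffled_loss = contrastive_loss(shuffled_images, text_emb)
print(aligned_loss, shuffled_loss)
```

The loss is low when each image embedding sits closest to its own caption and high when the pairs are shuffled, which is the signal that pulls matching images and text together in the shared embedding space.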