KV Cache

Discover how KV Cache optimizes Transformer models like LLMs. Learn how this technique reduces inference latency and boosts efficiency for Ultralytics YOLO26.

KV Cache (Key-Value Cache) is a critical optimization technique used primarily in Large Language Models (LLMs) and other Transformer-based architectures to reduce inference latency and computational costs. At its core, the KV cache stores the Key and Value matrices generated by the attention mechanism for previous tokens in a sequence. By saving these intermediate calculations, the model avoids recomputing the attention states for the entire history of the conversation every time it generates a new token. This reduces the attention work at each generation step from quadratic to linear in the sequence length, making real-time interactions with chatbots and AI agents feasible.
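To make the quadratic-versus-linear difference concrete, the sketch below counts query-key dot products at a single generation step. It assumes one attention head with causal masking and a model that, without a cache, recomputes scores for every position. The function names are illustrative and not part of any library.

```python
def scores_without_cache(t: int) -> int:
    """Query-key dot products at step t when every position is recomputed.

    With causal masking, position i attends to i keys, so the total is
    1 + 2 + ... + t = t * (t + 1) / 2, which grows quadratically in t.
    """
    return t * (t + 1) // 2


def scores_with_cache(t: int) -> int:
    """Only the newest query is scored against the t cached keys."""
    return t


for t in (10, 100, 1000):
    print(t, scores_without_cache(t), scores_with_cache(t))
```

Under these assumptions, step 1000 needs 500,500 dot products without a cache and 1,000 with one.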

Mechanism and Benefits

In a standard Transformer model, generating the next token requires attending to all previous tokens to understand the context. Without caching, the model would need to recompute the keys and values for the entire sequence at every step. The KV cache solves this by acting as a memory bank.

- Speed Improvement: By retrieving pre-computed keys and values from memory, the system drastically speeds up the inference engine. This is essential for applications requiring low latency, such as real-time inference in customer service bots.

- Resource Efficiency: While it increases memory usage (VRAM), it significantly reduces the compute (FLOPs) required per token. This trade-off is often managed through techniques like model quantization or paging, similar to how operating systems manage RAM.

- Extended Context: Efficient management of the KV cache allows models to handle a larger context window, enabling them to process long documents or maintain coherent conversations over extended periods.
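The mechanism described in this section can be sketched with a toy single-head attention layer in plain Python. This is a minimal illustration, not how any particular framework implements it: the weight matrices `W_Q`, `W_K`, `W_V`, the `KVCache` class, and the two-dimensional "token embeddings" are all made-up values chosen for readability.

```python
import math


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def matvec(weight_rows, x):
    """Multiply a matrix (given as a list of rows) by a vector."""
    return [dot(row, x) for row in weight_rows]


def softmax(scores):
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attend(query, keys, values):
    """Scaled dot-product attention for one query over all stored keys."""
    scale = math.sqrt(len(query))
    weights = softmax([dot(query, k) / scale for k in keys])
    dim = len(values[0])
    return [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dim)]


# Hypothetical projection weights for a 2-dimensional toy model.
W_Q = [[0.5, -0.2], [0.1, 0.3]]
W_K = [[0.4, 0.1], [-0.3, 0.2]]
W_V = [[0.2, 0.7], [0.6, -0.1]]


def step_without_cache(tokens):
    """Recompute keys and values for the whole sequence at every step."""
    keys = [matvec(W_K, x) for x in tokens]
    values = [matvec(W_V, x) for x in tokens]
    return attend(matvec(W_Q, tokens[-1]), keys, values)


class KVCache:
    """Stores one key and one value vector per token already processed."""

    def __init__(self):
        self.keys = []
        self.values = []

    def step(self, x):
        # Project only the newest token, then reuse everything cached so far.
        self.keys.append(matvec(W_K, x))
        self.values.append(matvec(W_V, x))
        return attend(matvec(W_Q, x), self.keys, self.values)


tokens = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
cache = KVCache()
for t in range(1, len(tokens) + 1):
    cached_out = cache.step(tokens[t - 1])
    full_out = step_without_cache(tokens[:t])
    # Both paths produce the same attention output for the newest token.
    assert all(math.isclose(a, b) for a, b in zip(cached_out, full_out))
```

The cached path performs one key projection and one value projection per step, while the uncached path repeats them for every earlier token. The price is that `cache.keys` and `cache.values` grow by one entry per generated token.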

Real-World Applications

The KV cache is a fundamental component in deploying modern generative AI, but its principles also extend into computer vision (CV).

-

Generative Chatbots: Services like

ChatGPT or

Claude rely heavily on KV caching. When a user

asks a follow-up question, the model does not re-read the entire chat history from scratch. Instead, it appends the

new input to the cached states of the previous turn, allowing for near-instant responses.

-

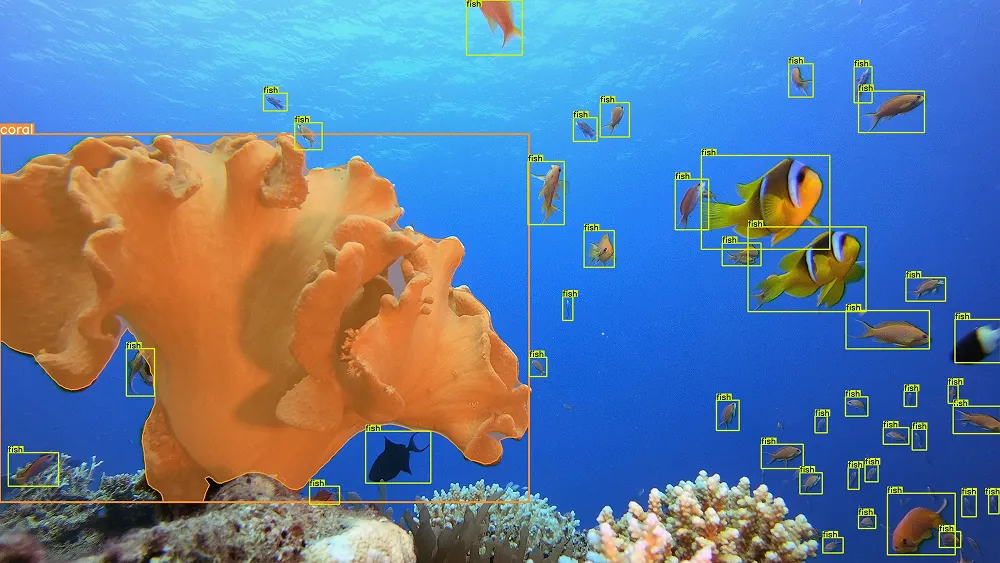

Video Understanding: In

video understanding tasks,

models process frames sequentially. Similar to text tokens, visual features from past frames can be cached to help

the model track objects or recognize actions without re-processing the entire video history. This is particularly

relevant for

action recognition where

temporal context is crucial.

Efficient Memory Management

As models grow larger, the size of the KV cache can become a bottleneck, consuming gigabytes of GPU memory. Recent advancements focus on optimizing this storage.
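A rough size estimate shows where the gigabytes come from. The helper below is a back-of-the-envelope sketch: the function name is illustrative, and the model shape (32 layers, 32 key-value heads, head dimension 128, 4096-token context) is a hypothetical configuration rather than the specification of any particular model.

```python
def kv_cache_bytes(num_layers, num_kv_heads, head_dim, seq_len,
                   batch_size=1, bytes_per_element=2):
    """Estimate KV cache size in bytes.

    The leading factor of 2 accounts for storing both a key tensor and a
    value tensor in every layer. bytes_per_element is 2 for fp16/bf16.
    """
    return (2 * num_layers * num_kv_heads * head_dim
            * seq_len * batch_size * bytes_per_element)


GIB = 1024 ** 3

# Hypothetical model shape, one sequence, 16-bit cache entries.
fp16_bytes = kv_cache_bytes(num_layers=32, num_kv_heads=32,
                            head_dim=128, seq_len=4096)
print(f"fp16 cache: {fp16_bytes / GIB:.1f} GiB")  # 2.0 GiB

# The same shape with 8-bit cache entries (ignoring any stored scale factors).
int8_bytes = kv_cache_bytes(num_layers=32, num_kv_heads=32,
                            head_dim=128, seq_len=4096, bytes_per_element=1)
print(f"int8 cache: {int8_bytes / GIB:.1f} GiB")  # 1.0 GiB
```

Because the estimate scales linearly with both `seq_len` and `batch_size`, serving many long sequences at once multiplies this figure quickly.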

- PagedAttention: Inspired by virtual memory in operating systems, PagedAttention (introduced by vLLM) allows the KV cache to be stored in non-contiguous memory blocks. This reduces fragmentation and allows for higher batch sizes during model serving.

- KV Cache Quantization: To save space, developers often apply mixed precision or int8 quantization specifically to the cached values. This reduces the memory footprint, allowing edge AI devices with limited RAM to run capable models.

- Prompt Caching: A related technique where the KV states of a static system prompt (e.g., "You are a helpful coding assistant") are computed once and reused across many different user sessions. This is a core feature for optimizing prompt engineering workflows at scale.
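The paging idea in the first item can be illustrated with a small block allocator. This is a conceptual sketch only and does not reflect vLLM's actual implementation or API: the class name `PagedKVAllocator`, its methods, and the tiny block counts are all hypothetical. It tracks only which physical block holds each token's slot, not the key and value tensors themselves.

```python
class PagedKVAllocator:
    """Maps each sequence's tokens onto fixed-size blocks from a shared pool."""

    def __init__(self, num_blocks, block_size):
        self.block_size = block_size
        self.free_blocks = list(range(num_blocks))
        self.block_tables = {}  # sequence id -> list of physical block ids
        self.lengths = {}       # sequence id -> number of tokens stored

    def append_token(self, seq_id):
        """Reserve a slot for one new token; return (block id, offset)."""
        table = self.block_tables.setdefault(seq_id, [])
        length = self.lengths.get(seq_id, 0)
        if length % self.block_size == 0:
            # The sequence has no block yet, or its last block is full.
            if not self.free_blocks:
                raise MemoryError("no free KV blocks")
            table.append(self.free_blocks.pop(0))
        self.lengths[seq_id] = length + 1
        return table[length // self.block_size], length % self.block_size

    def release(self, seq_id):
        """Return all of a finished sequence's blocks to the pool."""
        self.free_blocks.extend(self.block_tables.pop(seq_id, []))
        self.lengths.pop(seq_id, None)


allocator = PagedKVAllocator(num_blocks=4, block_size=2)
# Two sequences generate tokens in an interleaved order.
for seq_id in ["a", "b", "a", "a"]:
    allocator.append_token(seq_id)

print(allocator.block_tables)  # {'a': [0, 2], 'b': [1]}
```

Sequence `a` ends up in blocks 0 and 2, which are not adjacent, yet its block table preserves the logical order. Blocks are claimed only when needed, so no sequence has to reserve memory for its maximum possible length up front.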

Distinguishing Related Concepts

It is helpful to differentiate KV Cache from other caching and optimization terms:

- KV Cache vs. Prompt Caching: KV Cache typically refers to the dynamic, token-by-token memory used during a single generation stream. Prompt caching specifically refers to storing the processed state of a fixed input instruction to be reused across multiple independent inference calls.

- KV Cache vs. Embeddings: Embeddings are vector representations of input data (text or images) that capture semantic meaning. The KV cache stores the activations (keys and values) derived from these embeddings within the attention layers, specifically for the purpose of sequence generation.

- KV Cache vs. Model Weights: Model weights are the static, learned parameters of the neural network. The KV cache consists of dynamic, temporary data generated during the forward pass of a specific input sequence.
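The first distinction, between a per-stream KV cache and prompt caching, can be sketched as follows. Everything here is a stand-in: `toy_encode` fakes a forward pass by producing labelled strings instead of real key and value tensors, and `PromptPrefixCache` and `start_stream` are hypothetical names, not an API from any serving framework.

```python
class PromptPrefixCache:
    """Shared store: the state of a fixed prompt is computed once, then reused."""

    def __init__(self, encode_fn):
        self._encode = encode_fn
        self._store = {}
        self.computations = 0  # how many times a prefix was actually encoded

    def get_state(self, prompt_tokens):
        key = tuple(prompt_tokens)
        if key not in self._store:
            self._store[key] = self._encode(prompt_tokens)
            self.computations += 1
        return self._store[key]


def toy_encode(tokens):
    """Stand-in for a forward pass: one (key, value) pair per token."""
    return [(f"k({tok})", f"v({tok})") for tok in tokens]


def start_stream(prefix_cache, system_prompt, user_tokens):
    """Build the dynamic KV cache for one generation stream."""
    # Copy the shared prefix so this stream never mutates the stored state.
    stream_kv = list(prefix_cache.get_state(system_prompt))
    # The per-stream cache then grows with this stream's own tokens.
    stream_kv.extend(toy_encode(user_tokens))
    return stream_kv


system_prompt = ["You", "are", "a", "helpful", "coding", "assistant"]
prefix_cache = PromptPrefixCache(toy_encode)

stream_a = start_stream(prefix_cache, system_prompt, ["Fix", "this", "bug"])
stream_b = start_stream(prefix_cache, system_prompt, ["Write", "a", "test"])

print(prefix_cache.computations)     # 1
print(len(stream_a), len(stream_b))  # 9 9
```

The prefix is encoded once even though two independent streams use it. Reuse is valid in a causal model because the keys and values of the prompt tokens do not depend on anything that comes after them.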

Example: Context in Vision Models

While KV caching is most famous in NLP, the concept of maintaining state applies to advanced vision models. In the example below, we simulate the idea of passing state (context) in a video tracking scenario using Ultralytics YOLO26. Here, the tracker maintains the identity of objects across frames, conceptually similar to how a cache maintains context across tokens.

    from ultralytics import YOLO

    # Load the Ultralytics YOLO26 model
    model = YOLO("yolo26n.pt")

    # Run tracking; the tracker keeps identity state to link detections across frames.
    # The sample source here is a single image, so pass a video path or stream
    # to see IDs persist from frame to frame.
    results = model.track(source="https://ultralytics.com/images/bus.jpg", show=False)

    # Print the IDs of the tracked objects (id is None when nothing is tracked)
    if results[0].boxes.id is not None:
        # Move the tensor to the CPU first so this also works when running on a GPU
        print(f"Tracked IDs: {results[0].boxes.id.cpu().numpy()}")

Developers looking to manage datasets and deploy optimized models can utilize the Ultralytics Platform, which simplifies the pipeline from data annotation to efficient model deployment. For those interested in the deeper mechanics of attention, libraries like PyTorch provide the foundational blocks where these caching mechanisms are implemented.