Model Context Protocol (MCP)

Learn how the Model Context Protocol (MCP) standardizes AI connections to data and tools. Discover how to integrate Ultralytics YOLO26 with MCP for smarter workflows.

The Model Context Protocol (MCP) is an open standard that defines how AI models interact with external data, tools, and environments. Historically, connecting large language models (LLMs) or computer vision systems to real-world data sources, such as local files, databases, or API endpoints, required building a custom integration for every tool. MCP addresses this fragmentation by providing a universal protocol, often compared to a USB-C port for AI applications. Developers can build a connector once and have it work across multiple AI clients, which reduces the complexity of creating context-aware customer support agents and intelligent assistants.

How MCP Works

At its core, MCP uses a client-host-server architecture. The "host" is the AI application (like a coding assistant or a chatbot interface) that the user interacts with. Inside the host, each "client" maintains a dedicated connection to one "server," which is the bridge to a specific data source or tool. When an AI agent needs to access a file or query a database, the host's client sends a request via the protocol. The MCP server handles this request, retrieves the necessary context, and returns it to the model in a structured format.
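The request and response flow described above can be sketched in plain Python. MCP messages are JSON-RPC 2.0, and the `resources/read` method and `contents` result shape are modeled on the MCP specification. The `FILES` store, the `handle_request` function, and the `file:///logs/app.log` URI are hypothetical stand-ins for a real server and transport.

```python
import json

# Hypothetical in-memory "data source" standing in for real files.
FILES = {"file:///logs/app.log": "ERROR login failed: token expired"}


def handle_request(raw: str) -> str:
    """Toy server side: parse a JSON-RPC request and return a JSON-RPC response."""
    request = json.loads(raw)
    if request.get("method") == "resources/read":
        uri = request["params"]["uri"]
        if uri in FILES:
            result = {"contents": [{"uri": uri, "mimeType": "text/plain", "text": FILES[uri]}]}
            return json.dumps({"jsonrpc": "2.0", "id": request["id"], "result": result})
        # -32602 is JSON-RPC's standard "invalid params" code (illustrative choice here).
        error = {"code": -32602, "message": f"Unknown resource: {uri}"}
    else:
        # -32601 is JSON-RPC's standard "method not found" code.
        error = {"code": -32601, "message": "Method not found"}
    return json.dumps({"jsonrpc": "2.0", "id": request.get("id"), "error": error})


# Client side: build the request, "send" it, and read the structured reply.
request = json.dumps({
    "jsonrpc": "2.0",
    "id": 1,
    "method": "resources/read",
    "params": {"uri": "file:///logs/app.log"},
})
response = json.loads(handle_request(request))
print(response["result"]["contents"][0]["text"])
```

In a real deployment the request would travel over a transport such as stdio or HTTP rather than a direct function call; the direct call here only keeps the sketch self-contained.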

This architecture supports three primary capabilities:

- Resources: These allow the model to read data, such as logs, code files, or business documents, providing the necessary grounding for retrieval-augmented generation (RAG).
- Prompts: Pre-defined templates that help users or models interact with the server effectively, streamlining prompt engineering workflows.
- Tools: Executable functions that allow the model to take action, such as editing a file, running a script, or interacting with a computer vision pipeline.
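To make the three capabilities concrete, here is a minimal sketch of how a server might keep them apart. `ToyServer` and everything registered on it (`logs://auth/latest`, `triage`, `count_lines`) are hypothetical names invented for illustration; this is an in-process toy, not the API of any MCP SDK.

```python
from typing import Callable, Dict


class ToyServer:
    """Hypothetical in-process stand-in for an MCP server (not a real SDK)."""

    def __init__(self) -> None:
        self.resources: Dict[str, Callable[[], str]] = {}
        self.prompts: Dict[str, str] = {}
        self.tools: Dict[str, Callable[..., object]] = {}

    def resource(self, uri: str):
        """Decorator that registers a read-only data provider under a URI."""
        def register(fn):
            self.resources[uri] = fn
            return fn
        return register

    def tool(self, fn):
        """Decorator that registers an executable function under its own name."""
        self.tools[fn.__name__] = fn
        return fn

    def prompt(self, name: str, template: str) -> None:
        """Register a reusable prompt template."""
        self.prompts[name] = template

    def list_capabilities(self) -> Dict[str, list]:
        """Return the names of everything this server exposes, by kind."""
        return {
            "resources": sorted(self.resources),
            "prompts": sorted(self.prompts),
            "tools": sorted(self.tools),
        }


server = ToyServer()


@server.resource("logs://auth/latest")
def latest_auth_log() -> str:
    # Resource: read-only data the model can pull in as context.
    return "ERROR login failed: token expired"


# Prompt: a reusable template with a named placeholder.
server.prompt("triage", "Summarize the likely root cause of this log:\n{log}")


@server.tool
def count_lines(text: str) -> int:
    # Tool: an executable action the model can invoke with arguments.
    return len(text.splitlines())


log = server.resources["logs://auth/latest"]()
print(server.list_capabilities())
print(server.prompts["triage"].format(log=log))
print(server.tools["count_lines"](log))
```

A real server would register these through an MCP SDK and serve them over a transport; the split into read-only data, templates, and callable actions is the part this sketch is meant to show.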

Real-World Applications

MCP is rapidly gaining traction because it decouples the model from the integration logic. Here are two concrete examples of its application:

-

Unified Development Environments: In software engineering, developers often switch between an

IDE, a terminal, and documentation. An MCP-enabled coding assistant can connect to a GitHub repository, a local

file system, and a bug-tracking database simultaneously. If a developer asks, "Why is the login

failing?", the AI can use MCP servers to pull recent error logs, read the relevant authentication code, and

check open issues, synthesizing this

multi-modal data into a solution without

the user copying and pasting context.

-

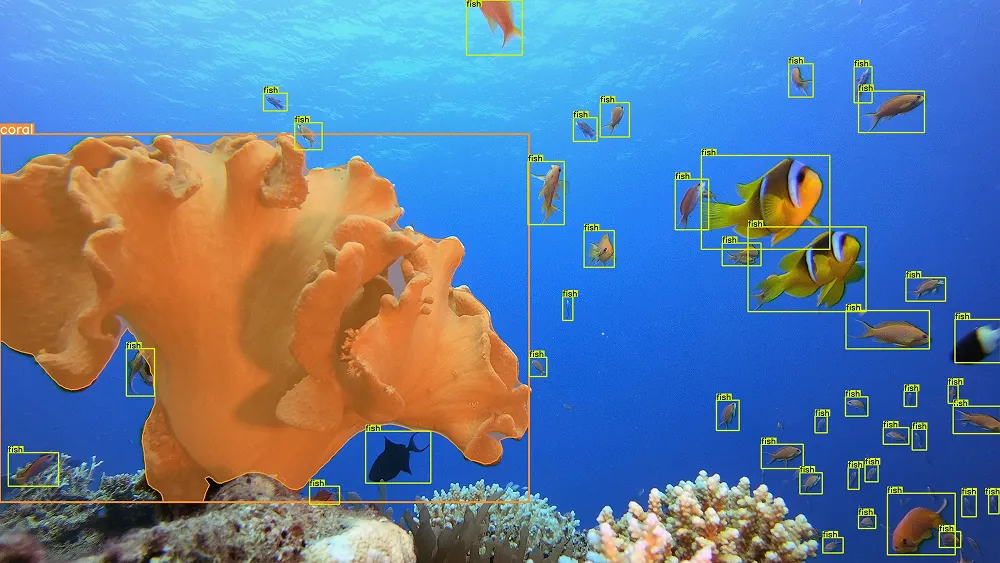

Context-Aware Visual Inspection: In industrial settings, a standard vision model detects defects

but lacks historical context. By using MCP, an

Ultralytics YOLO26 detection system can be linked to an

inventory database. When the model detects a "damaged part," it triggers an MCP tool to query the

database for replacement availability and automatically drafts a maintenance ticket. This transforms a simple

object detection task into a complete

automation workflow.
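The visual inspection example can be sketched as two small tools chained together. Everything here is hypothetical: the `inventory` table, the `check_replacement` and `draft_ticket` functions, and the hard-coded detections that stand in for real detector output. The sketch uses an in-memory SQLite database so it runs without any setup.

```python
import sqlite3

# Hypothetical inventory table; a real deployment would query an existing database.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE inventory (part TEXT PRIMARY KEY, in_stock INTEGER)")
conn.executemany("INSERT INTO inventory VALUES (?, ?)", [("gear", 3), ("belt", 0)])


def check_replacement(part: str) -> int:
    """Tool 1 (hypothetical): return how many replacement units are in stock."""
    row = conn.execute("SELECT in_stock FROM inventory WHERE part = ?", (part,)).fetchone()
    return row[0] if row else 0


def draft_ticket(part: str, confidence: float) -> str:
    """Tool 2 (hypothetical): draft a maintenance ticket from a detection."""
    stock = check_replacement(part)
    action = "replace from stock" if stock > 0 else "order replacement"
    return f"[TICKET] damaged {part} (conf {confidence:.2f}); in stock: {stock}; action: {action}"


# Stand-in for detector output: (class label, part name, confidence).
detections = [("damaged part", "gear", 0.91), ("ok", "belt", 0.88), ("damaged part", "belt", 0.77)]

# Only "damaged part" detections trigger the inventory lookup and ticket draft.
tickets = [draft_ticket(part, conf) for label, part, conf in detections if label == "damaged part"]
for ticket in tickets:
    print(ticket)
```

The parameterized query (`?` placeholder) matters once the part name comes from a model or a user rather than a constant, since it keeps the lookup safe from SQL injection.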

Differentiating Related Terms

It is helpful to distinguish MCP from similar concepts in the AI ecosystem:

- MCP vs. API: An Application Programming Interface (API) is a specific set of rules for one piece of software to talk to another. MCP is a protocol that standardizes how any AI model interacts with any API or data source. You might build an MCP server that wraps a specific API, making it accessible to every MCP-compliant client.
- MCP vs. RAG: Retrieval-Augmented Generation is a technique for feeding external data to a model. MCP is infrastructure that can facilitate this. RAG is the "what" (getting data), while MCP is the "how" (the standard connection pipe).
- MCP vs. Function Calling: Many models, including OpenAI GPT-4, support function calling natively. MCP creates a standard way to define and expose these functions (tools) so that they don't have to be redefined by hand for every application that uses the model.
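The idea of wrapping an existing function once and exposing it in a standard shape can be sketched as follows. The descriptor fields (`name`, `description`, `inputSchema`) mirror how MCP describes tools; `describe_tool`, `JSON_TYPES`, and `get_weather` are hypothetical helpers written for this example, and the type mapping covers only a few basic annotations.

```python
import inspect
from typing import Any, Callable, Dict

# Map a few Python annotations to JSON Schema type names.
JSON_TYPES = {str: "string", int: "integer", float: "number", bool: "boolean"}


def describe_tool(fn: Callable[..., Any]) -> Dict[str, Any]:
    """Build a tool descriptor (name, description, inputSchema) from a function."""
    properties, required = {}, []
    for name, param in inspect.signature(fn).parameters.items():
        # Unknown or missing annotations fall back to "string" in this sketch.
        properties[name] = {"type": JSON_TYPES.get(param.annotation, "string")}
        # Parameters without a default value are treated as required.
        if param.default is inspect.Parameter.empty:
            required.append(name)
    return {
        "name": fn.__name__,
        "description": inspect.getdoc(fn) or "",
        "inputSchema": {"type": "object", "properties": properties, "required": required},
    }


def get_weather(city: str, days: int = 1) -> str:
    """Hypothetical wrapper around some weather API."""
    return f"{days}-day forecast for {city}"


descriptor = describe_tool(get_weather)
print(descriptor)
```

Because the descriptor is derived from the function itself, any client that understands the descriptor format can discover and call the tool without a hand-written definition per application.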

Integration with Computer Vision

While originally popularized for text-based LLMs, MCP is increasingly relevant for vision-centric workflows. Developers can create MCP servers that expose computer vision capabilities as tools. For instance, an LLM acting as a central controller could delegate a visual task to an Ultralytics model via a local Python script exposed as an MCP tool.

The following Python snippet demonstrates a conceptual workflow where a script uses a vision model to generate context, which could then be served via an MCP-compatible endpoint:

    from ultralytics import YOLO

    # Load the YOLO26 model (efficient, end-to-end detection)
    model = YOLO("yolo26n.pt")

    # Perform inference to get visual context from an image
    results = model("https://ultralytics.com/images/bus.jpg")

    # Extract detection data to be passed as context
    detections = []
    for r in results:
        for box in r.boxes:
            cls_name = model.names[int(box.cls)]
            detections.append(f"{cls_name} (conf: {box.conf.item():.2f})")

    # This string serves as the 'context' a downstream agent might request
    context_string = f"Visual Analysis: Found {', '.join(detections)}"
    print(context_string)
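As a possible next step, the detections could be returned as a structured tool result rather than a free-form string. The sketch below assumes a `detect` callable that returns `(label, confidence)` pairs; `make_detect_tool` and `fake_detect` are hypothetical names, and `fake_detect` returns fixed values in place of real YOLO inference so the example runs without model weights. The `content` and `isError` fields are modeled on the MCP tool result format.

```python
import json
from typing import Callable, Dict, List, Tuple

Detection = Tuple[str, float]  # (class name, confidence)


def make_detect_tool(detect: Callable[[str], List[Detection]]) -> Callable[[Dict], Dict]:
    """Wrap a detector in a handler that returns an MCP-style tool result."""

    def handle(arguments: Dict) -> Dict:
        try:
            detections = detect(arguments["image"])
        except Exception as exc:  # Report failures to the caller instead of crashing
            return {"content": [{"type": "text", "text": str(exc)}], "isError": True}
        payload = [{"label": label, "confidence": round(conf, 2)} for label, conf in detections]
        return {"content": [{"type": "text", "text": json.dumps(payload)}], "isError": False}

    return handle


def fake_detect(image: str) -> List[Detection]:
    # Stand-in for the YOLO inference loop; returns fixed values for illustration.
    return [("bus", 0.94), ("person", 0.89)]


tool = make_detect_tool(fake_detect)
result = tool({"image": "bus.jpg"})
print(result["content"][0]["text"])
```

Passing the detector in as an argument keeps the handler testable without a GPU or model download; swapping `fake_detect` for a function built around the inference loop shown earlier would connect it to a real model.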

The Future of AI Connectivity

The introduction of the Model Context Protocol marks a shift toward agentic AI systems that are modular and interoperable. By standardizing connections, the industry moves away from siloed chatbots toward integrated assistants capable of meaningful work within an organization's existing infrastructure. As tools like the Ultralytics Platform continue to evolve, standard protocols like MCP will likely play a crucial role in how custom trained models are deployed and utilized within larger enterprise workflows.