Vision Mamba

Explore Vision Mamba, a linear-complexity alternative to Transformers. Learn how State Space Models (SSMs) enhance efficiency for high-resolution computer vision.

Vision Mamba represents a significant shift in deep learning architectures for computer vision, moving away from the dominance of attention-based mechanisms found in Transformers. It is an adaptation of the Mamba architecture, originally designed for efficient sequence modeling in natural language processing, tailored specifically for visual tasks. By leveraging State Space Models (SSMs), Vision Mamba offers a linear-complexity alternative to the quadratic complexity of traditional self-attention layers. This allows it to process high-resolution images more efficiently, making it particularly valuable for applications where computational resources are constrained or where long-range dependencies in visual data must be captured without the heavy memory footprint typical of Vision Transformers (ViT).

How Vision Mamba Works

At the core of Vision Mamba is the concept of selectively scanning data. Traditional Convolutional Neural Networks (CNNs) process images using local sliding windows, which are excellent for detecting textures and edges but struggle with global context. Conversely, Transformers use global attention to relate every image patch to every other patch, which provides excellent context but becomes computationally expensive as image resolution increases. Vision Mamba bridges this gap by splitting an image into patches, flattening them into a sequence, and processing that sequence with selective state spaces. This allows the model to compress visual information into a fixed-size state, retaining relevant details over long distances in the image sequence while discarding irrelevant noise.
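The flatten-then-scan idea can be sketched in a few lines of plain Python. This is a simplified illustration, not the Vision Mamba implementation: the helper names (`flatten_patches`, `selective_scan`), the use of a tile mean in place of a learned patch embedding, the scalar tokens, and the fixed `b` and `c` vectors are all assumptions made for brevity. What it does show is the key property described above: the state has a fixed size no matter how many patches are scanned, and an input-dependent step size decides how much each token overwrites that state.

```python
import math


def flatten_patches(image, patch):
    """Split a 2D grid into patch x patch tiles (row-major order) and return
    one scalar token per tile. The tile mean stands in for a learned embedding.
    Assumes the image height and width are multiples of `patch`."""
    height, width = len(image), len(image[0])
    tokens = []
    for top in range(0, height, patch):
        for left in range(0, width, patch):
            tile = [image[r][c]
                    for r in range(top, top + patch)
                    for c in range(left, left + patch)]
            tokens.append(sum(tile) / len(tile))
    return tokens


def softplus(z):
    """Smooth positive function used here to keep the step size above zero."""
    return math.log1p(math.exp(z))


def selective_scan(tokens, a, b, c, w_delta=1.0, b_delta=0.0):
    """Run a diagonal state space recurrence over the token sequence.

    a: negative decay rates, one per state dimension
    b, c: input and output projections (fixed here; learned and
          input-dependent in real selective SSMs)
    """
    state = [0.0] * len(a)  # fixed-size memory, independent of sequence length
    outputs = []
    for x in tokens:
        # Input-dependent step size: this is the "selective" part.
        delta = softplus(w_delta * x + b_delta)
        state = [math.exp(delta * a_i) * h_i + delta * b_i * x
                 for a_i, b_i, h_i in zip(a, b, state)]
        outputs.append(sum(c_i * h_i for c_i, h_i in zip(c, state)))
    return outputs, state


image = [
    [1, 1, 2, 2],
    [1, 1, 2, 2],
    [3, 3, 4, 4],
    [3, 3, 4, 4],
]
tokens = flatten_patches(image, patch=2)
outputs, final_state = selective_scan(tokens, a=[-1.0, -0.5], b=[1.0, 1.0], c=[0.5, 0.5])
print(len(tokens), "tokens ->", len(final_state), "state values")
```

Each token costs a constant amount of work, so the total cost of the scan grows linearly with the number of patches.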

The architecture typically involves a bidirectional scanning mechanism. Since images are 2D structures and not inherently sequential like text, Vision Mamba scans the image patches in forward and backward directions (and sometimes along other paths) so that each patch receives context from both earlier and later positions in the sequence. This approach enables the model to achieve global receptive fields similar to Transformers but with faster inference and lower memory usage at high resolutions, while often rivaling state-of-the-art results on benchmarks like ImageNet.
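A minimal sketch of why two scan directions are needed, assuming a toy recurrence with a single scalar state and a fixed decay (the function names and the simple sum used to merge the two directions are illustrative choices, not the exact Vision Mamba block): a forward scan only lets a patch see what came before it, so a second scan over the reversed sequence supplies the context from what comes after.

```python
def causal_scan(tokens, decay=0.5):
    """One-directional recurrence: each output depends only on the current
    token and the tokens that came before it."""
    state = 0.0
    outputs = []
    for x in tokens:
        state = decay * state + x
        outputs.append(state)
    return outputs


def bidirectional_scan(tokens, decay=0.5):
    """Scan forward and backward, then add the two results position by position."""
    forward = causal_scan(tokens, decay)
    # Reverse the sequence, scan it, and reverse the result back into place.
    backward = causal_scan(tokens[::-1], decay)[::-1]
    return [f + b for f, b in zip(forward, backward)]


# A single "active" patch in the middle of a five-patch sequence.
tokens = [0.0, 0.0, 1.0, 0.0, 0.0]
print(causal_scan(tokens))         # [0.0, 0.0, 1.0, 0.5, 0.25]
print(bidirectional_scan(tokens))  # [0.25, 0.5, 2.0, 0.5, 0.25]
```

With the forward scan alone, the first two positions never learn about the active patch. After merging both directions, every position carries some information about it, which is the sense in which the receptive field becomes global. The middle value is 2.0 because the active token is counted once in each direction.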

Real-World Applications

Vision Mamba's efficiency makes it highly relevant for resource-constrained environments and high-resolution tasks.

- Medical Image Analysis: In fields like radiology, analyzing high-resolution MRI or CT scans requires detecting subtle anomalies that may be spatially distant within a large image. Vision Mamba can process these large medical images without the memory bottlenecks that often affect standard Transformers, which can help doctors identify tumors or fractures.

-

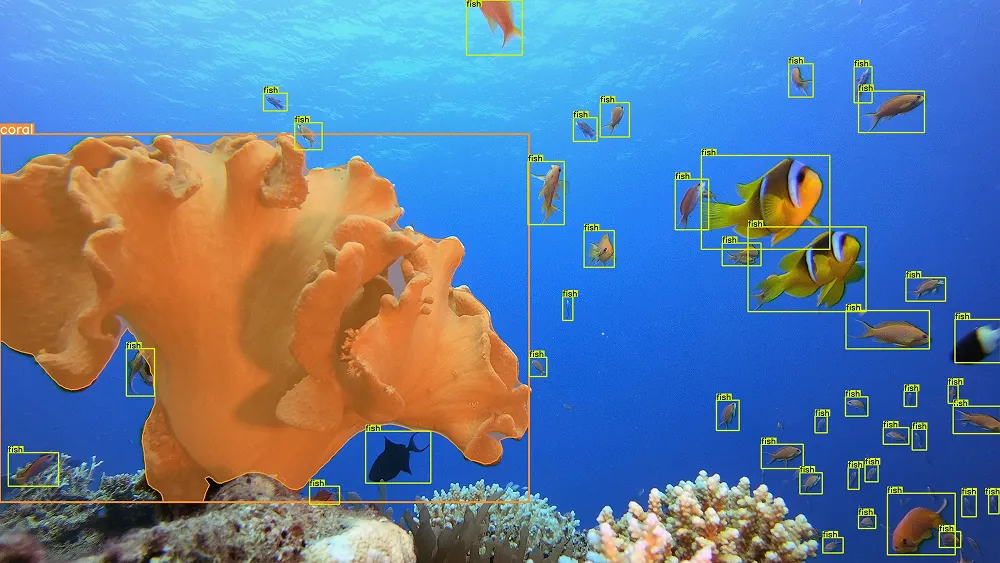

Autonomous Navigation on Edge Devices: Self-driving cars and drones rely on

edge computing to process video feeds in real

time. The linear scaling of Vision Mamba allows these systems to handle high-frame-rate video inputs for

object detection and

semantic segmentation more efficiently than

heavy Transformer models, ensuring faster reaction times for safety-critical decisions.

Vision Mamba vs. Vision Transformers (ViT)

While both architectures aim to capture global context, they differ fundamentally in operation.

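- Vision Transformer (ViT): Relies on the attention mechanism, which calculates the relationship between every pair of image patches. This results in quadratic complexity ($O(N^2)$) in the number of patches $N$, meaning that doubling the number of patches quadruples the cost of attention. Because $N$ itself grows with image area, doubling both the height and width of an image multiplies that cost by roughly 16.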

- Vision Mamba: Utilizes State Space Models (SSMs) to process visual tokens in linear time ($O(N)$). It maintains a running state that updates as it sees new patches, allowing it to scale much better with higher resolutions while maintaining comparable accuracy.
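To make the scaling difference concrete, the short script below counts patch pairs (a rough proxy for attention cost) and scan steps (a rough proxy for SSM cost) at a few square resolutions. The 16-pixel patch size is an assumption borrowed from common ViT configurations, and the counts ignore constant factors such as embedding width and state size, so they show growth rates rather than real runtimes.

```python
def num_patches(height, width, patch=16):
    """Number of non-overlapping patch x patch tiles in an image."""
    return (height // patch) * (width // patch)


def attention_pairs(n):
    """Self-attention compares every token with every token: N * N pairs."""
    return n * n


def scan_steps(n):
    """A state space scan visits each token once: N steps."""
    return n


for side in (224, 448, 896):
    n = num_patches(side, side)
    print(f"{side}x{side}: N={n}, "
          f"attention pairs={attention_pairs(n)}, scan steps={scan_steps(n)}")
```

Going from 224x224 to 448x448 raises the patch count from 196 to 784, a factor of 4. The scan steps grow by the same factor of 4, while the attention pair count grows by a factor of 16.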

Example: Efficient Inference Workflow

While Vision Mamba is a specific architecture, its emphasis on efficiency aligns with the goals of modern real-time models like Ultralytics YOLO26. Users who need optimized models for vision tasks can use the Ultralytics Platform for training and deployment. The example below uses the ultralytics package to run inference with a YOLO26 model (not Vision Mamba itself), showing how little code an optimized vision model requires.

from ultralytics import YOLO

# Load a pre-trained YOLO26 model (optimized for speed and accuracy)
model = YOLO("yolo26n.pt")  # 'n' for nano, emphasizing efficiency

# Run inference on an image
results = model.predict("path/to/image.jpg")

# Display the results
results[0].show()

Key Benefits and Future Outlook

The introduction of Mamba-based architectures into computer vision signals a move towards more hardware-aware AI. By reducing the computational overhead associated with global attention, researchers are opening the door to deploying advanced AI agents on smaller devices.

Recent research, such as the VMamba paper and developments in efficient deep learning, highlights the potential for these models to replace traditional backbones in tasks ranging from video understanding to 3D object detection. As the community continues to refine scanning strategies and integration with convolutional layers, Vision Mamba is poised to become a standard component in the deep learning toolbox alongside CNNs and Transformers.