World Model

Explore how World Models simulate environments to predict future outcomes. Learn how they enhance Ultralytics YOLO26 for autonomous driving and advanced robotics.

A World Model is an advanced artificial intelligence system designed to learn a comprehensive simulation of its environment, predicting how the world evolves over time and how its own actions influence that future. Unlike traditional predictive modeling, which typically focuses on mapping static inputs to outputs (such as classifying an image), a World Model seeks to understand the causal dynamics of a scene. By internalizing the physics, logic, and temporal sequences of the data it observes, it can simulate potential outcomes before they happen. This capability is analogous to a human's mental model, allowing the AI to "dream" or visualize future scenarios to plan complex tasks or generate realistic video content.
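The idea of predicting how the world evolves under the agent's own actions can be made concrete with a deliberately tiny example. The sketch below assumes a linear dynamics model, s_{t+1} ≈ A·s_t + B·a_t, fitted with NumPy least squares on observed transitions. Real World Models use deep networks over images or latent codes, so treat this only as an illustration of the interface (learn from transitions, then roll forward "in imagination"), not of any production architecture. The function names are hypothetical.

```python
import numpy as np


def fit_linear_world_model(states, actions, next_states):
    """Fit s_{t+1} ≈ A s_t + B a_t by least squares (toy stand-in for a learned model)."""
    X = np.hstack([states, actions])                     # (N, ds + da)
    W, *_ = np.linalg.lstsq(X, next_states, rcond=None)  # (ds + da, ds)
    ds = states.shape[1]
    A = W[:ds].T  # state-transition matrix
    B = W[ds:].T  # action-effect matrix
    return A, B


def imagine(A, B, s0, action_seq):
    """Roll the learned model forward without touching the real environment."""
    s = np.asarray(s0, dtype=float)
    trajectory = [s]
    for a in action_seq:
        s = A @ s + B @ np.asarray(a, dtype=float)
        trajectory.append(s)
    return np.stack(trajectory)


# Synthetic transitions from a point mass: state = (position, velocity), dt = 0.1
rng = np.random.default_rng(0)
A_true = np.array([[1.0, 0.1], [0.0, 1.0]])
B_true = np.array([[0.0], [0.1]])  # action = acceleration
states = rng.normal(size=(200, 2))
actions = rng.normal(size=(200, 1))
next_states = states @ A_true.T + actions @ B_true.T

A, B = fit_linear_world_model(states, actions, next_states)
dream = imagine(A, B, s0=[0.0, 0.0], action_seq=[[1.0]] * 5)
print(dream.shape)  # (6, 2): the start state plus five imagined steps
```

Because the synthetic data is noise-free, the fit recovers the true dynamics; with real sensor data the model would only approximate them, and errors compound over long imagined rollouts.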

Moving Beyond Static Perception

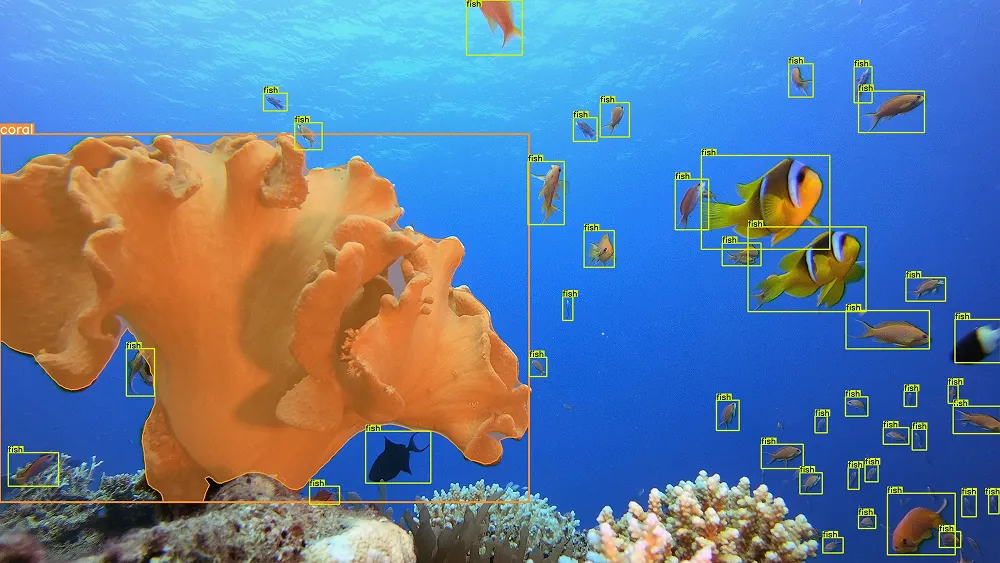

The core innovation of World Models lies in their ability to reason about time and cause and effect. In standard computer vision tasks, models like Ultralytics YOLO26 excel at detecting objects within a single frame. A World Model goes further by anticipating where those objects will be in the next frame. This shift from static recognition to dynamic prediction is crucial for developing autonomous vehicles and sophisticated robotics.
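To make "anticipating where those objects will be in the next frame" concrete, the sketch below uses the simplest possible hand-written predictor: constant-velocity extrapolation of each tracked object's center. This is a baseline, not a learned World Model; a World Model would instead learn such dynamics (turning, braking, interactions between objects) from data. The function name and the `tracks` dictionary format are hypothetical.

```python
def predict_next_center(history):
    """Extrapolate a track's next (x, y) center, assuming constant velocity.

    `history` is a list of (x, y) centers from consecutive frames, oldest first.
    """
    if not history:
        raise ValueError("need at least one observation")
    if len(history) == 1:
        return history[-1]  # no motion information yet; assume the object stays put
    (x_prev, y_prev), (x_last, y_last) = history[-2], history[-1]
    vx, vy = x_last - x_prev, y_last - y_prev  # displacement per frame
    return (x_last + vx, y_last + vy)


tracks = {
    1: [(100, 200), (110, 205), (120, 210)],  # e.g. a pedestrian moving right and down
    2: [(50, 50)],                            # a newly detected object
}
predictions = {tid: predict_next_center(h) for tid, h in tracks.items()}
print(predictions)  # {1: (130, 215), 2: (50, 50)}
```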

Recent breakthroughs, such as OpenAI's Sora text-to-video model, demonstrate the generative power of World Models. By learning how light, motion, and geometry interact, these systems can generate highly realistic environments from simple text prompts. Similarly, in reinforcement learning, agents use these internal simulations to practice in imagination before attempting dangerous tasks in the real world, which reduces the number of risky real-world trials and can improve both safety and sample efficiency.
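Practicing "in imagination" usually means rolling candidate action sequences through the internal model instead of the real world. The sketch below uses random-shooting model-predictive control, which is one simple planning method among many (other approaches instead learn a policy directly from imagined rollouts). The point-mass `dynamics` function is a hypothetical stand-in for a learned World Model, and all names and parameters are illustrative assumptions.

```python
import numpy as np


def dynamics(state, action, dt=0.1):
    """Hypothetical internal model of a 1-D point mass.

    state = (position, velocity), action = acceleration. In a real agent this
    would be a learned World Model rather than hand-written physics.
    """
    pos, vel = state
    return np.array([pos + dt * vel, vel + dt * action])


def plan_random_shooting(state, goal, horizon=10, n_candidates=256, seed=0):
    """Random-shooting MPC: imagine many action sequences and keep the best one.

    Returns the first action of the lowest-cost imagined sequence and its cost.
    """
    rng = np.random.default_rng(seed)
    candidates = rng.uniform(-1.0, 1.0, size=(n_candidates, horizon))
    best_cost, best_seq = np.inf, None
    for seq in candidates:
        s = np.asarray(state, dtype=float)
        cost = 0.0
        for a in seq:  # every step happens inside the model, not the real world
            s = dynamics(s, a)
            cost += (s[0] - goal) ** 2  # squared distance from the goal position
        if cost < best_cost:
            best_cost, best_seq = cost, seq
    return best_seq[0], best_cost


# Only the first action is executed; the agent re-plans at the next real step.
first_action, imagined_cost = plan_random_shooting(state=(0.0, 0.0), goal=1.0)
print(f"first action: {first_action:.3f}, imagined cost: {imagined_cost:.3f}")
```

No real-world step is taken while the 256 candidates are evaluated, which is where the safety argument comes from; the quality of the plan, however, is only as good as the model's predictions.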

World Models vs. Related Concepts

It is helpful to distinguish World Models from other broad AI categories.

- World Models vs. Foundation Models: A foundation model is a general-purpose model trained on vast data (such as GPT-4). A World Model is often a specific type of foundation model, or a component within one, architected specifically to simulate environmental dynamics and temporal consistency.
- World Models vs. Large Language Models (LLMs): While LLMs predict the next text token based on linguistic patterns, World Models predict the next "state" of the world (often video frames or sensory data) based on physical and spatial rules.

Real-World Applications

The utility of World Models extends far beyond creating entertainment videos. They are becoming essential components in industries that require complex decision-making.

- Autonomous Driving: Self-driving car companies like Waymo use World Models to simulate millions of driving scenarios. The vehicle's AI can predict the trajectories of pedestrians and other cars, planning safe paths through busy intersections without needing to experience every potential accident in reality.
- Robotics and Manufacturing: In smart manufacturing, robots equipped with World Models can manipulate objects they have never seen before. By simulating the physics of a grasp or a lift, the robot predicts whether an item will slip or break and adapts its actions within real-time inference loops to maintain precision.
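A World Model learns grasp outcomes from experience, but the kind of physics it has to capture can be illustrated with a hand-written rule. The sketch below assumes a two-finger parallel grasp and the Coulomb friction model: each finger presses with normal force N, so friction can support at most 2·μ·N vertically. A learned model would also have to account for object shape, deformation, and contact uncertainty. Function names and numbers are illustrative assumptions.

```python
G = 9.81  # gravitational acceleration, m/s^2


def will_slip(mass_kg, grip_force_n, friction_coeff, lift_accel=0.0):
    """Predict slip for a two-finger parallel grasp under Coulomb friction.

    The object slips if lifting it (gravity plus upward acceleration) needs more
    vertical force than the two friction contacts can supply.
    """
    required = mass_kg * (G + lift_accel)
    available = 2 * friction_coeff * grip_force_n
    return required > available


def min_grip_force(mass_kg, friction_coeff, lift_accel=0.0, safety_factor=1.5):
    """Smallest per-finger force that avoids slip, scaled by a safety margin."""
    return safety_factor * mass_kg * (G + lift_accel) / (2 * friction_coeff)


print(will_slip(0.5, grip_force_n=5.0, friction_coeff=0.4))   # True: needs 4.905 N, friction gives 4.0 N
print(will_slip(0.5, grip_force_n=10.0, friction_coeff=0.4))  # False: friction gives 8.0 N
print(round(min_grip_force(0.5, friction_coeff=0.4), 3))      # 9.197
```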

Practical Example: Visualizing Future States

While full-scale World Models require immense compute, the concept of predicting future frames can be illustrated using video understanding principles. The following example uses Ultralytics YOLO26 to track objects across a video stream, maintaining each object's identity over time. Tracking does not by itself predict the future, but it is a foundational step toward anticipating object movement and building a predictive view of the world.

```python
import cv2

from ultralytics import YOLO

# Load a YOLO26 model to act as the perception engine
model = YOLO("yolo26n.pt")

# Open a video source (0 for webcam, or a video file path)
cap = cv2.VideoCapture(0)

while cap.isOpened():
    success, frame = cap.read()
    if not success:
        break

    # 'track' mode maintains object identity over time,
    # a prerequisite for learning object dynamics
    results = model.track(frame, persist=True)

    # Visualize the tracks, showing how the model follows movement
    annotated_frame = results[0].plot()
    cv2.imshow("Object Tracking Stream", annotated_frame)

    # Press 'q' to stop the stream
    if cv2.waitKey(1) & 0xFF == ord("q"):
        break

cap.release()
cv2.destroyAllWindows()
```
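A natural next step is to record each object's trajectory so that its motion can be estimated. The sketch below is a hypothetical helper, not part of the Ultralytics API: it stores recent box centers per track ID and computes an average per-frame velocity. Inside the tracking loop, the inputs could come from `results[0].boxes.id.int().cpu().tolist()` and `results[0].boxes.xywh.cpu().tolist()` (the `xywh` format is center-based); `boxes.id` is `None` when nothing is being tracked, so that case needs a guard. Here, synthetic numbers stand in for real detections so the sketch runs without a camera.

```python
from collections import defaultdict, deque

# Hypothetical buffer: the last 30 centers per track ID (maxlen bounds memory).
track_history = defaultdict(lambda: deque(maxlen=30))


def update_history(history, track_ids, boxes_xywh):
    """Append each object's (cx, cy) center to the history of its track ID.

    `track_ids` is a list of ints and `boxes_xywh` a list of (cx, cy, w, h)
    boxes in the same order, as produced by the tracker for one frame.
    """
    for tid, (cx, cy, _w, _h) in zip(track_ids, boxes_xywh):
        history[tid].append((float(cx), float(cy)))
    return history


def mean_velocity(history, tid):
    """Average per-frame displacement of one track over its stored history.

    Assumes the object was observed in every frame it has been stored for.
    """
    pts = history.get(tid, ())
    if len(pts) < 2:
        return (0.0, 0.0)  # not enough observations to estimate motion
    (x0, y0), (x1, y1) = pts[0], pts[-1]
    steps = len(pts) - 1
    return ((x1 - x0) / steps, (y1 - y0) / steps)


# Synthetic per-frame tracker output standing in for real detections
update_history(track_history, [1, 2], [(100, 200, 40, 80), (300, 50, 20, 20)])
update_history(track_history, [1, 2], [(104, 202, 40, 80), (300, 50, 20, 20)])
update_history(track_history, [1], [(108, 204, 40, 80)])
print(mean_velocity(track_history, 1))  # (4.0, 2.0)
```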

The Future of Predictive AI

The development of World Models is often described as a step toward Artificial General Intelligence (AGI). By learning to model the world effectively, AI systems gain spatial intelligence and a form of "common sense" about physical interactions. Researchers are currently exploring Joint Embedding Predictive Architectures (JEPA) to make these models more efficient: instead of generating every pixel, which is computationally expensive, a JEPA predicts high-level feature representations. As these technologies mature, we can expect deeper integration with the Ultralytics Platform, enabling developers to train agents that not only see the world but truly understand it.
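The efficiency argument for JEPA comes down to what the training loss compares. The sketch below contrasts a pixel-reconstruction loss with a loss computed between embeddings. It is only a conceptual illustration under loudly stated assumptions: the "encoder" is a fixed random projection and the "predictor" an identity matrix, whereas actual JEPA models learn both, typically use a separate target encoder, and add mechanisms to prevent representation collapse. All names here are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)
H, W, D = 64, 64, 32  # frame size and embedding size (illustrative values)

# Hypothetical stand-ins for learned networks: a fixed random projection and an identity map.
encoder = rng.normal(scale=1 / np.sqrt(H * W), size=(H * W, D))
predictor = np.eye(D)  # a trained predictor would be a neural network


def embed(frame):
    """Map an H x W frame to a D-dimensional feature vector."""
    return np.tanh(frame.reshape(-1) @ encoder)


def pixel_loss(pred_frame, next_frame):
    # Generative objective: all H * W pixel values must be reproduced.
    return np.mean((pred_frame - next_frame) ** 2)


def jepa_style_loss(context_frame, next_frame):
    # Joint-embedding objective: predict the embedding of the next frame
    # from the embedding of the context; no pixels are generated.
    predicted = embed(context_frame) @ predictor
    target = embed(next_frame)  # real JEPAs produce this with a separate target encoder
    return np.mean((predicted - target) ** 2)


frame_t = rng.random((H, W))
frame_t1 = np.roll(frame_t, shift=1, axis=1)  # the scene shifts one pixel to the right
# The pixel loss here treats "copy the current frame" as the prediction.
print(pixel_loss(frame_t, frame_t1), jepa_style_loss(frame_t, frame_t1))
print("values compared:", H * W, "pixels vs", D, "embedding dims")
```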