World Models

Explore how world models enable AI to predict future states using environmental dynamics. Learn how Ultralytics YOLO26 provides the perception for predictive AI.

A "world model" is an AI system's internal representation of how an environment functions, which allows it to predict future states or outcomes from current observations and potential actions. Unlike traditional models that map inputs directly to outputs (such as classifying an image), a world model learns the underlying dynamics, physics, and causal relationships of a system. This concept is widely regarded as important for progress toward Artificial General Intelligence (AGI) because it gives machines a form of "common sense" reasoning, enabling them to simulate scenarios internally before acting in the real world.

The Mechanism Behind World Models

At its core, a world model functions similarly to human intuition. When you throw a ball, you don't calculate wind resistance equations; your brain simulates the trajectory based on past experiences. Similarly, in machine learning (ML), these models compress high-dimensional sensory data (like video frames) into a compact latent state. This compressed state allows the agent to "dream" or hallucinate potential futures efficiently.
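The compress-then-predict loop described above can be sketched in a few lines. This is a toy illustration only: `encode`, `predict_next`, and `dream` are hypothetical names, the "encoder" is a hand-written summary rather than a learned network, and the transition rule is invented for the example.

```python
def encode(frame):
    """Compress a frame (flat list of pixel values) into a 2-number latent state.

    Hypothetical stand-in for a learned encoder: the latent holds the mean
    brightness and the right-minus-left brightness contrast.
    """
    half = len(frame) // 2
    mean = sum(frame) / len(frame)
    contrast = sum(frame[half:]) / (len(frame) - half) - sum(frame[:half]) / half
    return [mean, contrast]


def predict_next(latent, action):
    """Hand-written stand-in for a learned transition model z' = f(z, a)."""
    mean, contrast = latent
    return [0.9 * mean, contrast + action]


def dream(latent, actions):
    """Roll the transition model forward without touching the real environment."""
    trajectory = [latent]
    for action in actions:
        latent = predict_next(latent, action)
        trajectory.append(latent)
    return trajectory


# A 16-pixel "frame": dark on the left, bright on the right
frame = [0.0] * 8 + [1.0] * 8
z = encode(frame)
trajectory = dream(z, actions=[0.5, -0.5])
print(f"latent size: {len(z)} (from {len(frame)} pixels)")
print(f"imagined latents: {trajectory}")
```

The imagined rollout operates on two numbers per step instead of sixteen pixels, which is the efficiency argument in miniature. A real system would learn both functions from data.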

Leading research, such as the work on Recurrent World Models by Ha and Schmidhuber, demonstrates how agents can learn policies entirely inside a simulated dream environment. More recently, generative AI advancements like OpenAI's Sora have been described as a visual form of world modeling, in which the system appears to capture aspects of physics, lighting, and object permanence in order to generate coherent video continuity.

Applications in Robotics and Simulation

World models are particularly transformative in fields requiring complex decision-making.

- Autonomous Vehicles: Self-driving cars use world models to predict the behavior of other drivers and pedestrians. By simulating many candidate traffic scenarios before committing to a maneuver, the vehicle can choose the path it predicts to be safest. This ties closely to computer vision in automotive solutions, where accurate perception is the foundation for prediction.

- Robotics: In manufacturing robotics, a robot arm trained with a world model can adapt to novel objects or unexpected obstacles with little or no retraining, because it has learned a model of the physics of grasping and movement. This improves smart manufacturing solutions.
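The "simulate candidate scenarios, then pick the safest" idea can be illustrated with a deliberately small sketch. Everything here is an assumption made for the example: the pedestrian follows a constant-velocity forecast (a stand-in for a learned world model), the ego vehicle drives straight along the x-axis, and "safest" simply means the largest predicted minimum gap. The function names are hypothetical.

```python
def predict_pedestrian(position, velocity, steps, dt=0.1):
    """Constant-velocity forecast of (x, y) positions over the horizon."""
    x, y = position
    vx, vy = velocity
    return [(x + vx * dt * k, y + vy * dt * k) for k in range(1, steps + 1)]


def rollout_ego(speed, steps, dt=0.1):
    """Ego vehicle starts at the origin and drives along x at a constant speed."""
    return [(speed * dt * k, 0.0) for k in range(1, steps + 1)]


def min_gap(ego_path, ped_path):
    """Smallest distance between the two predicted paths at matching time steps."""
    return min(
        ((ex - px) ** 2 + (ey - py) ** 2) ** 0.5
        for (ex, ey), (px, py) in zip(ego_path, ped_path)
    )


def choose_speed(candidate_speeds, ped_position, ped_velocity, steps=30):
    """Simulate each candidate speed and keep the one with the largest minimum gap."""
    ped_path = predict_pedestrian(ped_position, ped_velocity, steps)
    scored = {s: min_gap(rollout_ego(s, steps), ped_path) for s in candidate_speeds}
    best = max(scored, key=scored.get)
    return best, scored


# Pedestrian 10 m ahead and 3 m to the side, walking toward the lane at 1 m/s
best, scored = choose_speed([0.0, 3.0, 6.0], ped_position=(10.0, 3.0), ped_velocity=(0.0, -1.0))
print(f"chosen speed: {best} m/s")
print(f"predicted minimum gaps: {scored}")
```

A production planner would weigh progress, comfort, and uncertainty rather than clearance alone; the sketch only shows how imagined rollouts turn a prediction into a decision.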

World Models vs. Standard Reinforcement Learning

It is helpful to distinguish world models from related concepts:

- World Models vs. Reinforcement Learning (RL): Traditional RL is often "model-free," meaning the agent learns purely through trial and error in the environment. A world model approach is "model-based": the agent builds an internal simulator to learn from, which can drastically reduce the amount of real-world interaction needed.

- World Models vs. Large Language Models (LLMs): While LLMs predict the next text token, world models often predict the next visual frame or state. However, the lines are blurring with the rise of multi-modal learning, where models integrate text, vision, and physics.

Practical Implementation Concepts

While building a full world model is complex, the foundational concept relies on predicting future states. For

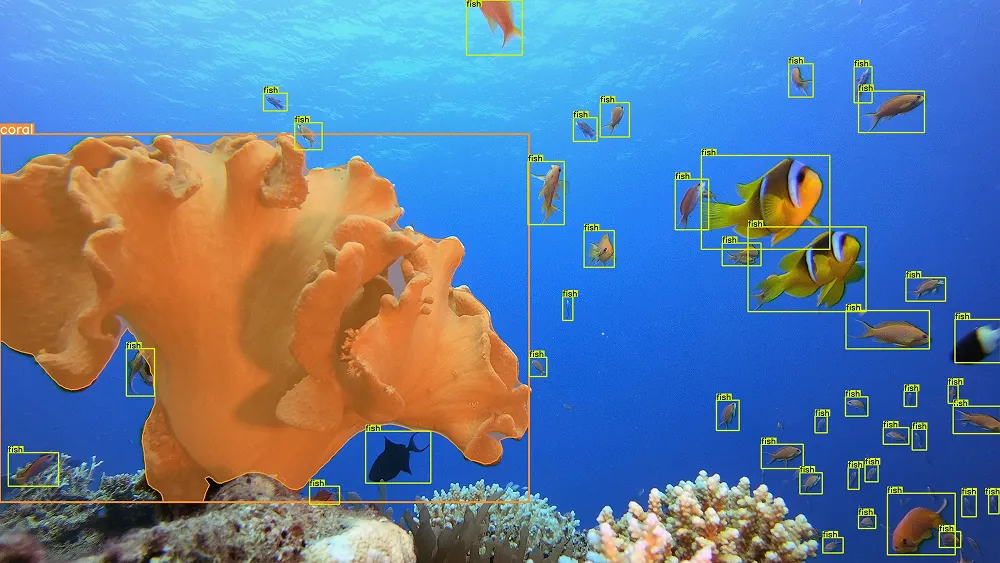

computer vision tasks, high-speed detection models like

Ultralytics YOLO26 act as the sensory "eyes" that

feed observations into the decision-making logic.

The following Python snippet demonstrates how you might use a YOLO model to extract the current state (object

positions) which would serve as the input for a world model's predictive step.

```python
from ultralytics import YOLO

# Load the Ultralytics YOLO26 model to act as the perception layer
model = YOLO("yolo26n.pt")

# Perform inference to get the current state of the environment
results = model("https://ultralytics.com/images/bus.jpg")

# Extract bounding boxes (xyxy) representing object states
for result in results:
    boxes = result.boxes.xyxy.cpu().numpy()
    print(f"Observed State (Object Positions): {boxes}")

# A World Model would take these 'boxes' to predict the NEXT frame's state
```
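As a rough illustration of what that predictive step could look like, the sketch below extrapolates each box one frame ahead with a constant-velocity rule. This is not a learned world model and not part of the Ultralytics API. The box values are made up, `predict_next_box` is a hypothetical helper, and the example assumes boxes from consecutive frames are already matched by index (for instance by a tracker).

```python
def predict_next_box(prev_box, curr_box):
    """Linearly extrapolate an xyxy box: next = current + (current - previous)."""
    return [c + (c - p) for p, c in zip(prev_box, curr_box)]


# Hand-written xyxy boxes for one object in two consecutive frames (illustrative values)
prev_boxes = [[100.0, 200.0, 150.0, 300.0]]
curr_boxes = [[110.0, 200.0, 160.0, 300.0]]

predicted = [predict_next_box(p, c) for p, c in zip(prev_boxes, curr_boxes)]
print(f"Predicted Next State: {predicted}")  # [[120.0, 200.0, 170.0, 300.0]]
```

A learned world model would replace the fixed extrapolation rule with dynamics fitted to data, so it could also account for acceleration, occlusion, and interactions between objects.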

The Future of Predictive AI

The evolution of world models is moving toward physical AI, where digital intelligence interacts seamlessly with the physical world. Innovations like Yann LeCun's JEPA (Joint Embedding Predictive Architecture) propose learning abstract representations rather than predicting every pixel, which is intended to make the models significantly more efficient.
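The difference between scoring a prediction in pixel space and in an abstract representation space can be shown with a toy comparison. This is only an illustration of that one idea, not the JEPA architecture: the `encode` function is a hypothetical fixed block-average rather than a learned encoder, and the frames are hand-written.

```python
def encode(frame, block=4):
    """Toy encoder: average each block of pixels (stand-in for a learned encoder)."""
    return [sum(frame[i:i + block]) / block for i in range(0, len(frame), block)]


def mse(a, b):
    """Mean squared error between two equal-length sequences."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


# The target frame has a fine texture; the guess has the same texture, shifted by one pixel
target = [0.0, 1.0, 0.0, 1.0, 1.0, 0.0, 1.0, 0.0]
guess = [1.0, 0.0, 1.0, 0.0, 0.0, 1.0, 0.0, 1.0]

pixel_loss = mse(guess, target)
latent_loss = mse(encode(guess), encode(target))
print(f"pixel-space loss:  {pixel_loss}")
print(f"latent-space loss: {latent_loss}")
```

The pixel-space loss penalizes the guess for every misplaced pixel, while the latent-space loss treats the two frames as equivalent because their block averages match. Predicting in a representation space lets a model ignore detail that is hard or pointless to forecast exactly.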

As these architectures mature, we expect to see them integrated into the Ultralytics Platform, enabling developers to not only detect objects but also forecast their trajectories and interactions within dynamic environments. This shift from static detection to dynamic prediction could mark the next major step in computer vision (CV).