Explore the best computer vision tools for environmental monitoring that support scalable analysis of satellite imagery and environmental visual data.

Small changes in satellite imagery, like a lake gradually shrinking or tree cover slowly thinning, might not seem important at first glance. However, over time, these subtle shifts can tell a much bigger story about how the environment is changing.

Tracking these changes is the goal of environmental monitoring, but doing so at scale isn’t easy. Monitoring large and remote areas can mean generating enormous amounts of visual data, and traditional analysis methods often struggle to keep up with the volume, frequency, and complexity of this information.

Recent advances in AI are helping to close this gap. In particular, computer vision, a branch of artificial intelligence that enables machines to interpret images and videos, is making a difference.

By analyzing visual data from satellites, drones, and camera systems, computer vision can enable a wide range of monitoring use cases, from tracking deforestation and water quality to observing wildlife activity. Detecting these changes early gives a clearer picture of environmental conditions and supports better-informed decisions about mitigation.

Vision AI models such as Ultralytics YOLO26 support core computer vision tasks like object detection and instance segmentation. These capabilities make it easier to identify environmental features, monitor changes over time, and scale analysis across large and diverse regions.

In this article, we’ll explore some of the leading computer vision tools used for real-world environmental monitoring. Let’s get started!

Environmental monitoring tracks how natural systems change over time and how human activity affects them. As pressure on ecosystems continues to increase, having a clear and current view of what is happening on the ground is key to taking action and supporting long-term sustainability.

Here are some examples of how environmental monitoring is used to extract valuable insights:

However, scaling environmental monitoring solutions isn’t easy. Traditional methods rely heavily on manual surveys with limited coverage, making it hard to capture changes quickly.

At the same time, modern monitoring methods produce massive amounts of visual data from satellites, drones, and cameras, far more than can be reviewed manually. To address these challenges, environmental scientists are turning to Vision AI to analyze visual data accurately and consistently at scale.

Computer vision systems play a key role in environmental monitoring by making it possible to analyze large amounts of visual data efficiently. They use Vision AI models that are trained to interpret images and videos by learning visual patterns, similar to how people recognize objects by sight.

Models like YOLO26 are trained on large sets of labeled images and learn to identify environmental features using computer vision tasks such as object detection. With object detection, a model can locate and label individual objects in an image, such as trees, water bodies, buildings, or animals.

For example, in forest monitoring systems, a model can detect individual trees across a satellite or drone image and count them automatically. When images of the same area are collected over time, these detections can be compared to measure change.
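As a rough sketch of that comparison step, assuming each detection is an axis-aligned bounding box in pixel coordinates and that the two images are already co-registered (aligned to the same pixel grid), boxes from two dates can be matched by intersection over union (IoU). Earlier boxes with no good match are candidate lost trees. The function names, the greedy matching strategy, and the 0.5 IoU threshold are illustrative choices, not part of any specific library.

```python
from typing import List, Tuple

# A box is (x_min, y_min, x_max, y_max) in pixel coordinates.
Box = Tuple[float, float, float, float]


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def missing_trees(before: List[Box], after: List[Box], threshold: float = 0.5) -> List[Box]:
    """Return earlier boxes that have no match (IoU >= threshold) in the later image.

    Greedy one-to-one matching: each later box can be claimed by at most one earlier box.
    """
    unmatched = list(after)
    missing = []
    for box in before:
        best_idx, best_iou = -1, 0.0
        for idx, candidate in enumerate(unmatched):
            score = iou(box, candidate)
            if score > best_iou:
                best_idx, best_iou = idx, score
        if best_idx >= 0 and best_iou >= threshold:
            unmatched.pop(best_idx)  # this later box is now taken
        else:
            missing.append(box)
    return missing


# Hypothetical detections of the same plot on two dates.
trees_2021 = [(0, 0, 10, 10), (20, 20, 30, 30), (40, 40, 50, 50)]
trees_2024 = [(1, 1, 11, 11), (41, 40, 51, 50)]
lost = missing_trees(trees_2021, trees_2024)
print(f"{len(lost)} of {len(trees_2021)} trees not found again: {lost}")
```

Greedy matching keeps the sketch short but is not optimal when many boxes overlap; an optimal assignment such as `scipy.optimize.linear_sum_assignment` on an IoU cost matrix is a common alternative.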

Comparisons like this make it possible to track changes such as deforestation, shrinking water surface area, or the spread of urban infrastructure. By applying the same detection logic every time, computer vision supports reliable monitoring of environmental change, even across large or remote regions.
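Changes in water surface area are typically measured from segmentation masks rather than object counts, since the quantity of interest is an area. A minimal sketch, assuming each image has already been converted into a binary water mask on the same co-registered pixel grid, and that each pixel covers a square of known side length (the ground sample distance; 10 m for several Sentinel-2 bands): count the water pixels, convert to square metres, and compare dates. The masks below are toy values, not real data.

```python
from typing import List

# Binary mask on a fixed pixel grid: 1 = water, 0 = not water.
Mask = List[List[int]]


def water_area_m2(mask: Mask, gsd_m: float) -> float:
    """Area covered by water pixels, where each pixel is gsd_m x gsd_m metres."""
    water_pixels = sum(sum(row) for row in mask)
    return water_pixels * gsd_m * gsd_m


def percent_change(old: float, new: float) -> float:
    """Relative change from old to new, in percent."""
    if old == 0:
        raise ValueError("baseline area is zero; percent change is undefined")
    return (new - old) / old * 100.0


# Toy 3x3 masks of the same lake on two dates, at 10 m ground sample distance.
mask_2020 = [[1, 1, 0], [1, 1, 0], [1, 1, 0]]
mask_2024 = [[1, 0, 0], [1, 0, 0], [1, 0, 0]]
area_2020 = water_area_m2(mask_2020, gsd_m=10.0)
area_2024 = water_area_m2(mask_2024, gsd_m=10.0)
print(area_2020, area_2024, percent_change(area_2020, area_2024))
```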

Here’s a glimpse of some other key computer vision tasks commonly used for environmental monitoring:

Today, a wide range of Vision AI tools support environmental monitoring. Some are designed to analyze large-scale satellite imagery, while others focus on real-time data from drones or ground-based cameras.

Next, we’ll explore some of the top computer vision tools and how they are used to analyze environmental data.

Ultralytics YOLO models are a family of real-time computer vision models used for tasks such as object detection, instance segmentation, image classification, and pose estimation. YOLO stands for “You Only Look Once,” meaning the model analyzes an entire image in a single pass, which allows it to run quickly.

The latest YOLO26 models include improvements that make them lighter, faster, and easier to deploy. They come in different sizes, so environmental teams can balance speed, accuracy, and available resources.

YOLO26 models are pretrained on large benchmark datasets like the COCO dataset, which helps them recognize general objects like cats and dogs out of the box. They can then be fine-tuned using domain-specific environmental datasets to improve accuracy for specific tasks, such as identifying vegetation, water bodies, or infrastructure.
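Fine-tuning starts with labels in the format the trainer expects. Ultralytics YOLO detection datasets use one text file per image, with one line per object: a class index followed by the box center, width, and height, all normalized to the image dimensions. The sketch below converts pixel-space boxes to that format; the class mapping and box values are hypothetical examples for environmental classes.

```python
from typing import Dict, List, Tuple

# Hypothetical class mapping; the same names would be listed in the dataset YAML.
CLASS_NAMES: Dict[int, str] = {0: "vegetation", 1: "water", 2: "infrastructure"}


def to_yolo_label(class_id: int, box: Tuple[float, float, float, float],
                  img_w: int, img_h: int) -> str:
    """Convert a pixel box (x_min, y_min, x_max, y_max) to one YOLO label line.

    Output: "class x_center y_center width height", all normalized to 0-1.
    """
    x_min, y_min, x_max, y_max = box
    x_c = (x_min + x_max) / 2 / img_w
    y_c = (y_min + y_max) / 2 / img_h
    w = (x_max - x_min) / img_w
    h = (y_max - y_min) / img_h
    return f"{class_id} {x_c:.6f} {y_c:.6f} {w:.6f} {h:.6f}"


def label_file_text(objects: List[Tuple[int, Tuple[float, float, float, float]]],
                    img_w: int, img_h: int) -> str:
    """Build the contents of one image's .txt label file."""
    return "\n".join(to_yolo_label(cid, box, img_w, img_h) for cid, box in objects) + "\n"


# One 1000x800 drone tile with a patch of vegetation and a pond (made-up boxes).
objects = [(0, (100, 200, 300, 400)), (1, (500, 100, 700, 300))]
print(label_file_text(objects, img_w=1000, img_h=800), end="")
```

With images and label files in place, the Ultralytics Python API trains from a dataset YAML file, along the lines of `YOLO("yolo26n.pt").train(data="data.yaml", epochs=100, imgsz=640)`; the weights name, file name, and settings there are placeholders to adapt.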

Once trained and validated, YOLO26 models can be exported to a range of deployment formats and run on different kinds of hardware. This makes them suitable for use in larger systems that process visual data from satellites, drones, or camera networks.

FlyPix AI is a geospatial analysis platform used to work with high-resolution aerial imagery from drones and satellites. The platform turns large volumes of imagery into usable information for ongoing environmental monitoring.

This tool uses AI-based analysis to automatically detect objects, track changes over time, and flag unusual patterns or anomalies in the data. These capabilities support the analysis of both gradual trends and sudden or unexpected changes visible in imagery.
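FlyPix AI's internal methods aren't described here, so the following is only a generic illustration of anomaly flagging. One simple approach for a monitored quantity (for example, forest cover measured from each new image of a region) is a rolling z-score: compare each new value with the mean and standard deviation of the preceding observations and flag large deviations. The window size, threshold, and data are assumptions.

```python
from statistics import mean, stdev
from typing import List


def flag_anomalies(values: List[float], window: int = 5, z_threshold: float = 3.0) -> List[int]:
    """Indices of values that deviate sharply from the preceding `window` observations.

    Uses a rolling z-score: (value - mean(history)) / stdev(history).
    """
    if window < 2:
        raise ValueError("window must be at least 2 to estimate a standard deviation")
    flagged = []
    for i in range(window, len(values)):
        history = values[i - window:i]
        sd = stdev(history)
        if sd == 0:
            # Perfectly flat history: any change at all counts as unusual.
            if values[i] != history[0]:
                flagged.append(i)
            continue
        z = (values[i] - mean(history)) / sd
        if abs(z) >= z_threshold:
            flagged.append(i)
    return flagged


# Hypothetical forest cover (km^2) measured from successive images of one region.
forest_cover = [50.1, 50.0, 50.2, 49.9, 50.1, 50.0, 50.1, 42.0, 50.0]
print(flag_anomalies(forest_cover))
```

A single outlier also inflates the standard deviation of the next few windows, which can mask later anomalies; robust statistics such as the median absolute deviation are one way to reduce that effect.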

As a result, users can monitor environmental conditions and identify issues such as waste accumulation, oil spills, deforestation, and changes in land or coastal areas. The results can be incorporated into standard Geographic Information Systems (GIS) workflows, supporting consistent monitoring and documentation across large geographic areas.
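The platform's own export formats aren't specified here, but the general step of moving image detections into a GIS can be sketched. Assuming a north-up raster described by a GDAL-style affine geotransform whose map coordinates are already longitude/latitude in degrees, a pixel-space bounding box can be converted into a GeoJSON polygon that GIS tools such as QGIS can load. The geotransform values, box, and label below are made up for illustration.

```python
import json
from typing import Dict, Tuple

# GDAL-style geotransform: (origin_x, pixel_width, row_rotation,
#                           origin_y, col_rotation, pixel_height)
GeoTransform = Tuple[float, float, float, float, float, float]


def pixel_to_geo(gt: GeoTransform, col: float, row: float) -> Tuple[float, float]:
    """Map a pixel position (col, row) to map coordinates with an affine transform."""
    x = gt[0] + col * gt[1] + row * gt[2]
    y = gt[3] + col * gt[4] + row * gt[5]
    return x, y


def box_to_feature(gt: GeoTransform, box: Tuple[float, float, float, float], label: str) -> Dict:
    """Turn a pixel box (x_min, y_min, x_max, y_max) into a GeoJSON Polygon Feature."""
    x_min, y_min, x_max, y_max = box
    # Corner order (top-left, bottom-left, bottom-right, top-right, closing point)
    # gives a counterclockwise ring for north-up rasters (negative pixel_height).
    corners = [(x_min, y_min), (x_min, y_max), (x_max, y_max), (x_max, y_min), (x_min, y_min)]
    ring = [list(pixel_to_geo(gt, c, r)) for c, r in corners]
    return {
        "type": "Feature",
        "geometry": {"type": "Polygon", "coordinates": [ring]},
        "properties": {"label": label},
    }


# Made-up north-up raster: top-left at (10.0 E, 50.0 N), 0.0001 degree pixels.
example_gt: GeoTransform = (10.0, 0.0001, 0.0, 50.0, 0.0, -0.0001)
feature = box_to_feature(example_gt, (100, 200, 150, 260), "oil_spill")
collection = {"type": "FeatureCollection", "features": [feature]}
print(json.dumps(collection))
```

If the raster uses a projected coordinate system such as UTM, the coordinates would need reprojecting to WGS84 longitude/latitude to follow the GeoJSON specification (RFC 7946).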

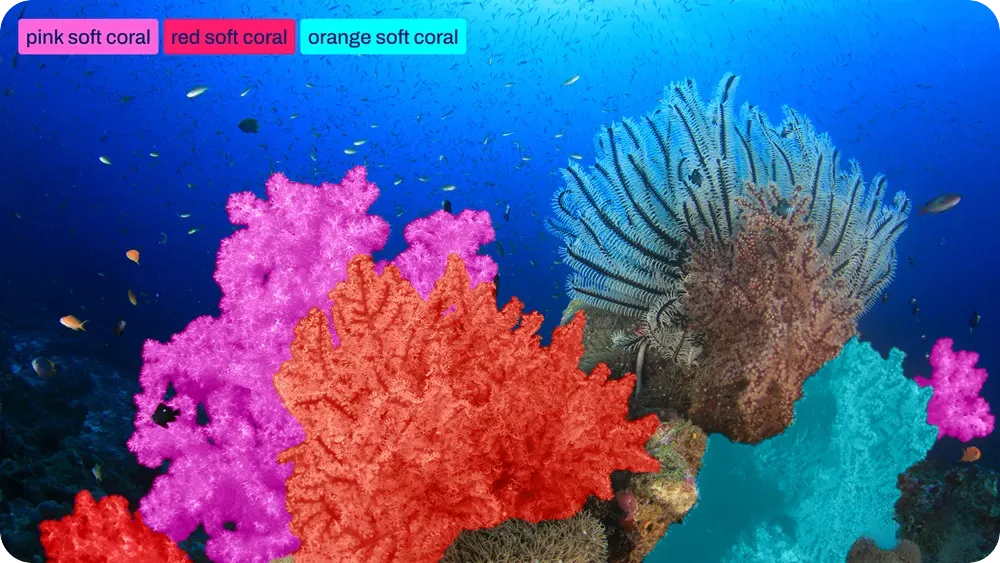

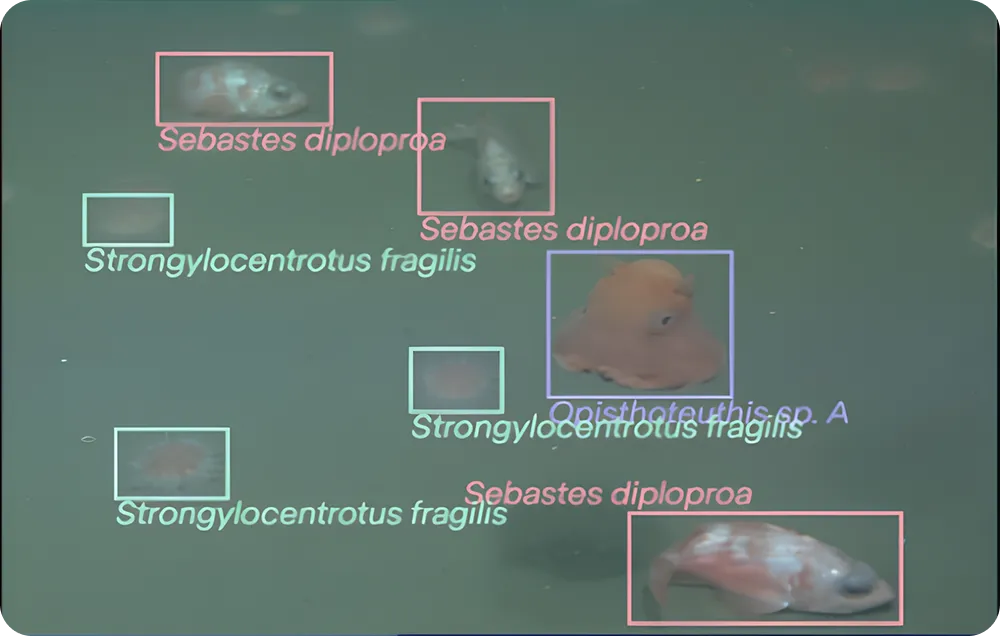

Ocean Vision AI is a computer vision and machine-learning platform that integrates tools, services, and community participation to support large-scale analysis of underwater imagery. In other words, it brings together visual data from different ocean sources and uses AI to handle the heavy lifting of sorting and data analysis.

The platform is designed for researchers who collect and analyze large volumes of underwater imagery. It supports data organization, the creation of high-quality annotations, and the development of models that can be reused and shared within the research community.

Ocean Vision AI also incorporates public participation initiatives through a game-based annotation system that allows non-experts to help label underwater images. These contributions are used to expand annotated datasets and improve model performance over time.
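How Ocean Vision AI combines player labels isn't described here, so this is only an illustration of a common crowdsourcing pattern: collect several independent labels per image and keep a consensus label only when enough contributors agree. Images without consensus could then be routed to an expert for review. The thresholds, image IDs, and category names are hypothetical.

```python
from collections import Counter
from typing import Dict, List, Optional


def aggregate_labels(votes: Dict[str, List[str]], min_votes: int = 3,
                     min_agreement: float = 0.6) -> Dict[str, Optional[str]]:
    """Majority-vote consensus per image; None when votes are too few or too split."""
    consensus: Dict[str, Optional[str]] = {}
    for image_id, labels in votes.items():
        if len(labels) < min_votes:
            consensus[image_id] = None  # not enough independent labels yet
            continue
        label, count = Counter(labels).most_common(1)[0]
        consensus[image_id] = label if count / len(labels) >= min_agreement else None
    return consensus


# Hypothetical labels submitted by game players for three underwater frames.
votes = {
    "img_001": ["jellyfish", "jellyfish", "jellyfish", "fish"],
    "img_002": ["fish", "coral", "jellyfish"],
    "img_003": ["coral", "coral"],
}
print(aggregate_labels(votes))
```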

Raster Vision is an open-source Python library for working with satellite and aerial imagery. It combines GIS-aware geospatial data handling with deep learning–based computer vision workflows to support large-scale analysis of geographic imagery.

Raster Vision includes a flexible vision pipeline that supports tasks such as image chip classification, semantic segmentation, and object detection. Because satellite and aerial images are typically very large, the library is designed to scale to large datasets and is commonly applied to problems such as land cover mapping, deforestation detection, and urban growth analysis.

To support efficient processing, Raster Vision divides large images into smaller units known as chips, which are used for model training and inference. The library also supports the full computer vision workflow, including data preparation, model training, evaluation, and batch deployment for recurring or large-scale image analysis.
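Raster Vision configures chipping through its own API, which isn't reproduced here. As a generic sketch of the underlying idea, the function below computes chip windows for a large raster. A stride smaller than the chip size produces overlapping chips, so objects cut by one chip's edge appear whole in a neighbor, and the last chip on each axis is shifted inward so the whole image is covered. The default chip size and stride are assumptions.

```python
from typing import List, Tuple

# (col_off, row_off, width, height) in pixels
Window = Tuple[int, int, int, int]


def _offsets(length: int, chip: int, stride: int) -> List[int]:
    """Start positions along one axis; the last chip is shifted inward to reach the edge."""
    last = length - chip
    offs = list(range(0, last + 1, stride))
    if offs[-1] != last:
        offs.append(last)
    return offs


def chip_windows(img_w: int, img_h: int, chip: int = 256, stride: int = 256) -> List[Window]:
    """Row-major list of full-size chip windows covering an img_w x img_h raster."""
    if img_w < chip or img_h < chip:
        raise ValueError("image must be at least one chip wide and tall")
    return [
        (col, row, chip, chip)
        for row in _offsets(img_h, chip, stride)
        for col in _offsets(img_w, chip, stride)
    ]


# A 600x512 raster cut into 256x256 chips without overlap.
windows = chip_windows(600, 512)
print(len(windows), windows[0], windows[-1])
```

Each tuple follows the `(col_off, row_off, width, height)` order used by rasterio's `Window`, so the windows could drive windowed reads from a GeoTIFF; that pairing is a suggestion, not part of Raster Vision.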

Detectron2 is an open-source computer vision library developed by Facebook AI Research. It provides implementations of state-of-the-art algorithms for tasks such as object detection, instance segmentation, and panoptic segmentation, including models like Mask R-CNN. Detectron2 is widely used in research and applied computer vision projects due to its modular design and strong benchmark performance.

For environmental monitoring, Detectron2 can be used to analyze satellite and drone imagery, with models trained to detect forest fires, deforestation, wildlife, and land-cover changes. Its flexibility and strong performance make it a good option for building practical monitoring solutions across different ecosystems.

While exploring various computer vision tools for environmental monitoring, you may find yourself wondering how to choose the right one for your project or AI system.

Here are the key factors to consider when selecting a computer vision tool for environmental monitoring:

Environmental monitoring often involves tracking changes across large areas and over long periods of time. Computer vision enables consistent, scalable analysis of this visual data. When paired with the right data and workflows, these approaches support timely monitoring of land, marine, and atmospheric environments and help turn large volumes of imagery into useful insights.

To learn more, explore our GitHub repository. Join our community and check out our solutions pages to read about applications like AI in healthcare and computer vision in the automotive industry. Discover our licensing options to get started with Vision AI today.