Synthetic Data Generation


Synthetic Data Generation is the process of creating artificial datasets that mimic the statistical properties and patterns of real-world data without containing any actual real-world individuals or events. In artificial intelligence (AI) and machine learning (ML), this technique has become a cornerstone for overcoming data scarcity, privacy concerns, and bias. Unlike traditional data collection, which relies on recording events as they happen, synthetic generation uses algorithms, simulations, and generative models to manufacture high-fidelity data on demand. This approach is particularly vital for training robust computer vision (CV) models, as it allows developers to create vast amounts of perfectly labeled training data for scenarios that are rare, dangerous, or expensive to capture in reality.

The Mechanism Behind Synthetic Generation

The core technology driving synthetic data generation often involves advanced generative AI architectures. These systems analyze a smaller sample of real data to understand its underlying structure and correlations. Once the model learns these distributions, it can sample from them to produce new, unique instances.
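The fit-then-sample idea can be illustrated with the simplest possible generative model. This is a minimal sketch, not a GAN or diffusion model: it assumes the data is well described by a multivariate Gaussian, estimates its mean and covariance from a small hypothetical "real" sample (made-up height/weight values), and then draws new rows that preserve the learned correlation.

```python
import numpy as np


def fit_gaussian(real: np.ndarray) -> tuple[np.ndarray, np.ndarray]:
    """Learn the distribution: estimate the mean vector and covariance matrix."""
    mean = real.mean(axis=0)
    cov = np.cov(real, rowvar=False)  # rows are samples, columns are features
    return mean, cov


def sample_synthetic(mean: np.ndarray, cov: np.ndarray, n: int, seed: int = 0) -> np.ndarray:
    """Sample from the learned distribution to create n new, unique rows."""
    rng = np.random.default_rng(seed)
    return rng.multivariate_normal(mean, cov, size=n)


# Hypothetical "real" data: 200 rows of two correlated features
rng = np.random.default_rng(42)
height = rng.normal(170.0, 10.0, size=200)
weight = 0.9 * height - 85.0 + rng.normal(0.0, 5.0, size=200)
real = np.column_stack([height, weight])

mean, cov = fit_gaussian(real)
synthetic = sample_synthetic(mean, cov, n=1000)

# The synthetic rows should show roughly the same correlation as the real ones
print("real corr:     ", np.corrcoef(real, rowvar=False)[0, 1].round(2))
print("synthetic corr:", np.corrcoef(synthetic, rowvar=False)[0, 1].round(2))
```

Real generative models replace the Gaussian with a learned neural network, but the workflow is the same: estimate the distribution from real samples, then draw new samples from it.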

Two primary methods dominate the landscape:

- Computer Simulations: For vision tasks, developers use 3D graphics engines, similar to those used in video games, to render photorealistic scenes. This allows for precise control over lighting, weather, and object placement. Because the computer generates the scene, it also automatically generates perfect annotations (like bounding boxes for object detection), bypassing the need for manual data annotation.
- Deep Generative Models: Architectures such as Generative Adversarial Networks (GANs) and diffusion models can synthesize highly realistic images or tabular data. For example, NVIDIA researchers use these models to create diverse training environments for autonomous machines.
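Why simulated scenes come with free labels can be shown with a toy "renderer". This is a minimal sketch, assuming flat 2D rectangles as a stand-in for a 3D engine: because the code decides where each object goes, it can write the bounding box at the same moment it draws the object. The labels use the YOLO text format (class, x_center, y_center, width, height, all normalized to 0 to 1); the function names are hypothetical.

```python
import numpy as np


def render_scene(rng: np.random.Generator, img_size: int = 320, max_objects: int = 4):
    """Draw random rectangles on a random background and return (image, labels)."""
    image = np.empty((img_size, img_size, 3), dtype=np.uint8)
    image[:] = rng.integers(0, 256, size=3)  # random background color
    labels = []
    for _ in range(rng.integers(1, max_objects + 1)):
        w, h = rng.integers(20, img_size // 3, size=2)
        x0 = rng.integers(0, img_size - w)
        y0 = rng.integers(0, img_size - h)
        image[y0 : y0 + h, x0 : x0 + w] = rng.integers(0, 256, size=3)
        # The generator knows exactly where it drew the object, so the box is
        # exact. (Occlusion by later rectangles is not handled in this toy.)
        labels.append(
            (0, (x0 + w / 2) / img_size, (y0 + h / 2) / img_size, w / img_size, h / img_size)
        )
    return image, labels


def to_yolo_lines(labels) -> list[str]:
    """Format labels as YOLO-style text lines: class x_center y_center width height."""
    return [f"{c} {x:.6f} {y:.6f} {w:.6f} {h:.6f}" for c, x, y, w, h in labels]


rng = np.random.default_rng(0)
image, labels = render_scene(rng)
print(image.shape)
print("\n".join(to_yolo_lines(labels)))
```

A real simulator does the same bookkeeping in 3D, projecting each object's known geometry into the camera to produce its box.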

Real-World Applications in AI

Synthetic data generation is transforming industries where data is a bottleneck.

- Autonomous Driving: Training self-driving cars requires billions of miles of driving data, and collecting all of it physically is impractical. Instead, companies use synthetic environments to simulate dangerous edge cases, such as a child chasing a ball into the street or blinding glare from the sun. This helps ensure that autonomous vehicle perception systems are trained on critical scenarios they might rarely encounter on actual roads.
- Healthcare and Medical Imaging: Patient privacy laws such as HIPAA strictly limit the sharing of medical records. Synthetic generation allows researchers to create datasets of X-rays or MRI scans that retain the biological markers of diseases like tumors but are not records of any real patient. This enables the development of medical image analysis tools without compromising patient confidentiality.

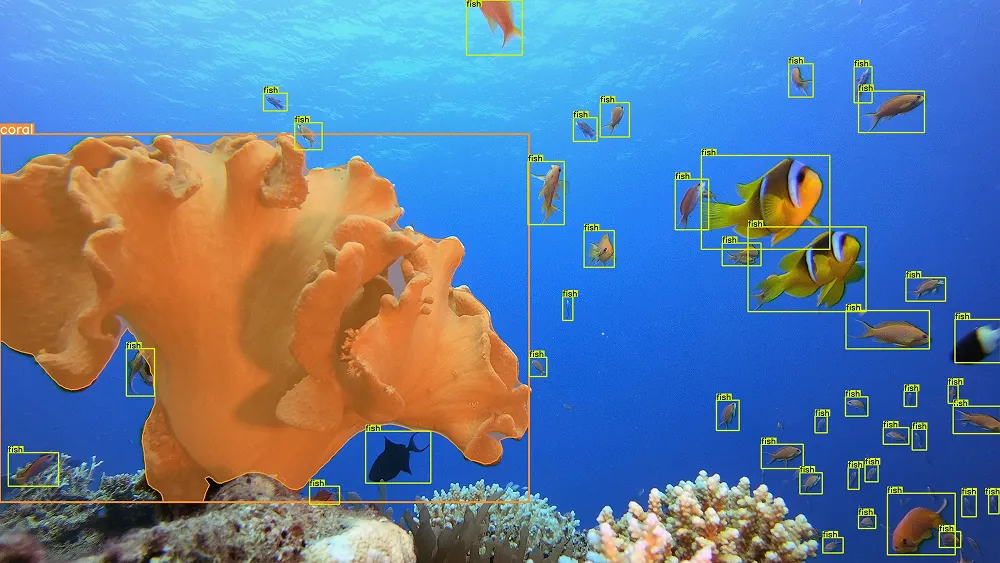

Synergy with Ultralytics YOLO26

Integrating synthetic data into your workflow can improve the performance of state-of-the-art models like Ultralytics YOLO26. By supplementing real-world datasets with synthetic examples, you can improve the model's ability to generalize to new environments.

Below is a Python example showing how to load a YOLO26 model and run inference. A model trained on a mix of real and synthetic data is loaded and used in exactly the same way.

```python
from ultralytics import YOLO

# Load a pretrained YOLO26 nano model
# (weights fine-tuned on mixed real and synthetic data load the same way)
model = YOLO("yolo26n.pt")

# Run inference on an image to verify detection capabilities
# Training on varied synthetic scenes can help the model handle different lighting and angles
results = model("https://ultralytics.com/images/bus.jpg")

# Display the resulting bounding boxes and confidence scores
results[0].show()
```
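Supplementing a real dataset with synthetic images is mostly a matter of pointing training at both sources. The sketch below assumes an Ultralytics-style dataset YAML, whose `train` key accepts a list of image directories; the directory names and the `parts` dataset are hypothetical. Validation deliberately uses real images only, so the reported metrics reflect real-world performance rather than performance on synthetic data.

```python
from pathlib import Path


def write_mixed_data_yaml(yaml_path, root, real_train, synthetic_train, real_val, names):
    """Write a dataset YAML that trains on real + synthetic images
    but validates on real images only."""
    lines = [
        f"path: {root}",
        f"train: [{real_train}, {synthetic_train}]",  # both sources are used for training
        f"val: {real_val}",  # real data only, to measure sim-to-real transfer
        "names:",
    ]
    lines += [f"  {i}: {name}" for i, name in enumerate(names)]
    path = Path(yaml_path)
    path.write_text("\n".join(lines) + "\n")
    return path


def train_on_mixed_data(yaml_path, epochs=50):
    """Fine-tune a pretrained YOLO26 model on the mixed dataset."""
    from ultralytics import YOLO  # imported here so the YAML helper works without it

    model = YOLO("yolo26n.pt")
    return model.train(data=str(yaml_path), epochs=epochs, imgsz=640)


cfg = write_mixed_data_yaml(
    "mixed.yaml",
    root="datasets/parts",  # hypothetical dataset root
    real_train="images/real_train",
    synthetic_train="images/synthetic_train",
    real_val="images/real_val",
    names=["part"],
)
print(cfg.read_text())
```

Calling `train_on_mixed_data(cfg)` would then start training; the epoch count here is an arbitrary placeholder.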

Differentiating Synthetic Data from Data Augmentation

While both techniques aim to expand datasets, it is important to distinguish Synthetic Data Generation from data augmentation.

- Data Augmentation takes existing real-world images and modifies them (flipping, rotating, or changing color balance) to create variations. It is strictly derivative of the original capture.
- Synthetic Data Generation creates entirely new data points from scratch. It does not require a one-to-one correspondence with a real source image during generation, allowing for the creation of scenes that have never physically existed.
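The difference shows up directly in the function signatures. In this minimal sketch (hypothetical function names, NumPy arrays standing in for images), `augment` cannot run without a source image, while `synthesize` builds an image from randomly sampled scene parameters alone.

```python
import numpy as np


def augment(image: np.ndarray, rng: np.random.Generator) -> np.ndarray:
    """Data augmentation: a transformed copy of an existing image."""
    out = image[:, ::-1]  # horizontal flip
    gain = rng.uniform(0.8, 1.2)  # brightness jitter
    return np.clip(out.astype(np.float32) * gain, 0, 255).astype(np.uint8)


def synthesize(rng: np.random.Generator, size: int = 64) -> np.ndarray:
    """Synthetic generation: a new image built only from sampled parameters."""
    image = np.full((size, size, 3), rng.integers(0, 256, size=3), dtype=np.uint8)
    cx, cy = rng.integers(size // 4, 3 * size // 4, size=2)  # random disc center
    r = rng.integers(4, size // 4)  # random disc radius
    yy, xx = np.mgrid[:size, :size]
    image[(xx - cx) ** 2 + (yy - cy) ** 2 <= r**2] = rng.integers(0, 256, size=3)
    return image


rng = np.random.default_rng(7)
# Stand-in for a captured photo (random pixels, for illustration only)
real = rng.integers(0, 256, size=(64, 64, 3), dtype=np.uint8)
augmented = augment(real, rng)  # requires a source image
synthetic = synthesize(rng)  # requires only a random generator
print(augmented.shape, synthetic.shape)
```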

Best Practices and Challenges

To use synthetic data effectively, it is crucial to ensure "sim-to-real" transferability: how well a model trained on synthetic data performs on real-world inputs. If the synthetic data lacks the texture or noise of real images, the model may fail in deployment. To mitigate this, developers use techniques like domain randomization, which varies the textures and lighting in simulations so that the model learns shape-based features rather than relying on simulation-specific artifacts.
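Domain randomization can be sketched without a full simulator. This minimal example assumes a fixed binary mask as the "object" and uses NumPy noise and brightness gradients as stand-ins for a renderer's texture and lighting controls; the function name is hypothetical. The object's shape never changes while colors, textures, and lighting change on every sample, so shape is the only consistent cue a model could learn from.

```python
import numpy as np


def randomized_render(shape_mask: np.ndarray, rng: np.random.Generator) -> np.ndarray:
    """Render one fixed shape with randomized texture and lighting."""
    h, w = shape_mask.shape
    # Random procedural textures: per-pixel noise around random base colors
    background = rng.integers(0, 256, size=3) + rng.normal(0, 25, size=(h, w, 3))
    foreground = rng.integers(0, 256, size=3) + rng.normal(0, 25, size=(h, w, 3))
    image = np.where(shape_mask[..., None], foreground, background)
    # Random lighting: global brightness gain plus a left-to-right gradient
    gain = rng.uniform(0.5, 1.5)
    gradient = np.linspace(rng.uniform(0.7, 1.0), rng.uniform(1.0, 1.3), w)
    image = image * gain * gradient[None, :, None]
    return np.clip(image, 0, 255).astype(np.uint8)


# A fixed square "object"; only its appearance is randomized
mask = np.zeros((96, 96), dtype=bool)
mask[30:70, 25:65] = True

rng = np.random.default_rng(3)
batch = [randomized_render(mask, rng) for _ in range(8)]
print([int(img.mean()) for img in batch])  # pixel statistics vary from sample to sample
```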

Using the Ultralytics Platform, teams can manage these hybrid datasets, monitor model performance, and verify that including synthetic data genuinely improves accuracy metrics like mean Average Precision (mAP). As noted by Gartner, synthetic data is rapidly becoming a standard requirement for building capable AI systems, offering a path to training models that are fairer, more robust, and less biased.
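One simple way to check that synthetic data is helping is an ablation: train one model on real data only and one on real plus synthetic data, then evaluate both on the same real-only validation set. The sketch below assumes the Ultralytics `val()` API, whose detection metrics expose mAP50-95 as `metrics.box.map`; the minimum-gain threshold is an arbitrary assumption meant to avoid reading run-to-run noise as an improvement.

```python
def evaluate_map(weights: str, data_yaml: str) -> float:
    """Return mAP50-95 for a trained model on the dataset's validation split."""
    from ultralytics import YOLO  # lazy import: only needed when actually evaluating

    metrics = YOLO(weights).val(data=data_yaml)
    return float(metrics.box.map)


def synthetic_data_helped(baseline_map: float, mixed_map: float, min_gain: float = 0.005) -> bool:
    """True only if the mixed-data model beats the real-only model by at least min_gain."""
    return mixed_map - baseline_map >= min_gain
```

For example, call `evaluate_map` once with the real-only weights and once with the mixed-data weights (both paths would be your own training outputs), passing the same real-only validation YAML each time, and feed the two scores to `synthetic_data_helped`. Repeating the comparison over several training seeds gives a more trustworthy answer than a single pair of runs.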